How to Solve Statistical Problems Efficiently [Master Your Data Analysis Skills]

- November 17, 2023

Are you tired of feeling overwhelmed by statistical problems? You’ve come to the right place.

We understand the frustration that comes with trying to make sense of complex data sets.

Let’s work hand-in-hand to uncover those statistical secrets and find clarity in the numbers.

Do you find yourself stuck, unable to move forward because of statistical roadblocks? We’ve been there too. Our expertise in solving statistical problems will help you navigate even the toughest challenges with confidence. Let’s tackle these problems together and pave the way to success.

As experts in the field, we know what it takes to conquer statistical problems effectively. This article is tailored to your needs and provides the solutions you’ve been searching for. Join us on this journey towards mastering statistics and unlock a world of possibilities.

Key Takeaways

- Data collection is the foundation of statistical analysis and must be accurate.

- Understanding descriptive and inferential statistics is critical for looking at and interpreting data effectively.

- Probability quantifies uncertainty and helps in making informed decisions during statistical analysis.

- Identifying common statistical roadblocks like misinterpreting data or selecting inappropriate tests is important for effective problem-solving.

- Strategies like understanding the problem, choosing the right tools, and practicing regularly are key to tackling statistical challenges.

- Using tools such as statistical software, graphing calculators, and online resources can aid in solving statistical problems efficiently.

Understanding Statistical Problems

When exploring the world of statistics, it’s critical to understand the nature of statistical problems. These problems often involve interpreting data, looking for patterns, and drawing meaningful conclusions. Here are some key points to consider:

- Data Collection: The foundation of statistical analysis lies in accurate data collection. Whether it’s surveys, experiments, or observational studies, gathering relevant data is important.

- Descriptive Statistics: Understanding descriptive statistics helps in summarizing and interpreting data effectively. Measures such as the mean, median, and standard deviation provide useful insights.

- Inferential Statistics: This branch of statistics involves making predictions or inferences about a population based on sample data. It helps us understand patterns and trends beyond the observed data.

- Probability: Probability plays a central role in statistical analysis by quantifying uncertainty. It helps us assess the likelihood of events and make informed decisions.
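To make the descriptive measures above concrete, here is a minimal Python sketch using the standard-library `statistics` module. The sample values are hypothetical, chosen only to show how the median resists an outlier (13.5) that pulls the mean upward.

```python
import statistics

# Hypothetical sample of measurements, used only for illustration.
data = [12.1, 11.8, 12.4, 12.0, 13.5, 11.9, 12.2]

mean = statistics.mean(data)      # arithmetic average, pulled up by 13.5
median = statistics.median(data)  # middle value of the sorted data, robust to the outlier
stdev = statistics.stdev(data)    # sample standard deviation (n - 1 denominator)

print(f"mean={mean:.3f} median={median:.3f} stdev={stdev:.3f}")
```

Comparing the mean and median side by side is a quick way to spot skew or outliers before choosing further analyses.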

To solve statistical problems proficiently, one must have a solid grasp of these key concepts.

By honing our statistical literacy and analytical skills, we can navigate complex data sets with confidence.

Let’s delve deeper into the field of statistics and uncover its secrets.

Identifying Common Statistical Roadblocks

When tackling statistical problems, identifying common roadblocks is important for navigating the problem-solving process effectively.

Let’s investigate some key problems individuals often encounter:

- Misinterpretation of Data: One of the primary challenges is misinterpreting the data, which leads to erroneous conclusions and flawed analysis.

- Selection of Appropriate Statistical Tests: Choosing the right statistical test can be perplexing, impacting the accuracy of results. It’s critical to have a solid understanding of when to apply each test.

- Assumptions Violation: Many statistical methods are based on certain assumptions. Violating these assumptions can skew results and mislead interpretations.

To overcome these roadblocks, it’s necessary to acquire a solid foundation in statistical principles and methodologies.

By honing our analytical skills and continuously improving our statistical literacy, we can adeptly address these challenges and excel in statistical problem-solving.

For more insights on tackling statistical problems, refer to this comprehensive guide on Common Statistical Errors.

Strategies for Tackling Statistical Challenges

When facing statistical challenges, it’s critical to employ effective strategies to navigate complex data analysis.

Here are some key approaches to tackle statistical problems:

- Understand the Problem: Before diving into the analysis, make sure you clearly understand the statistical problem at hand.

- Choose the Right Tools: Selecting appropriate statistical tests is important for accurate results.

- Check Assumptions: Verify that the data meets the assumptions of the chosen statistical method to avoid skewed outcomes.

- Consult Resources: Refer to reputable sources like textbooks or online statistical guides for assistance.

- Practice Regularly: Improve statistical skills through consistent practice and application in various scenarios.

- Seek Guidance: When in doubt, seek advice from experienced statisticians or mentors.

By adopting these strategies, individuals can improve their problem-solving abilities and overcome statistical problems with confidence.

For further insights on statistical problem-solving, refer to a comprehensive guide on Common Statistical Errors.

Tools for Solving Statistical Problems

When it comes to tackling statistical challenges effectively, having the right tools at our disposal is important.

Here are some key tools that can aid us in solving statistical problems:

- Statistical Software: Using software like R or Python can simplify complex calculations and streamline data analysis processes.

- Graphing Calculators: These tools are handy for visualizing data and identifying trends or patterns.

- Online Resources: Websites like Kaggle or Stack Overflow offer useful insights, tutorials, and communities for statistical problem-solving.

- Textbooks and Guides: Referencing textbooks such as “An Introduction to Statistical Learning” or online guides can provide in-depth explanations and step-by-step solutions.

By using these tools effectively, we can improve our problem-solving capabilities and approach statistical challenges with confidence.

For further insights on common statistical errors to avoid, we recommend the comprehensive guide on Common Statistical Errors, which offers useful tips and strategies.

Implementing Effective Solutions

When approaching statistical problems, it’s critical to have a strategic plan in place.

Here are some key steps to consider for implementing effective solutions:

- Define the Problem: Clearly outline the statistical problem at hand to understand its scope and requirements fully.

- Collect Data: Gather relevant data sets from credible sources or conduct surveys to acquire the necessary information for analysis.

- Choose the Right Model: Select the appropriate statistical model based on the nature of the data and the specific question being addressed.

- Use Advanced Tools: Use statistical software such as R or Python to perform complex analyses and generate accurate results.

- Validate Results: Verify the accuracy of the findings through rigorous testing and validation procedures to ensure the reliability of the conclusions.

By following these steps, we can streamline the statistical problem-solving process and arrive at well-informed and data-driven decisions.

For further insights and strategies on tackling statistical challenges, we recommend exploring resources such as DataCamp, which offers interactive learning experiences and tutorials on statistical analysis.


Statistical Problem Solving (SPS)


Problem solving in any organization is itself a problem. Nobody wants to own responsibility for a problem, which is why, when a problem shows up, people tend to point fingers at others rather than at themselves.

This is a natural, instinctive human defense mechanism, and it cannot be held against anyone. However, it must be recognized that problems in industry are real and cannot be wished away; solutions must be sought, either by hunch or by scientific methods. Only a systematic, disciplined approach to defining and solving problems consistently and effectively reveals the real nature of a problem and the best possible solutions.

A Chinese proverb says, “It is cheap to guess at a solution, but a wrong guess can be very expensive.” This emphasizes that, although occasional success is possible through hunches gained over long years of doing the same job, a lasting solution is possible only through scientific methods.

One of the major scientific methods for problem solving is Statistical Problem Solving (SPS). This method is aimed not only at solving problems but can also be used to improve an existing situation. It involves a team that has process and product knowledge and is willing to work together, to select suitable statistical methods, to adhere to principles of economy, and to learn along the way.

Statistical Problem Solving (SPS) can be used for process control or product control. In many situations the product is dictated by the customer and has been tried, tested and standardized in the facility. Changing it may involve testing both inside and outside the facility, may be complex, and may require customer approval, which can be time-consuming. But if the problem warrants it, product control should be taken up.

Process control is a lot simpler than product control. Here SPS can be used effectively to improve the profitability of the business by reducing costs and possibly eliminating all 7 types of waste through Kaizen and lean management techniques.

The following 7 steps can be used for Statistical Problem Solving (SPS):

- Defining the problem

- Listing variables

- Prioritizing variables

- Evaluating top few variables

- Optimizing variable settings

- Monitor and Measure results

- Reward/Recognize Team members

Defining the problem: Sources for defining the problem include customer complaints, in-house rejections, observations by the team lead, supervisor or QC personnel, levels of waste generated, and similar factors.

Listing and prioritizing variables: This involves all features associated with the processes, for example temperature, machine feed and speed, environmental factors, and operator skills. It may be difficult to find a solution for all variables at once, so the most probable variables are selected based on the collective wisdom and experience of the team attempting to solve the problem.

Collection of data: The most common method for collecting and charting process data is the X-bar and R chart. In most cases, time is plotted on the X axis, and the measured variable, such as a dimension, is plotted against it.
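As a rough illustration of how X-bar and R chart limits are computed, the sketch below uses the standard Shewhart constants (A2, D3, D4) for subgroup sizes 2 to 5. The function name `xbar_r_limits` and the measurements are hypothetical; in practice, many more subgroups are usually collected before the limits are relied on.

```python
from statistics import mean

# Standard Shewhart constants for X-bar and R charts, indexed by subgroup size n.
A2 = {2: 1.880, 3: 1.023, 4: 0.729, 5: 0.577}
D3 = {2: 0.0, 3: 0.0, 4: 0.0, 5: 0.0}
D4 = {2: 3.267, 3: 2.574, 4: 2.282, 5: 2.114}


def xbar_r_limits(subgroups):
    """Return ((center, LCL, UCL) for the X-bar chart, (center, LCL, UCL) for the R chart)."""
    n = len(subgroups[0])
    if n not in A2 or any(len(g) != n for g in subgroups):
        raise ValueError("subgroups must all have the same size, between 2 and 5")
    xbars = [mean(g) for g in subgroups]           # one point per subgroup on the X-bar chart
    ranges = [max(g) - min(g) for g in subgroups]  # one point per subgroup on the R chart
    grand_mean = mean(xbars)  # center line of the X-bar chart
    r_bar = mean(ranges)      # center line of the R chart
    xbar_chart = (grand_mean, grand_mean - A2[n] * r_bar, grand_mean + A2[n] * r_bar)
    r_chart = (r_bar, D3[n] * r_bar, D4[n] * r_bar)
    return xbar_chart, r_chart


# Hypothetical subgroups of five shaft diameters (mm), taken at successive times.
subgroups = [
    [10.1, 10.0, 9.9, 10.2, 10.0],
    [10.0, 10.1, 10.1, 9.8, 10.0],
    [9.9, 10.0, 10.2, 10.1, 10.0],
]
(x_center, x_lcl, x_ucl), (r_center, r_lcl, r_ucl) = xbar_r_limits(subgroups)
print(f"X-bar chart: CL={x_center:.3f} LCL={x_lcl:.3f} UCL={x_ucl:.3f}")
print(f"R chart:     CL={r_center:.3f} LCL={r_lcl:.3f} UCL={r_ucl:.3f}")
```

Points falling outside these limits, or non-random patterns within them, are the usual signals that a variable deserves closer investigation by the team.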

Once data has been collected for the list of probable variables, it is brought to the team for brainstorming on which variables should be controlled and how a solution could be obtained; in other words, on optimizing variable settings. Based on the brainstorming session, process control variables are evaluated using popular techniques such as the “5 Whys”, “8D”, Pareto analysis, the Ishikawa diagram and histograms. These techniques are used to limit the variables, design experiments and collect data again. The variable values that show improvement are identified from the data, which narrows down the variables further and guides modification of the processes to achieve continual improvement.

The suggested solutions are then implemented and the results recorded. This data should be measured at intervals to check the status of implementation, and the progress of improvement should be monitored until the suggested improvements become normal routine. When the results indicate that the problem has been resolved and remain consistent, team members should be rewarded and recognized to keep up their morale for future projects.

Who Should Pursue SPS

- Statistical Problem Solving can be pursued by a senior leadership group, for example a group of quality executives meeting weekly to review quality issues, identify opportunities for cost savings, and generate ideas for working smarter across the divisions.

- Statistical Problem Solving can equally be pursued by a staff work group within an institution that has a diversity of experience and can gather data on various product features and tabulate them statistically to draw conclusions.

- The staff work group proposes methods for rethinking and reworking models of collaboration and consultation at the facility.

- The senior leadership group and staff work group work in partnership with university faculty and staff to identify research communications and solve problems across the organization.

Benefits of Statistical Problem Solving

- A long-term commitment by organizations and companies to working smarter.

- Reduces costs, enhances services and increases revenues.

- Mitigates the impact of budget reductions while also reducing operational costs.

- Improving operations and processes, resulting in a more efficient, less redundant organization.

- Promotes entrepreneurial thinking, risk taking, and engagement with business and community partners.

- A culture change in a way a business or organization collaborates both internally and externally.

- Identification and solving of problems.

- Helps prevent repetition of problems

- Meets the mandatory requirement for using scientific methods for problem solving

- Savings in revenue by reducing quality costs

- Ultimate improvement in the bottom line

- Improvement in teamwork and morale at work

- Improvement in overall problem solving instead of harping on accountability

Business Impact

- Problem-solving techniques backed by scientific data raise the business’s standing in the eyes of the customer.

- Eliminates excessive consulting within businesses and organizations, which can become a pitfall, especially where it slows the flow of information.

- Elimination of the blame game

QSE’s Approach to Statistical Problem Solving

Drawing on its extensive experience, QSE organizes the entire implementation process for Statistical Problem Solving into seven simple steps:

- Define the Problem

- List Suspect Variables

- Prioritize Selected Variables

- Evaluate Critical Variables

- Optimize Critical Variables

- Monitor and Measure Results

- Reward/Recognize Team Members

- Define the Problem (Vital Few vs. Trivial Many):

List all the problems that may be hindering operational excellence. Place them in a histogram under as many categories as required.

Select problems based on the simple principle of the vital few: select the few problems that contribute to most of the deficiencies within the facility.
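The “vital few” selection can be sketched as a simple Pareto calculation: sort the problem categories by frequency and keep adding them until they account for a chosen share of all occurrences. The 80% cut-off, the function name `vital_few`, and the defect counts below are assumptions for illustration, not values prescribed by QSE.

```python
def vital_few(counts, threshold=0.8):
    """Return the most frequent categories that together reach `threshold` of all occurrences."""
    total = sum(counts.values())
    if total == 0:
        return []
    selected, cumulative = [], 0
    # Walk the categories from most to least frequent, as on a Pareto chart.
    for category, count in sorted(counts.items(), key=lambda item: item[1], reverse=True):
        if cumulative / total >= threshold:
            break  # the categories already selected cover the target share
        selected.append(category)
        cumulative += count
    return selected


# Hypothetical defect tallies from a month of in-house rejections.
defects = {
    "scratches": 45,
    "dimension out of tolerance": 30,
    "burrs": 10,
    "discoloration": 8,
    "packaging damage": 5,
    "other": 2,
}
print(vital_few(defects))
# ['scratches', 'dimension out of tolerance', 'burrs']
```

Here three of six categories cover 85% of the rejections, so the team would focus its first projects on them.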

QSE advises on how to use X-bar and R charts to gather process data.

- List Suspect Variables:

QSE advises on how to gather data for the suspect variables, involving cross-functional teams and available past data.

- Prioritize Selected Variables Using Cause and Effect Analysis:

QSE helps organizations prioritize the selected variables that are creating the problem and the effects they cause. The details of this exercise are represented in a fishbone diagram, also called an Ishikawa diagram.

- Evaluate Critical Variables:

Use brainstorming to select critical variables for collecting process data and to plan incremental improvements for each selected critical variable.

Drawing on its extensive experience, QSE guides and conducts brainstorming sessions in the facility to identify kaizen projects (small incremental improvement projects) that bring in improvements, and creates a benchmark to be achieved through the suggested improvement projects.

- Optimize Critical Variables by Implementing the Incremental Improvements:

QSE helps facilities to implement incremental improvements and gather data to see the results of the efforts in improvements

- Monitor and Measure to Collect Data on Consolidated Incremental Achievements:

Consolidate and make the major change incorporating all incremental improvements and then gather data again to see if the benchmarks have been reached

QSE educates and assists the teams on how these can be done in a scientific manner using lean and six sigma techniques

QSE organizes verification of the data to compare the new results with the original results at the start of the project, and verifies that the incorporated suggestions are repeatable, giving the same or better results as planned.

Validate the improvement project by multiple repetitions

- Reward and Recognize Team Members:

QSE will provide all kinds of support in identifying the major contributors to the success of the project and will recommend that management recognize their efforts in a manner befitting the organization, to keep up the morale of the contributors.


Statistics Problems

One of the best ways to learn statistics is to solve practice problems. These problems test your understanding of statistics terminology and your ability to solve common statistics problems. Each problem includes a step-by-step explanation of the solution.


In one state, 52% of the voters are Republicans, and 48% are Democrats. In a second state, 47% of the voters are Republicans, and 53% are Democrats. Suppose a simple random sample of 100 voters is surveyed from each state.

What is the probability that the survey will show a greater percentage of Republican voters in the second state than in the first state?

Solution: For this analysis, let $P_1$ = the proportion of Republican voters in the first state, $P_2$ = the proportion of Republican voters in the second state, $p_1$ = the proportion of Republican voters in the sample from the first state, and $p_2$ = the proportion of Republican voters in the sample from the second state. The number of voters sampled from the first state is $n_1 = 100$, and the number sampled from the second state is $n_2 = 100$.

The solution involves four steps.

- Make sure the sample size is big enough to model differences with a normal distribution. Because $n_1 P_1 = 100 \times 0.52 = 52$, $n_1(1 - P_1) = 100 \times 0.48 = 48$, $n_2 P_2 = 100 \times 0.47 = 47$, and $n_2(1 - P_2) = 100 \times 0.53 = 53$ are each greater than 10, the sample size is large enough.

- Find the mean of the difference in sample proportions: $E(p_1 - p_2) = P_1 - P_2 = 0.52 - 0.47 = 0.05$.

- Find the standard deviation of the difference:

$$\sigma_d = \sqrt{\frac{P_1(1 - P_1)}{n_1} + \frac{P_2(1 - P_2)}{n_2}} = \sqrt{\frac{(0.52)(0.48)}{100} + \frac{(0.47)(0.53)}{100}} = \sqrt{0.002496 + 0.002491} = \sqrt{0.004987} = 0.0706$$

- Find the probability. The survey shows a greater percentage of Republicans in the second state exactly when $p_1 - p_2 < 0$, so convert $x = 0$ to a z-score:

$$z_{p_1 - p_2} = \frac{x - \mu_{p_1 - p_2}}{\sigma_d} = \frac{0 - 0.05}{0.0706} = -0.7082$$

Using Stat Trek's Normal Distribution Calculator, we find that the probability of a z-score being -0.7082 or less is 0.24.

Therefore, the probability that the survey will show a greater percentage of Republican voters in the second state than in the first state is 0.24.
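The four steps can be checked with a short Python sketch that uses `statistics.NormalDist` from the standard library in place of an online calculator. The function name is hypothetical; it simply reproduces the normal-approximation calculation above.

```python
from math import sqrt
from statistics import NormalDist


def prob_second_sample_higher(P1, P2, n1, n2):
    """P(p1 - p2 < 0) using the normal approximation for a difference of sample proportions."""
    # Step 1: each expected count must exceed 10 for the normal approximation.
    for n, P in ((n1, P1), (n2, P2)):
        if n * P <= 10 or n * (1 - P) <= 10:
            raise ValueError("sample too small for the normal approximation")
    mean_diff = P1 - P2                                      # Step 2: E(p1 - p2)
    sigma_d = sqrt(P1 * (1 - P1) / n1 + P2 * (1 - P2) / n2)  # Step 3: sigma_d
    z = (0 - mean_diff) / sigma_d                            # Step 4: z-score of 0
    return NormalDist().cdf(z)


prob = prob_second_sample_higher(0.52, 0.47, 100, 100)
print(round(prob, 2))  # 0.24
```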

See also: Difference Between Proportions

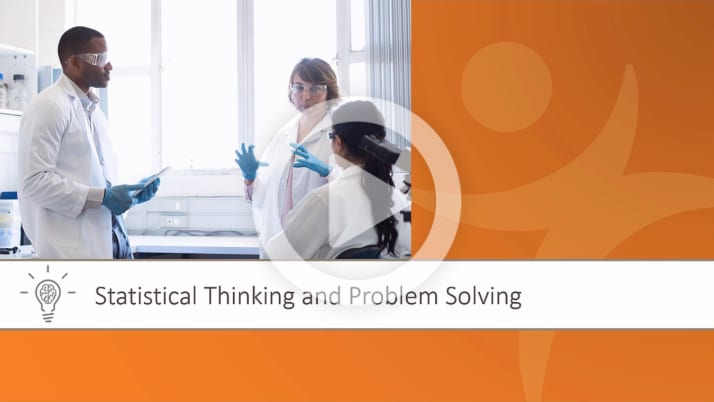

Statistical Thinking for Industrial Problem Solving

A free online statistics course.


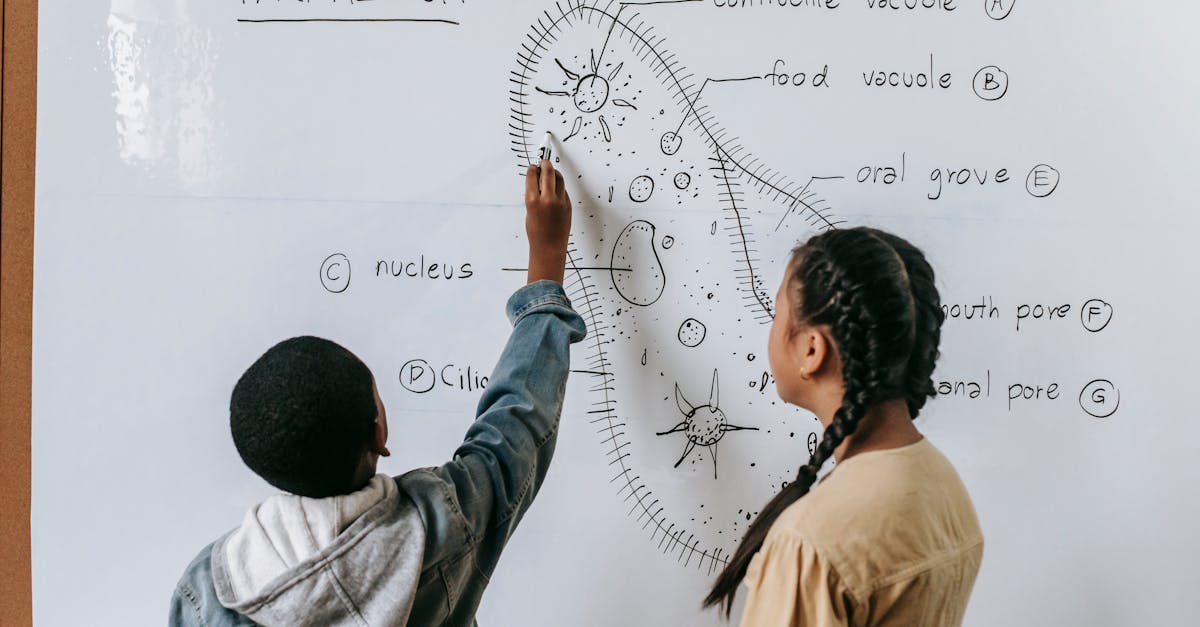

Statistical Thinking and Problem Solving

Statistical thinking is vital for solving real-world problems. At the heart of statistical thinking is making decisions based on data. This requires disciplined approaches to identifying problems and the ability to quantify and interpret the variation that you observe in your data.

In this module, you will learn how to clearly define your problem and gain an understanding of the underlying processes that you will improve. You will learn techniques for identifying potential root causes of the problem. Finally, you will learn about different types of data and different approaches to data collection.

Estimated time to complete this module: 2 to 3 hours

Statistical Thinking and Problem Solving Overview (0:36)

Specific topics covered in this module include:

Statistical Thinking

- What is Statistical Thinking

Problem Solving

- Overview of Problem Solving

- Statistical Problem Solving

- Types of Problems

- Defining the Problem

- Goals and Key Performance Indicators

- The White Polymer Case Study

Defining the Process

- What is a Process?

- Developing a SIPOC Map

- Developing an Input/Output Process Map

- Top-Down and Deployment Flowcharts

Identifying Potential Root Causes

- Tools for Identifying Potential Causes

- Brainstorming

- Multi-voting

- Using Affinity Diagrams

- Cause-and-Effect Diagrams

- The Five Whys

- Cause-and-Effect Matrices

Compiling and Collecting Data

- Data Collection for Problem Solving

- Types of Data

- Operational Definitions

- Data Collection Strategies

- Importing Data for Analysis


The Beginner's Guide to Statistical Analysis | 5 Steps & Examples

Statistical analysis means investigating trends, patterns, and relationships using quantitative data. It is an important research tool used by scientists, governments, businesses, and other organizations.

To draw valid conclusions, statistical analysis requires careful planning from the very start of the research process. You need to specify your hypotheses and make decisions about your research design, sample size, and sampling procedure.

After collecting data from your sample, you can organize and summarize the data using descriptive statistics. Then, you can use inferential statistics to formally test hypotheses and make estimates about the population. Finally, you can interpret and generalize your findings.

This article is a practical introduction to statistical analysis for students and researchers. We’ll walk you through the steps using two research examples. The first investigates a potential cause-and-effect relationship, while the second investigates a potential correlation between variables.

Table of contents

- Step 1: Write your hypotheses and plan your research design

- Step 2: Collect data from a sample

- Step 3: Summarize your data with descriptive statistics

- Step 4: Test hypotheses or make estimates with inferential statistics

- Step 5: Interpret your results

- Other interesting articles

Step 1: Write your hypotheses and plan your research design

To collect valid data for statistical analysis, you first need to specify your hypotheses and plan out your research design.

Writing statistical hypotheses

The goal of research is often to investigate a relationship between variables within a population. You start with a prediction, and use statistical analysis to test that prediction.

A statistical hypothesis is a formal way of writing a prediction about a population. Every research prediction is rephrased into null and alternative hypotheses that can be tested using sample data.

While the null hypothesis always predicts no effect or no relationship between variables, the alternative hypothesis states your research prediction of an effect or relationship.

- Null hypothesis: A 5-minute meditation exercise will have no effect on math test scores in teenagers.

- Alternative hypothesis: A 5-minute meditation exercise will improve math test scores in teenagers.

- Null hypothesis: Parental income and GPA have no relationship with each other in college students.

- Alternative hypothesis: Parental income and GPA are positively correlated in college students.
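As a sketch of how the meditation hypotheses might eventually be tested in a within-subjects (before/after) design, the function below computes a paired-samples t statistic from baseline and final scores. The function name and scores are hypothetical; turning t into a p-value still requires the t distribution (for example, SciPy's `scipy.stats.ttest_rel` computes both).

```python
from math import sqrt
from statistics import mean, stdev


def paired_t(before, after):
    """Return (t, degrees of freedom) for the mean within-subject change, after - before."""
    if len(before) != len(after) or len(before) < 2:
        raise ValueError("need two equal-length lists with at least two participants")
    diffs = [post - pre for pre, post in zip(before, after)]
    n = len(diffs)
    standard_error = stdev(diffs) / sqrt(n)  # sample SD of the differences / sqrt(n)
    return mean(diffs) / standard_error, n - 1


# Hypothetical baseline and final math scores for five teenagers.
baseline = [70, 65, 80, 75, 60]
final = [74, 66, 83, 79, 62]
t, df = paired_t(baseline, final)
print(f"t = {t:.2f} with {df} degrees of freedom")
```

Under the null hypothesis the mean change is zero, so a t value far from zero is evidence for the alternative hypothesis.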

Planning your research design

A research design is your overall strategy for data collection and analysis. It determines the statistical tests you can use to test your hypothesis later on.

First, decide whether your research will use a descriptive, correlational, or experimental design. Experiments directly influence variables, whereas descriptive and correlational studies only measure variables.

- In an experimental design, you can assess a cause-and-effect relationship (e.g., the effect of meditation on test scores) using statistical tests of comparison or regression.

- In a correlational design, you can explore relationships between variables (e.g., parental income and GPA) without any assumption of causality using correlation coefficients and significance tests.

- In a descriptive design, you can study the characteristics of a population or phenomenon (e.g., the prevalence of anxiety in U.S. college students) using statistical tests to draw inferences from sample data.
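For the correlational design, a minimal sketch of the Pearson correlation coefficient is shown below, written out from its definition. The income and GPA values are hypothetical; on Python 3.10 or later, `statistics.correlation` returns the same coefficient.

```python
from math import sqrt
from statistics import mean


def pearson_r(xs, ys):
    """Pearson correlation coefficient computed from its definition."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two equal-length lists with at least two values")
    mx, my = mean(xs), mean(ys)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))  # co-variation of x and y
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / sqrt(sxx * syy)


# Hypothetical survey responses: parental income (thousands of USD) and GPA.
income = [40, 55, 60, 80, 120]
gpa = [2.8, 3.0, 3.1, 3.4, 3.6]
print(f"r = {pearson_r(income, gpa):.2f}")
```

The coefficient only describes the strength and direction of a linear relationship; a significance test is still needed to generalize it to the population.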

Your research design also concerns whether you’ll compare participants at the group level or individual level, or both.

- In a between-subjects design, you compare the group-level outcomes of participants who have been exposed to different treatments (e.g., those who performed a meditation exercise vs those who didn’t).

- In a within-subjects design, you compare repeated measures from participants who have participated in all treatments of a study (e.g., scores from before and after performing a meditation exercise).

- In a mixed (factorial) design, one variable is altered between subjects and another is altered within subjects (e.g., pretest and posttest scores from participants who either did or didn’t do a meditation exercise).

Example: Experimental research design

First, you’ll take baseline test scores from participants. Then, your participants will undergo a 5-minute meditation exercise. Finally, you’ll record participants’ scores from a second math test.

In this experiment, the independent variable is the 5-minute meditation exercise, and the dependent variable is the math test score from before and after the intervention.

Example: Correlational research design

In a correlational study, you test whether there is a relationship between parental income and GPA in graduating college students. To collect your data, you will ask participants to fill in a survey and self-report their parents’ incomes and their own GPA.

Measuring variables

When planning a research design, you should operationalize your variables and decide exactly how you will measure them.

For statistical analysis, it’s important to consider the level of measurement of your variables, which tells you what kind of data they contain:

- Categorical data represents groupings. These may be nominal (e.g., gender) or ordinal (e.g. level of language ability).

- Quantitative data represents amounts. These may be on an interval scale (e.g. test score) or a ratio scale (e.g. age).

Many variables can be measured at different levels of precision. For example, age data can be quantitative (8 years old) or categorical (young). If a variable is coded numerically (e.g., level of agreement from 1–5), it doesn’t automatically mean that it’s quantitative instead of categorical.

Identifying the measurement level is important for choosing appropriate statistics and hypothesis tests. For example, you can calculate a mean score with quantitative data, but not with categorical data.
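The link between measurement level and summary statistic can be sketched as a small lookup: the mode for nominal data, a median for ordinal data, and the mean for interval or ratio data. This is a common rule of thumb rather than a fixed law, and the `summarize` function is hypothetical. `median_low` is used for ordinal data so that the result is always an observed category rather than a value between two categories.

```python
from statistics import mean, median_low, mode


def summarize(values, level):
    """Return (name, value) of a central-tendency measure suited to the level of measurement."""
    if level == "nominal":
        return "mode", mode(values)          # most frequent category
    if level == "ordinal":
        return "median", median_low(values)  # always an observed category
    if level in ("interval", "ratio"):
        return "mean", mean(values)          # arithmetic mean needs equal intervals
    raise ValueError(f"unknown level of measurement: {level!r}")


print(summarize(["female", "male", "female"], "nominal"))  # ('mode', 'female')
print(summarize([1, 2, 2, 3, 5, 5], "ordinal"))            # ('median', 2)
print(summarize([70, 80, 90], "interval"))                 # ('mean', 80)
```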

In a research study, along with measures of your variables of interest, you’ll often collect data on relevant participant characteristics.

| Variable | Type of data |
|---|---|
| Age | Quantitative (ratio) |
| Gender | Categorical (nominal) |
| Race or ethnicity | Categorical (nominal) |
| Baseline test scores | Quantitative (interval) |
| Final test scores | Quantitative (interval) |
| Parental income | Quantitative (ratio) |
| GPA | Quantitative (interval) |


Step 2: Collect data from a sample

In most cases, it’s too difficult or expensive to collect data from every member of the population you’re interested in studying. Instead, you’ll collect data from a sample.

Statistical analysis allows you to apply your findings beyond your own sample as long as you use appropriate sampling procedures. You should aim for a sample that is representative of the population.

Sampling for statistical analysis

There are two main approaches to selecting a sample.

- Probability sampling: every member of the population has a chance of being selected for the study through random selection.

- Non-probability sampling: some members of the population are more likely than others to be selected for the study because of criteria such as convenience or voluntary self-selection.
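Probability sampling in its simplest form, a simple random sample, can be drawn with Python’s `random` module. The sampling frame of 500 student IDs and the function name are hypothetical; seeding the generator simply makes the draw reproducible.

```python
import random


def simple_random_sample(population, k, seed=None):
    """Draw k members without replacement; every member has the same chance of selection."""
    rng = random.Random(seed)  # a seeded generator makes the draw reproducible
    return rng.sample(population, k)


# Hypothetical sampling frame of 500 student IDs.
students = [f"student_{i:03d}" for i in range(1, 501)]
sample = simple_random_sample(students, 50, seed=42)
print(sample[:5])
```

The hard part in practice is obtaining a complete sampling frame; the random draw itself is the easy step.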

In theory, for highly generalizable findings, you should use a probability sampling method. Random selection reduces several types of research bias , like sampling bias , and ensures that data from your sample is actually typical of the population. Parametric tests can be used to make strong statistical inferences when data are collected using probability sampling.

But in practice, it’s rarely possible to gather the ideal sample. While non-probability samples are more at risk for biases like self-selection bias, they are much easier to recruit and collect data from. Non-parametric tests are more appropriate for non-probability samples, but they result in weaker inferences about the population.

If you want to use parametric tests for non-probability samples, you have to make the case that:

- your sample is representative of the population you’re generalizing your findings to.

- your sample lacks systematic bias.

Keep in mind that, for external validity, you can only generalize your conclusions to others who share the characteristics of your sample. For instance, results from Western, Educated, Industrialized, Rich and Democratic (WEIRD) samples (e.g., college students in the US) aren’t automatically applicable to non-WEIRD populations.

If you apply parametric tests to data from non-probability samples, be sure to elaborate on the limitations of how far your results can be generalized in your discussion section.

Create an appropriate sampling procedure

Based on the resources available for your research, decide how you’ll recruit participants.

- Will you have resources to advertise your study widely, including outside of your university setting?

- Will you have the means to recruit a diverse sample that represents a broad population?

- Do you have time to contact and follow up with members of hard-to-reach groups?

Your participants are self-selected by their schools. Although you’re using a non-probability sample, you aim for a diverse and representative sample.

Example: Sampling (correlational study)

Your main population of interest is male college students in the US. Using social media advertising, you recruit senior-year male college students from a smaller subpopulation: seven universities in the Boston area.

Calculate sufficient sample size

Before recruiting participants, decide on your sample size either by looking at other studies in your field or using statistics. A sample that’s too small may be unrepresentative of the population, while a sample that’s too large will be more costly than necessary.

There are many sample size calculators online. Different formulas are used depending on whether you have subgroups or how rigorous your study should be (e.g., in clinical research). As a rule of thumb, at least 30 units per subgroup are often recommended.

To use these calculators, you have to understand and input these key components:

- Significance level (alpha): the risk of rejecting a true null hypothesis that you are willing to take, usually set at 5%.

- Statistical power: the probability of your study detecting an effect of a certain size if there is one, usually 80% or higher.

- Expected effect size: a standardized indication of how large the expected result of your study will be, usually based on other similar studies.

- Population standard deviation: an estimate of the population parameter based on a previous study or a pilot study of your own.
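As a rough illustration of how these four inputs combine, the sketch below uses the normal-approximation formula for comparing the means of two independent groups with a two-sided test. That design is an assumption made for the example; online calculators typically add t-distribution corrections or handle other designs, so their answers can be slightly larger. The function names and the input values are hypothetical.

```python
import math
from statistics import NormalDist


def standardized_effect(expected_difference: float, population_sd: float) -> float:
    """Convert an expected raw difference into Cohen's d using the estimated population SD."""
    return expected_difference / population_sd


def sample_size_per_group(effect_size: float, alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate n per group for a two-sided, two-sample comparison of means.

    Normal approximation: n = 2 * ((z_{1 - alpha/2} + z_{power}) / d) ** 2
    """
    z = NormalDist()                     # standard normal distribution
    z_alpha = z.inv_cdf(1 - alpha / 2)   # critical value for the significance level
    z_power = z.inv_cdf(power)           # quantile matching the desired power
    n = 2 * ((z_alpha + z_power) / effect_size) ** 2
    return math.ceil(n)                  # round up: you cannot recruit part of a participant


# Hypothetical inputs: expected difference of 5 points, population SD estimated at 10.
d = standardized_effect(expected_difference=5.0, population_sd=10.0)
print(d, sample_size_per_group(d))
```

Raising the desired power or lowering alpha increases the required sample size, which is why those two inputs are usually fixed by convention before the effect size estimate is plugged in.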

Once you’ve collected all of your data, you can inspect them and calculate descriptive statistics that summarize them.

Inspect your data

There are various ways to inspect your data, including the following:

- Organizing data from each variable in frequency distribution tables.

- Displaying data from a key variable in a bar chart to view the distribution of responses.

- Visualizing the relationship between two variables using a scatter plot.
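A frequency distribution table, the first option above, takes only a few lines of Python. This is a minimal sketch with made-up survey responses; the helper name `frequency_table` is hypothetical.

```python
from collections import Counter


def frequency_table(values):
    """Return (value, count, percent) rows, most frequent value first."""
    counts = Counter(values)
    total = sum(counts.values())
    return [(value, count, 100 * count / total) for value, count in counts.most_common()]


# Hypothetical responses for one categorical variable.
responses = ["agree", "neutral", "agree", "disagree", "agree", "neutral"]
for value, count, percent in frequency_table(responses):
    print(f"{value:<10}{count:>4}{percent:>8.1f}%")
```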

By visualizing your data in tables and graphs, you can assess whether your data follow a skewed or normal distribution and whether there are any outliers or missing data.

A normal distribution means that your data are symmetrically distributed around a center where most values lie, with the values tapering off at the tail ends.

In contrast, a skewed distribution is asymmetric and has more values on one end than the other. The shape of the distribution is important to keep in mind because only some descriptive statistics should be used with skewed distributions.

Extreme outliers can also produce misleading statistics, so you may need a systematic approach to dealing with these values.
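One widely used systematic approach, assumed here because the text does not name a specific rule, is Tukey’s fences: values more than 1.5 × IQR below the first quartile or above the third quartile are flagged for a closer look. The sketch below (hypothetical helper `iqr_outliers`, made-up scores) only flags values; deciding whether to remove, correct, or keep them is still a judgment call.

```python
from statistics import quantiles


def iqr_outliers(data, k=1.5):
    """Flag values outside Tukey's fences [Q1 - k*IQR, Q3 + k*IQR]."""
    q1, _, q3 = quantiles(data, n=4)  # quartiles (default 'exclusive' method)
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [x for x in data if x < low or x > high]


scores = [62, 65, 67, 68, 70, 71, 73, 75, 78, 140]  # hypothetical; 140 looks suspicious
print(iqr_outliers(scores))
```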

Calculate measures of central tendency

Measures of central tendency describe where most of the values in a data set lie. Three main measures of central tendency are often reported:

- Mode: the most frequent response or value in the data set.

- Median: the value in the exact middle of the data set when ordered from low to high (the average of the two middle values when there is an even number of values).

- Mean: the sum of all values divided by the number of values.

However, depending on the shape of the distribution and level of measurement, only one or two of these measures may be appropriate. For example, many demographic characteristics can only be described using the mode or proportions, while a variable like reaction time may not have a mode at all.
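To see why the choice matters, the sketch below applies Python’s standard `statistics` module to made-up reaction times that include one very slow response. The mean is pulled toward the extreme value while the median is not, and because every time is unique, `multimode` simply returns all values, so there is no informative mode. For the nominal variable, only the mode is meaningful. All data are hypothetical.

```python
from statistics import mean, median, multimode

reaction_times_ms = [412, 389, 455, 401, 398, 1020, 420]  # hypothetical, right-skewed
print("mean:", round(mean(reaction_times_ms), 1))
print("median:", median(reaction_times_ms))
print("modes:", multimode(reaction_times_ms))  # every value appears once here

gender = ["female", "male", "female", "nonbinary", "female"]  # hypothetical nominal data
print("mode of a nominal variable:", multimode(gender))
```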

Calculate measures of variability

Measures of variability tell you how spread out the values in a data set are. Four main measures of variability are often reported:

- Range: the highest value minus the lowest value of the data set.

- Interquartile range: the range of the middle half of the data set.

- Standard deviation: the typical distance between each value in your data set and the mean, calculated as the square root of the variance.

- Variance: the average of the squared distances from the mean (equivalently, the square of the standard deviation).

Once again, the shape of the distribution and level of measurement should guide your choice of variability statistics. The interquartile range is the best measure for skewed distributions, while standard deviation and variance provide the best information for normal distributions.
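All four measures can be computed with the standard library. This is a minimal sketch on hypothetical scores; note that `stdev` and `variance` are the sample versions (dividing by n − 1), and that software packages use several quartile conventions, so an IQR may differ slightly from one tool to another.

```python
from statistics import quantiles, stdev, variance

scores = [55, 61, 64, 66, 68, 70, 72, 75, 79, 90]  # hypothetical test scores

data_range = max(scores) - min(scores)
q1, _, q3 = quantiles(scores, n=4)  # quartiles; other quartile conventions exist
iqr = q3 - q1
sd = stdev(scores)        # sample standard deviation (n - 1 denominator)
var = variance(scores)    # sample variance, equal to sd ** 2

print(f"range={data_range}, IQR={iqr:.2f}, SD={sd:.2f}, variance={var:.2f}")
```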

Using your table, you should check whether the units of the descriptive statistics are comparable for pretest and posttest scores. For example, are the variance levels similar across the groups? Are there any extreme values? If there are, you may need to identify and remove extreme outliers in your data set or transform your data before performing a statistical test.

| | Pretest scores | Posttest scores |
|---|---|---|
| Mean | 68.44 | 75.25 |
| Standard deviation | 9.43 | 9.88 |
| Variance | 88.96 | 97.96 |
| Range | 36.25 | 45.12 |
| N | 30 | 30 |

From this table, we can see that the mean score increased after the meditation exercise, and the variances of the two scores are comparable. Next, we can perform a statistical test to find out if this improvement in test scores is statistically significant in the population.

Example: Descriptive statistics (correlational study)

After collecting data from 653 students, you tabulate descriptive statistics for annual parental income and GPA.

It’s important to check whether you have a broad range of data points. If you don’t, your data may be skewed towards some groups more than others (e.g., high academic achievers), and only limited inferences can be made about a relationship.

| | Parental income (USD) | GPA |
|---|---|---|
| Mean | 62,100 | 3.12 |
| Standard deviation | 15,000 | 0.45 |
| Variance | 225,000,000 | 0.16 |
| Range | 8,000–378,000 | 2.64–4.00 |
| N | 653 | 653 |

A number that describes a sample is called a statistic, while a number describing a population is called a parameter. Using inferential statistics, you can draw conclusions about population parameters based on sample statistics.

Researchers often use two main methods (simultaneously) to make inferences in statistics.

- Estimation: using sample statistics to estimate population parameters.

- Hypothesis testing: a formal process for testing research predictions about the population using samples.

You can make two types of estimates of population parameters from sample statistics:

- A point estimate: a value that represents your best guess of the exact parameter.

- An interval estimate: a range of values that represent your best guess of where the parameter lies.

If your aim is to infer and report population characteristics from sample data, it’s best to use both point and interval estimates in your paper.

You can consider a sample statistic a point estimate for the population parameter when you have a representative sample (e.g., in a wide public opinion poll, the proportion of a sample that supports the current government is taken as the population proportion of government supporters).

There’s always error involved in estimation, so you should also provide a confidence interval as an interval estimate to show the variability around a point estimate.

A confidence interval uses the standard error and the z score from the standard normal distribution to convey where you’d generally expect to find the population parameter most of the time.
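The calculation is short enough to sketch. The example below assumes a large sample, so the z critical value is used (for small samples, a t critical value would replace it), and it reuses the parental income figures from the correlational study’s descriptive table purely as illustrative inputs. The function name is hypothetical.

```python
import math
from statistics import NormalDist


def z_confidence_interval(sample_mean, sample_sd, n, confidence=0.95):
    """Large-sample confidence interval: mean ± z * (sd / sqrt(n))."""
    standard_error = sample_sd / math.sqrt(n)
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)  # about 1.96 for 95%
    margin = z * standard_error
    return sample_mean - margin, sample_mean + margin


# Illustrative inputs: mean 62,100 USD, SD 15,000 USD, n = 653.
low, high = z_confidence_interval(62_100, 15_000, 653)
print(f"95% CI for mean parental income: {low:,.0f} to {high:,.0f} USD")
```

Because the standard error shrinks with the square root of n, quadrupling the sample size roughly halves the width of the interval.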

Hypothesis testing

Using data from a sample, you can test hypotheses about relationships between variables in the population. Hypothesis testing starts with the assumption that the null hypothesis is true in the population, and you use statistical tests to assess whether the null hypothesis can be rejected or not.

Statistical tests determine where your sample data would lie on an expected distribution of sample data if the null hypothesis were true. These tests give two main outputs:

- A test statistic tells you how much your data differs from the null hypothesis of the test.

- A p value tells you the probability of obtaining results at least as extreme as yours if the null hypothesis is actually true in the population.
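Both outputs can be illustrated with the simplest case, a one-sample z test, which assumes the population standard deviation is known. The sketch below is not tied to either example study; the numbers and the function name are hypothetical.

```python
import math
from statistics import NormalDist


def one_sample_z_test(sample_mean, population_mean, population_sd, n):
    """Return (z statistic, two-sided p value) under H0: mean == population_mean."""
    standard_error = population_sd / math.sqrt(n)
    z = (sample_mean - population_mean) / standard_error  # test statistic
    p_two_sided = 2 * (1 - NormalDist().cdf(abs(z)))      # area in both tails
    return z, p_two_sided


z, p = one_sample_z_test(sample_mean=103, population_mean=100, population_sd=15, n=100)
print(f"z = {z:.2f}, p = {p:.4f}")
```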

Statistical tests come in three main varieties:

- Comparison tests assess group differences in outcomes.

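- Regression tests assess how changes in one or more predictor variables relate to changes in an outcome, and can support cause-and-effect conclusions when the research design allows it.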

- Correlation tests assess relationships between variables without assuming causation.

Your choice of statistical test depends on your research questions, research design, sampling method, and data characteristics.

Parametric tests

Parametric tests make powerful inferences about the population based on sample data. But to use them, some assumptions must be met, and only some types of variables can be used. If your data violate these assumptions, you can perform appropriate data transformations or use alternative non-parametric tests instead.

A regression models the extent to which changes in one or more predictor variables result in changes in one or more outcome variables.

- A simple linear regression includes one predictor variable and one outcome variable.

- A multiple linear regression includes two or more predictor variables and one outcome variable.
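For the simple case, the ordinary least squares estimates can be written out directly: the slope is the sum of cross-products of deviations divided by the sum of squared predictor deviations, and the line passes through the two means. This is a minimal sketch with hypothetical data and a hypothetical helper name; Python 3.10+ also provides `statistics.linear_regression` for the same fit, and significance tests on the slope would need a statistics package.

```python
from statistics import mean


def simple_linear_regression(x, y):
    """Ordinary least squares fit of y = intercept + slope * x (one predictor)."""
    x_bar, y_bar = mean(x), mean(y)
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    slope = sxy / sxx
    intercept = y_bar - slope * x_bar  # the fitted line passes through (x_bar, y_bar)
    return slope, intercept


# Hypothetical data: hours studied (predictor) and exam score (outcome).
hours = [1, 2, 3, 4, 5, 6]
score = [52, 55, 61, 64, 68, 71]
slope, intercept = simple_linear_regression(hours, score)
print(f"score ≈ {slope:.2f} * hours + {intercept:.2f}")
```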

Comparison tests usually compare the means of groups. These may be the means of different groups within a sample (e.g., a treatment and control group), the means of one sample group taken at different times (e.g., pretest and posttest scores), or a sample mean and a population mean.

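- A t test is for exactly 1 or 2 groups, typically when the sample is small (30 or less) or the population standard deviation is unknown.

- A z test is for exactly 1 or 2 groups when the sample is large or the population standard deviation is known.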

- An ANOVA is for 3 or more groups.

The z and t tests have subtypes based on the number and types of samples and the hypotheses:

- If you have only one sample that you want to compare to a population mean, use a one-sample test.

- If you have paired measurements (within-subjects design), use a dependent (paired) samples test.

- If you have completely separate measurements from two unmatched groups (between-subjects design), use an independent (unpaired) samples test.

- If you expect a difference between groups in a specific direction, use a one-tailed test.

- If you don’t have any expectations for the direction of a difference between groups, use a two-tailed test.
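For a dependent (paired) samples t test, the test statistic is the mean of the within-pair differences divided by its standard error, with n − 1 degrees of freedom. The sketch below computes only t and the degrees of freedom from hypothetical pretest and posttest scores; turning t into a p value needs the t distribution, which the standard library does not provide, so you would use a t table or a library routine such as SciPy’s `scipy.stats.ttest_rel`. The helper name is hypothetical.

```python
import math
from statistics import mean, stdev


def paired_t_statistic(before, after):
    """Dependent-samples t statistic and degrees of freedom for after - before."""
    diffs = [a - b for a, b in zip(after, before)]
    n = len(diffs)
    se = stdev(diffs) / math.sqrt(n)  # standard error of the mean difference
    t = mean(diffs) / se
    return t, n - 1


pretest = [60, 65, 70, 72, 58, 66, 74, 69]   # hypothetical scores
posttest = [64, 66, 75, 74, 63, 70, 73, 75]
t, df = paired_t_statistic(pretest, posttest)
print(f"t = {t:.2f} with {df} degrees of freedom")
```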

The only parametric correlation test is Pearson’s r. The correlation coefficient (r) tells you the strength and direction of a linear relationship between two quantitative variables.

However, to test whether the correlation in the sample is strong enough to be statistically significant in the population, you also need to perform a significance test of the correlation coefficient, usually a t test, to obtain a p value. This test uses your sample size to calculate how much the correlation coefficient differs from zero in the population.
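The usual significance test for Pearson’s r converts it into a t statistic, t = r·√(n − 2) / √(1 − r²), with n − 2 degrees of freedom, which is how the sample size enters the calculation. The sketch below computes r and t for a small hypothetical data set (not the study’s data); as with other t tests, the p value would then come from a t table or a statistics package. Helper names are hypothetical.

```python
import math
from statistics import mean


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    x_bar, y_bar = mean(x), mean(y)
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    syy = sum((yi - y_bar) ** 2 for yi in y)
    return sxy / math.sqrt(sxx * syy)


def correlation_t_statistic(r, n):
    """t = r * sqrt(n - 2) / sqrt(1 - r**2), returned with n - 2 degrees of freedom."""
    return r * math.sqrt(n - 2) / math.sqrt(1 - r ** 2), n - 2


income = [30, 45, 52, 61, 70, 88]      # hypothetical, thousands of USD
gpa = [2.9, 3.0, 3.3, 3.1, 3.5, 3.6]   # hypothetical
r = pearson_r(income, gpa)
t, df = correlation_t_statistic(r, len(income))
print(f"r = {r:.2f}, t = {t:.2f}, df = {df}")
```

For a fixed r, a larger n produces a larger t, so the same weak correlation can be non-significant in a small sample and significant in a large one.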

You use a dependent-samples, one-tailed t test to assess whether the meditation exercise significantly improved math test scores. The test gives you:

- a t value (test statistic) of 3.00

- a p value of 0.0028

Although Pearson’s r is a test statistic, it doesn’t tell you anything about how significant the correlation is in the population. You also need to test whether this sample correlation coefficient is large enough to demonstrate a correlation in the population.

A t test can also determine how significantly a correlation coefficient differs from zero based on sample size. Since you expect a positive correlation between parental income and GPA, you use a one-sample, one-tailed t test. The t test gives you:

- a t value of 3.08

- a p value of 0.001


The final step of statistical analysis is interpreting your results.

Statistical significance

In hypothesis testing, statistical significance is the main criterion for forming conclusions. You compare your p value to a set significance level (usually 0.05) to decide whether your results are statistically significant or non-significant.

Statistically significant results are considered unlikely to have arisen solely due to chance. There is only a very low chance of such a result occurring if the null hypothesis is true in the population.

This means that you believe the meditation intervention, rather than random factors, directly caused the increase in test scores.

Example: Interpret your results (correlational study)

You compare your p value of 0.001 to your significance threshold of 0.05. With a p value under this threshold, you can reject the null hypothesis. This indicates a statistically significant correlation between parental income and GPA in male college students.

Note that correlation doesn’t necessarily imply causation, because there are often many underlying factors contributing to a complex variable like GPA. Even if one variable is related to another, this may be because of a third variable influencing both of them, or indirect links between the two variables.

Effect size

A statistically significant result doesn’t necessarily mean that there are important real-life applications or clinical outcomes for a finding.

In contrast, the effect size indicates the practical significance of your results. It’s important to report effect sizes along with your inferential statistics for a complete picture of your results. You should also report interval estimates of effect sizes if you’re writing an APA style paper.
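Cohen’s d expresses a difference between two means in standard deviation units. The sketch below shows one common variant, for two independent groups, using the pooled standard deviation; paired designs such as the meditation example are sometimes analyzed with other variants of d, so treat this as an illustration of the idea rather than a reproduction of any figure in this article. The data and helper name are hypothetical.

```python
import math
from statistics import mean, variance


def cohens_d(group_a, group_b):
    """Cohen's d for two independent groups, using the pooled standard deviation."""
    n_a, n_b = len(group_a), len(group_b)
    pooled_var = ((n_a - 1) * variance(group_a) + (n_b - 1) * variance(group_b)) / (n_a + n_b - 2)
    return (mean(group_b) - mean(group_a)) / math.sqrt(pooled_var)


control = [70, 72, 68, 65, 74, 71]     # hypothetical
treatment = [75, 78, 72, 70, 80, 77]   # hypothetical
print(f"d = {cohens_d(control, treatment):.2f}")
```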

With a Cohen’s d of 0.72, there’s medium to high practical significance to your finding that the meditation exercise improved test scores.

Example: Effect size (correlational study)

To determine the effect size of the correlation coefficient, you compare your Pearson’s r value to Cohen’s effect size criteria.
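Cohen’s conventional benchmarks for a correlation are roughly |r| = 0.1 (small), 0.3 (medium) and 0.5 (large). The sketch below encodes those cut-offs; labelling values below 0.1 as "negligible" is an assumption of this example, and the benchmarks themselves are rules of thumb rather than hard thresholds. The function name is hypothetical.

```python
def cohen_r_label(r):
    """Label |r| using Cohen's conventional benchmarks (0.1 small, 0.3 medium, 0.5 large)."""
    size = abs(r)  # direction does not affect the size of the effect
    if size >= 0.5:
        return "large"
    if size >= 0.3:
        return "medium"
    if size >= 0.1:
        return "small"
    return "negligible"  # assumed label for values below Cohen's smallest benchmark


print(cohen_r_label(0.12), cohen_r_label(-0.45))
```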

Decision errors

Type I and Type II errors are mistakes made in research conclusions. A Type I error means rejecting the null hypothesis when it’s actually true, while a Type II error means failing to reject the null hypothesis when it’s false.

You can aim to minimize the risk of these errors by selecting an optimal significance level and ensuring high power. However, there’s a trade-off between the two errors, so a fine balance is necessary.
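The trade-off can be made concrete with a normal-approximation power calculation for a two-sided comparison of two independent means. Holding the effect size and sample size fixed (hypothetical values: d = 0.5, 64 participants per group), lowering the significance level reduces the Type I error risk but raises the Type II error risk, beta = 1 - power. The helper name is hypothetical, and the approximation ignores the tiny chance of rejecting in the wrong tail.

```python
import math
from statistics import NormalDist


def power_two_sample(effect_size, n_per_group, alpha):
    """Approximate power of a two-sided, two-sample z test (normal approximation)."""
    z = NormalDist()
    z_crit = z.inv_cdf(1 - alpha / 2)
    shift = effect_size * math.sqrt(n_per_group / 2)  # expected z statistic under H1
    return z.cdf(shift - z_crit)  # ignores the negligible opposite-tail rejection


for alpha in (0.10, 0.05, 0.01):
    beta = 1 - power_two_sample(effect_size=0.5, n_per_group=64, alpha=alpha)
    print(f"alpha (Type I risk) = {alpha:.2f} -> beta (Type II risk) ≈ {beta:.2f}")
```

The only way to lower both risks at once is to change something else, most often by increasing the sample size.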

Frequentist versus Bayesian statistics

Traditionally, frequentist statistics emphasizes null hypothesis significance testing and always starts with the assumption of a true null hypothesis.

However, Bayesian statistics has grown in popularity as an alternative approach in the last few decades. In this approach, you use previous research to continually update your hypotheses based on your expectations and observations.

The Bayes factor compares the relative strength of evidence for the null versus the alternative hypothesis, rather than leading to a conclusion about whether to reject the null hypothesis.
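In the simplest possible setting, where both hypotheses are single values of a parameter, the Bayes factor reduces to a likelihood ratio. The sketch below compares H1: p = 0.7 against H0: p = 0.5 for hypothetical binomial data (14 successes in 20 trials); a BF10 above 1 favors H1. This point-hypothesis setup is a deliberate simplification: in practice the alternative usually places a prior distribution over p, and the likelihood is averaged over that prior. Function names are hypothetical.

```python
from math import comb


def binomial_likelihood(k, n, p):
    """Probability of k successes in n trials when the success probability is p."""
    return comb(n, k) * p ** k * (1 - p) ** (n - k)


def bayes_factor_point(k, n, p_alt, p_null=0.5):
    """BF10 for two point hypotheses: likelihood under H1 divided by likelihood under H0."""
    return binomial_likelihood(k, n, p_alt) / binomial_likelihood(k, n, p_null)


bf10 = bayes_factor_point(k=14, n=20, p_alt=0.7)  # hypothetical data
print(f"BF10 = {bf10:.2f}")
```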


Step-by-Step Statistics Solutions

Get help on your statistics homework with our easy-to-use statistics calculators.

Here, you will find all the help you need to be successful in your statistics class. Check out our statistics calculators to get step-by-step solutions to almost any statistics problem. Choose from topics such as numerical summary, confidence interval, hypothesis testing, simple regression and more.

Statistics Calculators

Table and graph, numerical summary, basic probability, discrete distribution, continuous distribution, sampling distribution, confidence interval, hypothesis testing, two population, population variance, goodness of fit, analysis of variance, simple regression, multiple regression, time series analysis.

Standard Normal

t-distribution, F-distribution.

What Is Statistics?

- First Online: 10 December 2017


- Christopher J. Wild

- Jessica M. Utts

- Nicholas J. Horton

Part of the book series: Springer International Handbooks of Education (SIHE)


What is statistics? We attempt to answer this question as it relates to grounding research in statistics education. We discuss the nature of statistics as the science of learning from data, its history and traditions, what characterizes statistical thinking and how it differs from mathematics, connections with computing and data science, why learning statistics is essential, and what is most important. Finally, we attempt to gaze into the future, drawing upon what is known about the fast-growing demand for statistical skills and the portents of where the discipline is heading, especially those arising from data science and the promises and problems of big data.


American Association for the Advancement of Science (2015). Meeting theme: Innovations, information, and imaging. Retrieved from https://www.aaas.org/AM2015/theme .

Google Scholar

American Statistical Association Undergraduate Guidelines Workgroup. (2014). Curriculum guidelines for undergraduate programs in statistical science . Alexandria, VA: American Statistical Association. Online. Retrieved from http://www.amstat.org/asa/education/Curriculum-Guidelines-for-Undergraduate-Programs-in-Statistical-Science.aspx

AP Computer Science Principles. (2017). Course and exam description. Retrieved from https://secure-media.collegeboard.org/digitalServices/pdf/ap/ap-computer-science-principles-course-and-exam-description.pdf .

AP Statistics. (2016). Course overview. Retrieved from https://apstudent.collegeboard.org/apcourse/ap-statistics/course-details .

Applebaum, B. (2015, May 21). Vague on your monthly spending? You’re not alone. New York Times , A3.

Arnold, P. A. (2013). Statistical Investigative Questions: An enquiry into posing and answering investigative questions from existing data . Ph.D. thesis, Statistics University of Auckland. Retrieved from https://researchspace.auckland.ac.nz/bitstream/handle/2292/21305/whole.pdf?sequence=2 .

Baldi, B., & Utts, J. (2015). What your future doctor should know about statistics: Must-include topics for introductory undergraduate biostatistics. The American Statistician, 69 (3), 231–240.

Article Google Scholar

Bartholomew, D. (1995). What is statistics? Journal of the Royal Statistical Society, Series A: Statistics in Society, 158 , 1–20.

Box, G. E. P. (1990). Commentary. Technometrics, 32 (3), 251–252.

Breiman, L. (2001). Statistical modeling: The two cultures. Statistical Science, 16 (3), 199–231.

Brown, E. N., & Kass, R. E. (2009). What is statistics? (with discussion). The American Statistician, 63 (2), 105–123.

Carver, R. H., & Stevens, M. (2014). It is time to include data management in introductory statistics. In K. Makar, B. de Sousa, & R. Gould (Eds.), Proceedings of the ninth international conference on teaching statistics . Retrieved from http://iase-web.org/icots/9/proceedings/pdfs/ICOTS9_C134_CARVER.pdf

Chambers, J. M. (1993). Greater or lesser statistics: A choice for future research. Statistics and Computing, 3 (4), 182–184.

Chance, B. (2002). Components of statistical thinking and implications for instruction and assessment. Journal of Statistics Education, 10 (3). Retrieved from http://www.amstat.org/publications/jse/v10n3/chance.html .

Cobb, G. W. (2015). Mere renovation is too little, too late: We need to rethink the undergraduate curriculum from the ground up. The American Statistician, 69 (4), 266–282.

Cobb, G. W., & Moore, D. S. (1997). Mathematics, statistics, and teaching. The American Mathematical Monthly, 104 (9), 801–823.

Cohn, V., & Cope, L. (2011). News and numbers: A writer’s guide to statistics . Hoboken, NJ: Wiley-Blackwell.

CRA. (2012). Challenges and opportunities with big data: A community white paper developed by leading researchers across the United States. Retrieved from http://cra.org/ccc/wp-content/uploads/sites/2/2015/05/bigdatawhitepaper.pdf .

De Veaux, R. D., & Velleman, P. (2008). Math is music; statistics is literature. Amstat News, 375 , 54–60.

Eddy, D. M. (1982). Probabilistic reasoning in clinical medicine: Problems and opportunities. In D. Kahneman, P. Slovic, & A. Tversky (Eds.), Judgment under uncertainty: Heuristics and biases (pp. 249–267). Cambridge, England: Cambridge University Press.

Chapter Google Scholar

Farrell, D., & Greig, F. (2015, May). Weathering volatility: Big data on the financial ups and downs of U.S. individuals (J.P. Morgan Chase & Co. Institute Technical Report). Retrieved from August 15, 2015, http://www.jpmorganchase.com/corporate/institute/research.htm .

Fienberg, S. E. (1992). A brief history of statistics in three and one-half chapters: A review essay. Statistical Science, 7 (2), 208–225.

Fienberg, S. E. (2014). What is statistics? Annual Review of Statistics and Its Applications, 1 , 1–9.

Finzer, W. (2013). The data science education dilemma. Technology Innovations in Statistics Education, 7 (2). Retrieved from http://escholarship.org/uc/item/7gv0q9dc .

Forbes, S. (2014). The coming of age of statistics education in New Zealand, and its influence internationally. Journal of Statistics Education, 22 (2). Retrieved from http://www.amstat.org/publications/jse/v22n2/forbes.pdf .

Friedman, J. H. (2001). The role of statistics in the data revolution? International Statistical Review, 69 (1), 5–10.

Friendly, M. (2008). The golden age of statistical graphics. Statistical Science, 23 (4), 502–535.

Future of Statistical Sciences. (2013). Statistics and Science: A report of the London Workshop on the Future of the Statistical Sciences . Retrieved from http://bit.ly/londonreport .

GAISE College Report. (2016). Guidelines for assessment and instruction in Statistics Education College Report , American Statistical Association, Alexandria, VA. Retrieved from http://www.amstat.org/education/gaise .

GAISE K-12 Report. (2005). Guidelines for assessment and instruction in Statistics Education K-12 Report , American Statistical Association, Alexandria, VA. Retrieved from http://www.amstat.org/education/gaise .

Gigerenzer, G., Gaissmaier, W., Kurz-Milcke, E., Schwartz, L. M., & Woloshin, S. (2008). Helping doctors and patients make sense of health statistics. Psychological Science in the Public Interest, 8 (2), 53–96.

Grolemund, G., & Wickham, H. (2014). A cognitive interpretation of data analysis. International Statistical Review, 82 (2), 184–204.

Hacking, I. (1990). The taming of chance . New York, NY: Cambridge University Press.

Book Google Scholar

Hahn, G. J., & Doganaksoy, N. (2012). A career in statistics: Beyond the numbers . Hoboken, NJ: Wiley.

Hand, D. J. (2014). The improbability principle: Why coincidences, miracles, and rare events happen every day . New York, NY: Scientific American.

Holmes, P. (2003). 50 years of statistics teaching in English schools: Some milestones (with discussion). Journal of the Royal Statistical Society, Series D (The Statistician), 52 (4), 439–474.

Horton, N. J. (2015). Challenges and opportunities for statistics and statistical education: Looking back, looking forward. The American Statistician, 69 (2), 138–145.

Horton, N. J., & Hardin, J. (2015). Teaching the next generation of statistics students to “Think with Data”: Special issue on statistics and the undergraduate curriculum. The American Statistician, 69 (4), 258–265. Retrieved from http://amstat.tandfonline.com/doi/full/10.1080/00031305.2015.1094283

Ioannidis, J. (2005). Why most published research findings are false. PLoS Medicine, 2 , e124.

Kendall, M. G. (1960). Studies in the history of probability and statistics. Where shall the history of statistics begin? Biometrika, 47 (3), 447–449.

Konold, C., & Pollatsek, A. (2002). Data analysis as the search for signals in noisy processes. Journal for Research in Mathematics Education, 33 (4), 259–289.

Lawes, C. M., Vander Hoorn, S., Law, M. R., & Rodgers, A. (2004). High cholesterol. In M. Ezzati, A. D. Lopez, A. Rodgers, & C. J. L. Murray (Eds.), Comparative quantification of health risks, global and regional burden of disease attributable to selected major risk factors (Vol. 1, pp. 391–496). Geneva: World Health Organization.

Live Science. (2012, February 22). Citrus fruits lower women’s stroke risk . Retrieved from http://www.livescience.com/18608-citrus-fruits-stroke-risk.html .

MacKay, R. J., & Oldford, R. W. (2000). Scientific method, statistical method and the speed of light. Statistical Science, 15(3), 254–278.

Madigan, D., & Gelman, A. (2009). Comment. The American Statistician, 63(2), 114–115.

Manyika, J., Chui, M., Brown, B., Bughin, J., Dobbs, R., Roxburgh, C., & Byers, A. H. (2011). Big data: The next frontier for innovation, competition, and productivity. Retrieved from http://www.mckinsey.com/business-functions/digital-mckinsey/our-insights/big-data-the-next-frontier-for-innovation

Marquardt, D. W. (1987). The importance of statisticians. Journal of the American Statistical Association, 82(397), 1–7.

Moore, D. S. (1998). Statistics among the Liberal Arts. Journal of the American Statistical Association, 93(444), 1253–1259.

Moore, D. S. (1999). Discussion: What shall we teach beginners? International Statistical Review, 67(3), 250–252.

Moore, D. S., & Notz, W. I. (2016). Statistics: Concepts and controversies (9th ed.). New York, NY: Macmillan Learning.

NBC News. (2011, January 4). Walk faster and you just might live longer. Retrieved from http://www.nbcnews.com/id/40914372/ns/health-fitness/t/walk-faster-you-just-might-live-longer/#.Vc-yHvlViko

NBC News. (2012, May 16). 6 cups a day? Coffee lovers less likely to die, study finds. Retrieved from http://vitals.nbcnews.com/_news/2012/05/16/11704493-6-cups-a-day-coffee-lovers-less-likely-to-die-study-finds?lite

Nolan, D., & Perrett, J. (2016). Teaching and learning data visualization: Ideas and assignments. The American Statistician, 70(3), 260–269. Retrieved from http://arxiv.org/abs/1503.00781

Nolan, D., & Temple Lang, D. (2010). Computing in the statistics curricula. The American Statistician, 64(2), 97–107.

Nolan, D., & Temple Lang, D. (2014). XML and web technologies for data sciences with R. New York, NY: Springer.

Nuzzo, R. (2014). Scientific method: Statistical errors. Nature, 506, 150–152. Retrieved from http://www.nature.com/news/scientific-method-statistical-errors-1.14700

Pfannkuch, M., Budgett, S., Fewster, R., Fitch, M., Pattenwise, S., Wild, C., et al. (2016). Probability modeling and thinking: What can we learn from practice? Statistics Education Research Journal, 15(2), 11–37. Retrieved from http://iase-web.org/documents/SERJ/SERJ15(2)_Pfannkuch.pdf

Pfannkuch, M., & Wild, C. J. (2004). Towards an understanding of statistical thinking. In D. Ben-Zvi & J. Garfield (Eds.), The challenge of developing statistical literacy, reasoning, and thinking (pp. 17–46). Dordrecht, The Netherlands: Kluwer Academic Publishers.

Porter, T. M. (1986). The rise of statistical thinking 1820–1900. Princeton, NJ: Princeton University Press.

Pullinger, J. (2014). Statistics making an impact. Journal of the Royal Statistical Society, Series A, 176(4), 819–839.

Ridgway, J. (2015). Implications of the data revolution for statistics education. International Statistical Review, 84(3), 528–549. Retrieved from http://onlinelibrary.wiley.com/doi/10.1111/insr.12110/epdf

Rodriguez, R. N. (2013). The 2012 ASA Presidential Address: Building the big tent for statistics. Journal of the American Statistical Association, 108(501), 1–6.

Scheaffer, R. L. (2001). Statistics education: Perusing the past, embracing the present, and charting the future. Newsletter for the Section on Statistical Education, 7(1). Retrieved from https://www.amstat.org/sections/educ/newsletter/v7n1/Perusing.html

Schoenfeld, A. H. (1985). Mathematical problem solving. Orlando, FL: Academic Press.

Silver, N. (2014, August 25). Is the polling industry in stasis or in crisis? FiveThirtyEight Politics. Retrieved August 15, 2015, from http://fivethirtyeight.com/features/is-the-polling-industry-in-stasis-or-in-crisis

Snee, R. (1990). Statistical thinking and its contribution to quality. The American Statistician, 44(2), 116–121.

Stigler, S. M. (1986). The history of statistics: The measurement of uncertainty before 1900. Cambridge, MA: Harvard University Press.

Stigler, S. M. (2016). The seven pillars of statistical wisdom. Cambridge, MA: Harvard University Press.

Utts, J. (2003). What educated citizens should know about statistics and probability. The American Statistician, 57(2), 74–79.

Utts, J. (2010). Unintentional lies in the media: Don’t blame journalists for what we don’t teach. In C. Reading (Ed.), Proceedings of the Eighth International Conference on Teaching Statistics. Data and Context in Statistics Education. Voorburg, The Netherlands: International Statistical Institute.

Utts, J. (2015a). Seeing through statistics (4th ed.). Stamford, CT: Cengage Learning.

Utts, J. (2015b). The many facets of statistics education: 175 years of common themes. The American Statistician, 69(2), 100–107.

Utts, J., & Heckard, R. (2015). Mind on statistics (5th ed.). Stamford, CT: Cengage Learning.

Vere-Jones, D. (1995). The coming of age of statistical education. International Statistical Review, 63(1), 3–23.

Wasserstein, R. (2015). Communicating the power and impact of our profession: A heads up for the next Executive Directors of the ASA. The American Statistician, 69(2), 96–99.

Wasserstein, R. L., & Lazar, N. A. (2016). The ASA’s statement on p-values: Context, process, and purpose. The American Statistician, 70(2), 129–133.

Wickham, H. (2014). Tidy data. Journal of Statistical Software, 59(10). Retrieved from http://www.jstatsoft.org/v59/i10/

Wild, C. J. (1994). On embracing the ‘wider view’ of statistics. The American Statistician, 48(2), 163–171.

Wild, C. J. (2015). Further, faster, wider. The American Statistician. Retrieved from http://nhorton.people.amherst.edu/mererenovation/18_Wild.PDF

Wild, C. J. (2017). Statistical literacy as the earth moves. Statistics Education Research Journal, 16(1), 31–37.

Wild, C. J., & Pfannkuch, M. (1999). Statistical thinking in empirical enquiry (with discussion). International Statistical Review, 67(3), 223–265.

Author information

Authors and affiliations.

Department of Statistics, The University of Auckland, Auckland, New Zealand

Christopher J. Wild

Department of Statistics, University of California—Irvine, Irvine, CA, USA

Jessica M. Utts

Department of Mathematics and Statistics, Amherst College, Amherst, MA, USA

Nicholas J. Horton

Corresponding author

Correspondence to Christopher J. Wild.

Editor information

Editors and affiliations.

Faculty of Education, The University of Haifa, Haifa, Israel

Dani Ben-Zvi

School of Education, University of Queensland, St Lucia, Queensland, Australia

Katie Makar

Department of Educational Psychology, The University of Minnesota, Minneapolis, Minnesota, USA

Joan Garfield

Copyright information

© 2018 Springer International Publishing AG

About this chapter

Wild, C.J., Utts, J.M., Horton, N.J. (2018). What Is Statistics? In: Ben-Zvi, D., Makar, K., Garfield, J. (eds) International Handbook of Research in Statistics Education. Springer International Handbooks of Education. Springer, Cham. https://doi.org/10.1007/978-3-319-66195-7_1

DOI: https://doi.org/10.1007/978-3-319-66195-7_1

Published: 10 December 2017

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-66193-3

Online ISBN: 978-3-319-66195-7

eBook Packages: Education Education (R0)

The Six Sigma Approach: A Data-Driven Approach To Problem-Solving

If you are a project manager or an engineer, you may already have heard of the Six Sigma approach to problem-solving. Online Six Sigma courses that teach its principles present this data-driven approach as a better way to tackle problems. With a Six Sigma Green Belt certification, you will be able to turn practical problems into practical solutions using only facts and data.

This approach leaves no room for gut feel or jumping to conclusions. If you are reading this article, you are probably still curious about how the Six Sigma approach to problem-solving works.

What is the Six Sigma Approach?

Let’s look at what Six Sigma thinking involves. As outlined in free Six Sigma Green Belt Certification training, the approach is abbreviated as DMAIC: the methodology holds that any process can be Defined, Measured, Analyzed, Improved, and Controlled. These five phases make up the approach, and every Six Sigma project goes through all of them. In the Define phase, the problem is examined from several perspectives to establish its scope. All possible inputs to the process that may be causing the problem are compared, and the critical few are identified. These inputs are then Measured and Analyzed to determine whether they are the root cause of the problem. Once the root cause has been identified, the process can be fixed, or Improved. After the process has been improved, it must be Controlled to ensure that the fix holds in the long term.

Every output (Y) is a function of one or more inputs (X)

Any process that takes inputs (X) and delivers outputs (Y) falls within the purview of the Six Sigma approach. X may represent an input, cause, or problem, and Y may represent an output, effect, or symptom. Because the output Y is generated from the inputs X, controlling the inputs controls the outputs.

This way of thinking is called Y = f(X) thinking, and it is the core mechanism of Six Sigma. Every problem has to be expressed in the form of this equation. That may look difficult, but it is simply a new way of looking at the problem.

Keep in mind that what X and Y stand for varies from situation to situation, but the terms must be paired consistently: an input goes with an output, a cause with an effect, and a problem with a symptom. If X is a cause, Y should not be called an output; if X is an input, Y should not be called an effect.

Let’s go further. The equation Y = f(X) can involve several subordinate outputs, which may act as leading indicators of the overall “Big Y.” For example, if turnaround time (TAT) was identified as the Big Y, the improvement team might examine leading indicators such as cycle time and lead time as little Ys. Each little Y may flow down into its own Y = f(X) relationship, and some of the critical variables for one little Y may also affect another. Such a shared variable could be your potential X, or critical X.
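The flow-down from a Big Y to little Ys can be sketched as nested functions. Everything in this sketch is hypothetical: the little Ys (`queue_time`, `processing_time`), their inputs and formulas, and the assumption that the Big Y is simply their sum are invented for illustration. The point it shows is that one input, `staff_count`, feeds both little Ys, which makes it a candidate critical X for the Big Y.

```python
# Hypothetical flow-down: all names, formulas, and numbers are illustrative assumptions.


def queue_time(staff_count: int, arrivals_per_hour: float) -> float:
    """Little Y #1: average hours an order waits (made-up relationship)."""
    return arrivals_per_hour / staff_count


def processing_time(staff_count: int, batch_size: int) -> float:
    """Little Y #2: hours to process one batch (made-up relationship)."""
    return 0.5 * batch_size / staff_count


def turnaround_time(staff_count: int, arrivals_per_hour: float, batch_size: int) -> float:
    """Big Y: assumed here to be the sum of the two little Ys."""
    return queue_time(staff_count, arrivals_per_hour) + processing_time(staff_count, batch_size)


# staff_count appears in both little Ys, so changing it moves the Big Y through two paths.
for staff in (2, 4):
    tat = turnaround_time(staff, arrivals_per_hour=8.0, batch_size=4)
    print(f"staff={staff}: turnaround = {tat:.2f} h")
```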

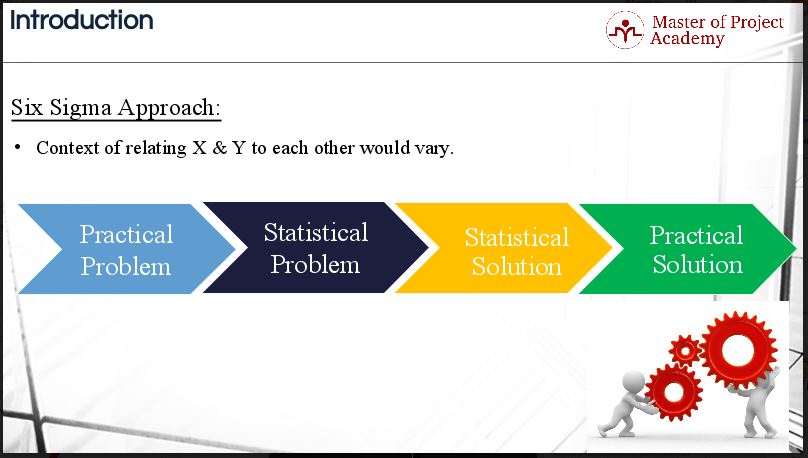

A practical vs. a statistical problem and solution

In the Six Sigma approach, the practical problem is the problem or pain point that has persisted on your production or shop floor. You need to convert this practical problem into a statistical problem, that is, a problem addressed with facts and data analysis methods. As a reminder, the measurement and analysis of a statistical problem are carried out in the Measure and Analyze phases of the Six Sigma approach, or DMAIC.
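Converting a practical problem such as “deliveries are too slow” into a statistical one usually starts by stating it in measurable terms. The sketch below assumes a hypothetical sample of delivery times and a hypothetical 5-day target, and summarizes the baseline as a mean, a sample standard deviation, and the fraction of deliveries over target. The function name and data are illustrative only.

```python
from statistics import mean, stdev


def describe_baseline(times, target):
    """Summarize a baseline sample as measurable statistics.

    times:  observed delivery times in days (hypothetical sample)
    target: maximum acceptable delivery time in days (hypothetical)
    """
    late = sum(1 for t in times if t > target)
    return {
        "mean": mean(times),
        "stdev": stdev(times),  # sample standard deviation (n - 1 denominator)
        "late_fraction": late / len(times),
    }


baseline = describe_baseline([3, 5, 4, 7, 6, 4, 8, 3], target=5)
print(baseline)
```

Stated this way, “too slow” becomes a question about a mean, a spread, and a proportion that later phases can test and track.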

In this approach, the statistical problem is then converted into a statistical solution: a solution with a known level of confidence or risk, as opposed to an “I think” solution. It is not based on gut feeling; it is a data-driven solution because it is derived from measured data through the phases of the Six Sigma approach.
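A solution with a “known confidence” can be illustrated by an interval estimate of the improvement. The sketch below computes an approximate confidence interval for the difference between before and after means using a large-sample normal approximation with unpooled variances. That method, the function name, and the sample data are our assumptions; for samples as small as this one, a t-based (Welch) interval would be somewhat wider and more appropriate.

```python
from math import sqrt
from statistics import NormalDist, mean, variance


def diff_confidence_interval(before, after, confidence=0.95):
    """Approximate confidence interval for mean(before) - mean(after).

    Large-sample normal approximation with unpooled sample variances.
    """
    diff = mean(before) - mean(after)
    se = sqrt(variance(before) / len(before) + variance(after) / len(after))
    z = NormalDist().inv_cdf(0.5 + confidence / 2)  # two-sided critical value, about 1.96 at 95%
    return diff - z * se, diff + z * se


# Hypothetical cycle times (hours) before and after an improvement.
before = [10, 12, 11, 13, 12, 10, 11, 12]
after = [8, 9, 8, 10, 9, 8, 9, 9]

low, high = diff_confidence_interval(before, after)
print(f"Mean reduction: 95% CI approximately ({low:.2f}, {high:.2f}) hours")
```

If the whole interval lies above zero, the data support a real reduction at roughly the stated confidence level, and the width of the interval expresses the remaining uncertainty.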

A DMAIC project helps you convert your practical problem into a statistical problem, and then your statistical problem into a statistical solution. The same project also yields practical solutions that are neither overly complex nor too difficult to implement. That is how the Six Sigma approach works.

This approach may seem like a lot of work. Wouldn’t it be better to guess what the problem is and work from there? That would certainly be easier, but picking a root cause at random may lead to hard work that doesn’t solve the problem permanently. You might build a solution that fixes only 10% of the problem, whereas following the Six Sigma approach helps you identify the true root cause. Using this data-driven approach, you will only have to go through the problem-solving process once.

The Six Sigma approach is a powerful problem-solving tool. By working from a practical problem to a statistical problem, then to a statistical solution, and finally to a practical solution, you can be confident that you have identified the correct root cause of the problem affecting the quality of your products. The approach follows a standard sequence, DMAIC, that helps the problem-solver convert a practical problem into a practical solution based on facts and data. It is also important to note that Six Sigma is not a one-person show: problem-solving should be approached as a team, with subject matter experts and decision-makers involved.
