

Discourse analysis


- Brian David Hodges, associate professor, vice chair (education), and director¹

- Ayelet Kuper, assistant professor²

- Scott Reeves, associate professor³

- ¹ Department of Psychiatry, Wilson Centre for Research in Education, University of Toronto, 200 Elizabeth Street, Eaton South 1-565, Toronto, ON, Canada M5G 2C4

- ² Department of Medicine, Sunnybrook Health Sciences Centre, and Wilson Centre for Research in Education, University of Toronto, 2075 Bayview Avenue, Room HG 08, Toronto, ON, Canada M4N 3M5

- ³ Department of Psychiatry, Li Ka Shing Knowledge Institute, Centre for Faculty Development, and Wilson Centre for Research in Education, University of Toronto, 200 Elizabeth Street, Eaton South 1-565, Toronto, ON, Canada M5G 2C4

- Correspondence to: B D Hodges brian.hodges{at}utoronto.ca

This article explores how discourse analysis is useful for a wide range of research questions in health care and the health professions.

Previous articles in this series discussed several methodological approaches used by qualitative researchers in the health professions. This article focuses on discourse analysis. It provides background information for those who will encounter this approach in their reading, rather than instructions for conducting such research.

What is discourse analysis?

Discourse analysis is about studying and analysing the uses of language. Because the term is used in many different ways, we have simplified approaches to discourse analysis into three clusters (table 1) and illustrated how each of these approaches might be used to study a single domain: doctor-patient communication about diabetes management (table 2). Regardless of approach, a vast array of data sources is available to the discourse analyst, including transcripts from interviews, focus groups, samples of conversations, published literature, media, and web based materials.


Table 1. Three approaches to discourse analysis

Table 2. Three approaches to a specific research question: example of doctor-patient communications about diabetes management

What is formal linguistic discourse analysis?

The first approach, formal linguistic discourse analysis, involves a structured analysis of text in order to find general underlying rules of linguistic or communicative function behind the text.⁴ For example, Lacson and colleagues compared human-human and machine-human dialogues in order to study the possibility of using computers to compress human conversations about patients in a dialysis unit into a form that physicians could use to make clinical decisions.⁵ They transcribed phone conversations between nurses and 25 adult dialysis patients over a three-month period and coded all 17 385 words by semantic type (categories of meaning) and structure (for example, sentence length, word position). They presented their work as a “first step towards an automatic analysis of spoken medical dialogue” that would allow physicians to “answer questions …
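To make the idea of coding words "by semantic type and structure" concrete, here is a minimal Python sketch. It is not Lacson and colleagues' method: the `SEMANTIC_TYPES` lexicon, its categories, and the function name `code_transcript` are hypothetical, and sentences are split with a naive regular expression.

```python
import re
from dataclasses import dataclass

# Hypothetical lexicon mapping words to semantic types (categories of meaning).
# A real study would use a validated scheme; these entries are placeholders.
SEMANTIC_TYPES = {
    "dizzy": "symptom",
    "tired": "symptom",
    "weight": "measurement",
    "pressure": "measurement",
    "dialysis": "treatment",
}

@dataclass
class CodedToken:
    word: str
    sentence_index: int   # which sentence the word belongs to (0-based)
    position: int         # word position within its sentence (1-based)
    sentence_length: int  # number of words in that sentence
    semantic_type: str    # category from the lexicon, or "other"

def code_transcript(text: str) -> list[CodedToken]:
    """Split text into sentences and words, then code each word by structure and meaning."""
    # Naive sentence split: break after ., ! or ? followed by whitespace.
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]
    coded = []
    for s_idx, sentence in enumerate(sentences):
        words = re.findall(r"[a-z']+", sentence.lower())
        for pos, word in enumerate(words, start=1):
            coded.append(CodedToken(word, s_idx, pos, len(words),
                                    SEMANTIC_TYPES.get(word, "other")))
    return coded

tokens = code_transcript("I felt dizzy after dialysis. My weight is up.")
for t in tokens:
    if t.semantic_type != "other":
        print(t.word, t.position, t.sentence_length, t.semantic_type)
```

Each word ends up with both a structural description (position, sentence length) and a semantic label, which is the kind of table a formal analysis can then search for recurring patterns.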


Critical Discourse Analysis | Definition, Guide & Examples

Published on August 23, 2019 by Amy Luo . Revised on June 22, 2023.

Critical discourse analysis (or discourse analysis) is a research method for studying written or spoken language in relation to its social context. It aims to understand how language is used in real-life situations.

When you conduct discourse analysis, you might focus on:

- The purposes and effects of different types of language

- Cultural rules and conventions in communication

- How values, beliefs and assumptions are communicated

- How language use relates to its social, political and historical context

Discourse analysis is a common qualitative research method in many humanities and social science disciplines, including linguistics, sociology, anthropology, psychology and cultural studies.


Conducting discourse analysis means examining how language functions and how meaning is created in different social contexts. It can be applied to any instance of written or oral language, as well as non-verbal aspects of communication such as tone and gestures.

Materials that are suitable for discourse analysis include:

- Books, newspapers and periodicals

- Marketing material, such as brochures and advertisements

- Business and government documents

- Websites, forums, social media posts and comments

- Interviews and conversations

By analyzing these types of discourse, researchers aim to gain an understanding of social groups and how they communicate.


Unlike linguistic approaches that focus only on the rules of language use, discourse analysis emphasizes the contextual meaning of language.

It focuses on the social aspects of communication and the ways people use language to achieve specific effects (e.g. to build trust, to create doubt, to evoke emotions, or to manage conflict).

Instead of focusing on smaller units of language, such as sounds, words or phrases, discourse analysis is used to study larger chunks of language, such as entire conversations, texts, or collections of texts. The selected sources can be analyzed on multiple levels.

| Level of communication | What is analyzed? |
|---|---|
| Vocabulary | Words and phrases can be analyzed for ideological associations, formality, and euphemistic and metaphorical content. |
| Grammar | The way that sentences are constructed (e.g., verb tenses, active or passive construction, and the use of imperatives and questions) can reveal aspects of intended meaning. |
| Structure | The structure of a text can be analyzed for how it creates emphasis or builds a narrative. |
| Genre | Texts can be analyzed in relation to the conventions and communicative aims of their genre (e.g., political speeches or tabloid newspaper articles). |
| Non-verbal communication | Non-verbal aspects of speech, such as tone of voice, pauses, gestures, and sounds like “um”, can reveal aspects of a speaker’s intentions, attitudes, and emotions. |
| Conversational codes | The interaction between people in a conversation, such as turn-taking, interruptions and listener response, can reveal aspects of cultural conventions and social roles. |
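At the grammar level, one feature analysts often look for is passive construction: an agentless passive such as “Mistakes were made” can leave the responsible actor unnamed. The Python sketch below flags candidate passives with a deliberately crude regular expression (a form of “to be” followed by a word ending in -ed or -en). The pattern and the function name `find_passives` are illustrative assumptions, not a standard tool, and every hit would still need to be read in context.

```python
import re

# Crude heuristic: a form of "to be" followed by a word ending in -ed or -en.
# It misses irregular participles ("was made") and can flag non-passives ("is open").
PASSIVE = re.compile(
    r"\b(?:am|is|are|was|were|be|been|being)\s+(\w+(?:ed|en))\b",
    re.IGNORECASE,
)

def find_passives(sentence: str) -> list[str]:
    """Return the participles that follow a form of 'to be' in the sentence."""
    return PASSIVE.findall(sentence)

examples = [
    "Mistakes were made.",                     # missed: 'made' is irregular
    "The decision was taken by the board.",
    "The board decided to cut funding.",       # active voice: no match
    "Concerns have been raised and ignored.",
]
for s in examples:
    print(find_passives(s), "<-", s)
```

The sketch misses the classic “Mistakes were made” because “made” is irregular, which is a good reminder that automated flags only point to passages for closer, interpretive reading.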

Discourse analysis is a qualitative and interpretive method of analyzing texts (in contrast to more systematic methods like content analysis). You make interpretations based on both the details of the material itself and on contextual knowledge.

There are many different approaches and techniques you can use to conduct discourse analysis, but the steps below outline the basic structure you need to follow. Following these steps can help you avoid the pitfalls of confirmation bias, which can cloud your analysis.

Step 1: Define the research question and select the content of analysis

To do discourse analysis, you begin with a clearly defined research question. Once you have developed your question, select a range of material that is appropriate to answer it.

Discourse analysis is a method that can be applied both to large volumes of material and to smaller samples, depending on the aims and timescale of your research.

Step 2: Gather information and theory on the context

Next, you must establish the social and historical context in which the material was produced and intended to be received. Gather factual details of when and where the content was created, who the author is, who published it, and whom it was disseminated to.

As well as understanding the real-life context of the discourse, you can also conduct a literature review on the topic and construct a theoretical framework to guide your analysis.
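One way to keep the Step 2 facts attached to each piece of material is a small structured record. The Python sketch below is a hypothetical layout (the class name `SourceContext` and its fields are assumptions that simply mirror the questions listed above), and the example values are invented.

```python
from dataclasses import dataclass, field
from datetime import date

@dataclass
class SourceContext:
    """Hypothetical record of the Step 2 facts about one source."""
    title: str
    author: str
    publisher: str
    created: date   # when the material was produced
    place: str      # where it was produced
    audience: str   # whom it was disseminated to
    theory_notes: list[str] = field(default_factory=list)  # links to literature and framework

    def summary(self) -> str:
        """One-line description to keep beside the material during analysis."""
        return (f"{self.title} by {self.author} ({self.publisher}, {self.place}, "
                f"{self.created.isoformat()}), aimed at {self.audience}")

# Invented example values, for illustration only.
leaflet = SourceContext("Living with diabetes", "Clinic staff", "Example Health Trust",
                        date(2020, 3, 1), "Example City", "newly diagnosed patients")
leaflet.theory_notes.append("Compare with framing of patient responsibility in the literature review.")
print(leaflet.summary())
```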

Step 3: Analyze the content for themes and patterns

This step involves closely examining various elements of the material – such as words, sentences, paragraphs, and overall structure – and relating them to attributes, themes, and patterns relevant to your research question.
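Relating elements at different levels (paragraph, sentence, word) to themes is easier if every match keeps its location. The Python sketch below is a minimal illustration under stated assumptions: the theme names and cue words in `THEMES` are hypothetical, paragraphs are separated by blank lines, and sentences are split naively.

```python
import re

# Hypothetical themes and cue words; in practice these would come from the
# research question and the theoretical framework built in Step 2.
THEMES = {
    "responsibility": {"blame", "fault", "responsible"},
    "risk": {"danger", "risk", "threat"},
}

def locate_themes(text: str) -> list[tuple[int, int, set[str]]]:
    """Return (paragraph, sentence, themes) for every sentence that matches a theme."""
    results = []
    paragraphs = [p for p in text.split("\n\n") if p.strip()]
    for p_idx, para in enumerate(paragraphs, start=1):
        sentences = re.split(r"(?<=[.!?])\s+", para.strip())
        for s_idx, sentence in enumerate(sentences, start=1):
            words = set(re.findall(r"[a-z]+", sentence.lower()))
            themes = {name for name, cues in THEMES.items() if words & cues}
            if themes:
                results.append((p_idx, s_idx, themes))
    return results

sample = ("Nobody is to blame. Prices rose.\n\n"
          "The risk is real. It is a threat we are responsible for.")
for hit in locate_themes(sample):
    print(hit)
```

Keyword cues only suggest where a theme may be present; the analyst still decides, by reading each located sentence, whether the theme is really there.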

Step 4: Review your results and draw conclusions

Once you have assigned particular attributes to elements of the material, reflect on your results to examine the function and meaning of the language used. Here, you will consider your analysis in relation to the broader context that you established earlier to draw conclusions that answer your research question.
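To relate coded results back to the context gathered in Step 2, it can help to tabulate themes by a context attribute, such as the type of outlet. The Python sketch below uses invented data; the function name `themes_by_context` and the "tabloid/broadsheet" attribute are assumptions for illustration. Counts like these do not answer a qualitative research question by themselves; they point to contrasts worth rereading in context.

```python
from collections import Counter, defaultdict

# Invented coded segments: (source id, theme) pairs assigned in Step 3,
# plus one context attribute per source recorded in Step 2.
coded = [("s1", "risk"), ("s1", "blame"), ("s2", "risk"), ("s3", "risk"), ("s3", "risk")]
context = {"s1": "tabloid", "s2": "broadsheet", "s3": "tabloid"}

def themes_by_context(coded, context):
    """Count how often each theme occurs within each context category."""
    table = defaultdict(Counter)
    for source_id, theme in coded:
        table[context[source_id]][theme] += 1
    return table

for category, counts in themes_by_context(coded, context).items():
    print(category, dict(counts))
```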


Cite this Scribbr article


Luo, A. (2023, June 22). Critical Discourse Analysis | Definition, Guide & Examples. Scribbr. Retrieved June 10, 2024, from https://www.scribbr.com/methodology/discourse-analysis/


DISCOURSE ANALYSIS

- September 2015

- In book: Issues in the study of language and literature (pp.169-195)

- Publisher: Ibadan: Kraft Books Limited.

- University of Port Harcourt

- University of Ibadan


Critical Discourse Analysis | Definition, Guide & Examples

Published on 5 May 2022 by Amy Luo . Revised on 5 December 2022.


Cite this Scribbr article


Luo, A. (2022, December 05). Critical Discourse Analysis | Definition, Guide & Examples. Scribbr. Retrieved 10 June 2024, from https://www.scribbr.co.uk/research-methods/discourse-analysis-explained/


Discourse Analysis – Methods, Types and Examples


Discourse Analysis

Definition:

Discourse analysis is a method of studying how people use language in different situations to understand what they mean and what messages they are sending. It helps us understand how language is used to create social relationships and cultural norms.

It examines language use in various forms of communication such as spoken, written, visual or multi-modal texts, and focuses on how language is used to construct social meaning and relationships, and how it reflects and reinforces power dynamics, ideologies, and cultural norms.

Types of Discourse Analysis

Some of the most common types of discourse analysis are:

Conversation Analysis

This type of discourse analysis focuses on analyzing the structure of talk and how participants in a conversation make meaning through their interaction. It is often used to study face-to-face interactions, such as interviews or everyday conversations.
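Conversation analysts work from detailed transcripts, and a simple tally can help locate sequences worth close analysis. The Python sketch below assumes a hypothetical transcript format with one turn per line (`SPEAKER: utterance`) and uses `[` to mark the onset of overlapping talk, following Jefferson-style notation; the function name and the invented doctor-patient exchange are illustrative only. The counts are a finding aid, not the analysis itself.

```python
from collections import Counter

def turn_stats(transcript: str) -> tuple[Counter, Counter]:
    """Count turns per speaker and turns containing an overlap marker '['.

    Assumes one turn per line in the form 'SPEAKER: utterance'.
    """
    turns, overlaps = Counter(), Counter()
    for line in transcript.strip().splitlines():
        speaker, _, utterance = line.partition(":")
        speaker = speaker.strip()
        turns[speaker] += 1
        if "[" in utterance:
            overlaps[speaker] += 1
    return turns, overlaps

# Invented exchange, for illustration only.
sample = """
DR: So how have your sugars been this [week
PT: [not great
DR: Okay. Have you been taking the insulin?
PT: Mostly.
DR: Mostly.
"""
turns, overlaps = turn_stats(sample)
print(turns)
print(overlaps)
```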

Critical Discourse Analysis

This approach focuses on the ways in which language use reflects and reinforces power relations, social hierarchies, and ideologies. It is often used to analyze media texts or political speeches, with the aim of uncovering the hidden meanings and assumptions that are embedded in these texts.

Discursive Psychology

This type of discourse analysis focuses on the ways in which language use is related to psychological processes such as identity construction and attribution of motives. It is often used to study narratives or personal accounts, with the aim of understanding how individuals make sense of their experiences.

Multimodal Discourse Analysis

This approach focuses on analyzing not only language use, but also other modes of communication, such as images, gestures, and layout. It is often used to study digital or visual media, with the aim of understanding how different modes of communication work together to create meaning.

Corpus-based Discourse Analysis

This type of discourse analysis uses large collections of texts, or corpora, to analyze patterns of language use across different genres or contexts. It is often used to study language use in specific domains, such as academic writing or legal discourse.
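Two basic corpus tools are word frequency lists and keyword-in-context (KWIC) concordances, which show every occurrence of a word with its surrounding words. The Python sketch below implements both from scratch; the function names and the two-sentence "corpus" are invented for illustration, and dedicated corpus software offers far more (collocation statistics, part-of-speech search, and so on).

```python
import re
from collections import Counter

def tokenize(text: str) -> list[str]:
    """Lower-case word tokens; apostrophes are kept inside words."""
    return re.findall(r"[a-z']+", text.lower())

def kwic(tokens: list[str], keyword: str, window: int = 3) -> list[str]:
    """Keyword in context: each hit with `window` words on either side."""
    lines = []
    for i, tok in enumerate(tokens):
        if tok == keyword:
            left = " ".join(tokens[max(0, i - window):i])
            right = " ".join(tokens[i + 1:i + 1 + window])
            lines.append(f"{left:>30} [{tok}] {right}")
    return lines

# Invented two-document "corpus" standing in for a real legal corpus.
corpus = [
    "The court held that the contract was void.",
    "The parties agreed that the contract would be renewed.",
]
freq = Counter(tok for doc in corpus for tok in tokenize(doc))
print(freq.most_common(3))
for doc in corpus:
    for line in kwic(tokenize(doc), "contract"):
        print(line)
```

Concordance lines are run per document here so that the context window never spans two unrelated texts.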

Descriptive Discourse

This type of discourse analysis aims to describe the features and characteristics of language use, without making any value judgments or interpretations. It is often used in linguistic studies to describe grammatical structures or phonetic features of language.

Narrative Discourse

This approach focuses on analyzing the structure and content of stories or narratives, with the aim of understanding how they are constructed and how they shape our understanding of the world. It is often used to study personal narratives or cultural myths.

Expository Discourse

This type of discourse analysis is used to study texts that explain or describe a concept, process, or idea. It aims to understand how information is organized and presented in such texts and how it influences the reader’s understanding of the topic.

Argumentative Discourse

This approach focuses on analyzing texts that present an argument or attempt to persuade the reader or listener. It aims to understand how the argument is constructed, what strategies are used to persuade, and how the audience is likely to respond to the argument.

Guide to Conducting Discourse Analysis

Here is a step-by-step guide for conducting discourse analysis:

- Define the research question: What are you trying to understand about language use in a particular context? What key concepts or themes do you want to explore?

- Select the data: Decide on the type of data that you will analyze, such as written texts, spoken conversations, or media content. Consider the source of the data, such as news articles, interviews, or social media posts, and how this might affect your analysis.

- Transcribe or collect the data: If you are analyzing spoken language, you will need to transcribe the data into written form. If you are using written texts, make sure that you have access to the full text and that it is in a format that can be easily analyzed.

- Read and re-read the data: Read through the data carefully, paying attention to key themes, patterns, and discursive features. Take notes on what stands out to you and make preliminary observations about the language use.

- Develop a coding scheme: Define a set of categories that will let you classify and organize different types of language use. These might include metaphors, narratives, or persuasive strategies, depending on your research question.

- Code the data: Use your coding scheme to analyze the data, coding different sections of text or spoken language according to the categories that you have developed. This can be a time-consuming process, so consider using software tools to assist with coding and analysis.

- Analyze the data: Once you have coded the data, analyze it to identify patterns and themes that emerge. Look for similarities and differences across different parts of the data, and consider how different categories of language use are related to your research question.

- Interpret the findings: Draw conclusions from your analysis and interpret the findings in relation to your research question. Consider how the language use in your data sheds light on broader cultural or social issues, and what implications it might have for understanding language use in other contexts.

- Write up the results: Write up your findings in a clear and concise way, using examples from the data to support your arguments. Consider how your research contributes to the broader field of discourse analysis and what implications it might have for future research.
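The coding, analysis, and write-up steps above can be sketched in a few lines of Python. This is a hypothetical codebook, not a recommended one: the category names and indicator patterns in `CODEBOOK` are assumptions, and pattern matching only proposes codes that a researcher would confirm by reading (for example, "may" also matches the month). Qualitative analysis software does this work at scale; the sketch only shows the underlying idea.

```python
import re
from collections import defaultdict

# Hypothetical coding scheme: category -> regular expression of indicator words.
CODEBOOK = {
    "metaphor_war": re.compile(r"\b(fight|battle|war|enemy)\b", re.I),
    "hedging": re.compile(r"\b(might|perhaps|possibly|may)\b", re.I),
    "appeal_to_authority": re.compile(r"\b(experts?|scientists?|studies show)\b", re.I),
}

def code_segments(segments: list[str]) -> dict[str, list[int]]:
    """Map each category to the indices of the segments it was applied to."""
    coded = defaultdict(list)
    for i, seg in enumerate(segments):
        for category, pattern in CODEBOOK.items():
            if pattern.search(seg):
                coded[category].append(i)
    return dict(coded)

# Invented segments, for illustration only.
segments = [
    "We must fight this disease together.",
    "Experts say the treatment might help.",
    "The battle is not over.",
]
coded = code_segments(segments)
for category, idx in coded.items():
    # Frequency plus a quotable example for the write-up stage.
    print(f"{category}: {len(idx)} segment(s); e.g. {segments[idx[0]]!r}")
```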

Applications of Discourse Analysis

Here are some of the key areas where discourse analysis is commonly used:

- Political discourse: Discourse analysis can be used to analyze political speeches, debates, and media coverage of political events. By examining the language used in these contexts, researchers can gain insight into the political ideologies, values, and agendas that underpin different political positions.

- Media analysis: Discourse analysis is frequently used to analyze media content, including news reports, television shows, and social media posts. By examining the language used in media content, researchers can understand how media narratives are constructed and how they influence public opinion.

- Education: Discourse analysis can be used to examine classroom discourse, student-teacher interactions, and educational policies. By analyzing the language used in these contexts, researchers can gain insight into the social and cultural factors that shape educational outcomes.

- Healthcare: Discourse analysis is used in healthcare to examine the language used by healthcare professionals and patients in medical consultations. This can help to identify communication barriers, cultural differences, and other factors that may impact the quality of healthcare.

- Marketing and advertising: Discourse analysis can be used to analyze marketing and advertising messages, including the language used in product descriptions, slogans, and commercials. By examining these messages, researchers can gain insight into the cultural values and beliefs that underpin consumer behavior.

When to use Discourse Analysis

Discourse analysis is a valuable research methodology that can be used in a variety of contexts. Here are some situations where discourse analysis may be particularly useful:

- When studying language use in a particular context: Discourse analysis can be used to examine how language is used in a specific context, such as political speeches, media coverage, or healthcare interactions. By analyzing language use in these contexts, researchers can gain insight into the social and cultural factors that shape communication.

- When exploring the meaning of language: Discourse analysis can be used to examine how language is used to construct meaning and shape social reality. This can be particularly useful in fields such as sociology, anthropology, and cultural studies.

- When examining power relations: Discourse analysis can be used to examine how language is used to reinforce or challenge power relations in society. By analyzing language use in contexts such as political discourse, media coverage, or workplace interactions, researchers can gain insight into how power is negotiated and maintained.

- When conducting qualitative research: Discourse analysis can be used as a qualitative research method, allowing researchers to explore complex social phenomena in depth. By analyzing language use in a particular context, researchers can gain rich and nuanced insights into the social and cultural factors that shape communication.

Examples of Discourse Analysis

Here are some examples of discourse analysis in action:

- A study of media coverage of climate change: This study analyzed media coverage of climate change to examine how language was used to construct the issue. The researchers found that media coverage tended to frame climate change as a matter of scientific debate rather than a pressing environmental issue, thereby undermining public support for action on climate change.

- A study of political speeches: This study analyzed political speeches to examine how language was used to construct political identity. The researchers found that politicians used language strategically to construct themselves as trustworthy and competent leaders, while painting their opponents as untrustworthy and incompetent.

- A study of medical consultations: This study analyzed medical consultations to examine how language was used to negotiate power and authority between doctors and patients. The researchers found that doctors used language to assert their authority and control over medical decisions, while patients used language to negotiate their own preferences and concerns.

- A study of workplace interactions: This study analyzed workplace interactions to examine how language was used to construct social identity and maintain power relations. The researchers found that language was used to construct a hierarchy of power and status within the workplace, with those in positions of authority using language to assert their dominance over subordinates.

Purpose of Discourse Analysis

The purpose of discourse analysis is to examine the ways in which language is used to construct social meaning, relationships, and power relations. By analyzing language use in a systematic and rigorous way, discourse analysis can provide valuable insights into the social and cultural factors that shape communication and interaction.

The specific purposes of discourse analysis may vary depending on the research context, but some common goals include:

- To understand how language constructs social reality: Discourse analysis can help researchers understand how language is used to construct meaning and shape social reality. By analyzing language use in a particular context, researchers can gain insight into the cultural and social factors that shape communication.

- To identify power relations: Discourse analysis can be used to examine how language use reinforces or challenges power relations in society. By analyzing language use in contexts such as political discourse, media coverage, or workplace interactions, researchers can gain insight into how power is negotiated and maintained.

- To explore social and cultural norms: Discourse analysis can help researchers understand how social and cultural norms are constructed and maintained through language use. By analyzing language use in different contexts, researchers can gain insight into how social and cultural norms are reproduced and challenged.

- To provide insights for social change: Discourse analysis can provide insights that can be used to promote social change. By identifying problematic language use or power imbalances, researchers can provide insights that can be used to challenge social norms and promote more equitable and inclusive communication.

Characteristics of Discourse Analysis

Here are some key characteristics of discourse analysis:

- Focus on language use: Discourse analysis is centered on language use and how it constructs social meaning, relationships, and power relations.

- Multidisciplinary approach: Discourse analysis draws on theories and methodologies from a range of disciplines, including linguistics, anthropology, sociology, and psychology.

- Systematic and rigorous methodology: Discourse analysis employs a systematic and rigorous methodology, often involving transcription and coding of language data, in order to identify patterns and themes in language use.

- Contextual analysis: Discourse analysis emphasizes the importance of context in shaping language use, and takes into account the social and cultural factors that shape communication.

- Focus on power relations: Discourse analysis often examines power relations and how language use reinforces or challenges power imbalances in society.

- Interpretive approach: Discourse analysis is an interpretive approach, meaning that it seeks to understand the meaning and significance of language use from the perspective of the participants in a particular discourse.

- Emphasis on reflexivity: Discourse analysis emphasizes the importance of reflexivity, or self-awareness, in the research process. Researchers are encouraged to reflect on their own positionality and how it may shape their interpretation of language use.

Advantages of Discourse Analysis

Discourse analysis has several advantages as a methodological approach. Here are some of the main advantages:

- Provides a detailed understanding of language use: Discourse analysis allows for a detailed and nuanced understanding of language use in specific social contexts. It enables researchers to identify patterns and themes in language use, and to understand how language constructs social reality.

- Emphasizes the importance of context: Discourse analysis emphasizes the importance of context in shaping language use. By taking into account the social and cultural factors that shape communication, discourse analysis can provide a fuller understanding of language use than approaches that treat language in isolation from its context.

- Allows for an examination of power relations: Discourse analysis enables researchers to examine power relations and how language use reinforces or challenges power imbalances in society. By identifying problematic language use, discourse analysis can contribute to efforts to promote social justice and equality.

- Provides insights for social change: By identifying problematic language use or power imbalances, discourse analysis can generate insights that help challenge social norms and promote more equitable and inclusive communication.

- Multidisciplinary approach: Discourse analysis draws on theories and methodologies from a range of disciplines, including linguistics, anthropology, sociology, and psychology. This multidisciplinary approach allows for a more holistic understanding of language use in social contexts.

Limitations of Discourse Analysis

Some limitations of discourse analysis are as follows:

- Time-consuming and resource-intensive: Collecting and transcribing language data takes considerable time, and analyzing the data requires careful attention to detail and a significant investment of time and resources.

- Limited generalizability: Discourse analysis is often focused on a particular social context or community, and therefore the findings may not be easily generalized to other contexts or populations. This means that the insights gained from discourse analysis may have limited applicability beyond the specific context being studied.

- Interpretive nature: Discourse analysis is an interpretive approach, meaning that it relies on the interpretation of the researcher to identify patterns and themes in language use. This subjectivity can be a limitation, as different researchers may interpret language data differently.

- Limited quantitative analysis: Discourse analysis tends to focus on qualitative analysis of language data, which can limit the ability to draw statistical conclusions or make quantitative comparisons across different language uses or contexts.

- Ethical considerations: Discourse analysis may involve the collection and analysis of sensitive language data, such as language related to trauma or marginalization. Researchers must carefully consider the ethical implications of collecting and analyzing this type of data and ensure that the privacy and confidentiality of participants are protected.

About the author

Muhammad Hassan

Researcher, Academic Writer, Web developer


AI Through Ethical Lenses: A Discourse Analysis of Guidelines for AI in Healthcare

- Original Research/Scholarship

- Open access

- Published: 04 June 2024

- Volume 30, article number 24 (2024)


- Laura Arbelaez Ossa (ORCID: orcid.org/0000-0002-8303-8789), affiliation 1

- Stephen R. Milford (ORCID: orcid.org/0000-0002-7325-9940), affiliation 1

- Michael Rost (ORCID: orcid.org/0000-0001-6537-9793), affiliation 1

- Anja K. Leist (ORCID: orcid.org/0000-0002-5074-5209), affiliation 2

- David M. Shaw (ORCID: orcid.org/0000-0001-8180-6927), affiliations 1, 3

- Bernice S. Elger (ORCID: orcid.org/0000-0002-4249-7399), affiliations 1, 4

319 Accesses

Explore all metrics

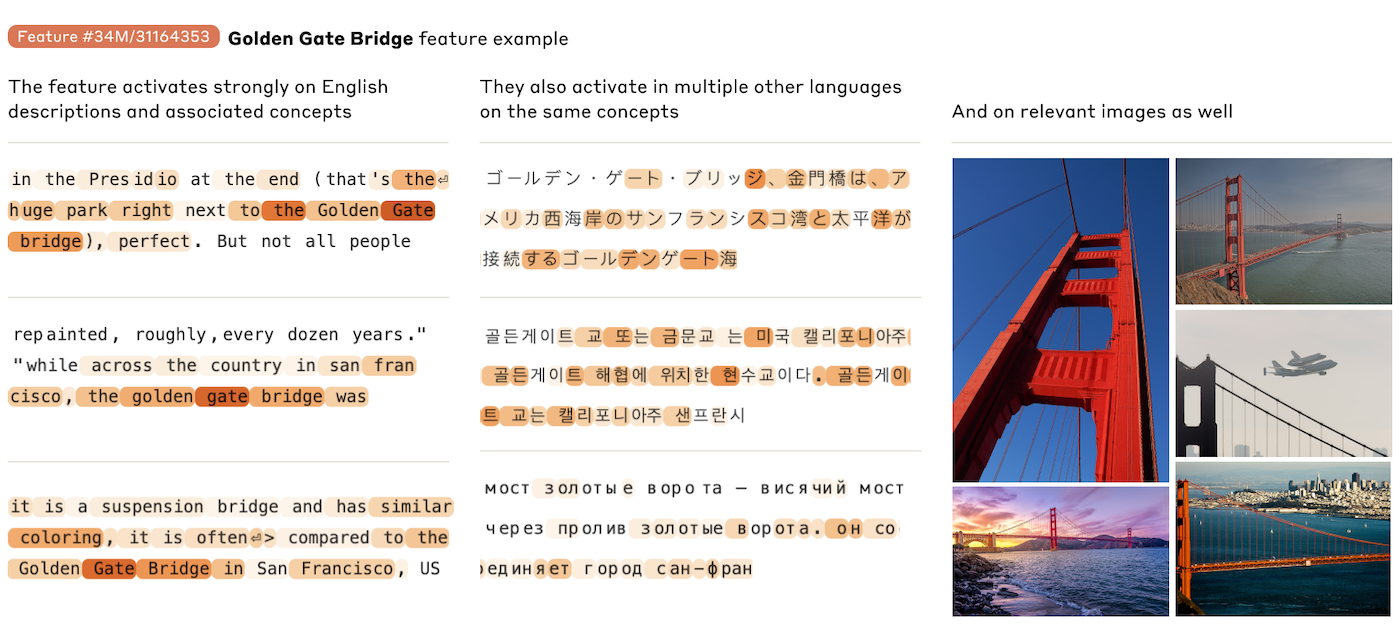

While the technologies that enable Artificial Intelligence (AI) continue to advance rapidly, there are increasing promises regarding AI’s beneficial outputs and growing concerns about the challenges of human–computer interaction in healthcare. To address these concerns, institutions have increasingly resorted to publishing AI guidelines for healthcare, aiming to align AI with ethical practices. However, guidelines, as a form of written language, can be analyzed to recognize the reciprocal links between their textual communication and underlying societal ideas. From this perspective, we conducted a discourse analysis to understand how these guidelines construct, articulate, and frame ethics for AI in healthcare. We included eight guidelines and identified three prevalent and interwoven discourses: (1) AI is unavoidable and desirable; (2) AI needs to be guided with (some forms of) principles; and (3) trust in AI is instrumental and primary. These discourses signal a spillover of technical ideals into AI ethics, such as over-optimism and resulting hyper-criticism. This research provides insights into the underlying ideas present in AI guidelines and into how guidelines influence the practice and alignment of AI with the ethical, legal, and societal values expected to shape AI in healthcare.


Introduction

The increasing number of artificial intelligence (AI) ethics guidelines reflects the growing recognition of AI’s potential benefits and risks. As AI technology advances, there is increasing enthusiasm for AI, especially machine learning (ML) techniques, because of their capacity to analyze already available health data for preventive, diagnostic, or treatment support (Leist et al., 2022). However, the assumption that AI applications might become more prevalent in society has raised concerns over the ethical implications of their use. Common questions include what is necessary to trust AI, to respect people's autonomy, and to avoid bias and discrimination (Floridi et al., 2018; Murphy et al., 2021). AI guidelines aim to steer our approach to AI for the benefit of society through principles, statements, rules, or recommendations. Accordingly, academic, (non)governmental, and other institutions worldwide have published guidelines to direct AI development and those working with it.

Reviews of generic AI guidelines (covering AI used across settings without a specific healthcare focus) have sought to map and examine the common themes and areas of focus they address (Bélisle-Pipon et al., 2022; Fjeld et al., 2020; Fukuda-Parr & Gibbons, 2021; Jobin et al., 2019; Ryan & Stahl, 2020). Concerns addressed by generic AI guidelines include privacy, bias, transparency, autonomy, explainability, promotion of well-being, and responsibility. These reviews provide a helpful overview of the state of AI ethics guidelines and of the critical issues and challenges in AI ethics. Although generic AI guidelines could apply across different disciplines, some guidelines specifically address the use of AI in healthcare. These guidelines strongly emphasize the ethical implications of using AI in medical decision-making and other healthcare applications. A prominent example is the World Health Organization (WHO) publication on "ethics and governance of artificial intelligence for health" (World Health Organization, 2021).

The field of AI in healthcare is still relatively new, and there is an ongoing debate about the best approaches to ensuring the ethical use of AI. Notably, the use of AI in healthcare raises specific ethical issues related to beneficence and respect for autonomy, as patients and communities require assurance that introducing AI will not jeopardize their rights. Beyond the challenges inherent to AI, decisions in healthcare frequently involve high-risk scenarios and highly sensitive data. Health is central to individual well-being, and doctors must support, safeguard, and advocate for patients. For example, shared decision-making between patients and their doctors, an essential pillar of medical ethics, could be affected by the introduction of AI, which could threaten patients' and doctors' autonomy if it does not account for their rights and preferences (Abbasgholizadeh Rahimi et al., 2022).

Guidelines, as a form of written language, can be analyzed to identify the links between textual communication and our societal ideas. Discourse (i.e., a group of ideas or patterned ways of thinking in textual form) not only reflects but reproduces our social realities with their dominant beliefs, power structures, and ideologies (Lupton, 1992). Discourse analysis (DA), as a qualitative methodology, can analyze the contextual structure surrounding communication, including the context in which it takes place and how it shapes a common sociocultural understanding (Fairclough, 2022; Lupton, 1992; Yazdannik et al., 2017). From that perspective, the discourse in ethical guidelines for AI can significantly shape the healthcare community's understanding of and approach to ethics. The discourse of guidelines therefore requires particular attention because it is a powerful driver for discussing and (re-)orienting AI ethics. For example, guidelines can base their ideals on practical (e.g., efficiency), technical (e.g., performance), or ethical (e.g., beneficence) frameworks, thus helping to legitimize certain foundations, concepts, and notions in AI ethics for healthcare. In this way, AI guidelines can establish a common framework for thinking about and addressing ethical issues in AI. It is therefore essential to examine how ethics is understood in AI guidelines and to critically assess whether that understanding meets the moral requirements of healthcare settings.

This paper analyzes how guidelines construct, articulate, and frame AI ethics for healthcare. The aim is to look beyond what is written and critically interpret the guidelines' underlying ideologies (Cheek, 2004; Lupton, 1992; Yazdannik et al., 2017). We are therefore interested in how the guidelines shape AI ethics in healthcare, including whose perspectives are considered when determining ethical issues in AI, and in the implications for ethics, AI, and healthcare stakeholders.

Previous work has synthesized generic AI guidelines through thematic or content summaries (Fjeld et al., 2020; Jobin et al., 2019; Ryan & Stahl, 2020). Policy and social researchers have used Critical Discourse Analysis (CDA) to understand public health documents, although this methodology has yet to be applied to documents guiding AI. The usefulness of CDA has nonetheless been demonstrated in other domains, for example, in examining how health policy documents constructed the roles of chronically ill patients or how inclusion policies framed health inequalities (Tweed et al., 2022; Walton & Lazzaro-Salazar, 2016). Other researchers have used CDA to analyze the discourse surrounding AI in social media and the academic discussion of artificial general intelligence (Graham, 2022; Mao & Shi-Kupfer, 2021; Singler, 2020). Given the importance of written AI guidelines for understanding AI ethics in healthcare, we undertook a CDA of AI guidelines, which allows an in-depth interpretation of how AI ethics for healthcare is constructed, articulated, and framed. Our aim was thus to analyze the discourse in AI guidelines rather than to systematically map their content and themes.

Identifying Relevant Studies

First, given the absence of a unified database for AI healthcare guidelines, we reviewed all the documents inventoried by previous researchers for potential inclusion. Additionally, we reviewed database initiatives that track AI policies: Nesta’s (Footnote 1) “AI governance database”, Algorithm Watch’s (Footnote 2) “AI Ethics Guidelines Global Inventory”, OECD.AI’s (Footnote 3) “policy observatory”, and AI Ethics Lab’s (Footnote 4) “Toolbox: Dynamics of AI Principles”. We used a purposive sample to find documents written by influential institutions such as governments, intergovernmental organizations, and non-profit organizations. Second, we used Google Search as a general search engine because AI guidelines are not academic publications and thus fall under the "gray literature" category. The first author searched for and screened AI guidelines to select a final set.

Inclusion and Exclusion Criteria

For this review, we consider ‘AI guidelines’ to be documents that provide ethical guidance, including policies, guidelines, principles, or position papers introduced by governmental, inter-governmental, or professional organizations. Including this type of AI guideline allows us to analyze how influential institutions construct, articulate, and frame AI ethics in healthcare. To be included, guidelines had to provide normative guidance for AI in healthcare: principles, tenets, recommendations, propositions, or tangible steps for developing or implementing AI in healthcare.

We excluded documents that provided observations regarding advances in AI for a particular year. Additionally, we excluded “internal” company principles because of their limited intended audience, as they are primarily created for the respective institution. We also excluded documents focusing solely on one disease application or a specific medical specialty because these might not be generalizable to other healthcare contexts. We finalized the search in August 2022. The first author screened 179 document titles. We excluded 169 documents because they either did not qualify as guidelines or were outside the scope of this review (i.e., documents that were not about AI or were unrelated to healthcare). A summary of the reasons for exclusion is provided in Supplementary materials 2.

We departed from the analytical positivist approach of a systematic literature review (Footnote 5). DA is a diverse methodology for analyzing language in use and how discourse creates a shared understanding of a topic. DA goes beyond the content of words and interprets how a topic is constructed, represented, and reflected within its context (Fairclough, 2013, 2022). In particular, we used CDA because language expresses and shapes social and political relationships, and its analysis can uncover underlying ideologies or power dynamics.

We imported the guideline texts into qualitative data analysis software (MAXQDA) and analyzed them in three phases. First, the first author read the included guidelines in detail and extracted high-level information. During this data familiarization, the authors discussed preliminary ideas on trends in the guidelines and created a list of specific questions considered relevant to answering the main research question. In the second phase, the first author analyzed the guidelines by creating high-level analytical themes that organized the material into the following discourse strands: how do guidelines (1) discuss the ethical motivation to develop and implement AI and ethics (e.g., what is the justification and primary goal of the guidelines); (2) construct ethical AI (e.g., whether guidelines used principles); and (3) assign the roles of different stakeholders? Third, all authors tested and critically interrogated the analytical themes and the organization of the results. The authors reached a consensus about the structure and characteristics of the discourses. This process resulted in the description of three discourses.

See Fig. 1, the PRISMA flow diagram (Page et al., 2021).

Applying the selection criteria led to eight guidelines ultimately being included in this analysis (Supplementary materials 1). Most were published in 2021. Intergovernmental organizations published two documents. All other guidelines came from high-income countries (the United Kingdom, the United States of America, Canada, Singapore, and the United Arab Emirates) (Table 1). The length of the documents varies widely, with G1 being the longest (114 pages) and G5 the shortest (two pages). Guidelines G3, G5, G6, and G7 focus on (general) good practice or good AI. Guidelines G2, G4, and G8 are generally intended to guide AI in healthcare but do not specifically focus on ethical AI. Guideline G1 focuses on ethics and governance.

The guidelines address AI developers (G1, G8) but also describe them as innovators (G3, G6) and manufacturers (G4, G7, G8). Other addressees include policymakers (G1, G2, G5), healthcare professionals (G1, G4), and healthcare institutions (G1, G4). "AI actors" describes all stakeholders in the AI system lifecycle (G2.1 p. 7). Guideline G8 uses an umbrella group called 'implementers' that could include healthcare professionals and institutions. G8 acknowledges that the "groups are not mutually exclusive" (G8 p. 8), which creates some uncertainty in interpreting the guidelines for individual stakeholders. The guidelines sometimes discuss AI recommendations without specifying a responsible party. For example, G4 mentions the need for verifiable and explainable AI without indicating who should ensure this (G4 p. 8). Guideline G5 mentions a human in the loop without specifying who that human should be.

Lack of a Standard Definition of AI

Most guidelines focus their discussion on AI (G1, G2, G4, G6, G7). Four guidelines make a distinction: G3 describes “digital and data-driven technologies” that include AI, G5 focuses only on machine learning (ML), and G6–G7 combine both as AI/ML-enabled medical devices (Supplementary materials 3 in Table 1).

The guidelines lack a standard definition of AI, which leads to interpretations ranging from data-driven programs (such as prediction or diagnosis) to a potential program that resembles a more general state of intelligence (human-like cognition). When the object of regulation is still a topic of debate, guidelines may end up regulating entirely different or not-yet-existing systems, including Artificial General Intelligence. Consequently, these guidelines could evoke an understanding of AI driven by the potential human-like capacities of the systems rather than by a more measurable technical definition. Informing the definition of AI with such futuristic perceptions may contribute to the mystification of AI and increase fears regarding its application. Fears can result in learned helplessness, in which people disengage from AI, withdraw from discussions, and are relegated to passive acceptance (Lindebaum et al., 2020).

Discourse 1: AI is Unavoidable and Desirable

All guidelines agree that AI will be an agent of change in medicine. Discussions of AI are fundamentally based on its potential, making these guidelines forward-looking, prospective, and, to some extent, speculative. Most guidelines describe both the benefits and the risks of AI techniques (G1, G2, G3, G5, G6, G7, G8). For example, G2 states that AI in healthcare has "profound potential, but real risks" (G2 p. 7). Guideline G5 mentions that AI and ML “have the potential to transform health care […], but they also present unique considerations due to their complexity and the iterative and data-driven nature” (G5 p. 1). In doing so, guidelines frequently juxtapose opportunities and threats while justifying the need for ethical considerations to avoid harm. Guidelines therefore tend to describe their primary motivation as avoiding harm while harnessing the promised potential of AI technologies (Supplementary materials 3 in Table 2). These statements are pragmatic formulations derived from the (unspoken) assumption that AI will be implemented and that healthcare needs to make the best of it. However, this type of discourse entails a matrix of beliefs: AI is an unavoidable development and undeniably useful.

Guidelines are not sufficiently cautious about techno-cultural ideals and the hype surrounding technological developments. The pressure to adopt innovation out of enthusiasm or because of economic or technical forces could undermine the debate about demonstrating that AI improves healthcare quality (Dixon-Woods et al., 2011). Guideline G1 (and, to some extent, G2) questions whether AI should be used at all and discusses the risk of overestimating the benefits of AI or dismissing its risks (G1 p. 31–33). Yet none of the guidelines was sufficiently critical of the base assumption that AI is an agent of benefit and progress in medicine, even though there is no evidence yet of this change because most AI systems are not currently used in everyday clinical practice. For example, G1 “recognizes that AI holds great promise for the practice of public health and medicine” (G1 p. xi). Guideline G6 states that “the use of AI/ML […] presents a significant potential benefit to patients and health systems in a wide range of clinical applications […]” (G6 p. 4). In that sense, there is an unspoken but present assumption that AI is mainly (at least potentially) beneficial and that, if used correctly, AI will change life and medicine. In the guidelines, the desire to harness or guide the potential of AI indicates that this innovation is at least an acceptable reality or a potentially desirable development. This discourse might echo sentiments from the technology industry, where innovation is the ultimate goal and something new might be considered better simply because it is new. However, a strong pro-innovation stance could lead to risk-taking or scientifically unfounded experimentation for the sake of innovation and change. Slota et al. rightly point out this challenge: they question whether innovation is positive per se, argue that it cannot be accepted unquestioningly, and suggest that innovation must meet prerequisites, such as reliability measurements, to be considered positive (Slota et al., 2021).

When guidelines base their discussion primarily on AI’s potential, AI may acquire a special status compared with other healthcare innovations, especially because AI’s potential becomes a justification for its support and development. For example, drug development guidelines ask manufacturers to base benefit/risk assessments on the evidence for a drug’s safety and effectiveness in improving, changing, or removing diseases. These guidelines remain cautious even regarding the use of unproven interventions (for which no evidence from clinical trials is available), emphasizing that the potential benefits must be substantial and that no other alternatives should be available (EMA, 2018a; FDA, 2019). Giving AI special treatment out of a desire to realize its potential risks encouraging technology companies to leverage their expertise and unduly influence governmental decisions regarding AI regulation and practice. For example, in contact tracing technology for Covid-19 (although not always AI-enabled), government concerns over data privacy allowed technology companies to gain influence because of their expertise in data privacy, inadvertently permitting them to shape how this technology was developed (Sharon, 2021). As technology develops, many small decisions need to be taken, which, combined, can significantly affect how a policy is implemented and interpreted in practice. In AI guidelines, industry representatives are often involved and may have disproportionate influence over their development compared with other directly affected stakeholders, such as patients (Bélisle-Pipon et al., 2022).

Discourse 2: The Necessity of Principles to Guide AI

Despite using different terms, having different aims, and addressing different stakeholder groups, the guidelines agree that AI needs to be guided by principles. However, there is wide variation in the usage and conceptualization of these principles, and most documents do not clarify the theoretical basis for including them. Only G1 provides an account of its definition of principles, which references bioethics and human rights as the theoretical framework. G6–G7 cross-reference the definition and construction used in G1. There is no common assumption about the conceptual framework behind these principles, leaving their interpretation and operationalization to the reader's discretion (Supplementary materials 3 in Table 3).

On the positive side, the guidelines aim to help AI be developed within the acceptable limits of society and human ideals, including safety protocols. However, the guidelines treat principles as a viable, feasible, and acceptable solution for guiding AI. This cultural understanding could have originated in the influential science fiction of Isaac Asimov, in which robots must follow hardwired social and moral norms (do no harm to humans, obey humans, and protect themselves) (Asimov, 1950; Jung, 2018). Asimov’s laws were the author’s answer to the search for protection against the potentially malicious consequences of technology, though he also acknowledged in his work the potential for conflict between these laws. The use of principles in the guidelines comes from a similar perspective, in which there are concerns about the potential negative consequences of AI.

Guidelines fluctuate between discussing important principles and discussing how to apply them and develop acceptable AI. For example, G6–G7 discuss aspects of AI such as suitability and robustness while adding ethical aspects such as inclusiveness, fairness, or risks of health discrimination. Guideline G1 starts with ethical principles and goes on to add recommendations on AI’s development, while G8 includes fairness in its guiding principles alongside recommendations on data representativeness. Guideline G3 asks manufacturers to ensure that “the product is easy to use and accessible to all users” and to “ensure that the product is clinically safe to use”, both of which are operationalizations (G3 p. 7, 9). The same guideline (G3) also asks manufacturers to behave ethically and to “be fair, transparent and accountable about what data is being used” (G3 p. 12). Although more technical, several guidelines (G5, G6, G7, G8) do not provide measurable estimates of AI’s behavior or of what is acceptable. For example, G6 states that “to promote technical robustness, manufacturers […] should test performance by comparing it to existing benchmarks, ensuring that the results are reproducible […] and reported using standard performance metrics” (G6 p. 13). However, there is no mention of which values of the performance metrics would be acceptable or how to select acceptable benchmarks.

Most guidelines emphasize "non-maleficence" (G1, G2, G3, G4, G6, G7, G8). However, the emphasis on producing no harm could create a paradoxical interpretation in which ‘no harm’ becomes the aim. For example, G1 discusses its principle to “promote human well-being, safety and public interest” by stating that “AI technologies should not harm people. They should satisfy regulatory requirements for safety, accuracy, and efficacy […] to assess whether they have any detrimental impact […]. Preventing harm requires that use of AI technologies does not result in any mental or physical harm” (G1 p. 26). These prevention-framed messages emphasize behavior that avoids possible negative consequences, but they do not highlight what benefits could justify the use of AI. Moreover, avoiding all harm might be an unrealistic expectation for AI. For example, a surgical AI robot needs to produce an injury (a surgical incision) to perform a procedure. If the principles aim to avoid all physical harm, would a surgical AI be acceptable? The guidelines' discourse leaves this unclear. In addition, patients' risk acceptance is not a dichotomous ‘all or nothing’, as most patients understand risk as a spectrum of likelihood. For example, patients with psoriasis were willing to accept a risk of serious infection of between 20% and 59% as a side effect of their treatment, depending on their disease severity (Kauf et al., 2015). There are nuances in what healthcare stakeholders find acceptable, and creating principles, although appealing, might not meet healthcare needs. Hutler et al. use a similar example of a surgical robot to argue that it is not as simple as “training” robots to avoid harm, and that designing robots involves challenges in conceptualizing what is harmful and what should be morally allowed (Hutler et al., 2023).

Nearly all guidelines consider transparency or explainability essential for ethical, good, or responsible AI (G1, G2, G4, G5, G6, G7, G8). However, explainability is a debated concept, with no consensus on its importance or meaning (Mittelstadt et al., 2019). Guidelines often see transparency as an enabler of ethical practice because it renders AI’s processes visible and open to accountability (although it is unclear whether AI itself or the people working with it are to be held accountable). However, there is no unified definition of, or agreed threshold for, what makes AI transparent and when. Treating explainable AI as equivalent to ethical AI might become a fig leaf with which AI developers cover methodological shortfalls by giving end-users a false sense of understanding (Starke et al., 2022). In contrast, when these principles aim to provide a basis for technical assurance, they should be described in technically feasible and operationalizable terms. In their current form, the guidelines' principles seem best followed as a thought experiment that re-examines the expectations for AI rather than as a static set of rules for AI’s development or ethical behavior.

Discourse 3: The Primacy of Trust

Guidelines frame trust, as in ‘trustworthy AI’, as the answer to overcoming public doubt. While well-performing AI might build trust, when the discussion centers on trustworthy AI, there is a shift from performance expectations (quality) to trust. Statements within the guidelines in which trust is central give the impression that trust matters more than AI's usability, feasibility, or performance. For example, G1 acknowledges that "trust is key to facilitating the adoption of AI in medicine" (G1 p. 48); G2 is devoted entirely to trustworthy AI; and G6–G7 repeatedly discuss trustworthy innovation. Guideline G3 mentions that achieving algorithm transparency can “build trust in users and enable better adoption and uptake” (G3 p. 16). These statements may implicitly treat trustworthiness as a quality seal for good AI, although trust and goodness are slippery concepts and do not equate to one another. For example, one guideline mentions that “discussions are crucial to guide the development and use of trustworthy AI for the wider good” (G2 p. 6). Guideline G3 states, “we must approach the adoption of these promising technologies responsibly and in a way that is conducive to public trust” (G3 p. 5). Some guidelines consider a lack of trust to impede the use of data. For example, one guideline mentions that “lack of trust […], in how data are used and protected is a major impediment to data use, and sharing” (G2 p. 16). Others present trust as a precondition for the development of AI itself, for example, stating that “whether AI can advance […] depends on numerous factors beyond the state of AI science and on the trust of providers, patients, and health-care professionals” (G1 p. 15). These arguments frame trust as a commodity (measured, managed, or acquired) for the benefit of innovation or technical interests instead of focusing on the preconditions for acceptable AI, such as technical robustness, proven effectiveness, and protection frameworks in case of errors (Krüger & Wilson, 2022).

When guidelines describe trust as a means to further innovation, they may fall into the role of advocates for technology, especially when they motivate or suggest that trust in AI is crucial. For example, one guideline “recognizes that ethics guidance […] is critical to build trust in these technologies to guard against negative or erosive effects and to avoid the proliferation of contradictory guidelines” (G1 p. 3). Guideline G8 states that “with the increasing use of healthcare AI […], the intent of the [guideline] is to improve clinical and public trust in the technology by providing a set of recommendations to encourage the safe development and implementation […]” (G8 p. 5). This discourse indicates that (1) public trust in AI matters and (2) there might be concerns that the public does not trust AI. The importance of healthcare stakeholders, especially patients, is reduced to the expectation of acquiring their trust and to their position of vulnerability in healthcare.

Patients’ roles are discussed in relation to data protection and safety assurance, and patients appear as subjects who must trust AI. The guidelines make only cursory mention of “patient-centricity” and of the importance of patients in AI design. G1 mentions the importance of patients and their role in ensuring “human warranty”. G3 mentions that patients need assurance, and G4 lists patients as part of its potential audience. Although these guidelines touch on other situations requiring patients' input, they do not give patients an active voice. Most guidelines focus on informing patients about AI (G1, G3, G6, G7, G8) and about the use of their data. Guidelines treat patients as subjects worthy of protection because of their vulnerability in healthcare but limit them to the role of passive bystanders (Table 2), tending to treat them as mere data subjects. While G1, G5, and G8 mention a citizen participation mechanism, welcoming feedback through a public docket or direct contact, this feedback is only collected after the first iteration of the guidelines. None of the guidelines is written specifically for patients, or by or in collaboration with them, even though the guidelines advocate including patients in AI design. In generic AI ethics guidelines, researchers observed that a lack of stakeholder engagement is prevalent, with fewer than 6% of guidelines including citizen participation (Bélisle-Pipon et al., 2022). Most guidelines do not mention allowing patients to decide whether or when to use AI. Uniquely among the guidelines, G8 refers to patients’ ability to decide whether to continue using AI or to receive care from a clinician instead (G8 p. 33). By contrast, G3 only allows people “to opt out of their confidential patient information being used for purposes beyond their individual care and treatment” (G3 p. 13).

Our analysis of guidelines for AI in healthcare identified the lack of a standard definition of AI and three main discourses: (1) AI is a desirable and unavoidable development, (2) principles are the solution to guiding AI, and (3) trust has a central role. Of particular importance for the intended audience of these documents (mainly software developers, but also innovators and manufacturers) is that the discourses were largely concerned with AI applications that might become available at some undefined point in the future. The guidelines' discourses cannot be taken in isolation because, to some extent, the documents reference and influence one another. For example, G1 references the definitions used in G2, and G6–G7 reference the principles in G1. Some ideas may therefore be reproduced across documents without exclusively representing the vision of the publishing institution. Even allowing for this, the discourses taken together often seem to be shaped by broader societal discourses, such as the technology industry's optimistic, innovation-driven ideals. In a review of techno-optimism, Danaher concludes that although common in industry and policy, strong forms of techno-optimism may be unwarranted without further analysis and justification (Danaher, 2022). Nevertheless, an optimistic assessment of AI's qualities and faculties is well established in other policy documents on generic AI applications. In a discourse analysis published after this paper was completed, researchers reviewed policies from China, the United States, France, and Germany that likewise established AI as inevitable and framed technology and societal good as interdependent, creating a powerful rhetoric “that sheds pivotal attention and necessity to AI, lifting it into a sublime aura of a savior” (Bareis & Katzenbach, 2022). In the broader European policy context, albeit also not specific to healthcare, researchers found that AI is represented as a “transformational force, either with redeeming of ‘salvific’ qualities drawing from techno-solutionist discourse, or through mystified lens with allusion to dystopian narratives” (Gonzalez Torres et al., 2023). Our results show that similar discourses are built into the AI guidelines for healthcare.

While the experts and institutions contributing to the guidelines have made a commendable effort to keep pace with AI innovation, the guidelines are clearly a work in progress. In particular, the discourses show a tension between a pro-growth stance (AI as medical progress) and the need for caution (guidance, principles, trust, and ethics). For example, technical performance metrics, such as achieving the highest accuracy in prediction or classification, can conflict with ethical performance, which aims to avoid decisions based on sensitive attributes or their proxies. This problem already arises with non-AI clinical decision algorithms, where race has been (wrongly) used to adjust risk assessments, for example of kidney function (Vyas et al., 2020). In the current AI discussion, it is unclear how to reconcile the two views, or whether we can or should. Commitments to ensuring that AI is fair or respects human dignity might not be specific enough to guide action or to be operationalized. Conversely, focusing solely on technical measurements may fail to meet ethical requirements. Cybersecurity and data protection are often conflated with respect for autonomy or non-maleficence, potentially oversimplifying the interpretation and applicability of these ethical values. Ethically, respect for autonomy is associated with patients' right to decide if, when, and how to receive health care. Operationalizing respect for autonomy would therefore require discussing patients’ consent to the use of AI, including their preferences, and not only consent to data use. To an extent, AI ethics might fail to uphold its boundaries, especially against the techno-optimism and techno-solutionism driving AI.

Most guidelines do not address the sociotechnical context of those involved in AI. The most common addressees (developers, innovators, and manufacturers) might need a more comprehensive grounding in ethical concepts during their training, or support afterward. Indeed, some ethical statements in these guidelines are meaningless without proper ethical acculturation; ethics education in computer science degrees in Europe, for example, is often a standalone subject with limited hours (Stavrakakis et al., 2022). The discourse often addresses stakeholders' responsibilities (using terms such as ‘should’ or ‘shall’) but engages little with defining rights. What, for example, are the rights of end-users? These rights could be implicit, but if the aim is to promote the active engagement of non-technology stakeholders in the ethical development of AI, those stakeholders should be made aware of their rights and educated about their options.

Overall, we found that AI guidelines switch between technical and ethical expectations, concepts, and notions. Guidance in other areas is more precise in distinguishing its aim and intended use. For example, guidance for medical devices for cervical cancer covers quality management, standards, and operational considerations (WHO, 2020). Likewise, good manufacturing practices describe the minimum standards that pharmaceutical manufacturers must meet in their production processes (EMA, 2018b; European Commission, 2003; WHO, 2014). Quality-by-design is an approach to ensuring the quality of medicines by “employing statistical, analytical and risk-management methodology in the design, development, and manufacturing of medicines” (EMA, 2018c). Finally, Good Clinical Research Practice (GCP) principles are descriptive and focus on making research scientifically sound and justifiable (WHO, 2005). This lack of precision in AI guidelines could be one reason for the backlash against using ethics as a framework to inform them. Some academics have criticized AI ethics as toothless, useless, or vague (Fukuda-Parr & Gibbons, 2021; Héder, 2020; Heilinger, 2022; Munn, 2022). Critics have argued that AI ethics guidelines do not offer robust strategies to protect human rights and fail to emphasize accountability, participation, and remedy as protection mechanisms for people (Fukuda-Parr & Gibbons, 2021). Others have criticized AI ethics in its current form for the difficulty of implementing moral ideals in technological practice and the lack of consensus on ethical principles for AI (Munn, 2022).

The criticism of AI ethics might stem from a misconception of the role of ethics and of the way guidelines are constructed, articulated, and framed for healthcare. Conceiving of a guideline as a single document that covers all AI, aims to guide in all scenarios, and tries to address all stakeholders is over-ambitious. Unlike guidelines in other medical areas, which set out standards and operational requirements, principles for AI consist of broad values such as autonomy, transparency, non-maleficence, fairness, trust, and responsibility. As a result, AI’s approach to ethics tends to remain abstract, hindering the value of AI ethics and its potential application (Zhou & Chen, 2022). For example, where pharmaceutical development relies on quality-by-design, AI guidance relies on ethics-by-design. Ethics-by-design aims to make people consider ethical concerns and requirements such as respect for human agency, privacy and data governance, transparency, fairness, and individual, social, and environmental well-being (European Commission, 2021). However, ethics-by-design is not as operable as quality-by-design. When the goal is to operationalize ethics, AI guidelines might lack the qualitative and quantitative criteria needed to validate when and how the proposed principles are achieved and respected (Zhou & Chen, 2022). This limits the contribution of AI ethics and potentially legitimizes content-thin ethics that are easy to follow, or at least appear to be. In that sense, the criticism of ethical guidelines does not directly signal a failure of ethics but a potential overreach beyond its theoretical boundaries and aims. In the worst case, these guidelines can delay effective legislation. Guidelines can be used for ethics-washing, in which it becomes easier to appear ethical than to act ethically, especially if guidelines rely on forms of self-regulation with no legal consequences for actions, or if their content is abstract or general (Wagner, 2018). AI actors could use superficial recommendations as a red herring, resulting in guidelines that are widely ignored or only superficially followed because choices carry no operational consequences.

Limitations

To our knowledge, this is the first comprehensive review of healthcare AI guidelines (from governments or institutions) from an ethical perspective carried out by a multidisciplinary team. Although our team spans various disciplines (bioethics, philosophy, medicine, public health, theology, and psychology), our backgrounds have certainly informed our research and influenced our analysis. To address this, we reflected on our positionality and analyzed the guidelines in a nonlinear manner that forced us to contest our assumptions continuously. Given the continuous development of AI guidelines, the vast scope of AI, and our available resources, we note several limitations. We did not aim to conduct a systematic review but to critically examine widely available and influential guidelines worldwide. Consequently, some relevant documents might have been excluded because they are hard to locate online or unavailable in the public domain. Limiting the analysis to English-language documents entailed some linguistic exclusions and may limit broad geographical interpretation. The search ended in the first half of 2022, which might be too early given that most of the included guidelines were published from 2021 onwards. For example, the WHO outlined considerations for regulating artificial intelligence for health in November 2023, indicating that other guidelines may have become available since this paper was completed in February 2023. At least two research teams have recently published discourse analyses of AI policies, albeit not healthcare-specific ones (Bareis & Katzenbach, 2022; Gonzalez Torres et al., 2023). Searching gray literature is challenging and could bias inclusion toward documents that contain key search terms in their titles. We could not include guidelines from Latin America, Central Asia, or Africa, as none of the available guidelines from those regions fulfilled the inclusion criteria (domain-specific guidelines for healthcare). Previous researchers have acknowledged the same limitation, having also been unable to analyze guidelines from those regions (Jobin et al., 2019). However, we noticed that initiatives are starting to emerge for the general governance of AI, such as national strategies (Kenya) and data-focused AI guidelines in several Latin American countries (Gaffley et al., 2022; tmg, 2020). Given the nature of CDA as a qualitative research method, our results cannot be generalized to other guidelines not included in this study.

Conclusions