
What is Data Visualization, and What is its Role in Data Science?

- Written by Karin Kelley

- Updated on July 29, 2024

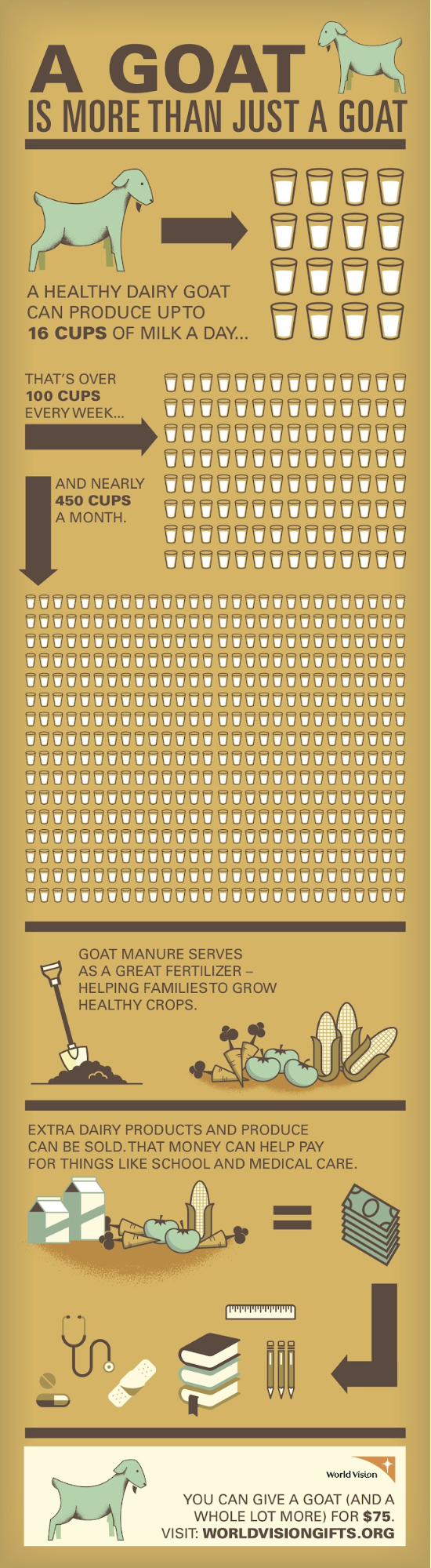

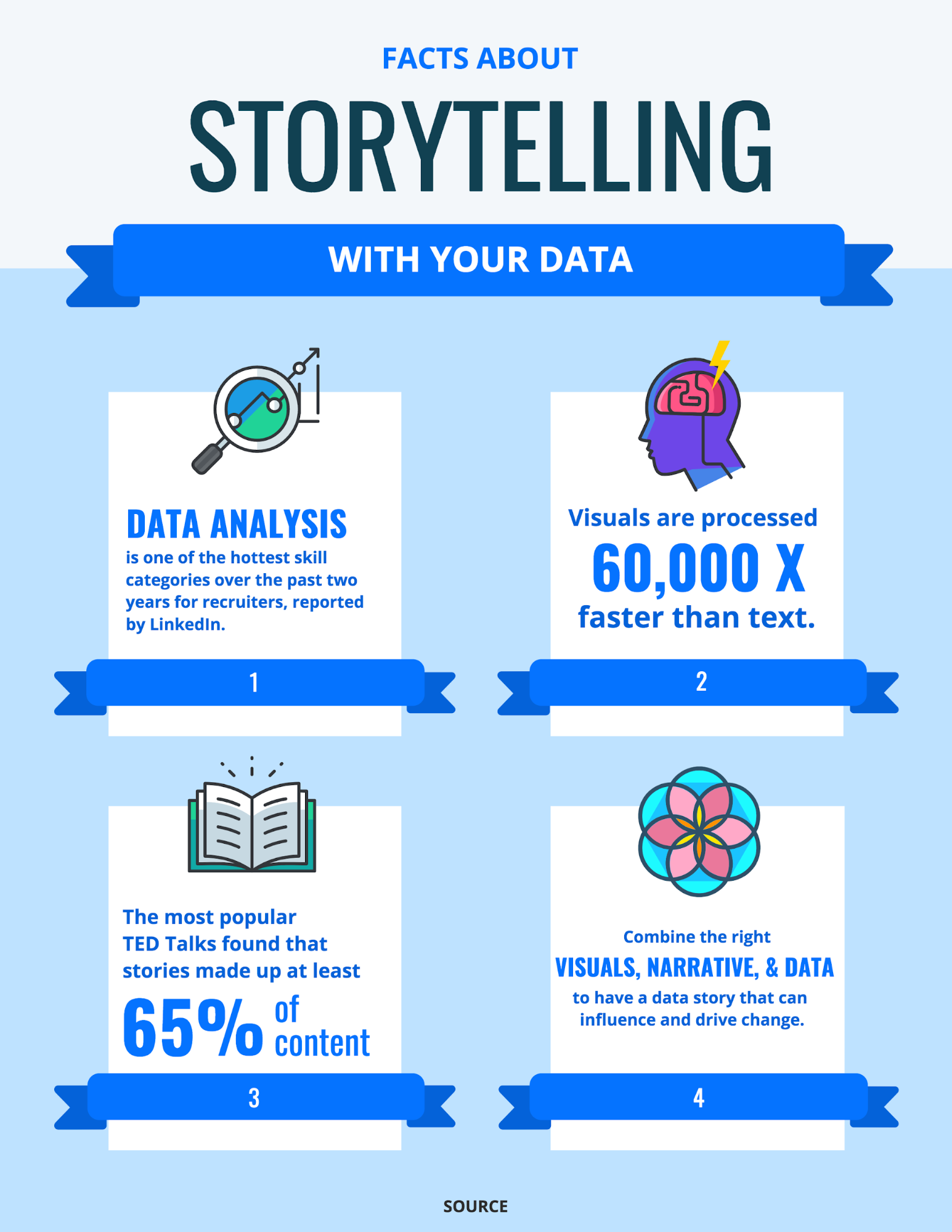

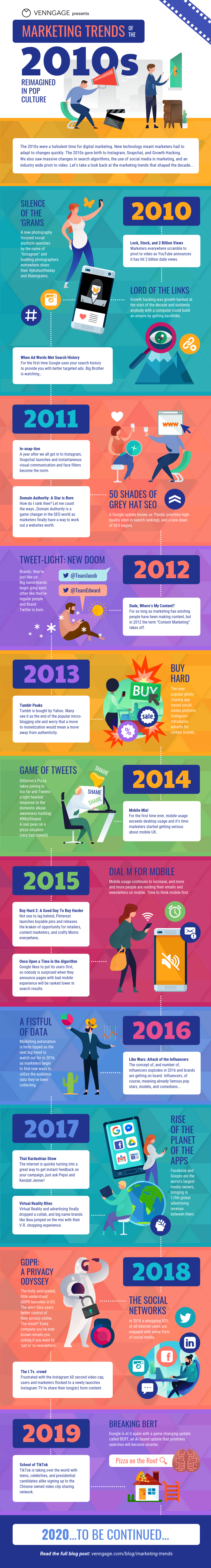

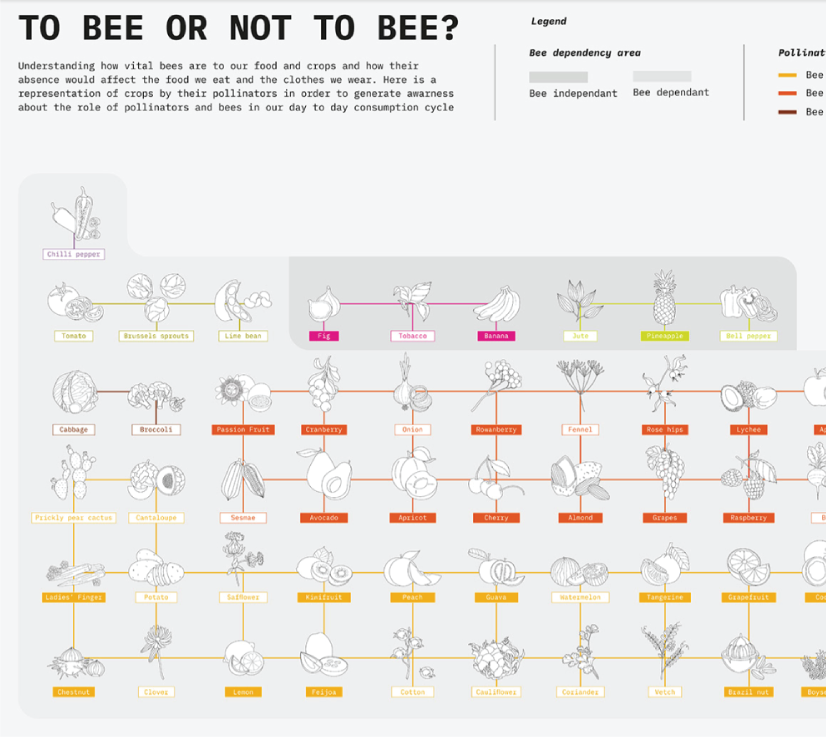

How do you transform endless rows of data into a story that can drive decisions and inspire action? Data visualization is the answer. In our data-driven world, converting complex data sets into intuitive, visual formats is essential for uncovering insights and making informed decisions.

This blog answers the high-level question, “What is data visualization?” and discusses its importance, categories, techniques, and practical applications. We’ll explore how visualizing data can turn raw numbers into powerful narratives that inform, engage, and persuade. Those looking to master this skill and advance their data science career should consider enrolling in a comprehensive data science bootcamp.

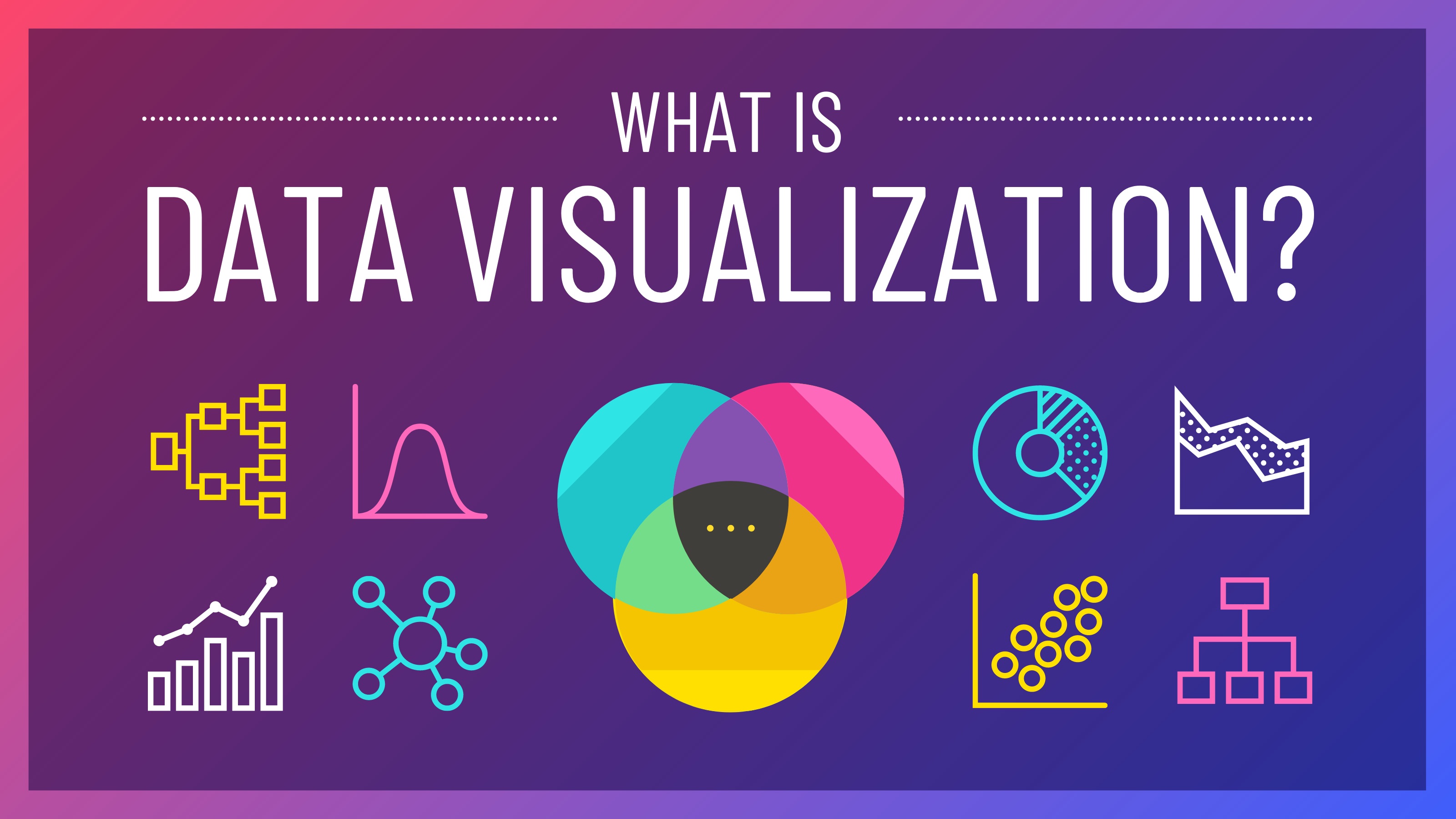

So, What is Data Visualization?

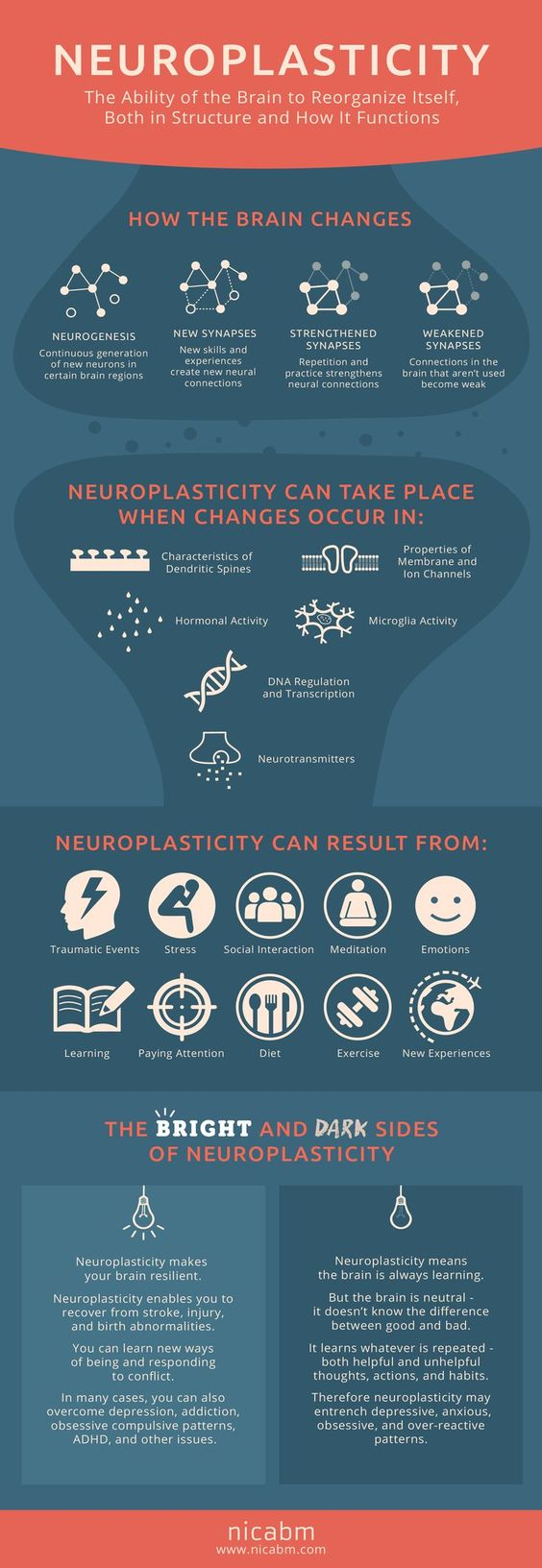

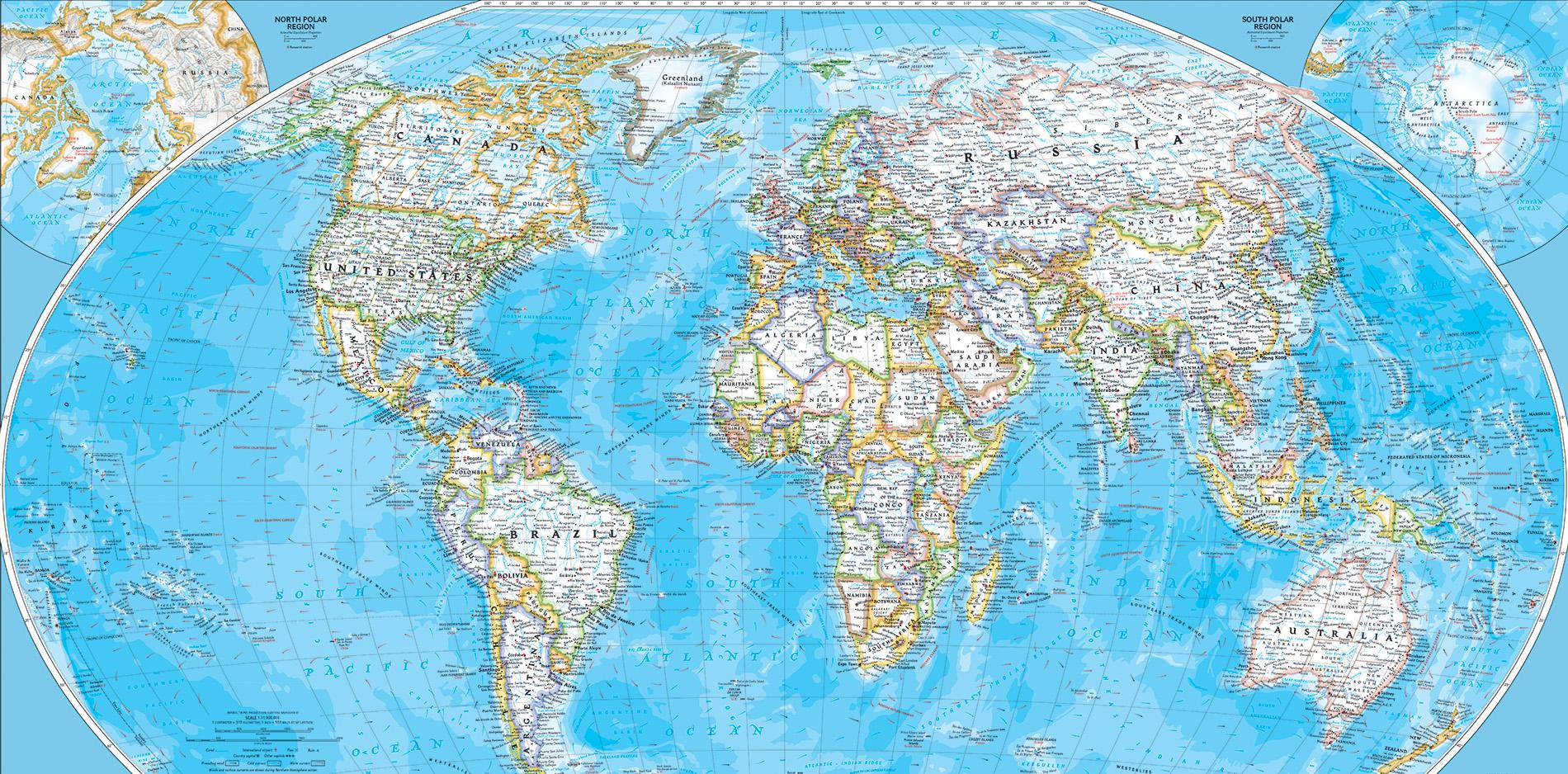

Data visualization is the graphical representation of information and data. Using visual elements like charts, graphs, and maps, data visualization tools provide an accessible way to see and understand trends, outliers, and patterns in data. This visual context helps users understand the data’s insights and make data-driven decisions more effectively. It’s a powerful tool for exploratory data analysis and conveying findings to others.

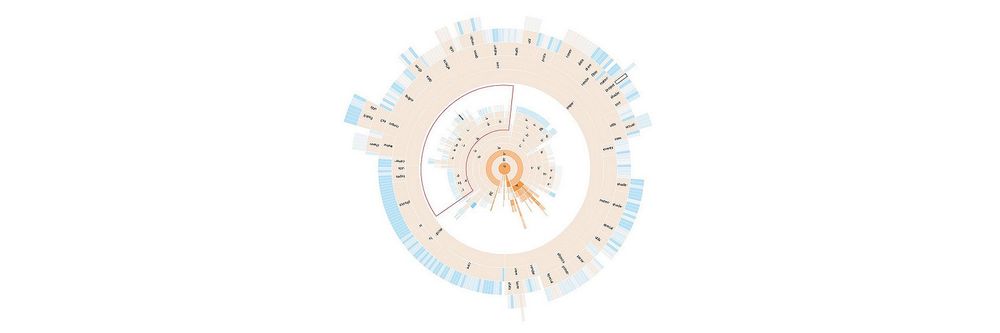

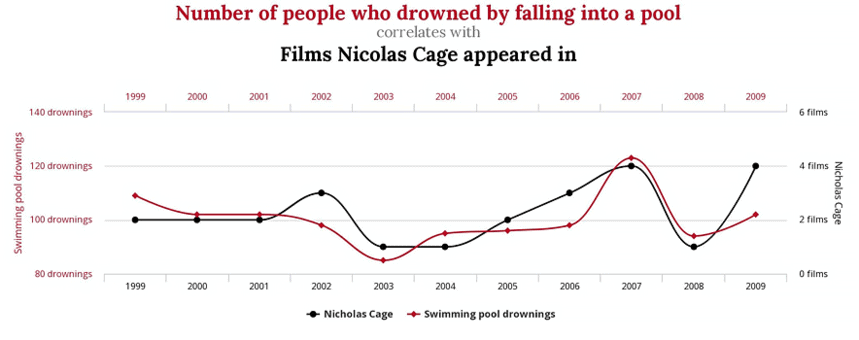

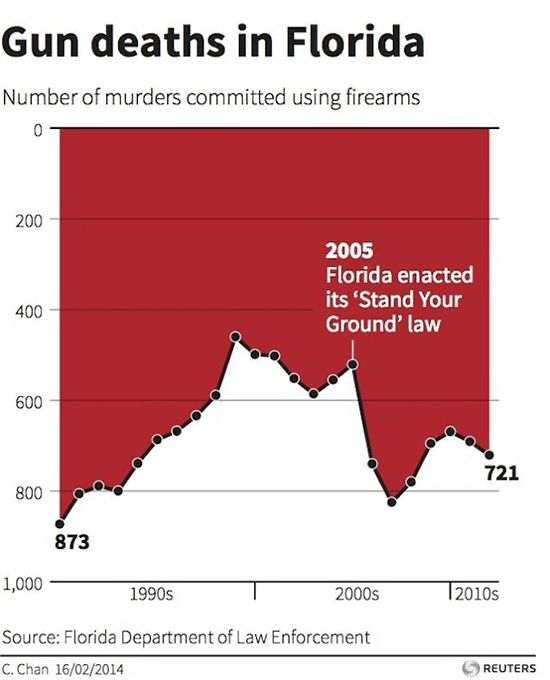

Data visualization involves transforming raw data into visual formats that reveal patterns, trends, and correlations. This transformation process can range from simple static charts to highly interactive and complex visualizations. The goal is to make the data more understandable, insightful, and actionable. Effective data visualization leverages visual perception principles to present data in a way that is both aesthetically pleasing and informative.

It is not just about creating pretty pictures but about creating meaningful representations that can tell a story or answer a question. This process involves understanding the audience, selecting the appropriate visualization techniques, and presenting the data in a way that aligns with the intended message. For instance, a bar chart might be used to compare sales figures across different regions, while a line chart could illustrate trends in stock prices over time.


Why is Data Visualization Important?

Data visualization is essential because it transforms complex data into clear and actionable insights. Converting raw numbers into visual formats allows for quick comprehension and informed decision-making.

Here are some key reasons why data visualization is so crucial.

- Unlocking the narrative: Data visualization helps uncover the story hidden within data, allowing decision-makers to grasp complex concepts and identify new patterns.

- Effective communication: It plays a vital role in presenting data findings clearly and concisely, ensuring that the message is easily understood.

- Handling big data: Visualization tools enable data scientists and analysts to interpret large data sets quickly and efficiently, driving better business strategies and operations.

- Immediate understanding: Humans are inherently visual creatures, and our brains process visual information more effectively than text or numbers alone. Visual representations allow us to quickly identify trends, spot outliers, and understand relationships within the data.

- Essential for quick decision-making: This immediate comprehension is particularly valuable in fast-paced environments where quick decision-making is crucial.

- Broadening audience reach: Data visualization makes complex data accessible and understandable to a broader audience, including business executives, policymakers, and laypersons.

- Democratizing data: By making data more accessible, visualization empowers more people to engage with and make informed decisions based on data.

What is Data Visualization? Big Data Visualization Categories

Big data visualization involves handling vast and complex data sets, often requiring advanced tools and techniques. It can be categorized into three main types:

Interactive Visualization

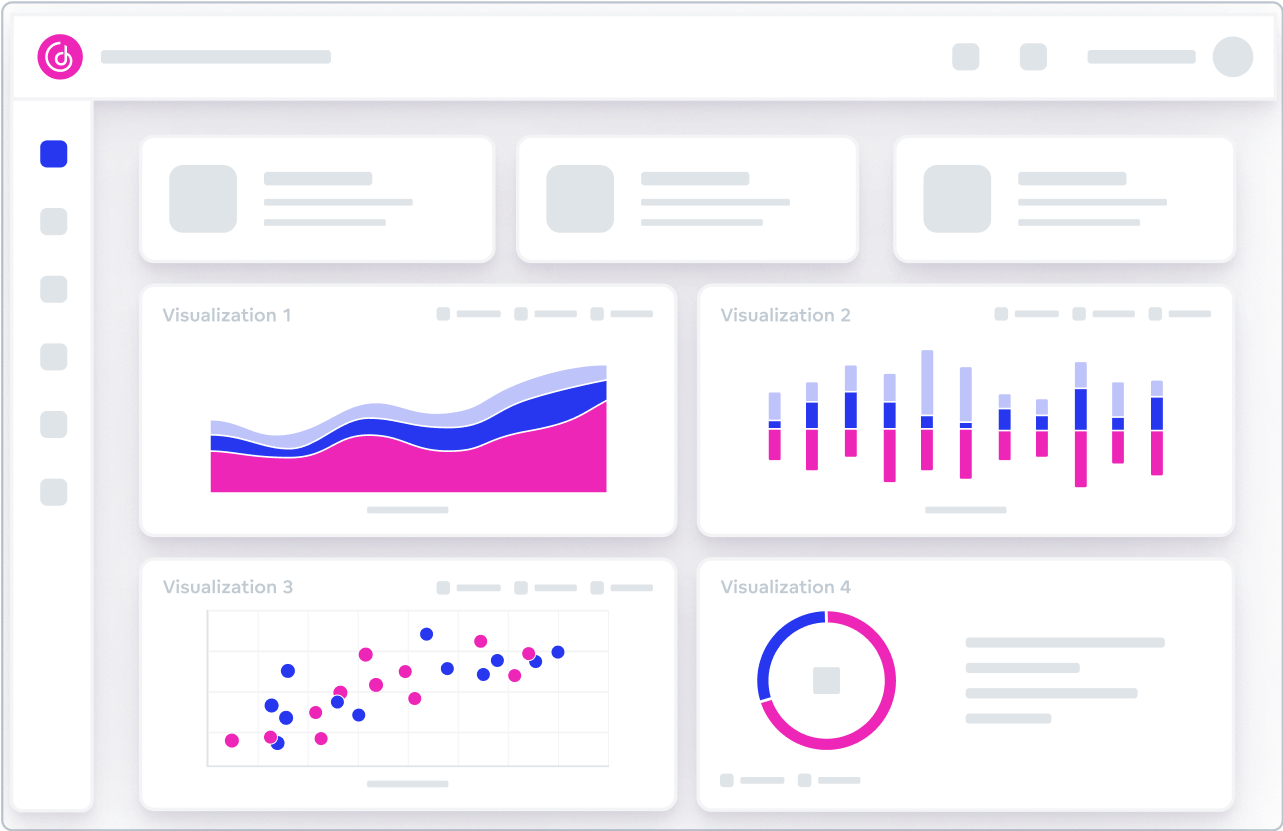

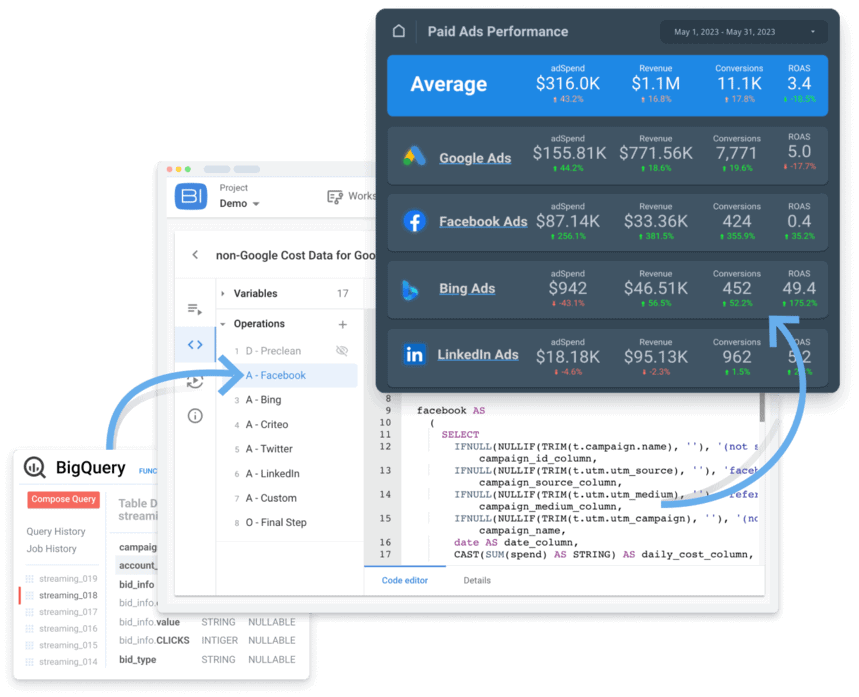

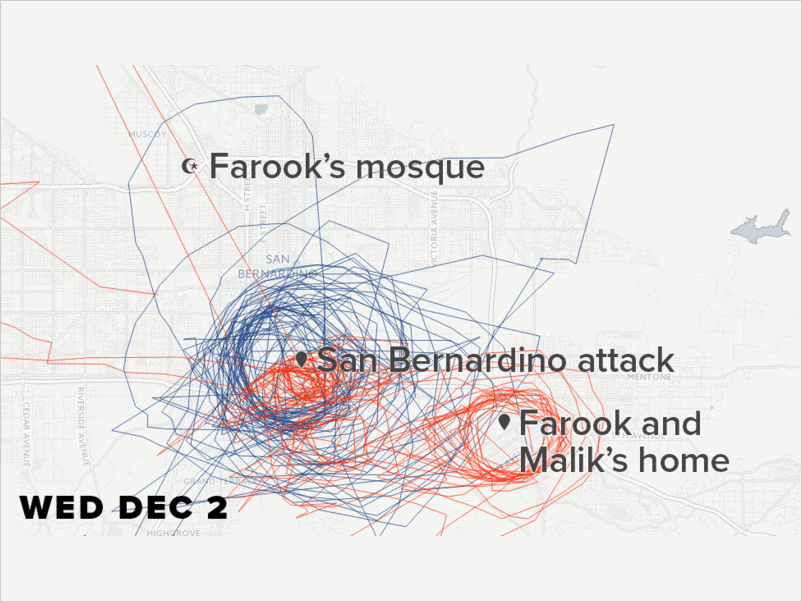

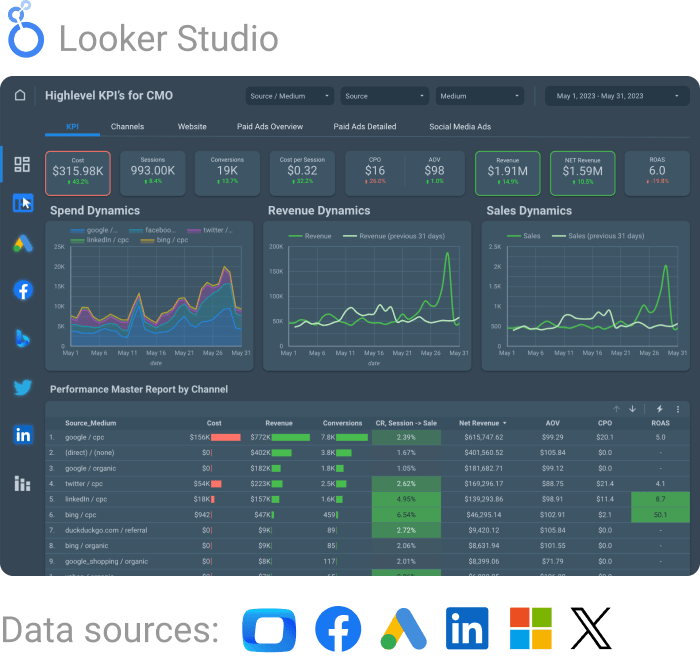

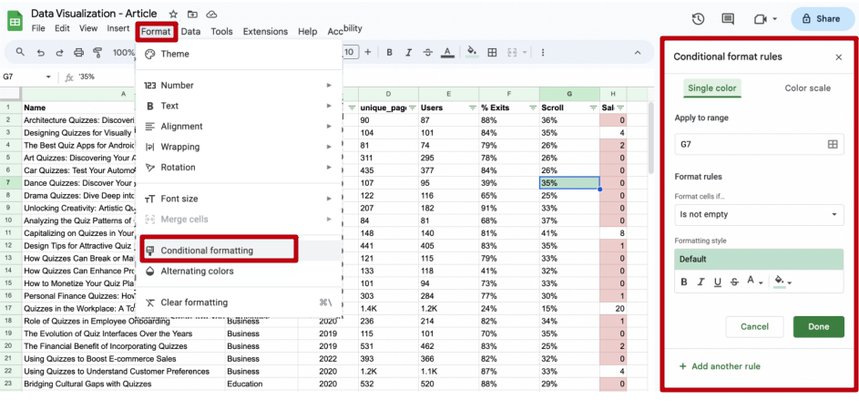

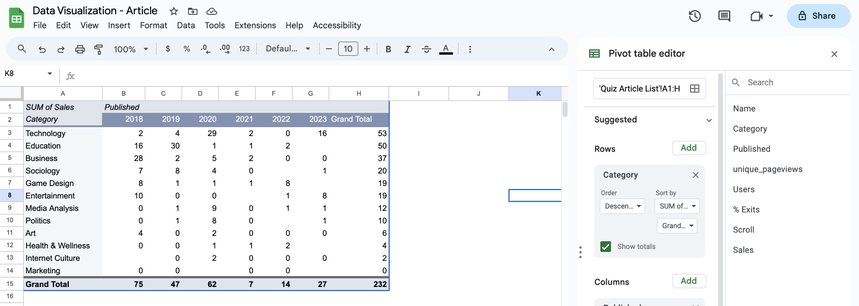

This allows users to engage with the data dynamically. Interactive dashboards and reports enable users to manipulate data and uncover insights through various filters and controls. Tools like Tableau and Power BI are commonly used to create interactive visualizations that allow users to drill down into data, explore different perspectives, and gain deeper insights.

Real-time Visualization

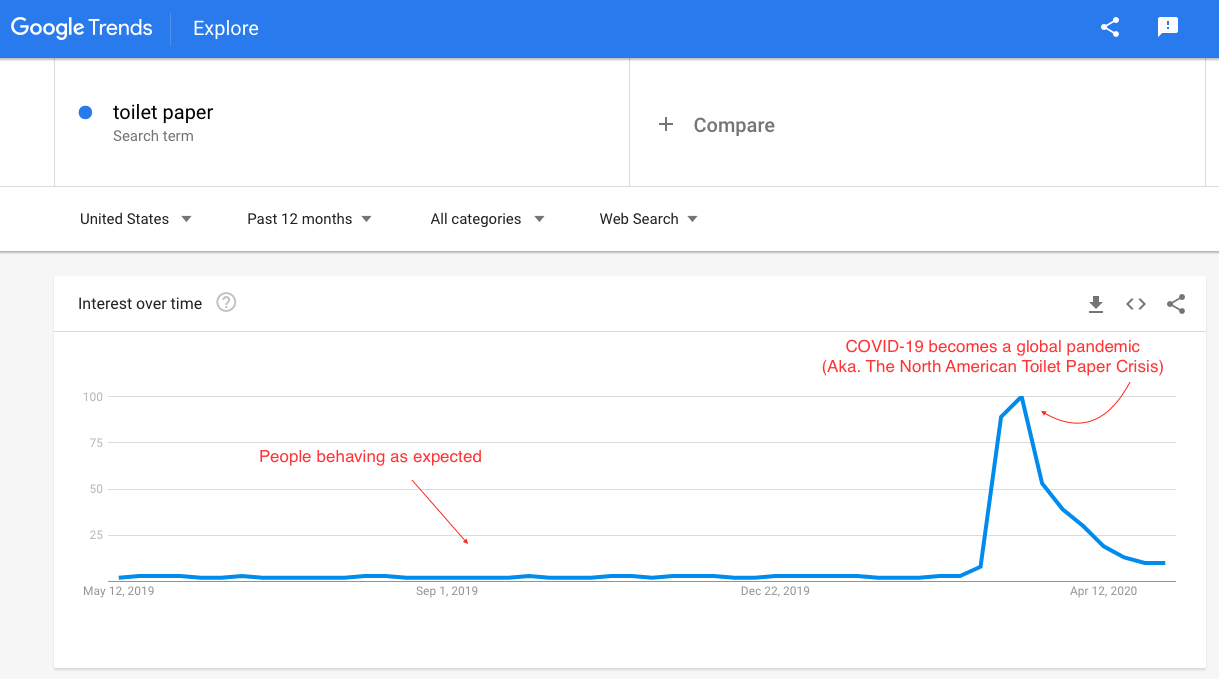

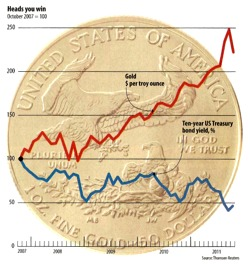

This involves visualizing data as it is collected. It’s particularly useful for monitoring live data streams, such as social media feeds, sensor data, or financial market movements. Real-time visualization tools can provide up-to-the-minute insights, enabling quick responses to changing conditions. For example, financial traders use real-time visualization to monitor market movements and make timely investment decisions.

3D Visualization

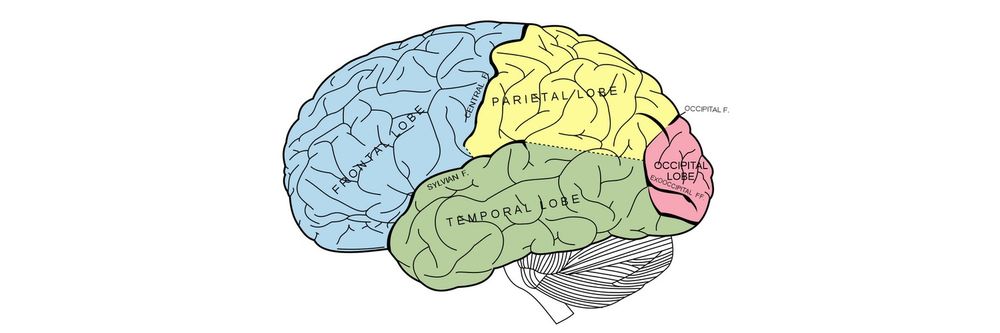

3D visualization can provide additional depth and clarity for particularly complex data sets. It’s often used in medical imaging, geospatial analysis, and engineering. 3D visualizations can help to understand complex structures and relationships that might be difficult to interpret in two dimensions. For example, 3D visualizations of MRI or CT scans in medical imaging can help doctors diagnose and plan treatments more effectively.


Top Data Visualization Techniques

Numerous techniques are available for data visualization, each serving different purposes and data types. Here are some of the top ones.

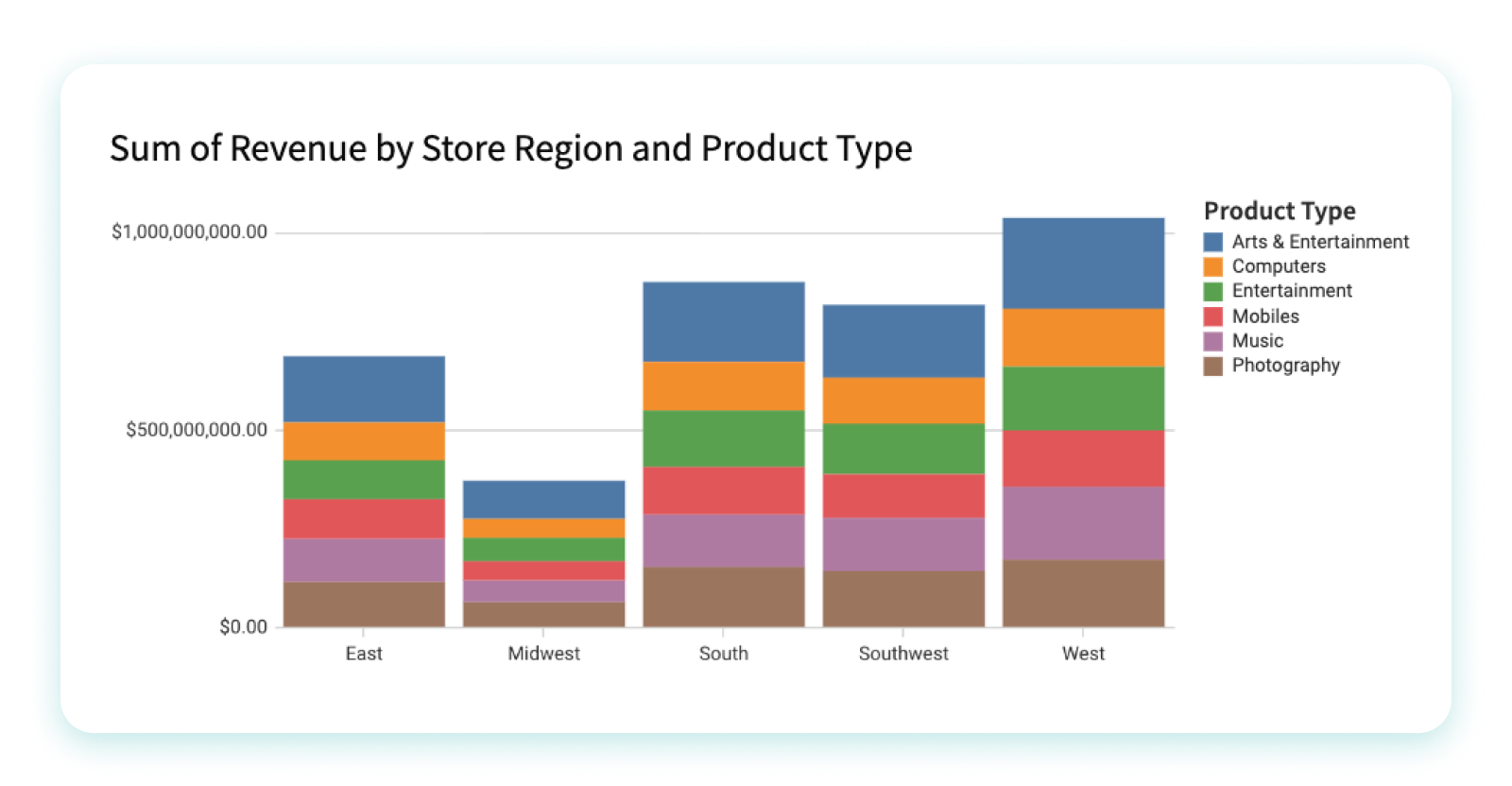

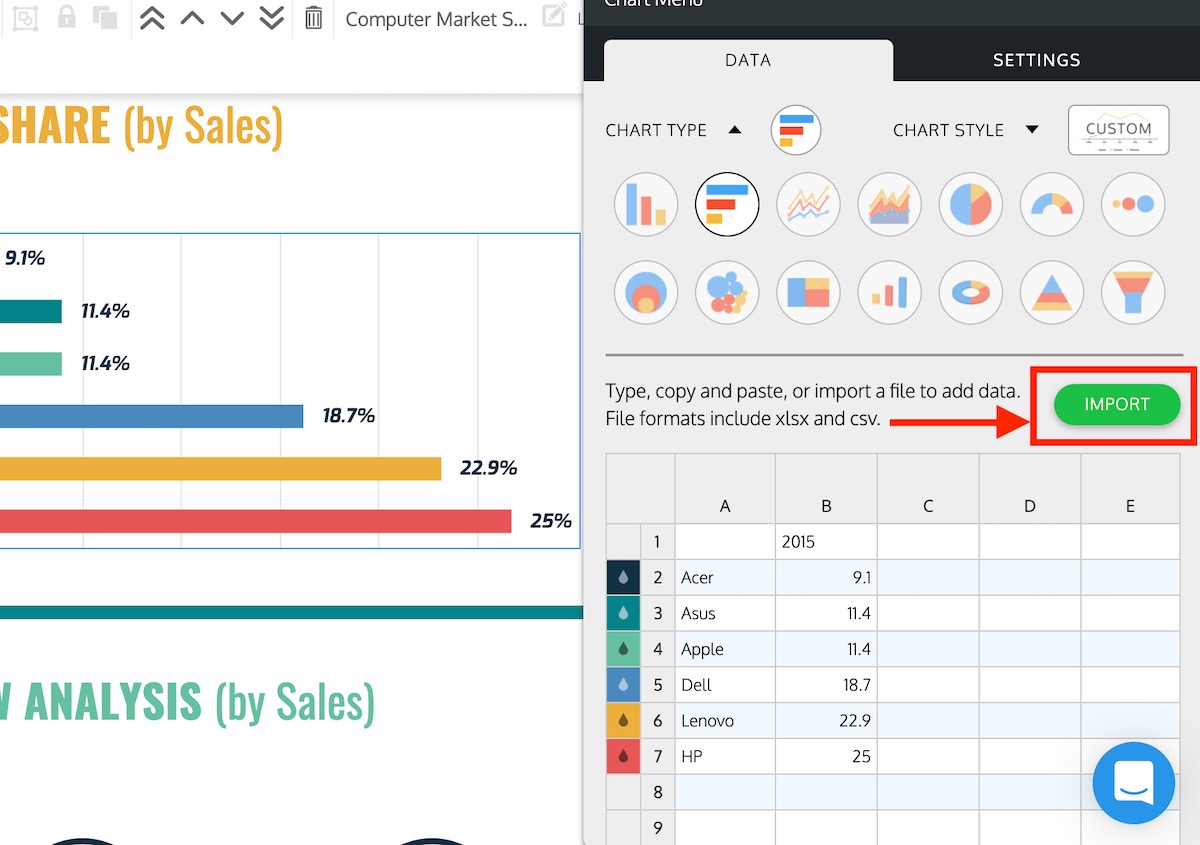

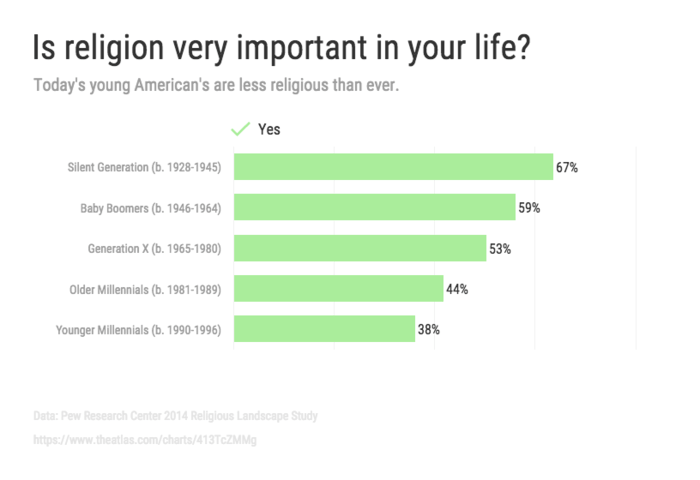

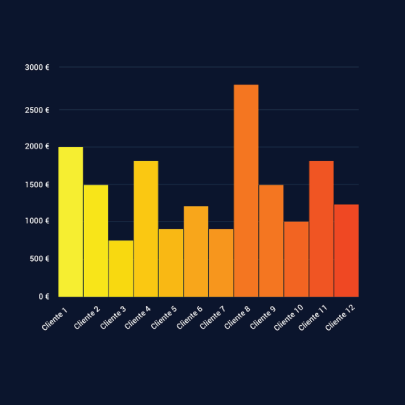

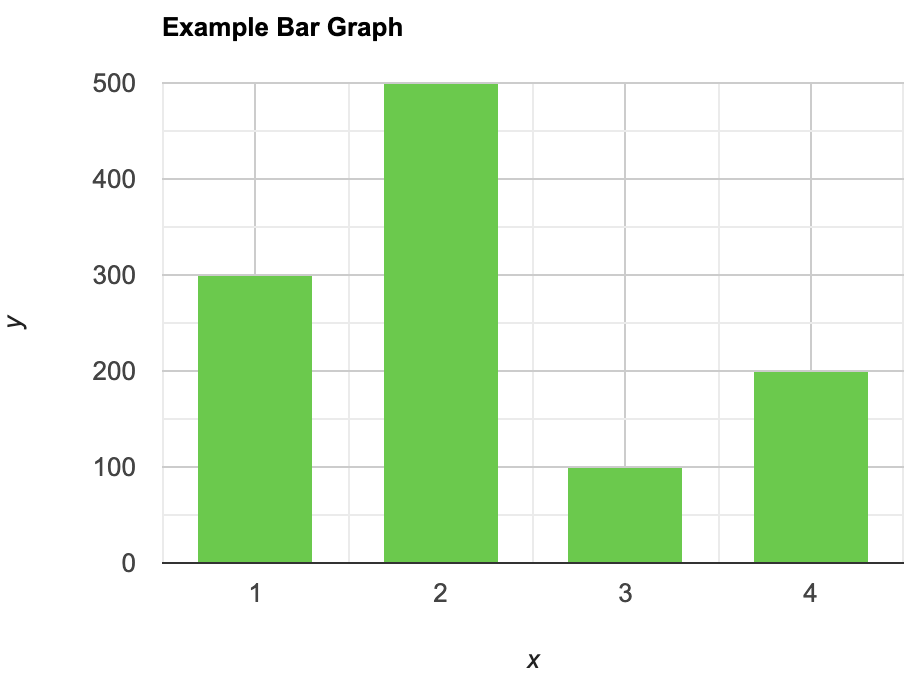

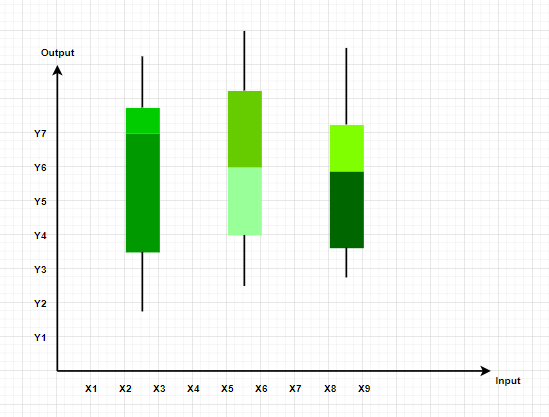

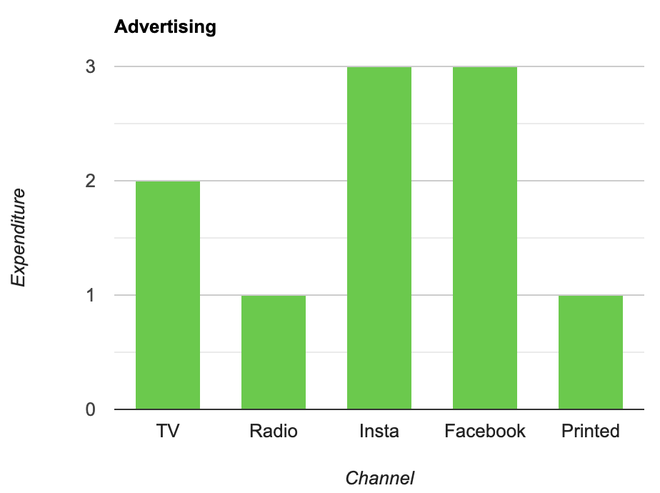

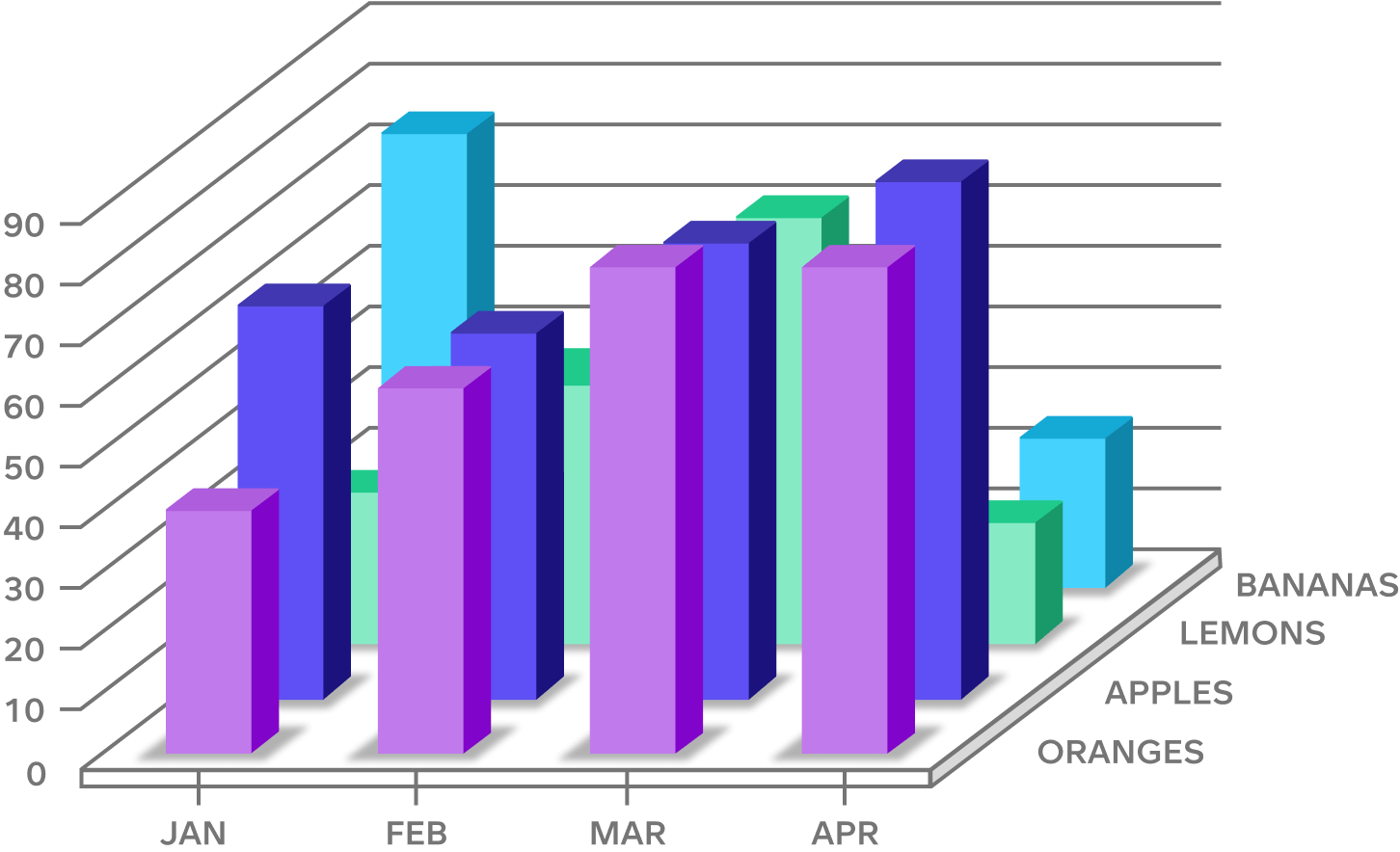

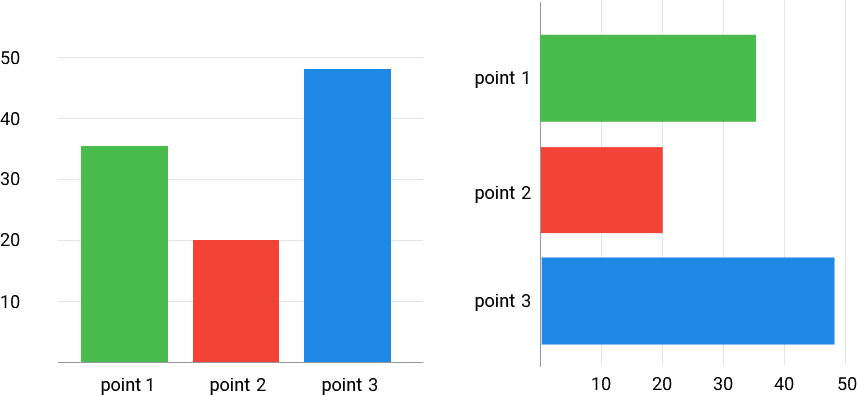

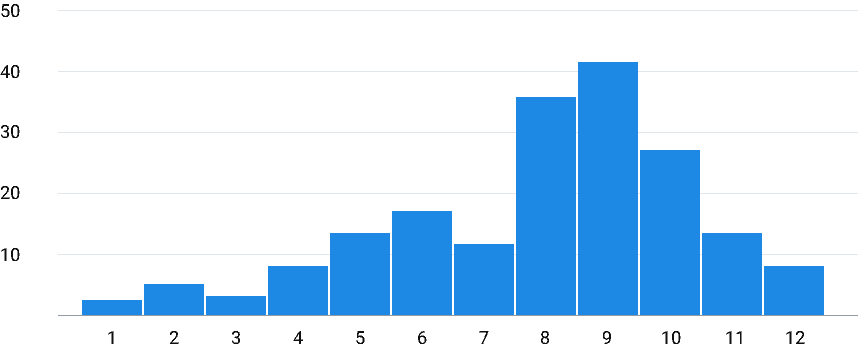

Bar Charts and Column Charts

These compare different categories or track changes over time. They are simple yet effective for presenting categorical data. Bar charts can be used to compare sales figures across various regions, while column charts can show the change in sales over different quarters.
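As a rough illustration of what a bar chart encodes, the sketch below draws one as plain text, with each bar's length proportional to its value. It uses only the Python standard library so it runs anywhere; in practice a plotting library would normally be used. The function name `text_bar_chart` and the sales figures are hypothetical and invented for this example.

```python
def text_bar_chart(values, width=40):
    """Return a horizontal text bar chart; bar length is proportional to value."""
    largest = max(values.values())
    label_width = max(len(label) for label in values)
    lines = []
    for label, value in values.items():
        # Scale each bar against the largest value so the longest bar fills `width`.
        bar_length = round(width * value / largest) if largest > 0 else 0
        lines.append(f"{label:<{label_width}} | {'#' * bar_length} {value}")
    return "\n".join(lines)


# Hypothetical regional sales figures, invented purely for illustration.
regional_sales = {"North": 120, "South": 80, "East": 100, "West": 60}
chart = text_bar_chart(regional_sales)
print(chart)
```

Because every bar is scaled against the same maximum, the regions can be compared at a glance, which is the property that makes bar charts effective for categorical data.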

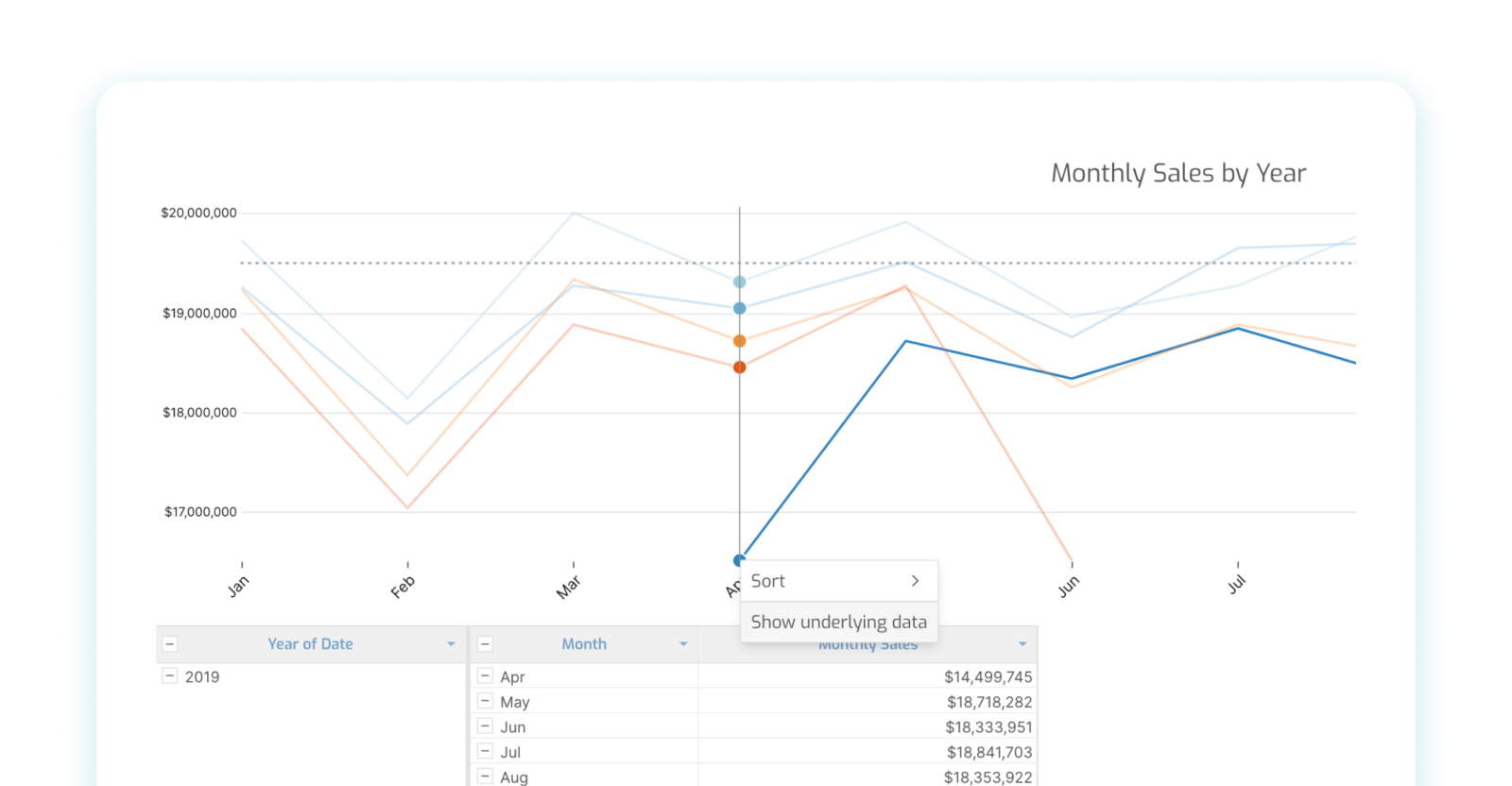

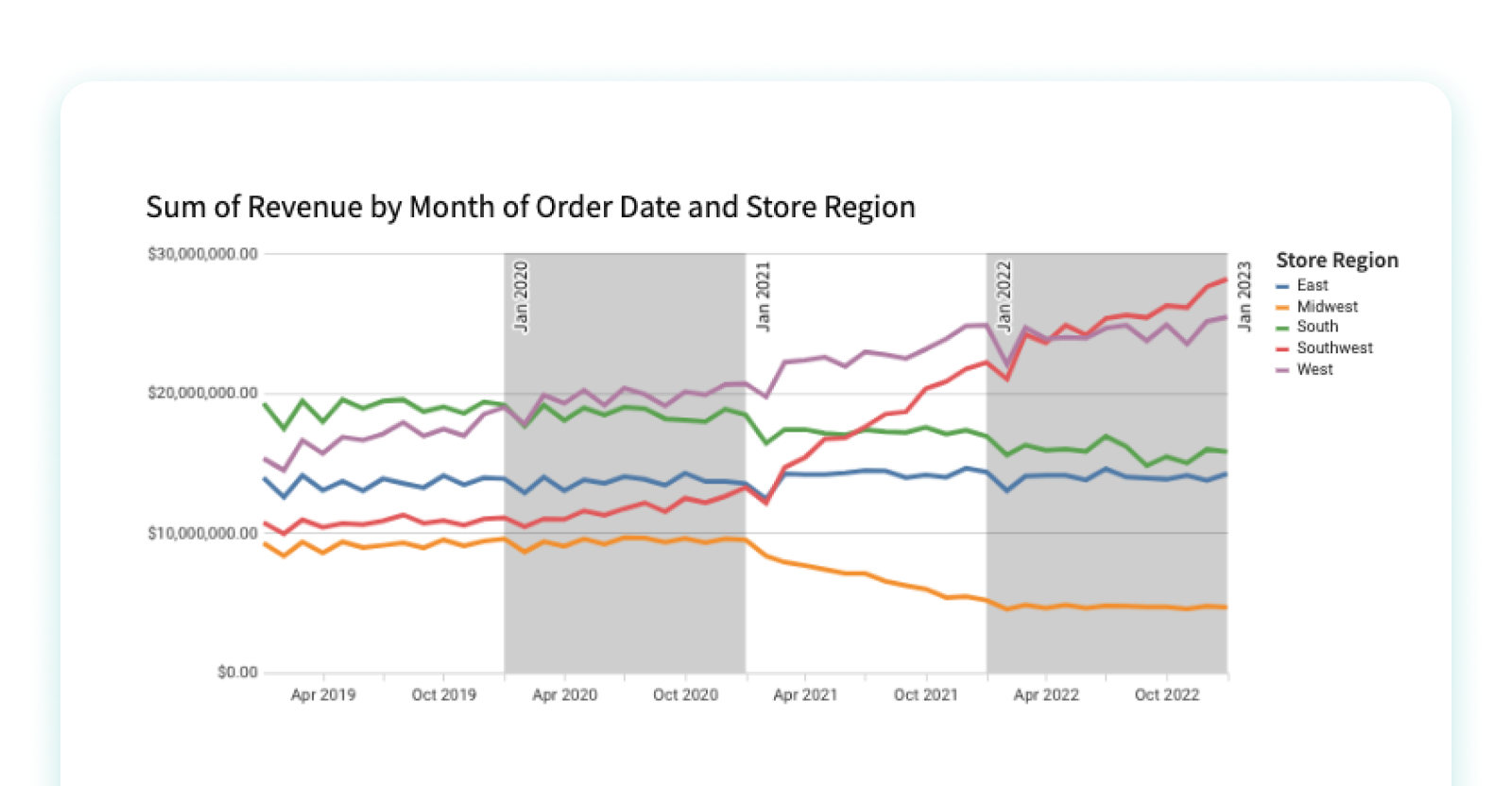

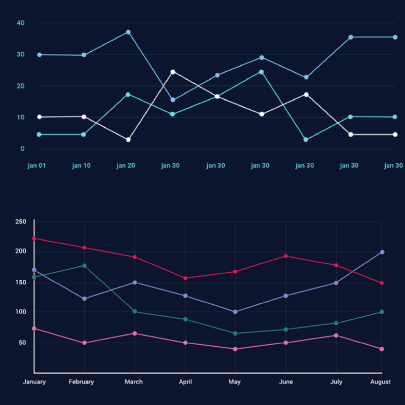

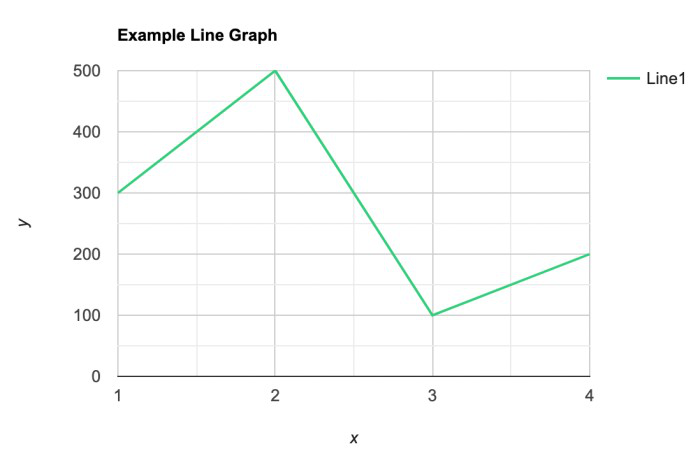

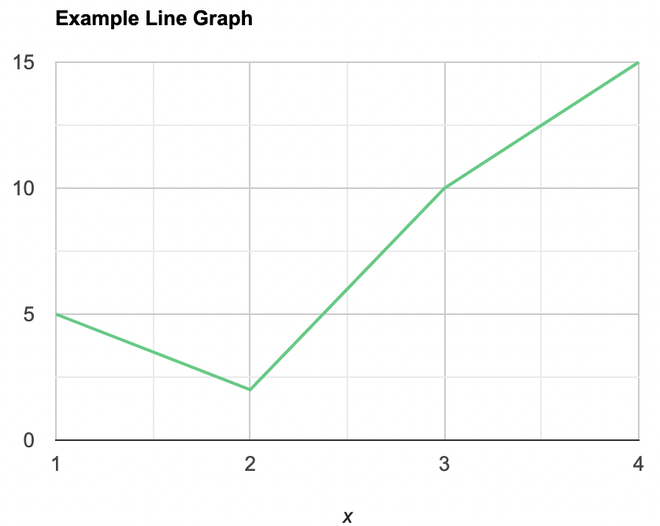

Line Charts

Line charts connect individual data points to show continuous data, which is ideal for showing trends over time. They are often used to illustrate trends in stock prices, website traffic, or temperature changes over time. Line charts can help to identify patterns and predict future trends.

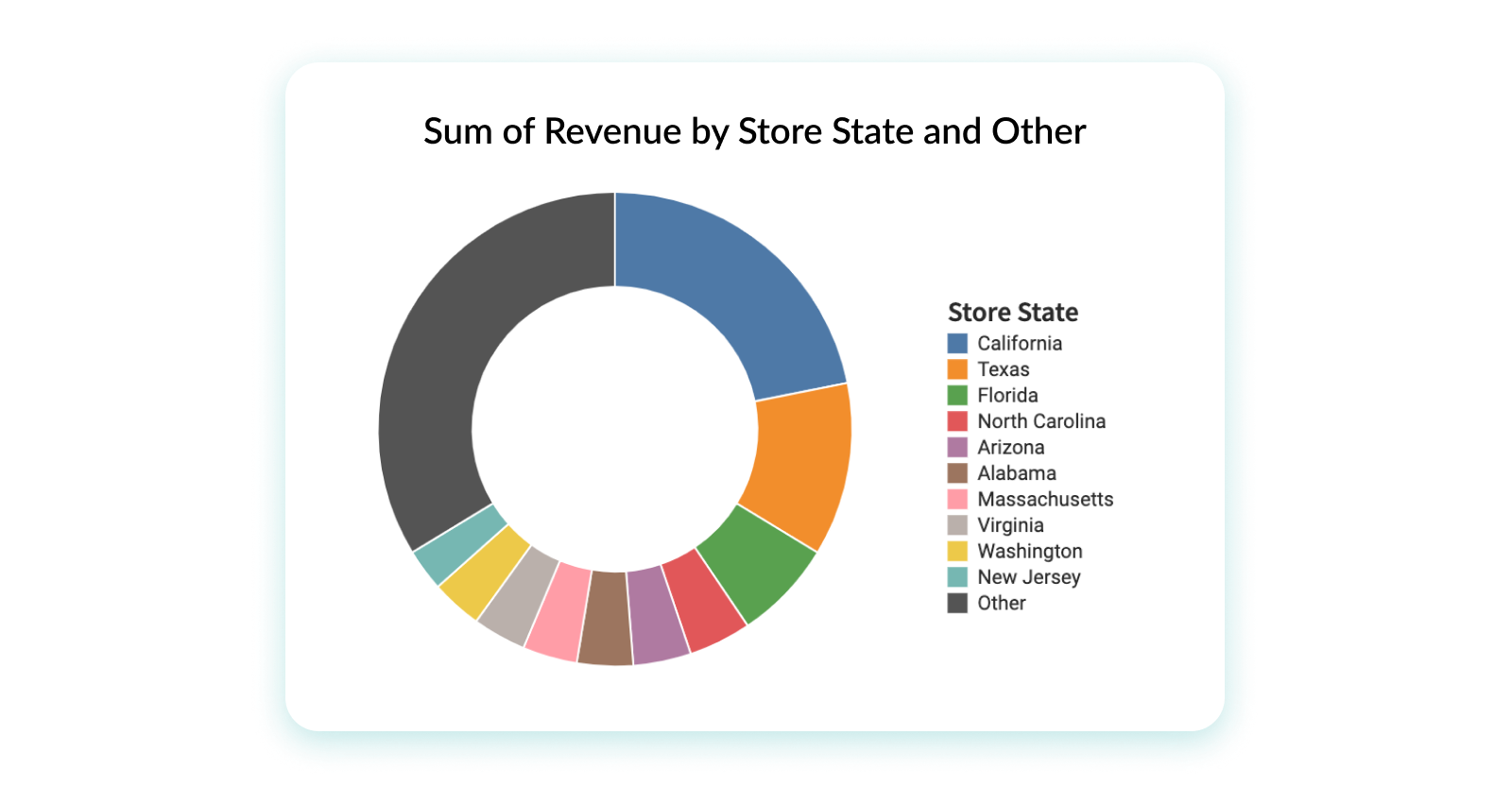

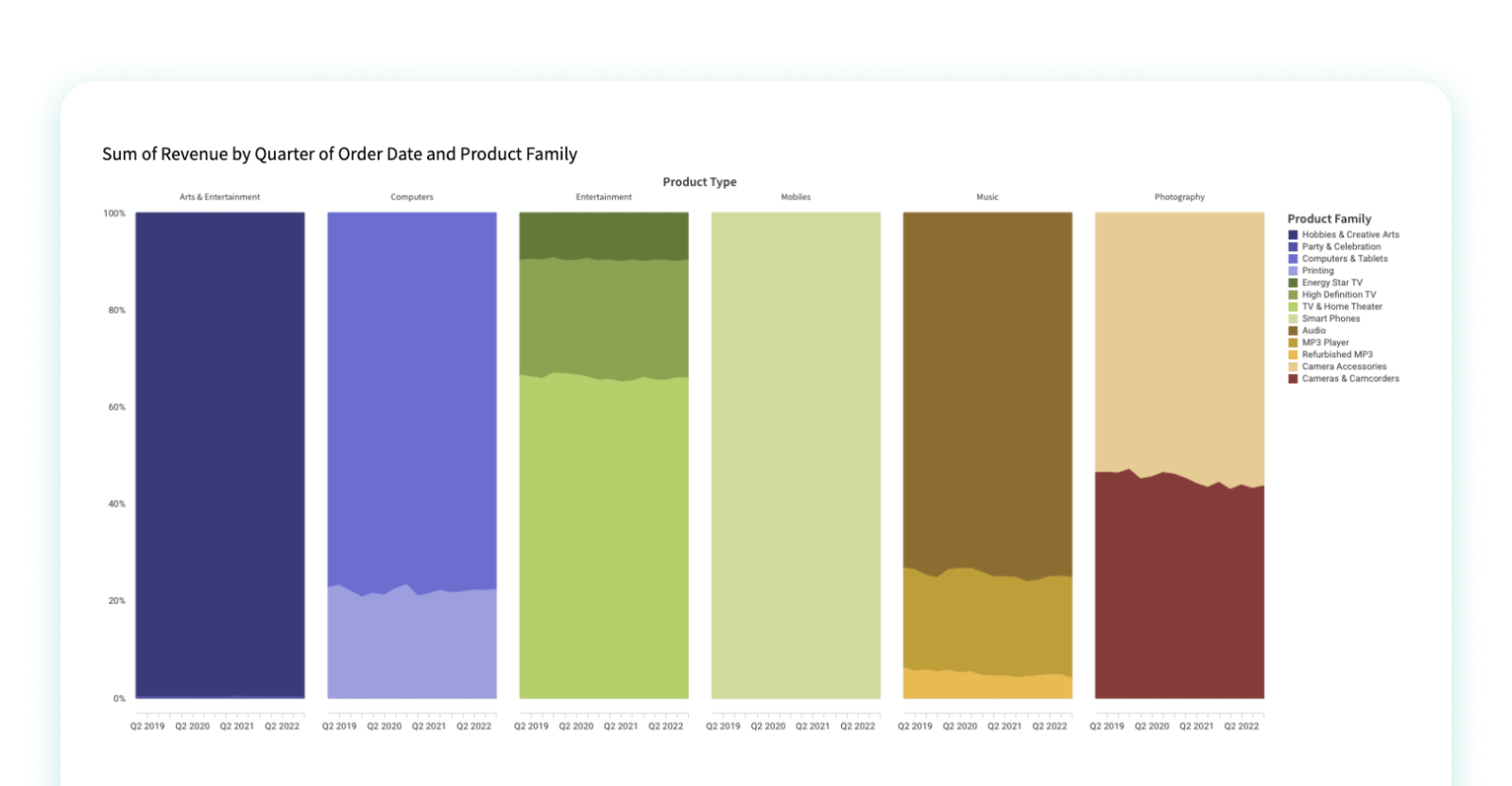

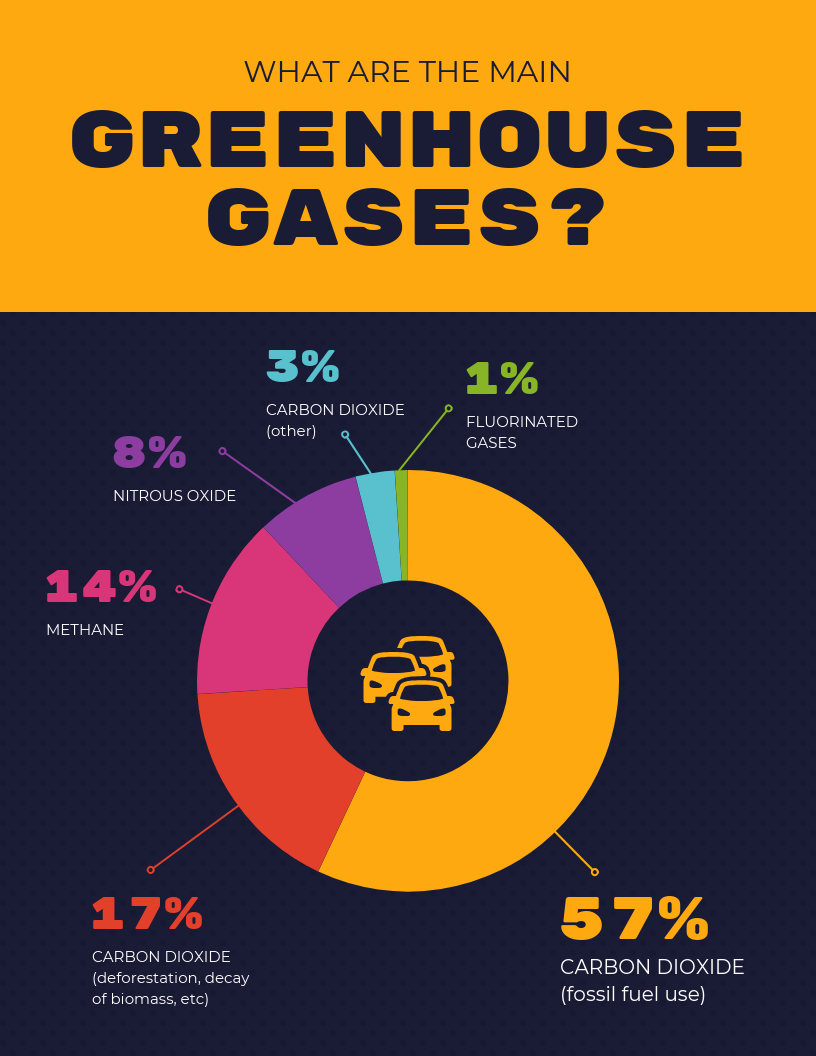

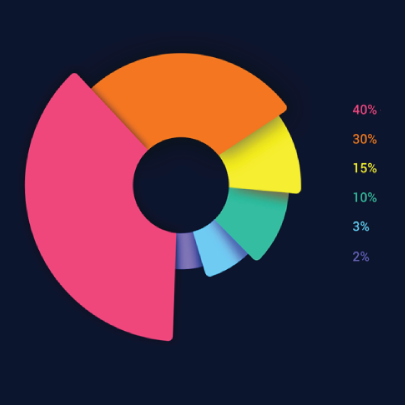

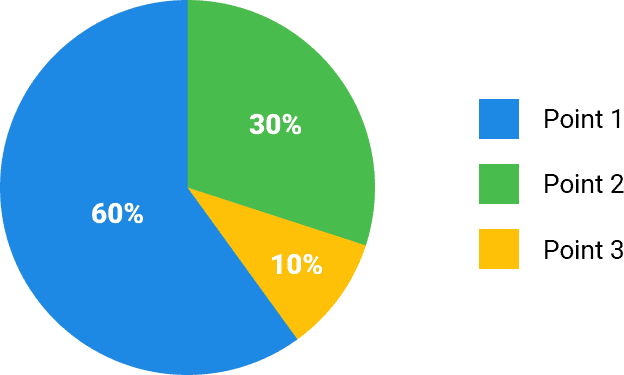

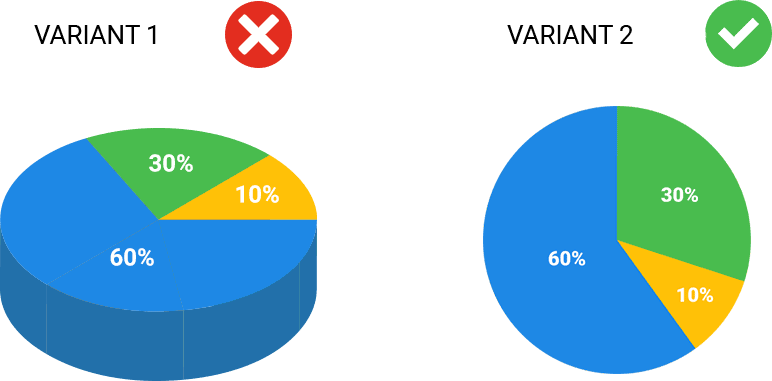

Pie Charts and Donut Charts

These charts show the proportions of a whole. While popular, they should be used carefully as they can sometimes be misleading. Pie charts are best used for showing simple proportions, such as the market share of different companies. Donut charts are similar but have a central hole, making them visually distinct.

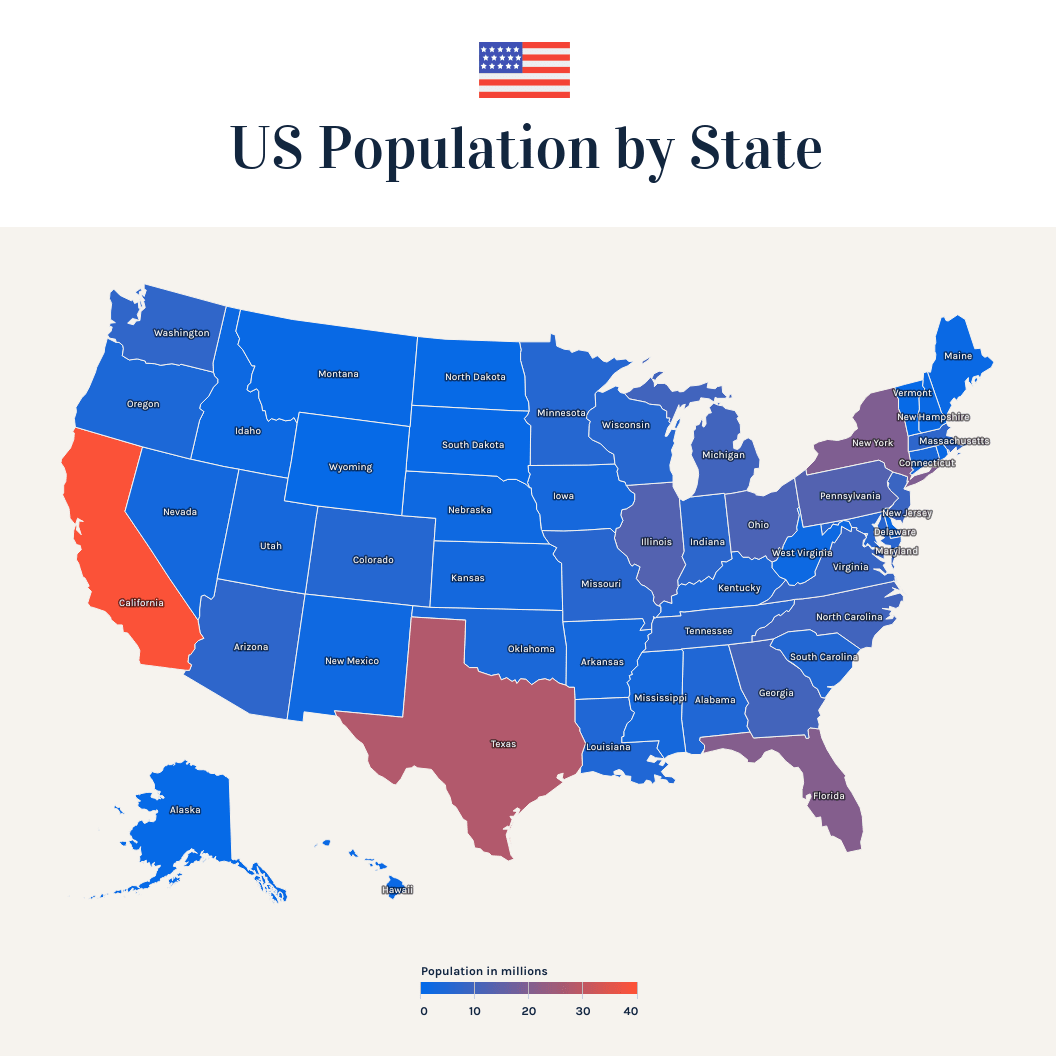

Heat Maps

Heat maps visualize data through color variations. They effectively show data density and variation across categories or geographical areas. Heat maps are often used in marketing to show customer activity across regions and in scientific research to show gene expression levels.

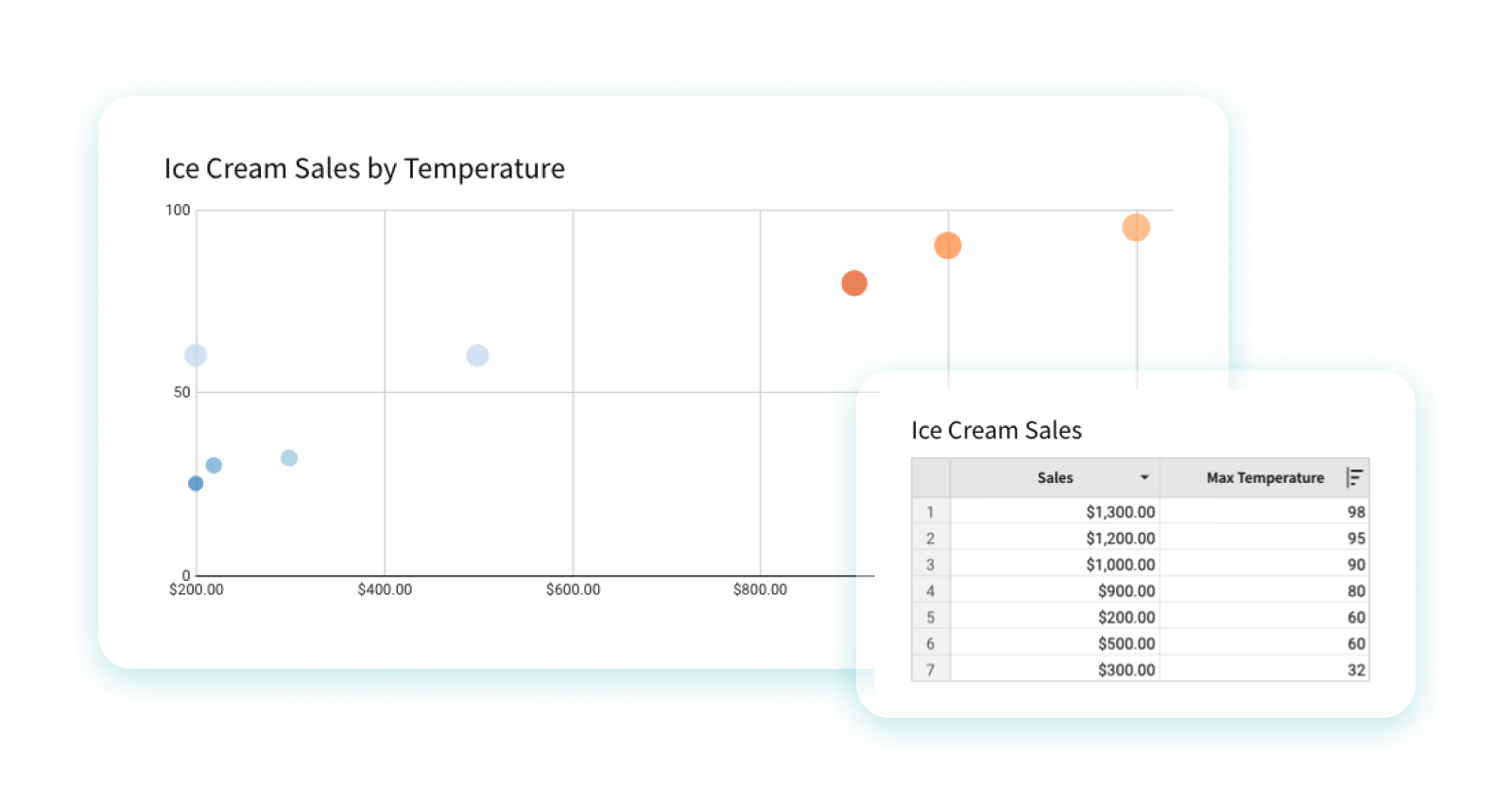

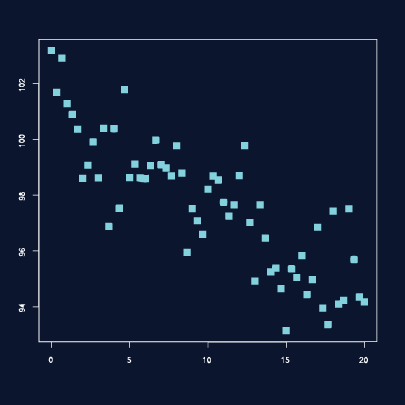

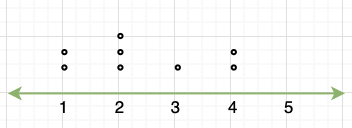

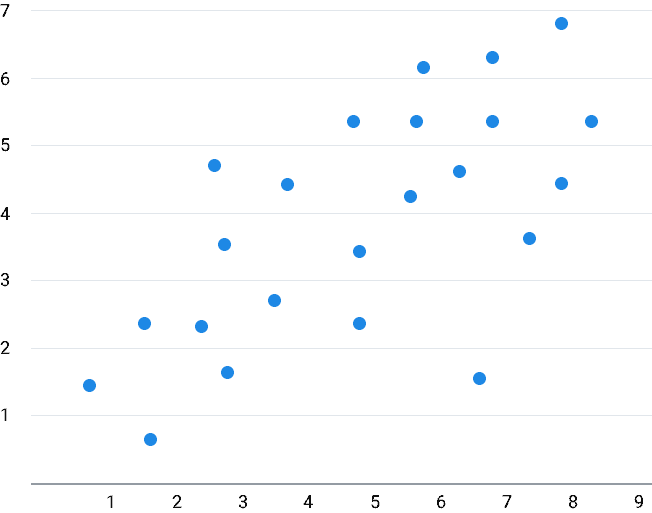

Scatter Plots

Scatter plots display the values of two variables for each observation in a data set, showing how strongly the two are related. They help identify correlations between variables, although a correlation on its own does not establish that one variable causes the other. For example, a scatter plot might show the relationship between advertising spend and sales revenue.
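The relationship a scatter plot shows visually can also be summarized numerically. The sketch below computes the Pearson correlation coefficient for the advertising example using only the standard library. The helper name `pearson_r` and the data points are hypothetical and invented for illustration.

```python
from math import sqrt


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length with at least 2 points")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = sum((x - mean_x) ** 2 for x in xs)
    spread_y = sum((y - mean_y) ** 2 for y in ys)
    if spread_x == 0 or spread_y == 0:
        raise ValueError("correlation is undefined when a variable is constant")
    return covariance / sqrt(spread_x * spread_y)


# Hypothetical advertising spend and sales revenue, invented for illustration.
ad_spend = [10, 20, 30, 40, 50]
revenue = [25, 41, 58, 79, 102]
r = pearson_r(ad_spend, revenue)
print(f"Pearson r = {r:.3f}")
```

A value near 1 or -1 corresponds to points lying close to a straight line on the scatter plot, while a value near 0 corresponds to a diffuse cloud with no linear trend.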

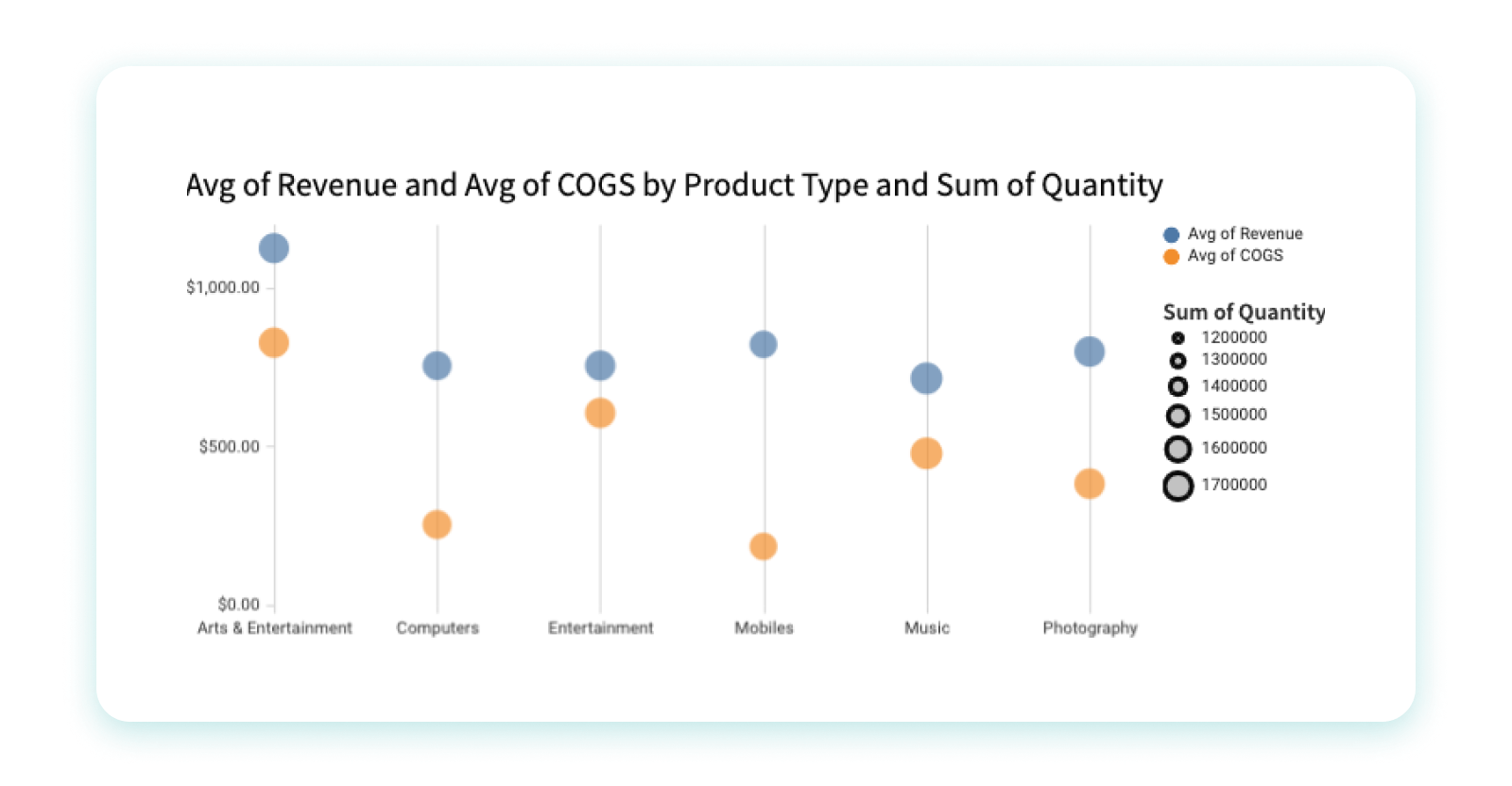

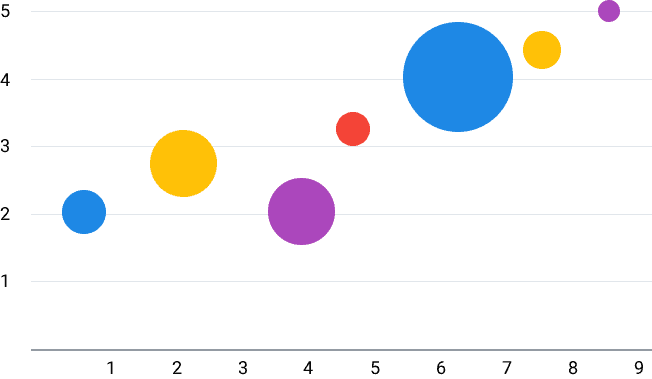

Bubble Charts

Similar to scatter plots, but with an added data dimension represented by the bubble size. Bubble charts can show the relationship between three variables, with the size of the bubble representing the third variable. They are often used in business to show the performance of different products or regions.

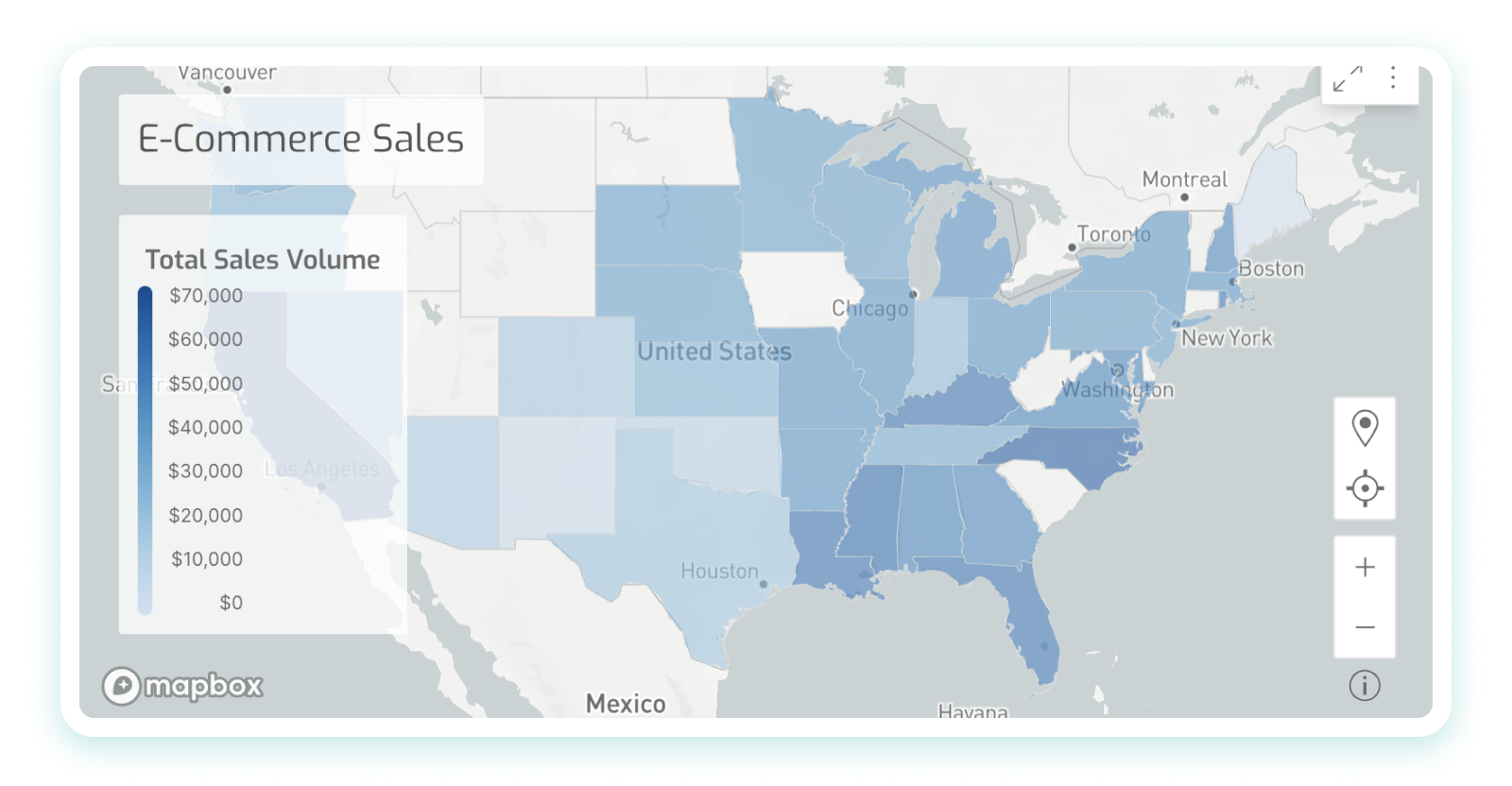

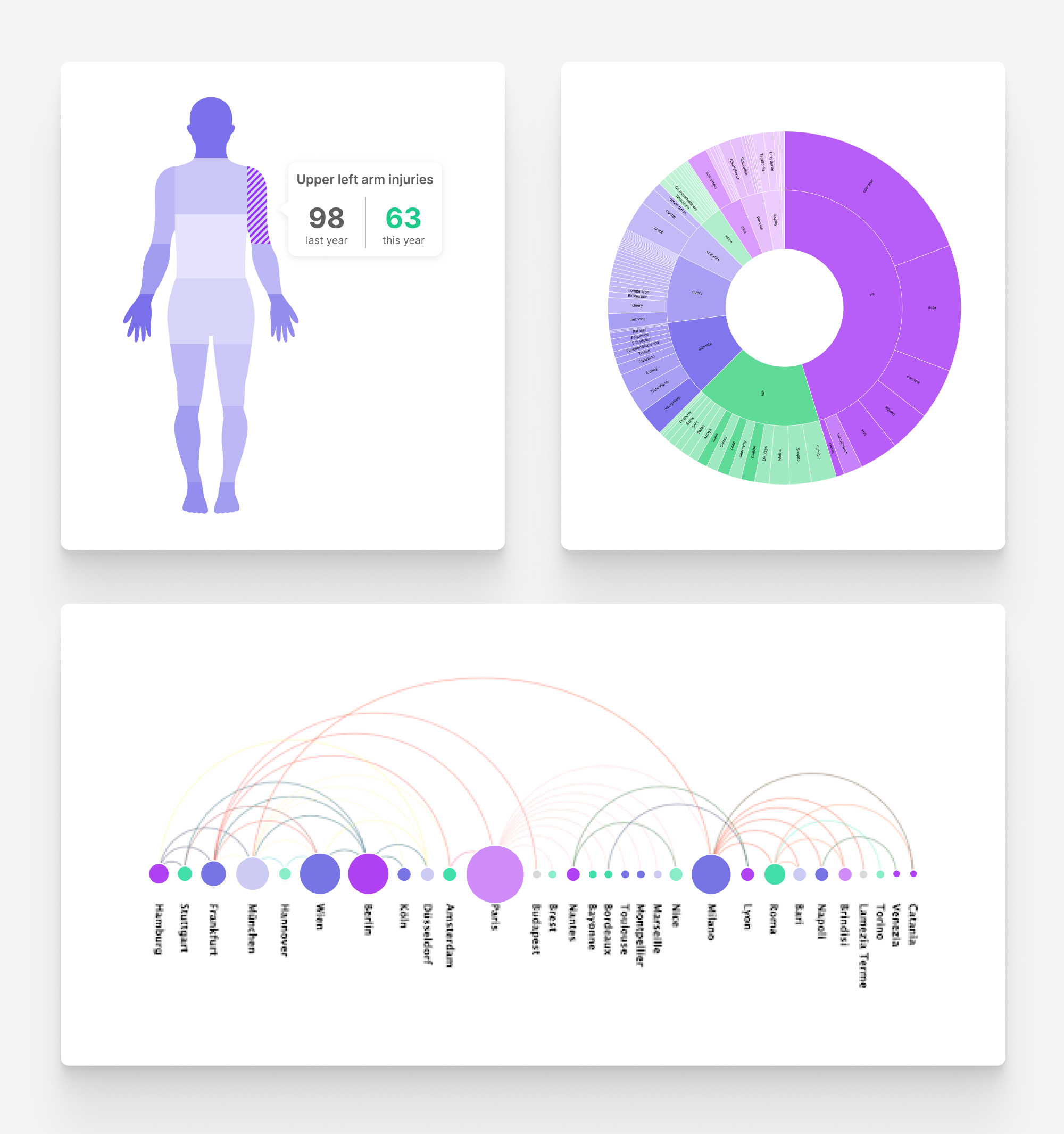

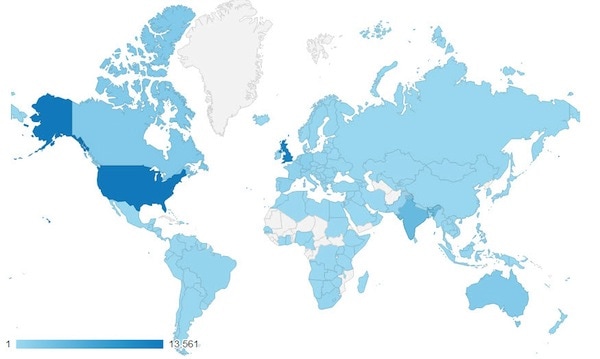

Geospatial Maps

Geospatial maps visualize data related to geographical locations and are useful in fields like meteorology, urban planning, and logistics. They can show the distribution of phenomena across different regions, such as the spread of diseases, population density, or delivery routes.


What is Data Visualization? Use Cases and Applications

Data visualization is used across various industries and applications, enhancing the ability to understand and utilize data effectively:

Business Intelligence

Companies use data visualization for performance tracking, market analysis, and strategic planning. Tableau and Power BI are popular for creating interactive dashboards and reports. These tools enable businesses to monitor key performance indicators (KPIs), track sales performance, and analyze real-time market trends. For example, a retail company might use a dashboard to monitor daily sales figures, inventory levels, and customer feedback.

Healthcare

Visualization helps clinicians and public health teams understand patient data, track disease outbreaks, and optimize healthcare operations. For example, heat maps can show the spread of diseases geographically, allowing public health officials to allocate resources effectively. In hospitals, data visualization can be used to monitor patient vitals, track the progress of treatments, and identify potential complications early.

Finance

In finance, visualization aids in tracking stock market trends, risk management, and portfolio analysis. Real-time visualization tools are crucial for making timely investment decisions. For example, traders use real-time charts to monitor stock prices, identify trends, and execute trades. Risk managers use visualizations to assess portfolios’ risk exposure and develop mitigation strategies.

Marketing

Marketers use visualization to analyze consumer behavior, campaign performance, and market trends. Interactive dashboards can show the impact of marketing efforts in real time. For example, a marketing team might use a dashboard to track the performance of different campaigns, analyze customer engagement, and optimize marketing strategies based on data insights.

Education

Data visualization helps improve teaching methods, track student performance, and follow research trends. Educational institutions use visual tools to analyze and present data on student outcomes. For example, schools use dashboards to monitor student attendance, track academic performance, and identify students who need additional support. Researchers use data visualization to analyze education trends and develop evidence-based policies.

Scientific Research

Scientists use data visualization to interpret complex data sets from experiments and simulations. 3D visualizations can provide in-depth insights into scientific phenomena. For example, climate scientists use visualizations to analyze data from climate models, track changes in temperature and precipitation patterns, and predict future climate scenarios. Biologists use visualizations to analyze gene expression data and understand diseases’ underlying mechanisms.

Government and Public Policy

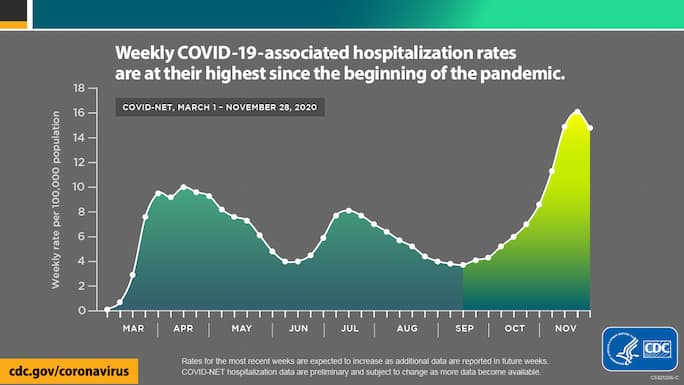

Governments use data visualization to analyze population data, economic indicators, and public health information. This aids in policy-making and public communication. For example, governments use visualizations to track the spread of COVID-19, monitor economic performance, and allocate resources. Policymakers use data visualization to analyze different policies’ impact and communicate findings to the public.


Building Data Science and Data Visualization Skills

Data visualization is more than just a way to present data; it’s a crucial tool for making sense of complex information and driving informed decision-making across various sectors. Aspiring data scientists and analysts should prioritize gaining data visualization expertise to unlock their data’s full potential. Enrolling in a comprehensive data science program can provide the necessary skills and knowledge to excel in this field.

As data continues to grow in volume and complexity, the importance of data visualization will only increase, making it a vital skill for the future. By harnessing the power of data visualization, individuals and organizations can turn data into actionable insights, drive better decisions, and achieve their goals. Whether you’re working in business, healthcare, finance, education, scientific research, or government, data visualization can help you understand and leverage the power of data.


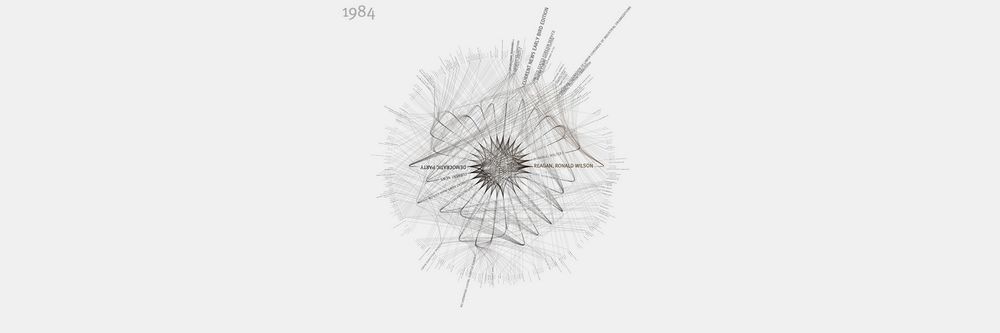

Title: Universal dimensions of visual representation

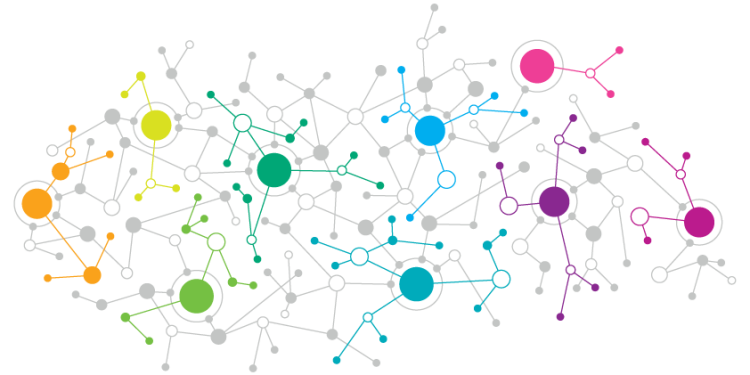

Abstract: Do neural network models of vision learn brain-aligned representations because they share architectural constraints and task objectives with biological vision or because they learn universal features of natural image processing? We characterized the universality of hundreds of thousands of representational dimensions from visual neural networks with varied construction. We found that networks with varied architectures and task objectives learn to represent natural images using a shared set of latent dimensions, despite appearing highly distinct at a surface level. Next, by comparing these networks with human brain representations measured with fMRI, we found that the most brain-aligned representations in neural networks are those that are universal and independent of a network's specific characteristics. Remarkably, each network can be reduced to fewer than ten of its most universal dimensions with little impact on its representational similarity to the human brain. These results suggest that the underlying similarities between artificial and biological vision are primarily governed by a core set of universal image representations that are convergently learned by diverse systems.

| Subjects: | Neurons and Cognition (q-bio.NC); Computer Vision and Pattern Recognition (cs.CV) |


- Neuroscience

Neural dynamics of visual working memory representation during sensory distraction

- Jonas Karolis Degutis

- Simon Weber

- John-Dylan Haynes

- Bernstein Center for Computational Neuroscience Berlin and Berlin Center for Advanced Neuroimaging, Charité Universitätsmedizin Berlin, corporate member of the Freie Universität Berlin, Humboldt-Universität zu Berlin, and Berlin Institute of Health, Berlin, Germany

- Max Planck School of Cognition, Leipzig, Germany

- Department of Psychology, Humboldt-Universität zu Berlin, Berlin, Germany

- Research Training Group “Extrospection” and Berlin School of Mind and Brain, Humboldt-Universität zu Berlin, Berlin, Germany

- Institute of Psychology, Otto von Guericke University, Magdeburg, Germany

- Max Planck Institute for Human Cognitive and Brain Sciences, Leipzig, Germany

- German Center for Neurodegenerative Diseases, Göttingen, Germany

- Research Cluster of Excellence “Science of Intelligence”, Technische Universität Berlin, Berlin, Germany

- Collaborative Research Center “Volition and Cognitive Control”, Technische Universität Dresden, Dresden, Germany

- https://doi.org/10.7554/eLife.99290.1

- Open access


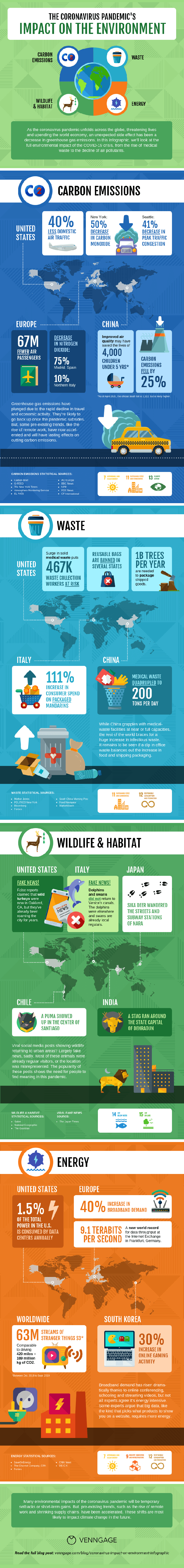

Recent studies have provided evidence for the concurrent encoding of sensory percepts and visual working memory (VWM) contents across visual areas; however, it has remained unclear how these two types of representations are concurrently present. Here, we reanalyzed an open-access fMRI dataset where participants memorized a sensory stimulus while simultaneously being presented with sensory distractors. First, we found that the VWM code in several visual regions did not generalize well between different time points, suggesting a dynamic code. A more detailed analysis revealed that this was due to shifts in coding spaces across time. Second, we collapsed neural signals across time to assess the degree of interference between VWM contents and sensory distractors, specifically by testing the alignment of their encoding spaces. We found that VWM and feature-matching sensory distractors are encoded in separable coding spaces. Together, these results indicate a role of dynamic coding and temporally stable coding spaces in helping multiplex perception and VWM within visual areas.

eLife assessment

This useful study reports a reanalysis of one experiment of a previously published report to characterize the dynamics of neural population codes during visual working memory in the presence of distracting information. The evidence supporting the claims of dynamic codes is incomplete, as only a subset of the original data is analyzed, there is only modest evidence for dynamic coding in the results, and the result might be affected by the signal-to-noise ratio. This research will be of interest to cognitive neuroscientists working on the neural bases of visual perception and memory.

- https://doi.org/10.7554/eLife.99290.1.sa2


Introduction

To successfully achieve behavioral goals, humans rely on the ability to remember, update, and ignore information. Visual working memory (VWM) allows for a brief maintenance of visual stimuli that are no longer present within the environment ( 1 – 3 ). Previous studies have revealed that the contents of VWM are present throughout multiple visual areas, starting from V1 ( 4 – 12 ). These findings raised the question of how areas that are primarily involved in visual perception can also maintain VWM information without interference between the two contents. Recent studies that had participants remember a stimulus while simultaneously being presented with sensory stimuli during the delay period have found supporting evidence that both VWM contents and sensory percepts are multiplexed in occipital and parietal regions ( 8 , 13 , 14 ). However, the mechanism employed in order to segregate bottom-up visual input from VWM contents remains poorly understood.

One proposed mechanism to achieve the separation between sensory and memory representations is dynamic coding ( 15 – 17 ): the change of the population code encoding VWM representations across time. Recent work has shown that the format of VWM might not be as persistent and stable throughout the delay as previously thought ( 18 , 19 ). Frontal regions display dynamic population coding across the delay during the maintenance of category ( 20 ) and spatial contents in the absence of interference ( 21 , 22 ), and also show dynamic recoding of the memoranda after sensory distraction ( 23 , 24 ). The visual cortex in humans displays dynamic coding of contents during high load trials ( 25 ) and during a spatial VWM task ( 26 ). However, it is not yet clear whether dynamic coding of VWM might help evade sensory distraction in human visual areas.

Another line of evidence suggests that perception could potentially be segregated from VWM representations using stable non-overlapping coding spaces ( 27 ). For example, evidence from neuroanatomy indicates that the sensory bottom-up visual pathway primarily projects to the cytoarchitectonic Layer 4 in V1, while feedback projections culminate in superficial and deep layers of the cortex ( 28 ). Functional results are in line with neuroanatomy by showing that VWM signals preferentially activate the superficial and deep layers in humans ( 29 ) and non-human primates ( 30 ), while perceptual signals are more prevalent in the middle layers ( 31 ). In addition to laminar separation, regional multiplexing of multiple items could potentially rely on rotated representations, as seen in memory and sensory representations orthogonally coded in the auditory cortex ( 32 ) and in the storage of a sequence of multiple spatial locations in the prefrontal cortex (PFC) ( 33 ). Non-overlapping orthogonal representations have also been seen in both humans and trained recurrent neural networks as a way of segregating attended and unattended VWM representations ( 34 – 36 ).

Here we investigated whether the concurrent presence of VWM and sensory information is compatible with predictions offered by dynamic coding or by stable non-aligned coding spaces. For this, we reanalyzed an open-access fMRI dataset by Rademaker et al. ( 8 ) where participants performed a delayed-estimation VWM task with and without sensory distraction. To investigate dynamic coding, we employed a temporal cross-decoding analysis that assessed how well the multivariate code encoding VWM generalizes from one time point to another ( 22 , 37 – 39 ), and a temporal neural subspace analysis that offered a sensitive way of examining the alignment of neural populations coding for VWM at different time points. To assess the non-overlapping coding hypothesis, we used neural subspaces ( 21 , 26 , 32 ) to see whether temporally stable representations of the VWM target and the sensory distractor are coded in separable neural populations. Finally, we examined how the multivariate VWM code changes during distractor trials compared to the no-distractor VWM format.

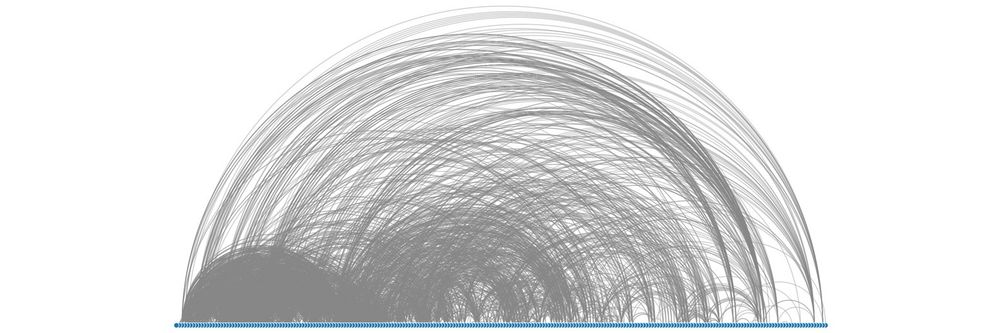

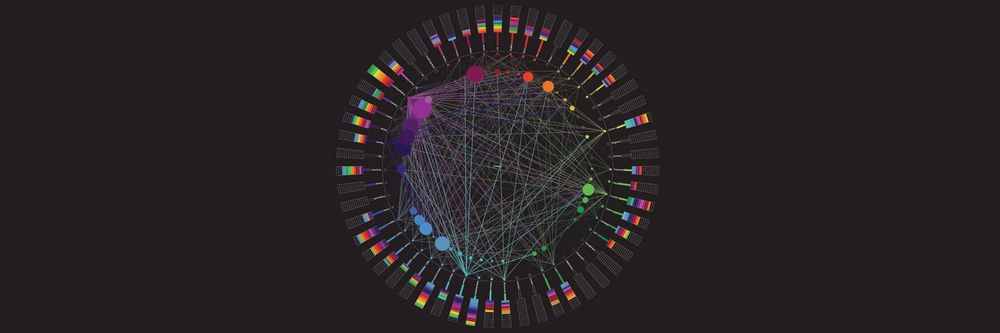

Temporal cross-decoding in distractor and no-distractor trials

In the previously published study ( 8 ) participants completed a VWM task where on a given trial they were asked to remember the orientation of a grating, which they then had to recall at the end of the trial. The delay period was either left blank (no-distractor) or a noise or randomly oriented grating distractor was presented ( Fig. 1a ). To investigate the dynamics of the VWM code, we examined how the multivariate pattern of activity encoding VWM memoranda changed across the duration of the delay period. To do so, we ran a temporal cross-decoding analysis where we trained a decoder (periodic support vector regression, see ( 40 )) on the target orientation, separately for each time point, and tested it on all time points in turn in a cross-validated fashion. If the VWM memoranda were encoded with the same code at all time points, the trained decoder would generalize to other time points, indicated by similar decoding accuracies on the diagonal and off-diagonal elements of the matrix. However, if the code exhibited dynamic properties, despite information about the memoranda being present (above-chance decoding on the diagonal of the matrix), both off-diagonal elements corresponding to a given on-diagonal element would have lower decoding accuracies ( Fig. 1b ). Such off-diagonal elements are considered an indication of a dynamic code.

Task and temporal cross-decoding.

a) On each trial an oriented grating was presented for 0.5 s followed by a delay period of 13 s ( 8 ). In a third of the trials a noise distractor was presented for 11 s during the middle of the delay; in another third another orientation grating was presented; one third of trials had no distractor during the delay. b) Illustration of dynamic coding elements. An off-diagonal element had to have a lower decoding accuracy compared to both corresponding diagonal elements (see Methods for details). c) Temporal generalization of the multivariate code encoding VWM representations in three conditions across occipital and parietal regions. Across-participant mean temporal cross-decoding of no-distractor trials. Black outlines: matrix elements showing above-chance decoding (cluster-based permutation test; p < 0.05). Blue outlines with dots: dynamic coding elements; parts of the cross-decoding matrix where the multivariate code fails to generalize (off-diagonal elements having lower decoding accuracy than their corresponding two diagonal elements; conjunction between two cluster-based permutation tests; p < 0.05). d) Same as c), but noise distractor trials. e) Same as c), but orientation distractor trials. f) Dynamicism index; the proportion of dynamic coding elements across time. High values indicate a dynamic non-generalizing code, while low values indicate a generalizing code. Time indicates the time elapsed since the onset of the delay period.
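The criterion for a dynamic coding element can be sketched in a few lines. The example below is a simplified illustration, not the analysis used in the study: it replaces the cluster-based permutation tests with a fixed `margin` threshold, and the function name `dynamic_elements` and the accuracy values are hypothetical.

```python
def dynamic_elements(accuracy, margin=0.0):
    """Flag off-diagonal elements lower than both corresponding diagonal elements.

    accuracy[i][j] is the decoding accuracy when training at time i and
    testing at time j. The fixed `margin` is an assumption that stands in
    for a proper statistical test.
    """
    n = len(accuracy)
    flags = [[False] * n for _ in range(n)]
    for i in range(n):
        for j in range(n):
            if i == j:
                continue
            off_diagonal = accuracy[i][j] + margin
            # Dynamic only if generalization is worse than BOTH diagonals.
            if off_diagonal < accuracy[i][i] and off_diagonal < accuracy[j][j]:
                flags[i][j] = True
    return flags


# Toy cross-decoding matrix for three time points (invented values).
toy_accuracy = [
    [0.80, 0.75, 0.50],
    [0.78, 0.80, 0.76],
    [0.50, 0.74, 0.80],
]
flags = dynamic_elements(toy_accuracy, margin=0.1)
flagged = [(i, j) for i in range(3) for j in range(3) if flags[i][j]]
print(flagged)
```

In this toy matrix the code generalizes between neighboring time points but not between the first and last, so only those two off-diagonal elements are flagged.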

We ran the temporal cross-decoding analysis for the three VWM delay conditions: no-distractor, noise distractor and orientation distractor (feature-matching distractor). First, we examined each element of the cross-decoding matrix to test whether decoding accuracies were above chance. In all three conditions and throughout all ROIs, we found clusters where decoding was above chance ( Fig. 1c-e , black outline; nonparametric cluster-permutation test against null; all clusters p < 0.05) from as early as 4 s after the onset of the delay period. We found that decoding on the diagonal was highest during no-distractor compared to noise and orientation distractor trials in most regions of interest (ROI; Fig. 4a ).

Second, we examined off-diagonal elements to assess whether there was any indication that they reflected a non-generalizing dynamic code (see Methods for full details). Despite a high degree of temporal generalization, we found dynamic coding clusters in all three conditions. Some degree of dynamic coding was observed in all ROIs but LO2 in the noise distractor and no-distractor trials, while it was only present in V1, V2, V3, V4, and IPS in the orientation distractor condition ( Fig. 1c-e , blue outline). The difference between noise and orientation distractor conditions could not be explained by the amount of information present in each ROI, as the decoding accuracy of the diagonal was similar across all ROIs in both the noise and orientation distractor conditions ( Fig. 4a ). We saw a nominally larger number of dynamic coding elements in V1, V2 and V3AB during the noise distractor condition and in V3 during the no-distractor condition ( Fig. 1d ).

To qualitatively compare the amount of dynamic coding in the three conditions across the delay period, we calculated a dynamicism index ( 22 ) ( Fig. 1f ; see Methods), which measured the multivariate code’s uniqueness at each time point; more precisely, the proportion of dynamic elements corresponding to each diagonal element. High values indicate a dynamic code and low values indicate a generalizing code. Across all conditions, most dynamic elements occurred between the encoding and early delay periods (4-8 s), and the late delay and retrieval (14.4-16.8 s). Interestingly, during the noise distractor trials in V1 we also saw dynamic coding during the middle of the delay period; the multivariate code not only changed during the onset and offset of the noise stimulus, but also during its presentation and throughout the extent of the delay.
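A dynamicism index of this kind can be sketched as follows. The exact definition is given in the Methods, which are not reproduced here, so this example assumes that the elements "corresponding" to a diagonal element are the off-diagonal entries in its row and column. The function name and the toy flags are hypothetical.

```python
def dynamicism_index(dynamic_flags):
    """Proportion of dynamic elements in the row and column of each time point.

    dynamic_flags[i][j] is True when the (train i, test j) element was
    classified as dynamic. Using row plus column is an assumed reading of
    'elements corresponding to each diagonal element'.
    """
    n = len(dynamic_flags)
    index = []
    for t in range(n):
        related = [dynamic_flags[t][j] for j in range(n) if j != t]
        related += [dynamic_flags[i][t] for i in range(n) if i != t]
        index.append(sum(related) / len(related) if related else 0.0)
    return index


# Toy flags for three time points: only the first and last fail to generalize.
toy_flags = [
    [False, False, True],
    [False, False, False],
    [True, False, False],
]
index = dynamicism_index(toy_flags)
print(index)
```

Plotting such an index against time gives one curve per condition, where peaks mark the periods in which the code is most time-specific.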

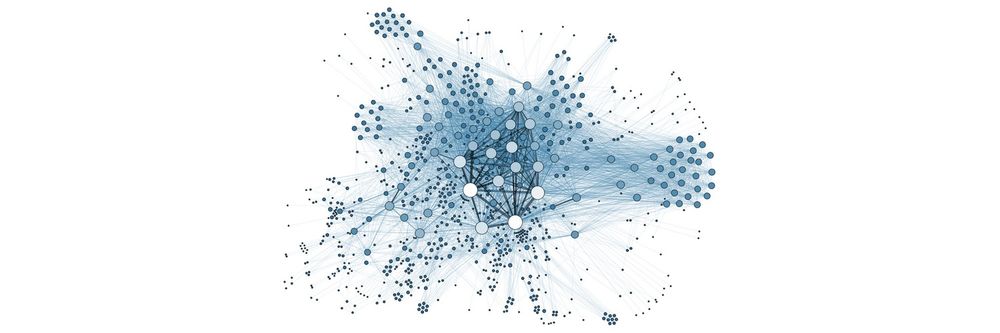

Dynamics of VWM neural subspaces across time

The temporal cross-decoding analysis revealed more dynamic coding in the early visual cortex primarily during the early and late delay phase and a more generalized coding throughout the delay in higher-order regions. In order to understand the nature of these effects in more detail, we conducted a separate series of analyses that directly assessed the neural subspaces in which the orientations were encoded and how these potentially changed across time. Specifically, we followed a previous methodological framework ( 26 ) and applied a principal component analysis (PCA) to the high-dimensional activity patterns at each time point to identify the two axes that explained maximal variance across orientations (see Fig. 2 and Methods).

Assessing the dynamics of neural subspaces in V1-V3AB.

a) Schematic illustration of the neural subspace analysis. A given data matrix (voxels x orientation bins) was subjected to a principal components analysis and the first two dimensions were used to define a neural subspace onto which a left-out test data matrix was projected. This resulted in a matrix of two coordinates for each orientation bin and was visualized (see right). The x and y axes indicate the first two principal components. Each color depicts an angular bin. b) Schematic illustration of the calculation of an above-baseline principal angle (aPA). A principal angle (PA) is the angle between the 2D PCA-based neural subspaces (as in a) for two different time points t1 and t2. A small angle would indicate alignment of coding spaces; an above-baseline angle would indicate a shift in the coding space. The above-baseline principal angle (aPA) is the angle for a comparison between two time points (t1, t2) minus the angle between cross-validated pairs of the same time points. c) Each row shows a projection that was estimated for one of two time ranges (middle and late delay) and then applied to all time points (using independent, split-half cross-validated data). Opacity increases from early to late time points. For visualization purposes the subspaces were estimated on a participant-aggregated ROI ( 26 ). Fig. S1 depicts the same projections as neural trajectories. d) aPA between all pairwise time point comparisons (nonparametric permutation test against null; FDR-corrected p < 0.05) averaged across 1,000 split-half iterations. Corresponding p-values are found in Supplementary Table 1 .

First, we visualized the consistency of the neural subspaces across time. For this, we computed low-dimensional 2D neural subspaces for a given time point and projected left-out data from six time points during the delay onto this subspace ( 26 , 32 ). A projection of data from a single time point resulted in four orientation bin values placed within the subspace ( Fig. 2a , colored circles indicate orientation). Taking into account projected data from all timepoints, if the VWM code were generalizing, we would see a clustering of orientation points in a subspace; however, if orientation points were scattered around the neural subspace, this would show a non-generalizing code.

We examined the projections in a combined ROI spanning V1-V3AB aggregated across participants. We projected left-out data from all six time point bins onto subspaces generated from the early (7.2 s), middle (12 s), and late (16.8 s) time point data for each of the three conditions. Overall, the results showed generalization across time with some exceptions ( Fig. 2c , Fig. S1 ). The clustering of orientation bins in the no-distractor condition was most pronounced ( Fig. 4a ). In contrast, the noise distractor trials showed some degree of dynamic coding, as seen in the lower variance explained when early time points were projected onto the middle subspace and when early and middle time points were projected onto the late subspace ( Fig. 2c , Fig. S1 ).

To quantify the visualized changes, we measured the alignment between each pair of subspaces by calculating the above-baseline principal angle ( Fig. 2b ) within the combined V1-V3AB ROI. The above-baseline principal angle (aPA) measures the alignment between the 2D subspaces encoding the VWM representations: the larger the angle, the weaker the alignment between two subspaces, indicating a change in the neural coding space. Unlike the projections described above, the aPA was calculated participant-wise. Using a split-half approach, we measured the angle between each split-pair of subspaces from different time points and subtracted the angle measured between the two splits within each subspace, with the latter acting as a null baseline.

All three conditions showed significant aPAs ( Fig. 2d ; cyan stars; permutation test; p < 0.05, FDR-corrected). Consistent with the results of the cross-decoding analysis, the early (4.8 s) and late (16.8 s) delay subspaces showed the highest number of significant pairwise aPAs in all conditions; in noise distractor trials, every pairwise aPA involving the early or late subspace was significant. The three conditions each had two significant aPAs between time points in the middle of the delay period.

Alignment between distractor and target subspaces in orientation distractor trials

Next, we assessed any similarity in encoding between the memorized orientation targets and the orientation distractors by focusing on those trials where both occurred. First, we examined whether the encoding of the sensory distractor is stable across its entire presentation duration (1.5 s - 12.5 s after target onset) using the same approach as for the VWM target ( Fig. 1e ). We found stable coding of the distractor in all ROIs with only a few dynamic elements in V2 and V3 ( Fig. 3a , Fig. S2 ). We then assessed whether the sensory distractor had a similar code to the VWM target by examining whether the multivariate code across time generalizes from the target to the distractor and vice versa. When cross-decoded, the sensory distractor ( Fig. 3c ) and target orientation ( Fig. 3b ) had lower decoding accuracies in the early visual cortex compared to when trained and tested on the same label-type, indicative of a non-generalizing code. Such a difference was not seen in higher-order visual regions, as the decoding of the sensory distractor was low to begin with ( Fig. S2 ).

Generalization between target and distractor codes in orientation distractor VWM trials in V1-V3AB.

a) Across-participant mean temporal cross-decoding of the sensory distractor. Black outlines: matrix elements showing above-chance decoding (cluster-based permutation test; p < 0.05). Blue outlines with dots: dynamic coding elements (conjunction between two cluster-based permutation tests; p < 0.05). b) Same as a), but the decoder was trained on the target and tested on the sensory distractor in orientation VWM trials. c) Same as a), but trained on the sensory distractor and tested on the target. See Fig. S2 for ROIs from V4-LO2. d) Left: projection of left-out target (green) and sensory distractor (gray) onto an orientation VWM target neural subspace. Right: same as left, but the projections are onto the sensory distractor subspace. e) Principal angle between the sensory distractor and orientation VWM target subspaces ( p = 0.0297, one-tailed permutation test of sample mean). Average across 1,000 split-half iterations. Error bars indicate ± SEM across participants.

Since we found minimal dynamics in the encoding of the distractor ( Fig. 3a ) and target ( Fig. 1e ), we focused on temporally stable neural subspaces that encoded the target and sensory distractor. We computed stable neural subspaces where we disregarded the temporal variance by averaging across the whole delay period and binned the trials either based on the target orientation ( Fig. 3d , left subpanel) or the distractor orientation ( Fig. 3d , right subpanel). We then projected left-out data binned based on the target ( Fig. 3d , green quadrilateral) or the distractor ( Fig. 3d , gray quadrilateral). This projection provided us with both a baseline (as when training and testing on the same label) and a cross-generalization. Unsurprisingly, the target subspace explained the left-out target data well ( Fig. 3d , left subpanel, green quadrilateral); however, the target subspace explained less variance of the left-out distractor data ( Fig. 3d , left subpanel, gray quadrilateral), as qualitatively seen from the smaller spread of the sensory distractor orientations. A similar but less pronounced dissociation between projections was seen in the distractor subspace ( Fig. 3d , left, quadrilateral in green) with the distractor subspace better explaining the left-out distractor data. We quantified the difference between the target and distractor subspaces and found a significant aPA between them ( p = 0.0297, one-tailed nonparametric permutation test; Fig. 3e ). These results provide evidence for the presence of separable stable neural subspaces that might enable the multiplexing of VWM and perception across the extent of the delay period.

Impact of distractors on VWM multivariate code

To further assess the impact of distractors on the available VWM information, we examined the decoding accuracies of distractor and no-distractor trials across time. Decoding accuracy was higher in the no-distractor trials than in both orientation and noise distractor trials in all ROIs except IPS ( Fig. 4a, red and blue lines , p < 0.05, cluster permutation test) across several stages of the delay period. To further assess how distractors affected the delay period information, we increased sensitivity by collapsing across the delay period, because time courses were comparable in all conditions ( Fig. 4a ). To assess the degree to which VWM encoding generalized from no-distractor to distractor trials, we trained a decoder on no-distractor trials and tested it on both types of distractor trials ( Fig. 4b noise- and orientation-cross). We expressed the decoding accuracy of each distractor condition as a proportion of the decoding accuracy in the no-distractor condition. Values close to one indicate comparable information, while values below one mean the decoder does not generalize well. We found that the cross-decoding accuracies were significantly lower than the no-distractor decoding accuracy in all ROIs but V4 (in both noise and orientation) and LO2 (only noise). Thus, in most areas the decoder did not generalize well from the no-distractor to distractor conditions. However, the total amount of information in distractor trials was generally slightly lower ( Fig. 4a ). Thus, we also compared the generalization to a decoder trained and tested on the same distractor condition ( Fig. 4b noise- and orientation-within), which might thus be able to extract more information. We found that information indeed recovered in areas V2 and V3AB in the noise distractor condition ( Fig. 4b , pairwise permutation test). Thus, there was more information in the noise distractor condition, but it was not accessible to a decoder trained only on no-distractor trials.
Additionally, a temporal cross-decoding analysis where all training time points were no-distractor trials had less dynamic coding in early visual regions ( Fig. S3 ) when compared to the temporal cross-decoding matrix when trained and tested on noise distractor trials ( Fig. 1d ). These results indicate a change in the VWM format between the noise distractor and no-distractor trials.

Cross-decoding between distractor and no-distractor conditions.

a) Decoding accuracy (feature continuous accuracy; FCA) across time for train and test on no-distractor trials (purple), train and test on noise distractor trials (dark green) and train and test on orientation distractor trials (light green). Horizontal lines indicate clusters where there is a difference between two time courses (all clusters p < 0.05; nonparametric cluster permutation test, see color code on the right). b) Decoding accuracy as a proportion of no-distractor decoding estimated on the averaged delay period (4-16.8s). Nonparametric permutation tests compared the decoding accuracy of each analysis to the no-distractor decoding baseline (indicated as a dashed line) and between a decoder trained and tested on distractor trials (noise- or orientation-within) and a decoder trained on no-distractor trials and tested on distractor trials (noise or orientation-cross). FDR-corrected across ROIs. * p < 0.05, *** p < 0.001. Corresponding p -values found in Supplementary Table 2 .

We examined the dynamics of visual working memory (VWM) with and without distractors and explored the impact of sensory distractors on the coding spaces of VWM contents in visual areas by reanalyzing previously published data ( 8 ). Participants completed a task during which they had to maintain an orientation stimulus in VWM. During the delay period either no distractor, an orientation distractor, or a noise distractor was presented. We assessed two potential mechanisms that could help concurrently maintain the superimposed sensory and memory representations. First, we examined whether changes were observable in the multivariate code for memory contents across time, which we term dynamic coding. For this we used two different analyses: temporal cross-classification and a direct assessment of angles between coding spaces. We found evidence for dynamic coding in all conditions, but there were differences in these dynamics between conditions and regions. Dynamic coding was most pronounced during the noise distractor trials in early visual regions. Second, we assessed the complementary question of temporally stable coding spaces. We computed the stable neural subspaces by averaging across the delay period. We saw that coding of the VWM target and concurrent sensory distractors occurred in different stable neural subspaces. Finally, we observed that the format of the multivariate VWM code during noise distraction differed from the VWM code when distractors were not present.

Dynamic encoding of VWM contents has been examined repeatedly. Temporal cross-decoding analyses have been used in a number of non-human primate electrophysiology and human fMRI studies ( 15 , 22 , 23 , 25 , 26 , 38 , 41 ). Spaak et al. ( 22 ) found dynamic coding in the non-human primate PFC during a spatial VWM task. They observed a change in the multivariate code between different stages: a first shift between the encoding and maintenance periods, and a second shift between the maintenance and retrieval periods. The initial transformation between the encoding and maintenance periods might recode the percept of the target into a stable VWM representation, whereas the second might transform the stable memoranda into a representation suited for initiation of motor output. A similar dynamic coding pattern was also observed in human visual regions using neuroimaging ( 26 ). In the present study, we find a comparable pattern of results in all three conditions, where the multivariate code changes between the early and middle delay periods and between the middle and late delay periods.

When noise distractors are added to the delay period we find evidence of additional coding shifts in V1 during the middle of the delay. Previous research in non-human primates has shown that the presentation of a distractor induces a change in multivariate encoding for VWM in lateral PFC (lPFC) ( 23 ). More precisely, a lack of generalization was observed between the population code encoding VWM before the presentation of a distractor (first half of the delay) and after its presentation (the second half). Additionally, continuous shifts in encoding have been observed in the extrastriate cortex throughout the extent of the delay period when decoding multiple remembered items at high VWM load ( 25 ). The dynamic code has been interpreted to enable multiplexing of representations when the visual cortex is overloaded by the maintenance of multiple stimuli at once. Future research could examine how properties of the distractor and of the target stimulus could interact to lead to dynamic coding. One intriguing hypothesis is that distractors that perturb the activity of feature channels that are used to encode VWM representations induce changes in its coding space over time. It is important to note that in this experiment, the activation of the encoded target features was highest for the noise stimulus. Thus the shared spatial frequencies between noise distractor and the VWM contents potentially contribute to a more pronounced dynamic coding effect.

In a complementary analysis we directly assessed subspaces in which orientations were encoded in VWM. We defined the subspaces for three different time windows, early, middle and late. We find no evidence that the identity of orientations is confusable across time, e.g. we do not observe 45° at one given time point being recoded as 90° from a different time point. Such dynamics have been previously observed in the rotation of projected angles within a fixed neural subspace ( 32 , 34 ). Rather, we find a decreased generalization between neural subspaces at different time points, as previously observed in a spatial VWM task ( 26 ). These results suggest that the temporal dynamics across the VWM trial periods are driven by changes in the coding subspace of VWM. We do observe a preservation of the topology of the projected angles, as more similar angles remained closer together (e.g. the bin containing 45° was always closer to the bin containing 0° and 90°). Such a topology has been seen in V4 during a color perception task ( 42 ).

We also find evidence that the VWM contents are encoded in a different way depending on whether a noise distractor is presented or not. The decoder trained on no-distractor trials does not generalize well, presumably because it fails to fully access all the information present in noise distractor trials. If the decoders are trained directly on the distractor conditions the VWM related information is much higher. Additionally, we see that the code generalizes better across time when training on no-distractor trial time points and testing on noise distractor trials. This may imply that by training our decoder on the no-distractor trials we are able to uncover an underlying stable population code encoding VWM in noise distractor trials. Consistent with this finding, Murray et al. ( 21 ) demonstrated that subspaces derived on the delay period could still generalize to the more dynamic encoding and retrieval periods, albeit not perfectly.

Interestingly, we found limited dynamic coding in the orientation distractor condition; primarily a change in the code between the early delay and middle delay periods was observed. Nonetheless, we find distinct temporally stable coding spaces in which sensory distractors and memory targets are encoded. These results correspond to prior research demonstrating a rotated format between perception and memory representations ( 32 ), attended and unattended VWM representations in both humans and recurrent-neural networks trained on a 2-back VWM ( 34 ) and serial retro-cueing tasks ( 35 , 43 ). Additionally, similar rotation dynamics have been observed between multiple spatial VWM locations stored in the non-human primate lPFC ( 33 ). Considering the consistency of these results across different paradigms, we speculate that separate coding spaces might be a general mechanism of how feature-matching items can be concurrently multiplexed within visual regions. With growing evidence of the relationship between VWM capacity and neural resources available within the visual cortex ( 44 – 46 ), further research could examine the number of feature-matching items that can be stored in non-aligned coding spaces.

It remains to be seen whether the degree of change or rotation between subspaces correlates with behavior. In this experiment, we do not observe a behavioral deficit in the feature-matching orientation distractor trials ( 8 ). Yet there is evidence from behavioral and neural studies that show interactions between perception and VWM: feature-matching distractors behaviorally bias retrieved VWM contents ( 47 , 48 ); VWM representations influence perception ( 49 – 52 ); neural visual VWM representations in the early visual cortices are biased towards distractors ( 53 ); and the fidelity of VWM neural representations within the visual cortex negatively correlates with behavioral errors when recalling VWM during a sensory distraction task ( 54 ). In cases where a distractor does induce a drop in recall accuracy or biases the recalled VWM target, VWM and the sensory distractor neural subspaces might overlap more.

To our surprise, we did not observe a significant difference in the coding format of VWM between orientation distractor and no-distractor trials. Our initial expectation was that the VWM coding might undergo changes due to the target representation avoiding the distractor stimulus. However, the presence of a generalizing code between no-distractor and orientation distractor trials, along with the non-aligned coding spaces between the target and distractor in the orientation trials, suggests an alternative explanation. We suggest that the sensory distractor stimulus occupies a distinct coding space throughout its presentation during the delay, while the coding space of the target remains the same in both orientation and no-distractor trials. Layer-specific coding differences in perception and VWM might explain these findings ( 29 , 31 , 55 ). Specifically, the sensory distractor neural subspace might predominantly reside in the bottom-up middle layers of early visual cortices, while the neural subspace encoding VWM might primarily occupy the superficial and deep layers.

We provide evidence for two types of mechanisms found in visual areas during the presence of both VWM and sensory distractors. First, our findings show dynamic coding of VWM within the human visual cortex during sensory distraction and indicate that such activity is not only present within the lPFC. Second, we find that VWM and feature-matching sensory distractors are encoded in shifted coding spaces. Taking into account previous findings, we posit that different coding spaces within the same region might be a more general mechanism of segregating feature-matching stimuli. In sum, these results provide possible mechanisms of how VWM and perception are concurrently present within visual areas.

Participants, stimuli, procedure, and preprocessing

The following section is a brief explanation of parts of the methods covered in Rademaker et al. ( 8 ). Readers may refer to that paper for details. We reanalyzed data from Experiment 1.

Six participants performed two tasks while in the scanner: a VWM task and a perceptual localizer task. In the perceptual localizer task, either a donut-shaped or a circle-shaped grating was presented in 9 second blocks. The participants had to respond whenever the grating dimmed. There were a total of 20 donut-shaped and 20 circle-shaped gratings in one run. Participants completed a total of 15-17 runs.

The visual VWM task began with the presentation of a colored 100% valid cue which indicated the type of trial: no-distractor, orientation distractor, or noise distractor. Following the cue, the target orientation grating was presented centrally for 500 ms, followed by a 13 s delay period. In the trials with the distractor, a stimulus of the same shape and size as the target grating was presented centrally for 11 s in the middle of the delay period ( Fig. 1a ). The orientation and noise distractors reversed contrast at 4 Hz. At the end of the delay, a probe stimulus bar appeared at a random orientation. The participants had to align the bar to the target orientation and had to respond in 3 s.

The orientations for the VWM sample were pseudo-randomly chosen from six orientation bins each consisting of 30 orientations. The orientation distractor and sample were counterbalanced in order not to have the same orientation presented as a distractor. Each run consisted of four trials of each condition. Across three sessions participants completed 27 runs of the task resulting in a total of 108 trials per condition.

The data were acquired using a simultaneous multi-slice EPI sequence with a TR of 800 ms, TE of 35 ms, flip angle of 52°, and isotropic voxels of 2 mm. The data were preprocessed using FreeSurfer and FSL and time-series were z-scored across time for each voxel.

Voxel selection

We used the same regions of interest (ROI) as in Rademaker et al. ( 8 ), which were derived using retinotopic mapping. In contrast to the original study, we reduced the size of our ROIs by selecting voxels that reliably responded to both the donut-shaped orientation perception task and the no-distractor VWM task. In order to select reliably activating voxels, we calculated four tuning functions for each voxel: two from the perceptual localizer and two from the no-distractor VWM task. The tuning functions spanned the continuous feature space in bins of 30°. Thus, to calculate the tuning functions, we ran a split-half analysis using stratified sampling where we binned all trials into six bins (of 30°). For both halves, tuning functions were estimated using a GLM that included six orientation regressors (one for each bin) and assumed an additive noise component independent and identically distributed across trials. We calculated Pearson correlations between the no-distractor memory and the perception tuning functions across the six parameter estimates extracted from the GLM, thus generating one memory-memory and one perception-perception correlation coefficient for each voxel.

The same analysis was additionally performed 1,000 times on randomly permuted orientation labels to generate a null distribution for each participant and each ROI. These distributions were used to check for the reliability of voxel activation to perception and no-distractor VWM. After performing Fisher z-transformation on the correlations, we selected voxels that had a value above the 75th percentile of the null distributions in both the memory-memory and perception-perception correlations. This population of voxels was then used for all subsequent analyses. IPS included reliable voxels from retinotopically derived IPS0, IPS1, and IPS2.
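The selection rule described above can be sketched as follows. This is a minimal NumPy illustration rather than the original analysis code: the function name `select_reliable_voxels`, the array layouts, and the choice to pool the null correlations over all voxels and permutations of an ROI are assumptions made for the example.

```python
import numpy as np

def select_reliable_voxels(r_mem, r_perc, null_mem, null_perc, pct=75.0):
    """Return a boolean mask of voxels passing both reliability criteria.

    r_mem, r_perc       : (n_voxels,) split-half Pearson correlations
    null_mem, null_perc : (n_perm, n_voxels) correlations from permuted labels
    Assumption: one pooled null distribution per ROI (all voxels, all permutations).
    """
    # Fisher z-transform; clip so that arctanh stays finite at |r| = 1
    z = lambda r: np.arctanh(np.clip(r, -0.999999, 0.999999))
    thr_mem = np.percentile(z(null_mem), pct)    # 75th percentile of the memory null
    thr_perc = np.percentile(z(null_perc), pct)  # 75th percentile of the perception null
    # A voxel is kept only if it exceeds the threshold in both correlations
    return (z(r_mem) > thr_mem) & (z(r_perc) > thr_perc)
```

Requiring both criteria at once restricts the ROI to voxels whose tuning is reproducible in perception and in memory, which is the conjunction the text describes.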

Periodic support vector regression

We used periodic support vector regression (pSVR) to predict the target orientation from the multivariate BOLD activity ( 40 ). pSVR uses a regression approach to estimate the sine and cosine components of a given orientation independently and therefore accounts for the circular nature of stimuli. In order to have a proper periodic function, orientation labels from the range [0°, 180°) were projected into the range [0, 2π).

We used the support vector regression algorithm using a non-linear radial basis function (RBF) kernel implemented in LIBSVM ( 56 ) for orientation decoding. Specifically, sine and cosine components of the presented orientations were predicted based on multivariate fMRI signals from a set of voxels at specific time points within a trial (see Temporal Generalization ). In each cross-validation fold, we rescaled the training data voxel activation into the range [0, 1] and applied the training data parameters to rescale the test data. For each participant we had a total of three iterations in our cross-validation, where we trained on two thirds (i.e. two sessions) and tested on one third of the data (i.e. the left-out session). We selected three iterations in order to mitigate training and test data leakage (see Temporal Generalization ).
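Two preprocessing steps mentioned above lend themselves to a short illustration: mapping orientation labels onto sine and cosine regression targets, and rescaling voxel activations with parameters estimated on the training data only. The sketch below uses NumPy; the helper names `encode_orientation`, `minmax_fit`, and `minmax_apply` are hypothetical, and the regression itself (RBF-kernel support vector regression in LIBSVM) is not reproduced here.

```python
import numpy as np

def encode_orientation(deg):
    """Map orientations in [0, 180) degrees to angles in [0, 2*pi) and
    return the sine and cosine regression targets."""
    theta = np.deg2rad(np.asarray(deg, dtype=float)) * 2.0
    return np.sin(theta), np.cos(theta)

def minmax_fit(train):
    """Per-voxel minimum and range, estimated on the training data only."""
    lo = train.min(axis=0)
    span = train.max(axis=0) - lo
    span[span == 0] = 1.0  # guard against constant voxels
    return lo, span

def minmax_apply(data, lo, span):
    """Rescale with training-set parameters; test values may fall outside [0, 1]."""
    return (data - lo) / span
```

Fitting the scaling on the training fold and reusing it for the test fold keeps information about the left-out session from entering the decoder, which is why test values are allowed to leave the unit interval.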

After pSVR-based analysis, reconstructed orientations were obtained by plugging the predicted sine and cosine components into the four-quadrant inverse tangent:

$$\theta_p = \operatorname{atan2}(x_p, y_p)$$

where $x_p$ and $y_p$ are pSVR outputs in the test set. Prediction accuracy was measured as the trial-wise absolute angular deviation between predicted orientation and actual orientation:

$$\Delta\theta = \min\left(\left|\theta - \theta_p\right|,\; 2\pi - \left|\theta - \theta_p\right|\right)$$

where $\theta$ is the labeled orientation and $\theta_p$ is the predicted orientation. This measure was then transformed into a trial-wise feature continuous accuracy (FCA) ( 57 ) as follows:

$$\mathrm{FCA} = \frac{\pi - \Delta\theta}{\pi} \times 100\%$$

The final across-trial accuracy was the mean of the trial-wise FCAs. Mean FCA was calculated across predicted orientations from all test sets after cross-validation was complete. The FCA is equivalent to the standard accuracy measured in decoding analyses, falling into the range between 0 and 100%, but extended to the continuous domain. In the case of random guessing, the expected angular deviation is π/2, resulting in chance-level FCA at 50%.
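As a worked illustration of this readout, the following sketch computes the predicted angle, the angular deviation, and the FCA for a single trial. It uses only the Python standard library. Two points are assumptions rather than statements from the text: that the first pSVR output is the sine component and the second the cosine component, and that FCA is linear in the angular deviation (which is consistent with the stated range of 0 to 100% and the chance level of 50%).

```python
import math

def predicted_angle(x_p, y_p):
    """Four-quadrant inverse tangent of the two pSVR outputs, wrapped to [0, 2*pi).
    Assumption: x_p is the predicted sine and y_p the predicted cosine."""
    return math.atan2(x_p, y_p) % (2 * math.pi)

def angular_deviation(theta, theta_p):
    """Absolute circular difference between two angles, in [0, pi]."""
    d = abs(theta - theta_p) % (2 * math.pi)
    return min(d, 2 * math.pi - d)

def fca(theta, theta_p):
    """Trial-wise feature continuous accuracy in percent (assumed linear in deviation)."""
    return (1.0 - angular_deviation(theta, theta_p) / math.pi) * 100.0

# A prediction a quarter of the circle away from the label sits at chance level
chance_example = fca(0.0, math.pi / 2)
```

Averaging `fca` over trials gives the across-trial accuracy; a perfect prediction yields 100 and a prediction on the opposite side of the circle yields 0.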

Temporal cross-decoding

To determine the underlying stability of the VWM code, we ran a temporal cross-decoding analysis using pSVR ( Fig. 1 ). We trained on data from a given time point and then predicted orientations for all time points, using the presented targets as labels. We trained on two-thirds of the trials per iteration and tested on the left-out third. Training and test data were never taken from the same trials, both when testing on the same and different time points.

We used a cluster-based approach to test for significance of above-chance decoding clusters ( 58 ). To determine whether the size of a cluster of above-chance values was significantly larger than expected by chance, we calculated a summed t-value for each cluster. We then generated a null distribution by randomly permuting the sign of the estimated above-chance accuracy (50% was subtracted from each FCA value, such that 0 corresponds to chance level) of all components within the temporal cross-decoding matrix. We calculated the summed t-value for the largest randomly occurring above-chance cluster. This procedure was repeated 1,000 times to estimate a null distribution. The empirical summed t-value of each cluster was then compared to the null distribution to determine significance ( p < 0.05; without control of multiple cluster comparisons).
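The logic of the sign-permutation cluster test can be sketched on a simplified case. The example below is not the analysis used in the paper: it works on a one-dimensional series of time points instead of the two-dimensional cross-decoding matrix, flips signs per participant, and uses a hypothetical cluster-forming threshold of t > 2. All function names are illustrative.

```python
import numpy as np

def t_values(x):
    """One-sample t-statistic against zero for each column of x (participants x time)."""
    n = x.shape[0]
    return x.mean(axis=0) / (x.std(axis=0, ddof=1) / np.sqrt(n))

def cluster_masses(t, thresh):
    """Summed t-values of contiguous runs in which t exceeds thresh."""
    masses, current = [], None
    for val in t:
        if val > thresh:
            current = val if current is None else current + val
        elif current is not None:
            masses.append(current)
            current = None
    if current is not None:
        masses.append(current)
    return masses

def cluster_sign_flip_test(acc, chance=50.0, thresh=2.0, n_perm=1000, seed=0):
    """Return (observed cluster masses, p-value per cluster)."""
    rng = np.random.default_rng(seed)
    x = acc - chance                      # 0 now corresponds to chance level
    observed = cluster_masses(t_values(x), thresh)
    null = np.zeros(n_perm)
    for k in range(n_perm):
        # Assumption: one random sign per participant, applied to all time points
        signs = rng.choice([-1.0, 1.0], size=(x.shape[0], 1))
        masses = cluster_masses(t_values(x * signs), thresh)
        null[k] = max(masses) if masses else 0.0  # largest cluster under the null
    p = [(np.sum(null >= m) + 1) / (n_perm + 1) for m in observed]
    return observed, p
```

Comparing each observed cluster with the largest cluster found in each permutation is what makes the test sensitive to cluster size rather than to single elements.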

Dynamic coding clusters were defined as elements within the temporal cross-decoding matrix where the multivariate code at a given time point did not fully generalize to another time point; in other words, an off-diagonal element was significantly smaller in accuracy compared to its two corresponding on-diagonal elements ( $a_{ij} < a_{ii}$ and $a_{ij} < a_{jj}$ , Fig. 1b ). In order to test for significance of these clusters, we ran two cluster-permutation tests as done in previous studies to define dynamic clusters ( 22 , 26 ). In each test, we subtracted one or the other corresponding diagonal element from the off-diagonal elements ( $a_{ij} - a_{ii}$ and $a_{ij} - a_{jj}$ ). We then ran the same sign permutation test as for the above-chance decoding cluster for both comparisons. An off-diagonal element was deemed dynamic if both tests were significant ( p < 0.05) and it was part of the above-chance decoding cluster.

Following ( 22 ), we also computed the dynamicism index as a proportion of elements across time that were dynamic. Specifically, we calculated the proportion of (off-diagonal) dynamic elements corresponding to a diagonal time point in both columns (corresponding to the test time points) and rows (corresponding to the train time points) of the temporal cross-decoding matrix.
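The element-wise criterion and the index can be illustrated on a single cross-decoding matrix. The sketch below applies only the raw inequality (an off-diagonal element lower than both of its diagonal elements) and omits the permutation-based significance testing described above. The function names and the normalization by the 2(n - 1) off-diagonal elements in a time point's row and column are assumptions for the example.

```python
import numpy as np

def dynamic_mask(acc):
    """Off-diagonal elements lower than both corresponding diagonal elements.

    acc[i, j]: accuracy when training at time i and testing at time j.
    Note: the significance tests and the above-chance cluster requirement
    from the text are omitted in this sketch.
    """
    diag = np.diag(acc)
    mask = (acc < diag[:, None]) & (acc < diag[None, :])
    np.fill_diagonal(mask, False)
    return mask

def dynamicism_index(mask):
    """Proportion of dynamic off-diagonal elements in the row (train time)
    and column (test time) belonging to each diagonal time point."""
    n = mask.shape[0]
    return (mask.sum(axis=0) + mask.sum(axis=1)) / (2 * (n - 1))

acc = np.array([[80.0, 85.0, 50.0],
                [60.0, 80.0, 70.0],
                [50.0, 70.0, 60.0]])
index = dynamicism_index(dynamic_mask(acc))  # -> array([0.75, 0.25, 0.5])
```

An index near one for a given time point means that the code at that time generalizes poorly to most other time points, in both training and testing directions.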

Neural subspaces

We adapted the method from ( 26 ) to calculate two-dimensional neural subspaces encoding VWM information at a given time point. To do so, we used principal component analysis (PCA). To maximize power, we binned trial-wise fMRI activations into four equidistant bins of 45 degrees and averaged the signal across all trials within a bin ( Fig. 2a ). The data matrix X was defined as a p×ν matrix where p = 4 was the four orientation bins, and ν was the number of voxels. We mean-centered the columns (i.e. each voxel) of the data matrix.

This analysis focused on the time points from 4 s to 17.6 s after delay onset. The first TRs were not used since the temporal cross-decoding results showed no above-chance decoding. We averaged across every three TRs, leading to six non-overlapping temporal bins and thus six X matrices. We calculated the principal components (PCs) using eigendecomposition of the covariance matrix for each X and defined the matrix V as a ν × 2 matrix containing the eigenvectors corresponding to the two largest eigenvalues, resulting in six neural subspaces, one for each non-overlapping temporal bin.
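The subspace construction can be sketched in a few lines of NumPy. This is an illustration under stated assumptions, not the original implementation: the function names are hypothetical, and centering the left-out data with the training mean before projection is a choice made here that the text does not specify.

```python
import numpy as np

def neural_subspace(X, n_dims=2):
    """Return (V, mean): V is a (n_voxels, n_dims) orthonormal basis from PCA.

    X: (n_bins, n_voxels) bin-averaged activity, e.g. four orientation bins.
    """
    mean = X.mean(axis=0)
    Xc = X - mean                              # mean-center each voxel (column)
    cov = Xc.T @ Xc / (X.shape[0] - 1)         # voxel-by-voxel covariance
    evals, evecs = np.linalg.eigh(cov)         # eigenvalues in ascending order
    order = np.argsort(evals)[::-1][:n_dims]   # indices of the largest eigenvalues
    return evecs[:, order], mean

def project(X_test, V, mean):
    """Project left-out data onto the subspace; returns (n_bins, n_dims)."""
    return (X_test - mean) @ V
```

For ROIs with many voxels, a singular value decomposition of the centered data matrix yields the same basis without forming the full covariance matrix.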

Neural subspaces across time

For visualization purposes, we used three out of the total of six neural subspaces from the following time points: early (7.2 s), middle (12 s), and late (16.8 s). Following the aforementioned procedure, these subspaces were calculated on half of the trials, as we projected the left-out data onto the subspaces. The left-out data were binned into six temporal bins between 4 s and 17.6 s after target onset with no overlap just like in the calculation of the six subspaces. The projection resulted in a p × 2 matrix P for each projected time bin (resulting in a total of six P matrices). We use distinct colors to plot the temporal trajectories of each orientation bin across time in a 2D subspace flattened ( Fig. 2c ) and not flattened ( Fig. S1 ) across the time dimension. Importantly, the visualization analysis was done on a combined participant-aggregated V1-V3AB region, which included all reliable voxels across the four regions and all six participants (see Voxel Selection ).

To measure the alignment between coding spaces at different times, we calculated an above-baseline principal angle (aPA) between all subspaces ( Fig. 2c ). We used the MATLAB function subspace for an implementation of the method proposed by ( 59 ) to measure the angle between two V matrices. This yields a principal angle between 0° and 90°; the higher the angle, the larger the difference between the two subspaces. In order to avoid overfitting, and as in the visualization analysis, we used a split-half approach to compute the aPA between subspaces. Half of the binned trials were used to calculate $V_{i,A}$ and $V_{j,A}$ and the other half to calculate $V_{i,B}$ and $V_{j,B}$, where A and B refer to the two halves of the split and i and j refer to the two time bins compared. For significance testing, the within-subspace angle (the angle between two splits of the data within a given temporal bin, i.e. $V_{i,A}$ and $V_{i,B}$) was subtracted from the between-subspace PA (the angle between two different temporal bins, e.g. $V_{i,A}$ and $V_{j,B}$). Unlike the visualization analysis, the PA was calculated per participant 1,000 times using different splits of the data on a combined V1-V3AB region that included the reliable voxels across the four regions (see Voxel Selection ). The final aPA value was an average across all iterations for each participant.
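A compact NumPy sketch of the aPA computation for one split is given below. It is not the MATLAB routine used in the paper: the angle is obtained from the singular values of the product of two orthonormal bases, taking the largest principal angle, and both split directions are averaged for the between and within terms. Both of these choices, and the function names, are assumptions made for the illustration.

```python
import numpy as np

def principal_angle_deg(A, B):
    """Largest principal angle (degrees) between the column spaces of A and B."""
    Qa, _ = np.linalg.qr(A)                    # orthonormal basis for span(A)
    Qb, _ = np.linalg.qr(B)                    # orthonormal basis for span(B)
    s = np.linalg.svd(Qa.T @ Qb, compute_uv=False)
    s = np.clip(s, -1.0, 1.0)                  # guard against rounding above 1
    return np.degrees(np.arccos(s.min()))      # smallest cosine = largest angle

def above_baseline_pa(V_iA, V_iB, V_jA, V_jB):
    """Between-time angle minus within-time (split-half) angle.
    Assumption: both split directions are averaged for each term."""
    between = 0.5 * (principal_angle_deg(V_iA, V_jB) + principal_angle_deg(V_iB, V_jA))
    within = 0.5 * (principal_angle_deg(V_iA, V_iB) + principal_angle_deg(V_jA, V_jB))
    return between - within
```

In the full procedure this value would be recomputed for each of the 1,000 random splits and averaged per participant; an aPA near zero means that subspaces from different time bins are no less aligned than two halves of the same time bin.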

Sensory distractor and orientation VWM target neural subspaces

For the orientation VWM target and sensory distractor neural subspace, we followed the aforementioned subspace analysis, but instead of calculating subspaces on six temporal bins, we averaged across the 4-17.6 s delay period and calculated a single subspace. As in the previous analysis, we split the orientation VWM trials in half. We then binned the trials either based on the target orientation or the sensory distractor. For visualization purposes, we projected the left-out data averaged based on the sensory distractor and the target onto subspaces derived from both the sensory distractor and target subspaces. As in the previous visualization, the analysis was run on a participant-aggregated V1-V3AB region.

To calculate the aPA we had the following subspaces: V Target,A , V Dist,A , V Target,B and V Dist,B , where the subspaces were calculated on trials binned either based on the target orientation or the sensory distractor. The aPA was calculated by subtracting the within-subspace angle ( V Target,A and V Target,B , V Dist,A and V Dist,B ) from the sensory distractor and working memory angle ( V Target,A and V Dist,B , V Target,B and V Dist,A ). The split-half aPA analysis was performed 1,000 times and the final value was an average across these iterations for each participant.
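Written out, one reading of this subtraction is the following, where θ(·,·) denotes the principal angle between two subspaces. The text does not state how the two angles in each pair are combined, so averaging them is an assumption made here for illustration:

```latex
\mathrm{aPA} =
  \tfrac{1}{2}\left[\theta\!\left(V_{\mathrm{Target},A}, V_{\mathrm{Dist},B}\right)
                  + \theta\!\left(V_{\mathrm{Target},B}, V_{\mathrm{Dist},A}\right)\right]
  - \tfrac{1}{2}\left[\theta\!\left(V_{\mathrm{Target},A}, V_{\mathrm{Target},B}\right)
                    + \theta\!\left(V_{\mathrm{Dist},A}, V_{\mathrm{Dist},B}\right)\right]
```

Under this reading, an aPA near zero means the target and distractor subspaces differ no more than two halves of the same data do.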

Data availability

The preprocessed data are shared open-access at https://osf.io/dkx6y/ . The analysis scripts and results are shared at https://osf.io/jq3ma/?view_only=8fcef0ef33a047e693c4102e3794319f (the link will become public, i.e. no longer view-only, when the manuscript is published).

Acknowledgements

J.K.D. was funded by the Max Planck Society and BMBF (as part of the Max Planck School of Cognition). J.D.H. was supported by the Deutsche Forschungsgemeinschaft (DFG, Exzellenzcluster Science of Intelligence); SFB 940 “Volition and Cognitive Control”; and SFB-TRR 295 “Retuning dynamic motor network disorders using neuromodulation”. S.W. was supported by Deutsche Forschungsgemeinschaft (DFG) Research Training Group 2386 451 and EXC 2002/1 “Science of Intelligence.” Open access funding provided by Max Planck Society. We thank Rosanne Rademaker, Chaipat Chunharas, and John Serences for collecting and sharing their data open access, without which this reanalysis would not have been possible. We also thank Rosanne Rademaker, Michael Wolff, Amir Rawal, and Maria Servetnik for extensive discussions of the results. We also thank Vivien Chopurian and Thomas Christophel for their feedback on the manuscript.

Author contributions

Conceptualization, J.K.D.; Methodology, J.K.D., S.W., J.S., J.-D.H.; Formal Analysis, J.K.D.; Software, J.K.D., S.W., J.S.; Visualization, J.K.D.; Funding Acquisition, J.K.D., J.-D.H.; Writing – Original Draft Preparation, J.K.D.; Writing – Review & Editing, J.K.D., S.W., J.S., J.-D.H.; Supervision, J.-D.H.

Declaration of interests

The authors declare no competing interests.

Neural trajectories across time.

Same as Figure 2c, but the time dimension is on the z-axis.

Extension of Figure 3 for V4-LO2.

Temporal cross-decoding generalization between distractor and no-distractor VWM trials.

a) Across-participant mean temporal cross-decoding of noise distractor trials when trained on no-distractor trials. b) Same as a), but orientation distractor trials trained on no-distractor trials.

FDR-corrected p -values corresponding to Figure 2d .

FDR-corrected p -values corresponding to Figure 4b .

Article and author information

Jonas Karolis Degutis (for correspondence)

Version history

- Preprint posted : May 23, 2024

- Sent for peer review : May 23, 2024

- Reviewed Preprint version 1 : August 27, 2024

© 2024, Degutis et al.

This article is distributed under the terms of the Creative Commons Attribution License , which permits unrestricted use and redistribution provided that the original author and source are credited.

Views, downloads and citations are aggregated across all versions of this paper published by eLife.

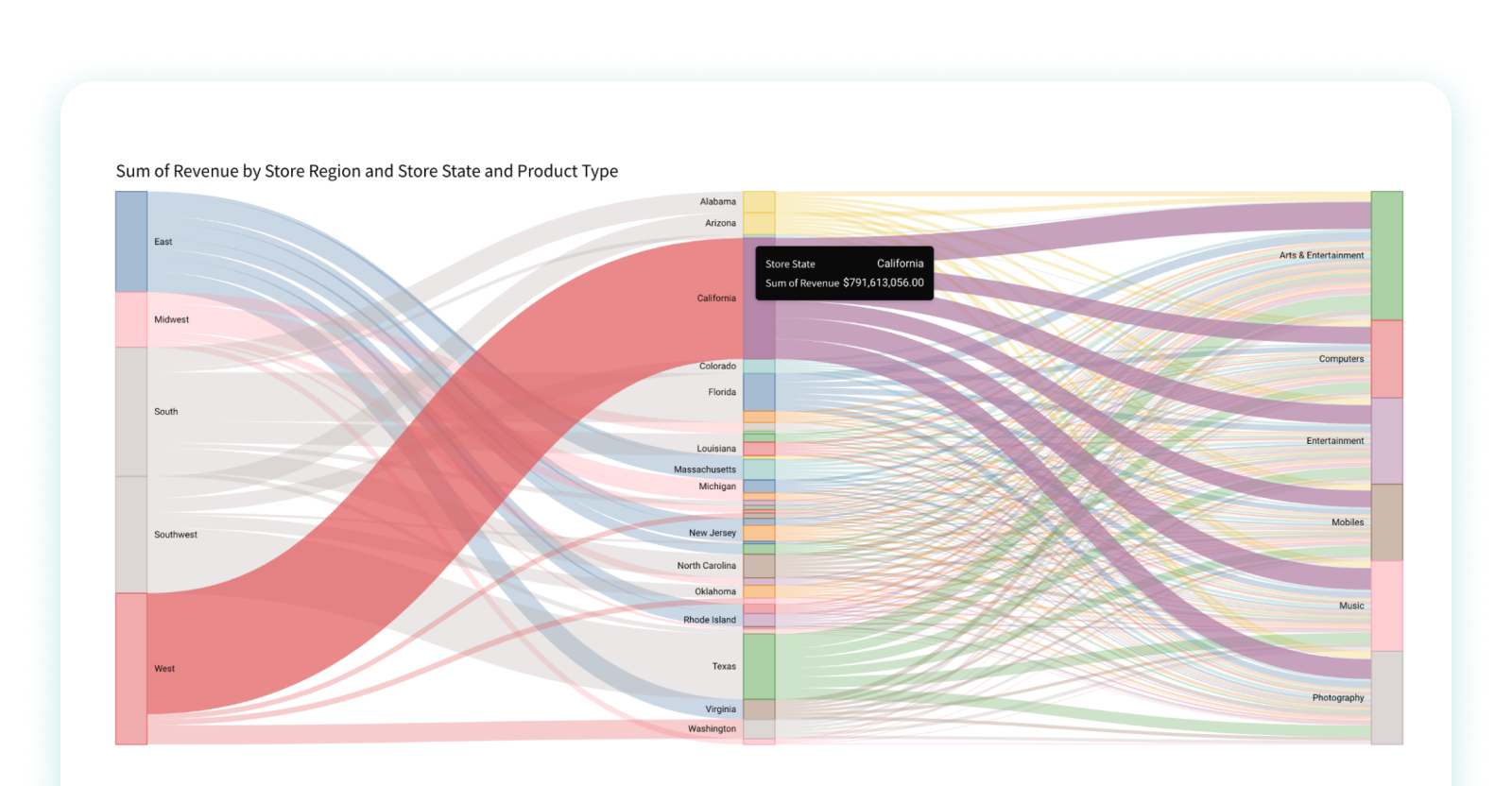

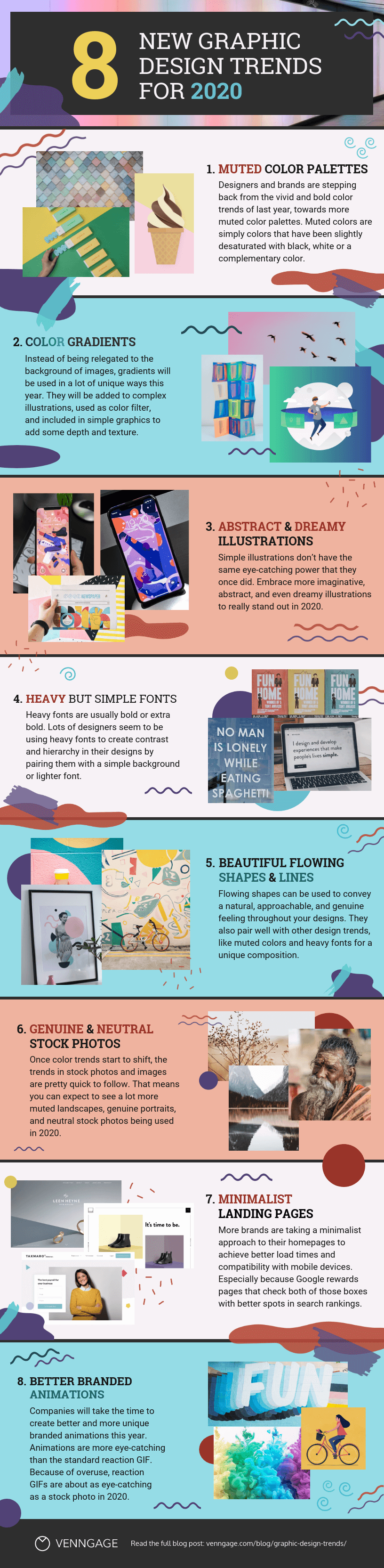

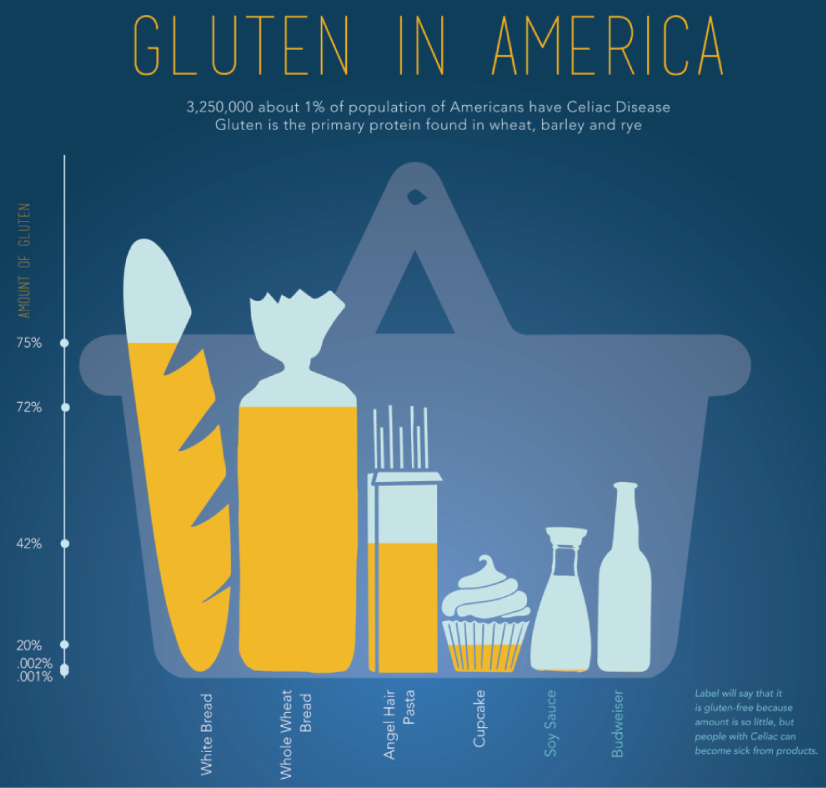

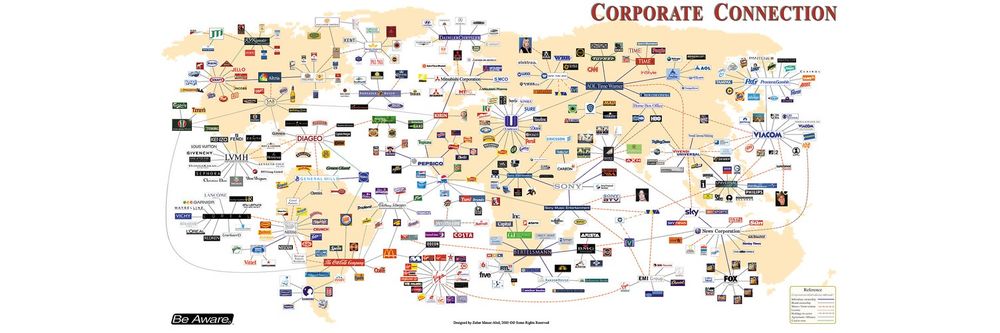

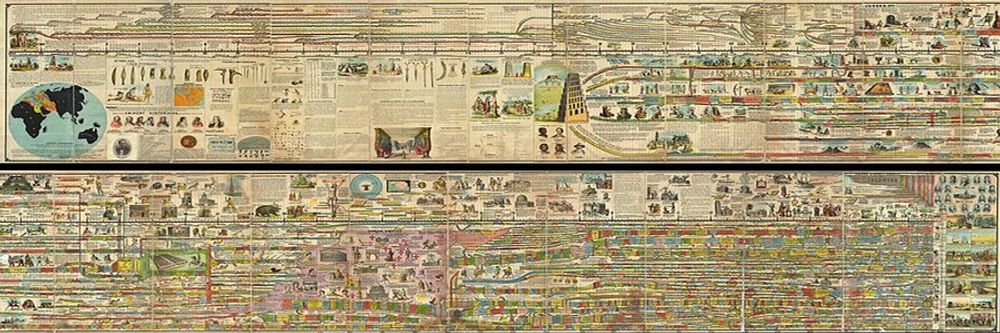

Data visualization is the representation of data through use of common graphics, such as charts, plots, infographics and even animations. These visual displays of information communicate complex data relationships and data-driven insights in a way that is easy to understand.

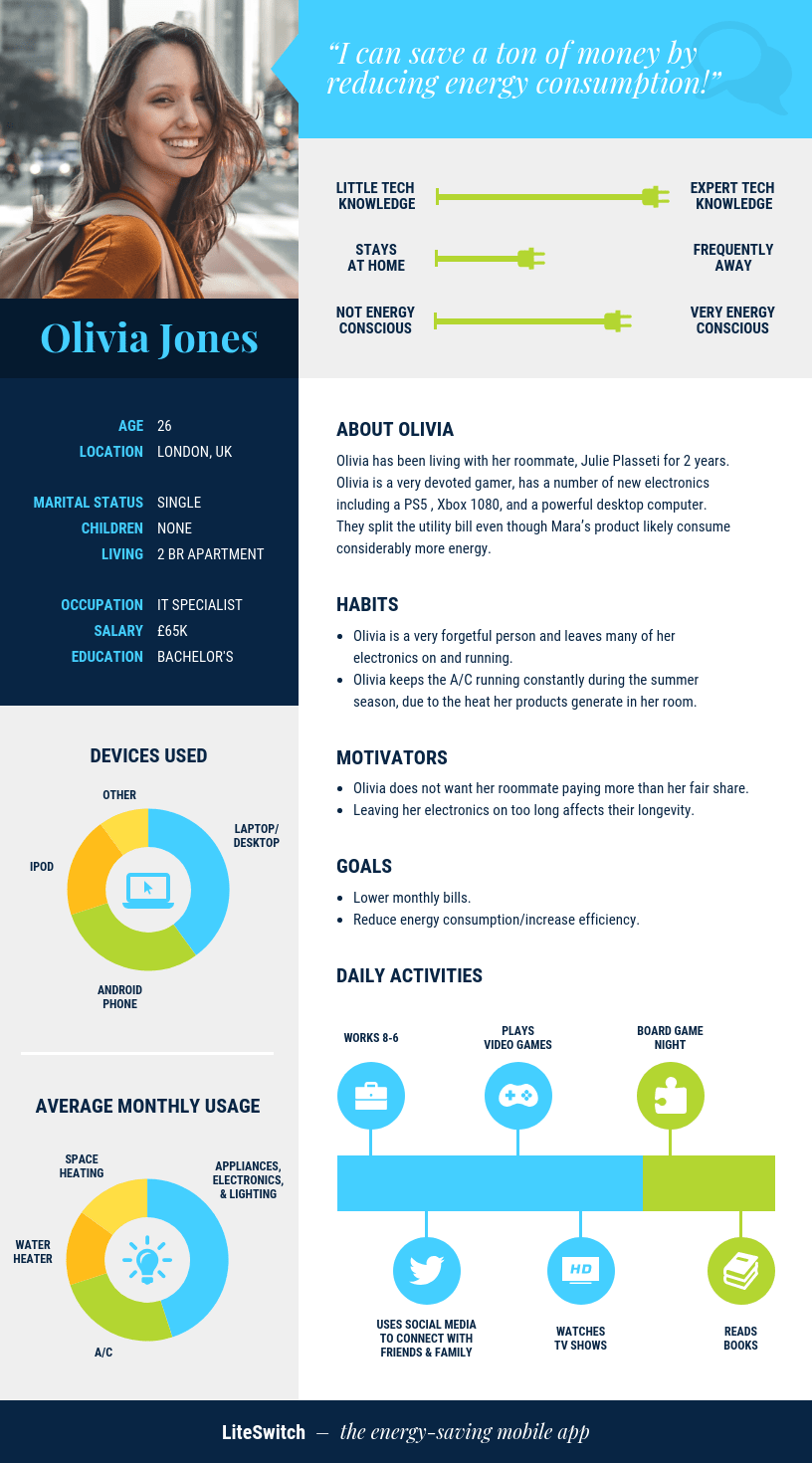

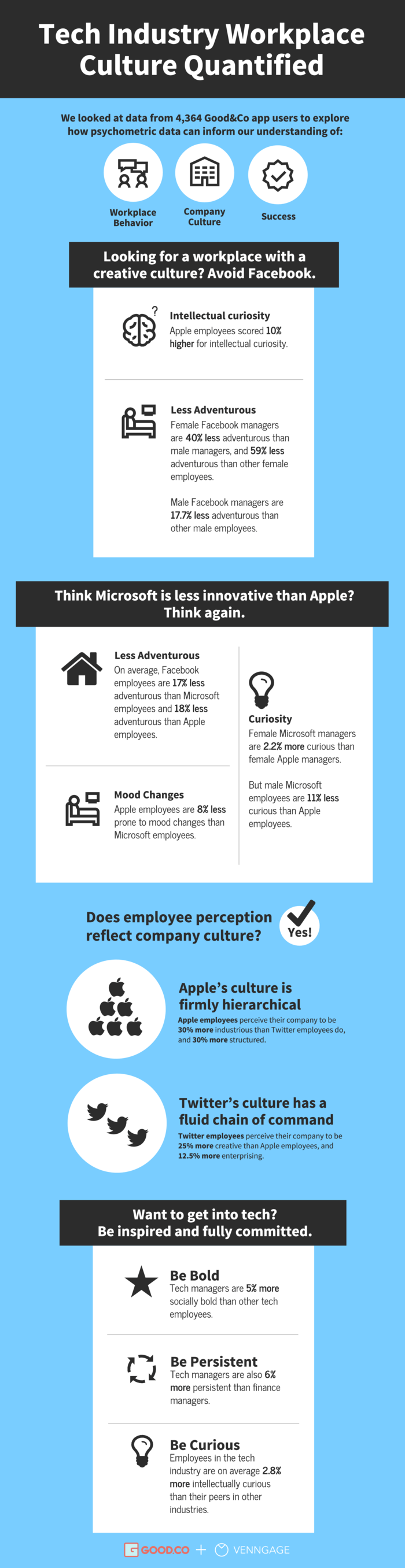

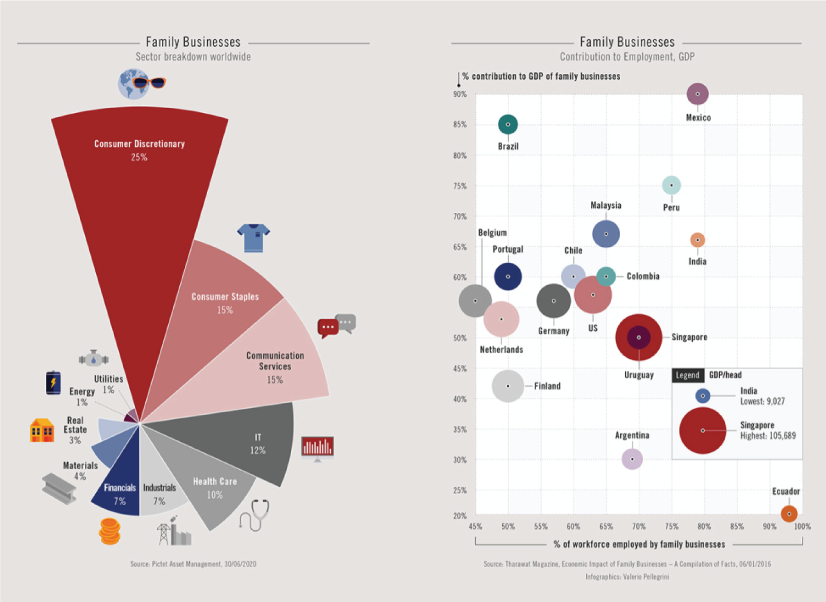

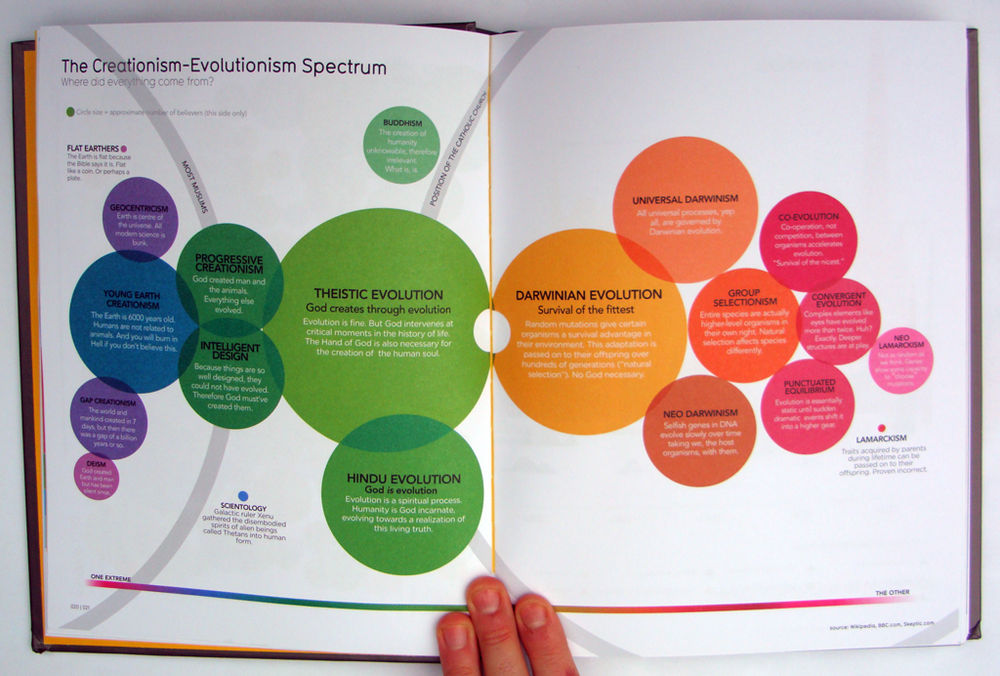

Data visualization can be used for a variety of purposes, and it is not reserved for data teams alone. Management also uses it to convey organizational structure and hierarchy, while data analysts and data scientists use it to discover and explain patterns and trends. Harvard Business Review categorizes data visualization into four key purposes: idea generation, idea illustration, visual discovery, and everyday dataviz. We’ll delve deeper into these below:

Idea generation

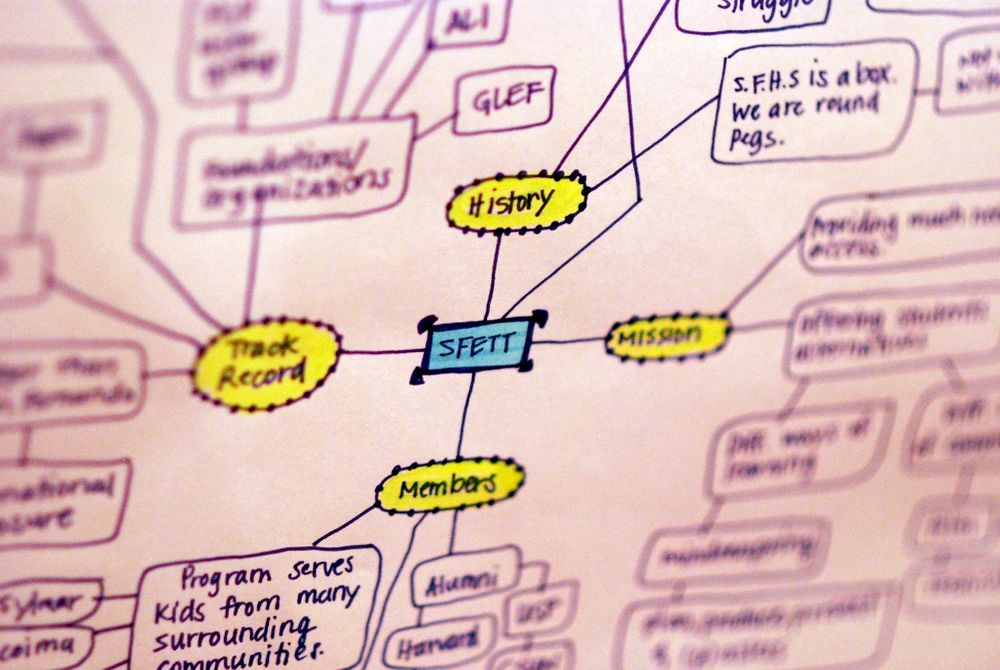

Data visualization is commonly used to spur idea generation across teams. These visualizations are frequently used during brainstorming or Design Thinking sessions at the start of a project, where they support the collection of different perspectives and highlight the common concerns of the collective. While they are usually unpolished and unrefined, they help set the foundation within the project to ensure that the team is aligned on the problem it is looking to address for key stakeholders.

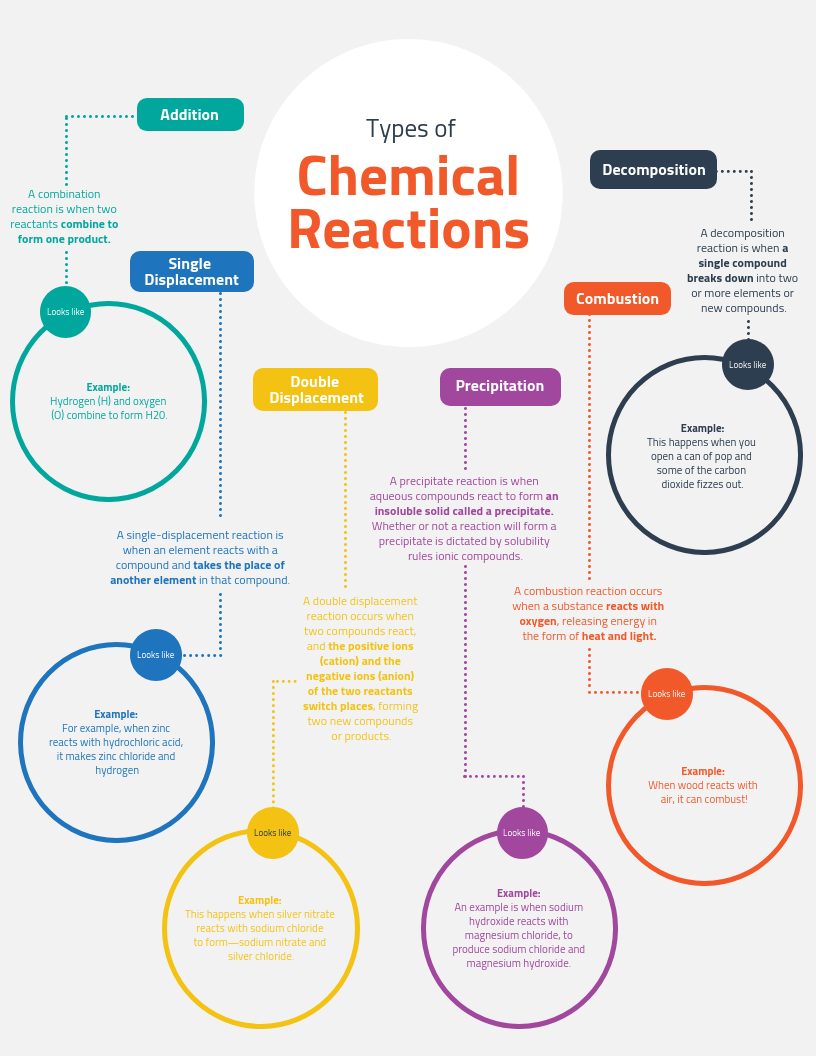

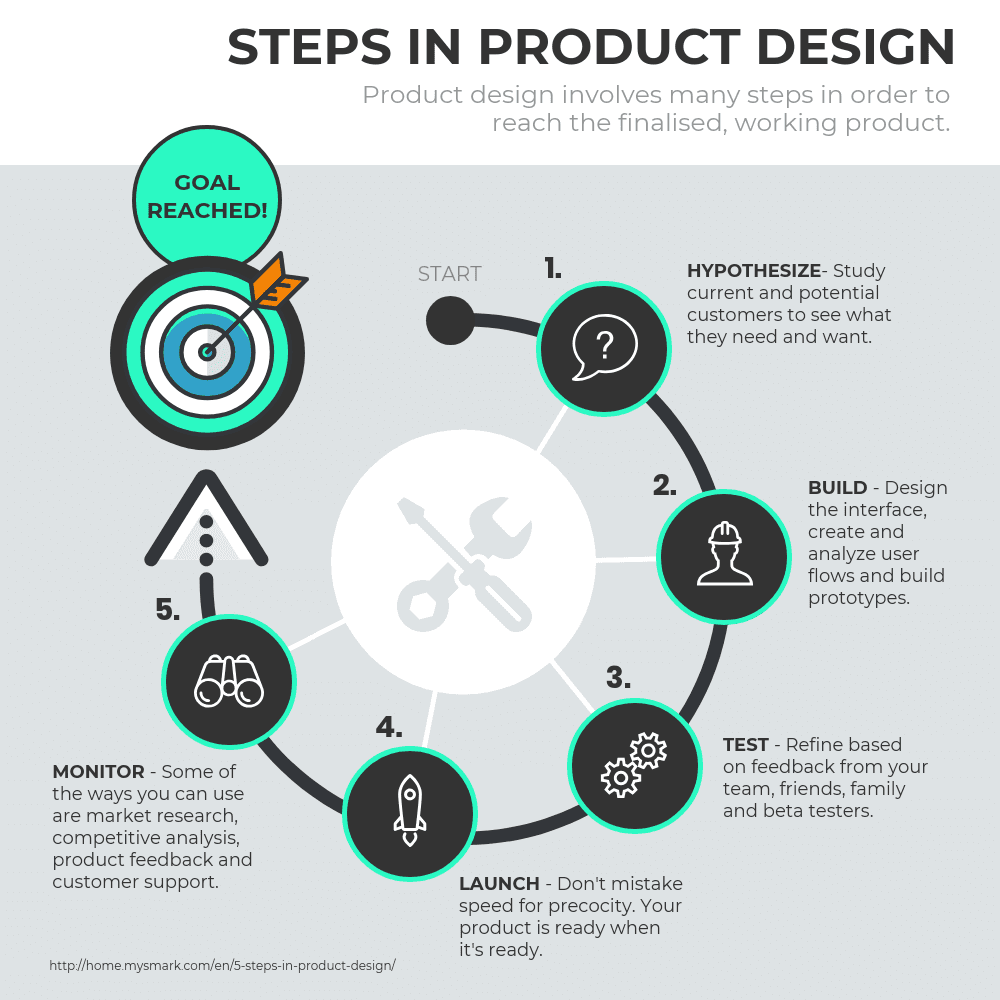

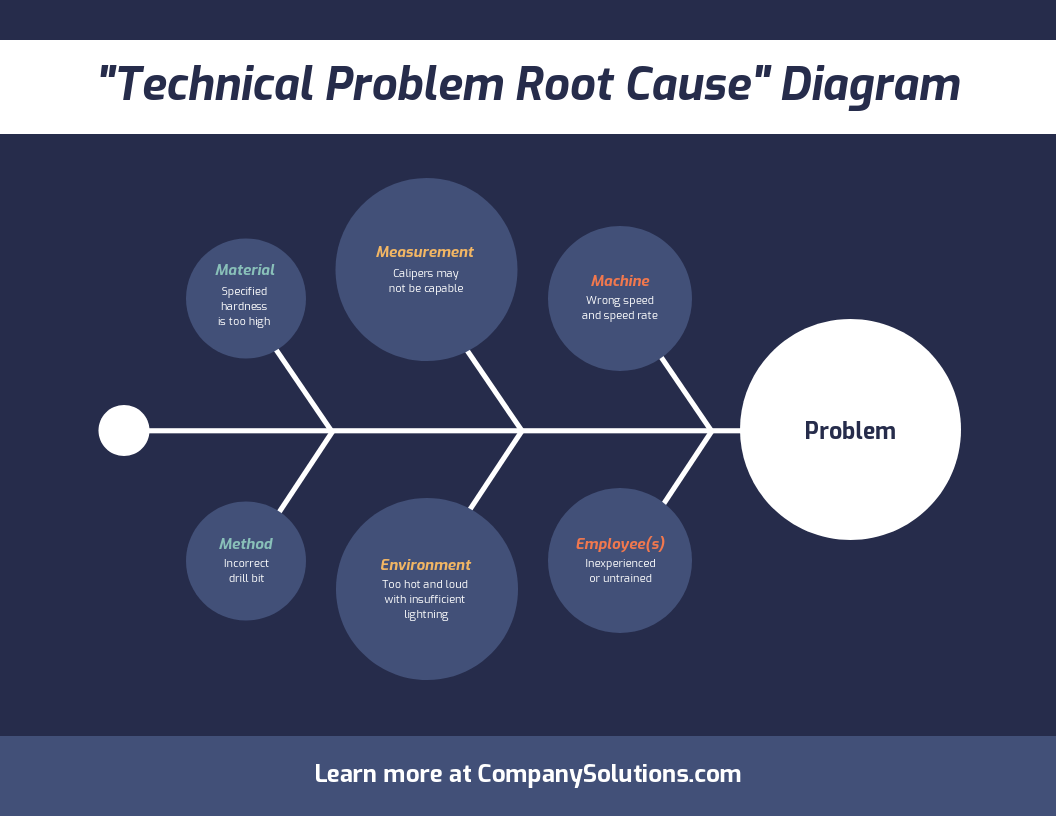

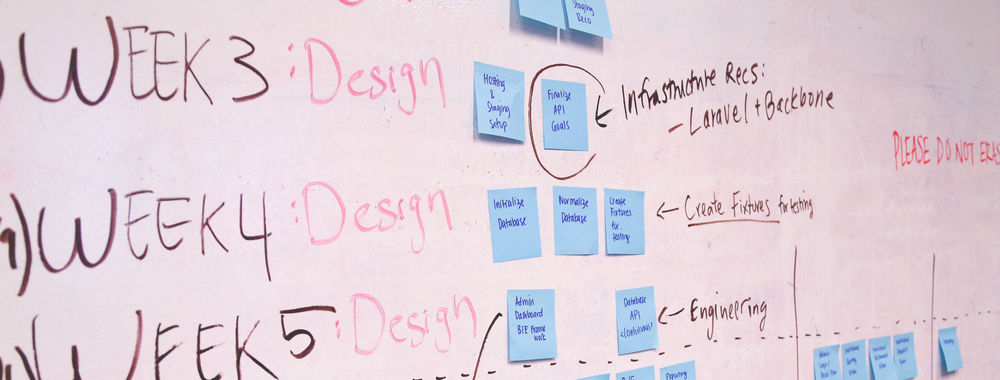

Idea illustration

Data visualization for idea illustration assists in conveying an idea, such as a tactic or process. It is commonly used in learning settings, such as tutorials, certification courses, and centers of excellence, but it can also be used to represent organization structures or processes, facilitating communication between the right individuals for specific tasks. Project managers frequently use Gantt charts and waterfall charts to illustrate workflows. Data modeling also uses abstraction to represent and better understand data flow within an enterprise’s information system, making it easier for developers, business analysts, data architects, and others to understand the relationships in a database or data warehouse.

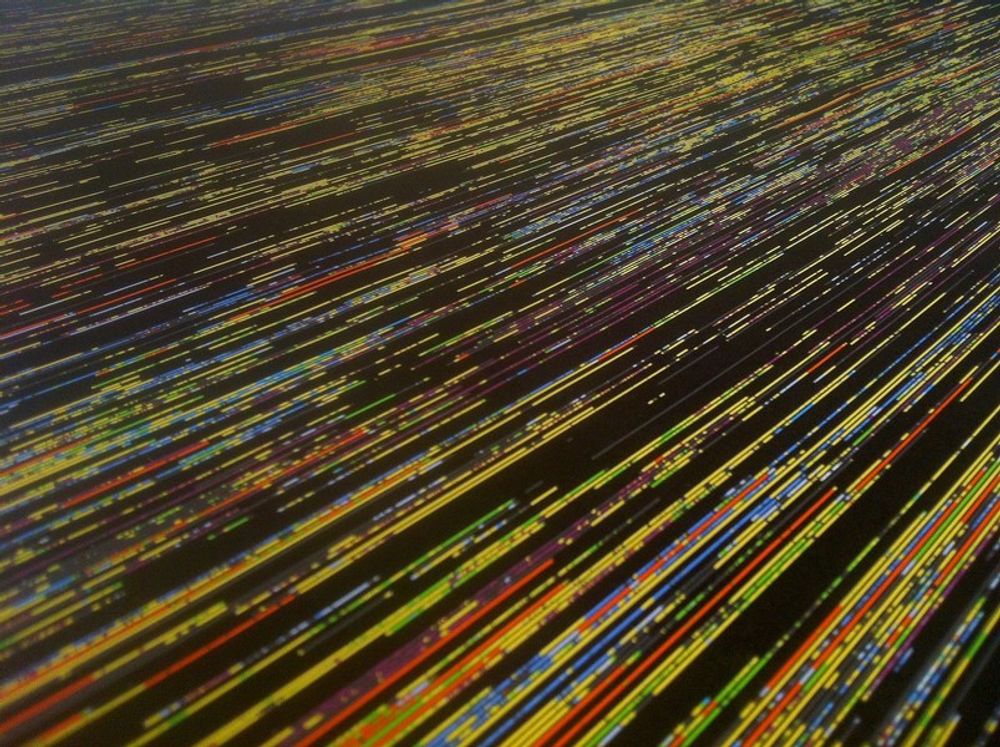

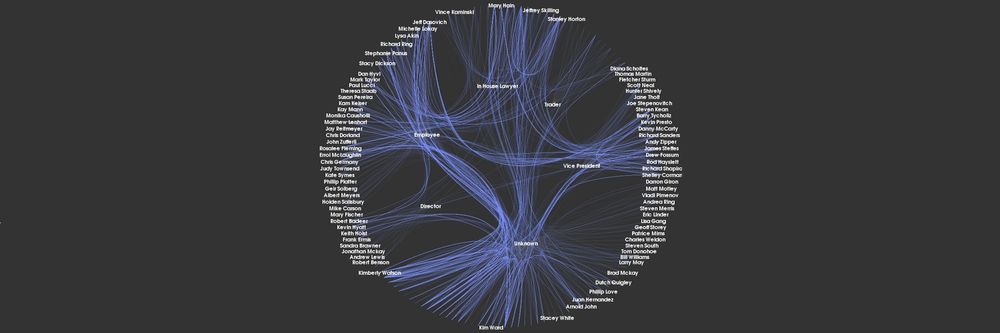

Visual discovery

Visual discovery and everyday dataviz are more closely aligned with data teams. While visual discovery helps data analysts, data scientists, and other data professionals identify patterns and trends within a dataset, everyday dataviz supports the subsequent storytelling after a new insight has been found.

Data visualization

Data visualization is a critical step in the data science process, helping teams and individuals convey data more effectively to colleagues and decision makers. Teams that manage reporting systems typically use defined template views to monitor performance. However, data visualization isn’t limited to performance dashboards. For example, while text mining, an analyst may use a word cloud to capture key concepts, trends, and hidden relationships within this unstructured data. Alternatively, they may use a graph structure to illustrate relationships between entities in a knowledge graph. There are a number of ways to represent different types of data, and it’s important to remember that data visualization is a skill set that should extend beyond your core analytics team.

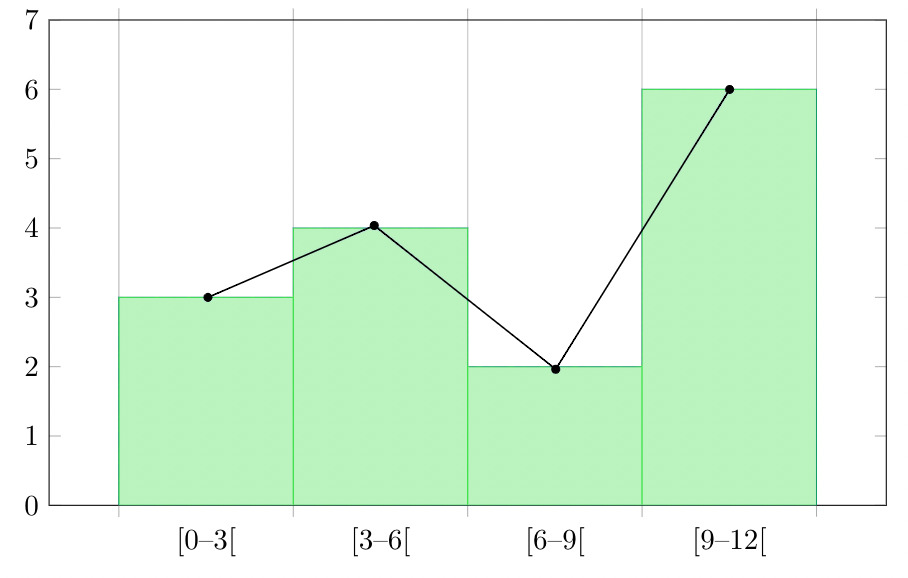

The earliest form of data visualization can be traced back to the Egyptians, before the 17th century, when it was largely used to assist in navigation. As time progressed, people used data visualizations for broader applications, such as in economic, social, and health disciplines. Perhaps most notably, Edward Tufte published The Visual Display of Quantitative Information, which illustrated that individuals could use data visualization to present data more effectively. His book continues to stand the test of time, especially as companies turn to dashboards to report their performance metrics in real time. Dashboards are effective data visualization tools for tracking and visualizing data from multiple data sources, providing visibility into the effects of specific behaviors by a team or an adjacent one on performance. Dashboards include common visualization techniques, such as:

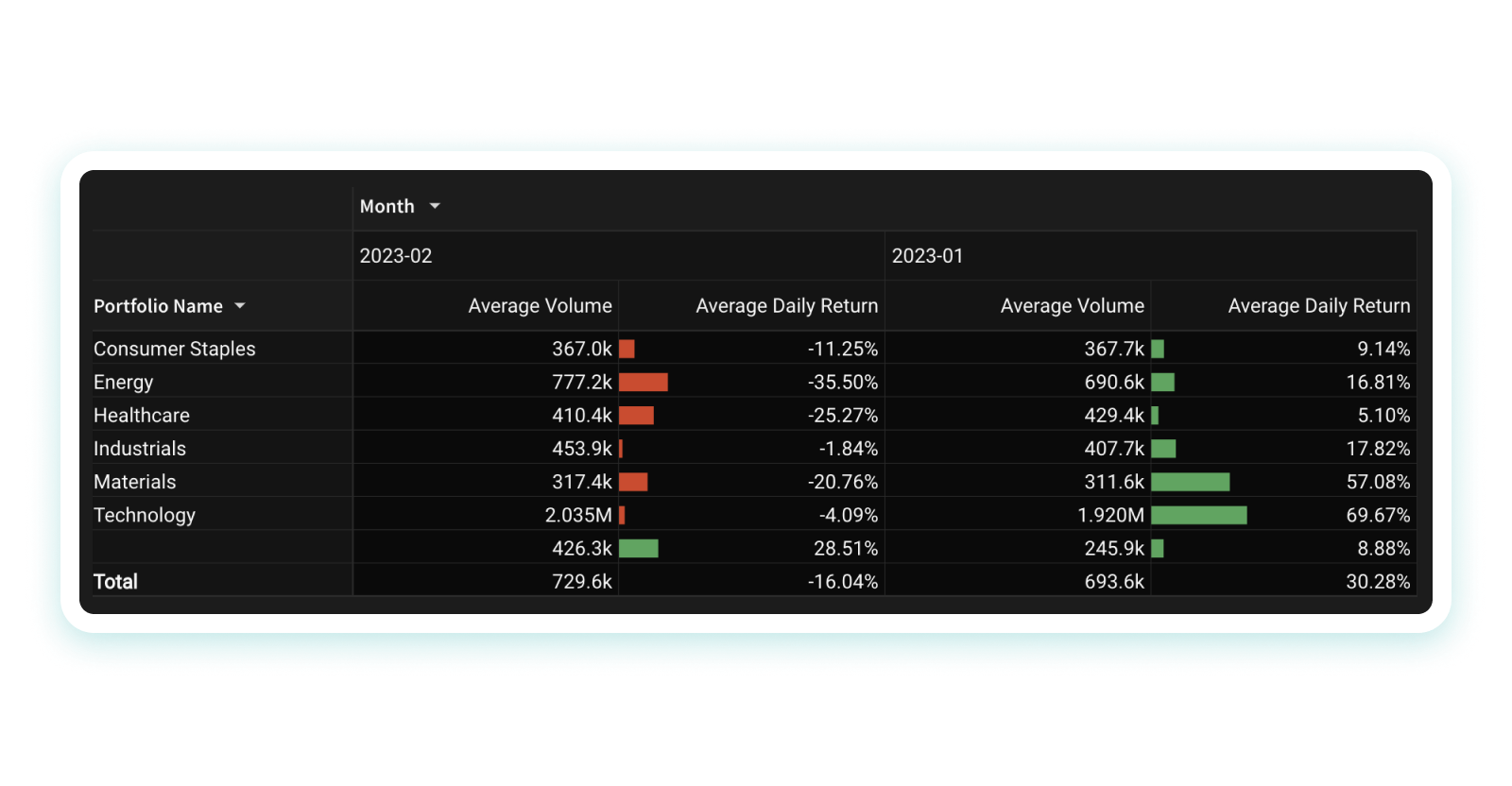

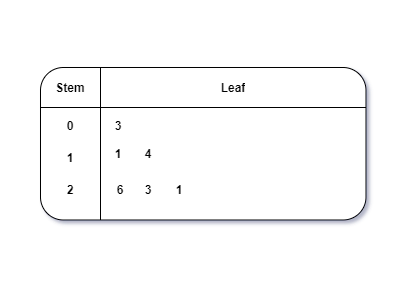

- Tables: This consists of rows and columns used to compare variables. Tables can show a great deal of information in a structured way, but they can also overwhelm users that are simply looking for high-level trends.

- Pie charts and stacked bar charts: These graphs are divided into sections that represent parts of a whole. They provide a simple way to organize data and compare the size of each component to one another.

- Line charts and area charts: These visuals show change in one or more quantities by plotting a series of data points over time and are frequently used within predictive analytics. Line graphs utilize lines to demonstrate these changes while area charts connect data points with line segments, stacking variables on top of one another and using color to distinguish between variables.

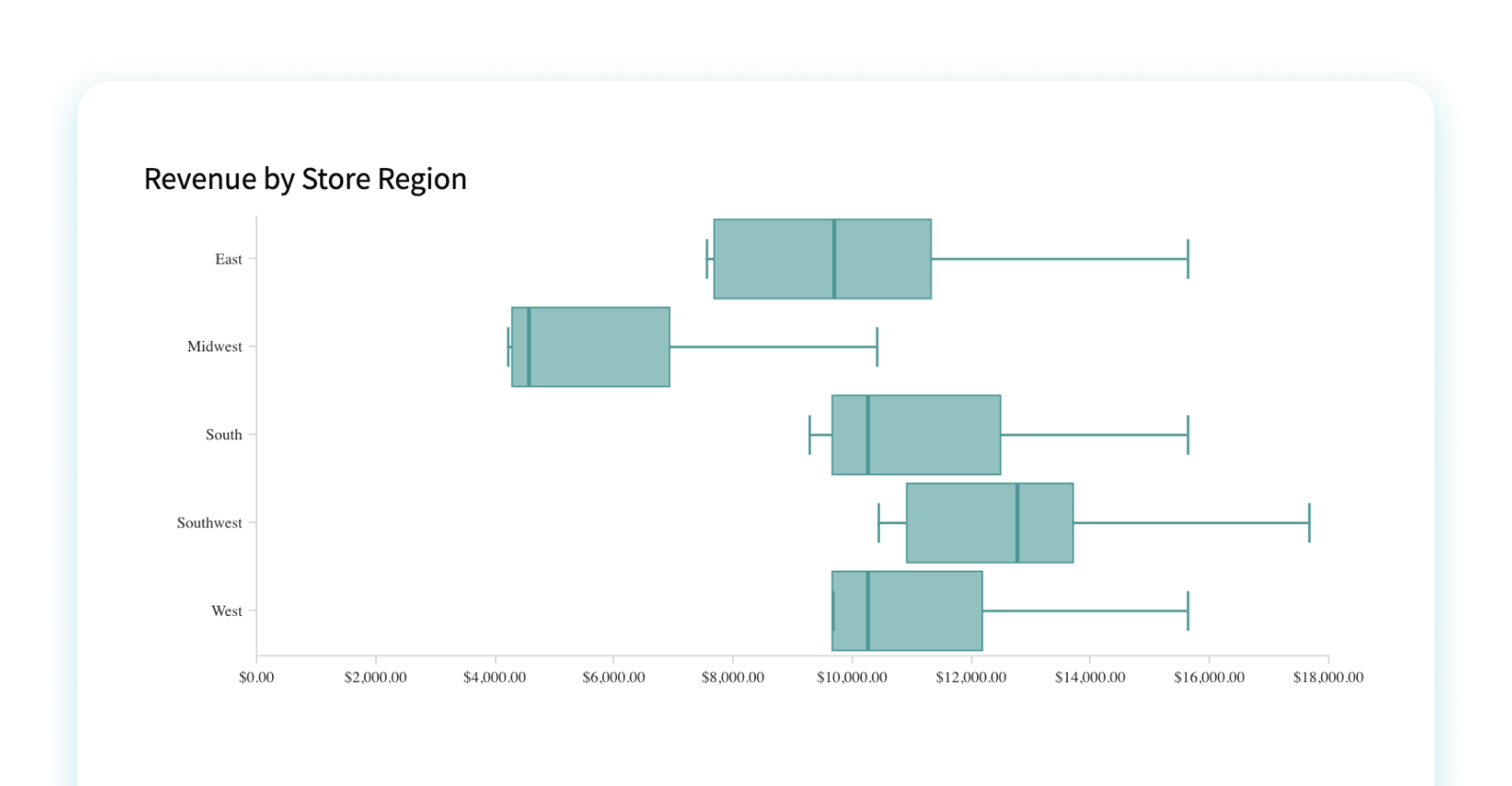

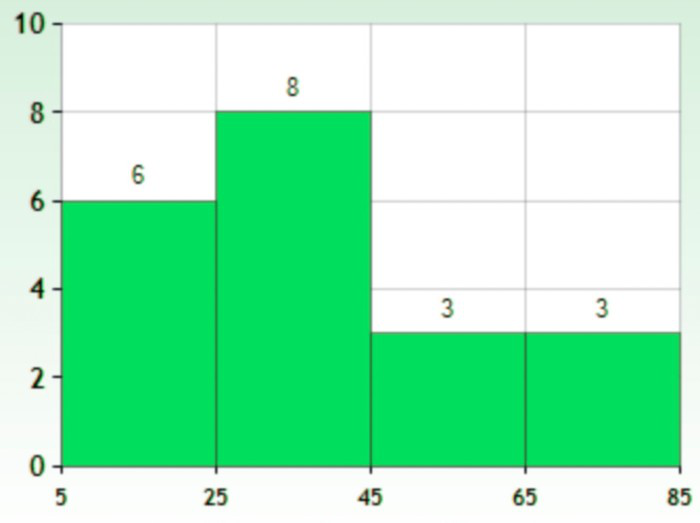

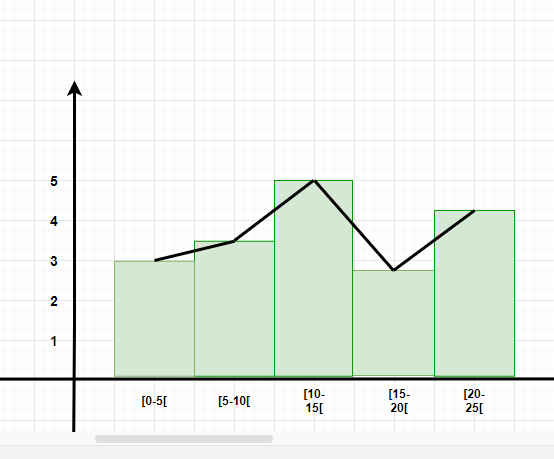

- Histograms: This graph plots a distribution of numbers using a bar chart (with no spaces between the bars), representing the quantity of data that falls within a particular range. This visual makes it easy for an end user to identify outliers within a given dataset.

- Scatter plots: These visuals are beneficial in revealing the relationship between two variables, and they are commonly used within regression data analysis. However, these can sometimes be confused with bubble charts, which are used to visualize three variables via the x-axis, the y-axis, and the size of the bubble.

- Heat maps: These graphical displays are helpful in visualizing behavioral data by location. This can be a location on a map, or even on a webpage.

- Treemaps: These display hierarchical data as a set of nested shapes, typically rectangles. Treemaps are great for comparing the proportions between categories via their area size.
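To make the histogram entry in the list above concrete, here is a minimal sketch of the binning step: values are grouped into equal-width ranges and the count in each range becomes the bar height. The function names and sample values are hypothetical, and real charting libraries offer more binning options than this.

```python
def histogram_counts(values, num_bins):
    """Split the value range into equal-width bins and count values per bin."""
    lo, hi = min(values), max(values)
    if hi == lo:
        raise ValueError("values must span a non-zero range")
    width = (hi - lo) / num_bins
    counts = [0] * num_bins
    for v in values:
        # The maximum value belongs to the last bin, not to a bin past the end.
        idx = min(int((v - lo) / width), num_bins - 1)
        counts[idx] += 1
    edges = [lo + i * width for i in range(num_bins + 1)]
    return edges, counts


def render_histogram(edges, counts):
    """Draw one text bar per bin; the last bin also includes its upper edge."""
    lines = []
    for i, count in enumerate(counts):
        lines.append(f"{edges[i]:g} to {edges[i + 1]:g}: " + "#" * count)
    return "\n".join(lines)


# Made-up sample in which 10 sits far from the rest of the values.
sample = [1, 2, 2, 3, 3, 3, 4, 4, 10]
edges, counts = histogram_counts(sample, 3)
print(render_histogram(edges, counts))
```

With this sample, the isolated value lands alone in the last bin, which is the kind of outlier a histogram makes visible at a glance.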

Access to data visualization tools has never been easier. Open source libraries, such as D3.js, provide a way for analysts to present data in an interactive way, allowing them to engage a broader audience with new data. Some of the most popular open source visualization libraries include:

- D3.js: A front-end JavaScript library for producing dynamic, interactive data visualizations in web browsers. D3.js uses HTML, CSS, and SVG to create visual representations of data that can be viewed in any browser. It also provides features for interactions and animations.

- ECharts: A powerful charting and visualization library that offers an easy way to add intuitive, interactive, and highly customizable charts to products, research papers, presentations, etc. ECharts is built on JavaScript and ZRender, a lightweight canvas library.

- Vega: Vega defines itself as a “visualization grammar,” providing support to customize visualizations across large datasets which are accessible from the web.

- deck.gl: Part of Uber's open source visualization framework suite, deck.gl is a framework used for exploratory data analysis on big data. It helps build high-performance GPU-powered visualization on the web.
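As an illustration of what a “visualization grammar” looks like in practice, the sketch below builds a small declarative chart specification as JSON. It targets Vega-Lite, the higher-level grammar that compiles to Vega, rather than Vega itself, which is an assumption made to keep the example short. The helper name and the data are hypothetical.

```python
import json

# Hypothetical category counts, used only to give the chart something to draw.
rows = [
    {"category": "A", "count": 28},
    {"category": "B", "count": 55},
    {"category": "C", "count": 43},
]


def bar_chart_spec(data_rows, x_field, y_field):
    """Describe a bar chart declaratively: data, mark type, and encodings."""
    return {
        "$schema": "https://vega.github.io/schema/vega-lite/v5.json",
        "data": {"values": data_rows},
        "mark": "bar",
        "encoding": {
            "x": {"field": x_field, "type": "nominal"},
            "y": {"field": y_field, "type": "quantitative"},
        },
    }


# The JSON text is what a Vega-Lite renderer would consume.
spec_json = json.dumps(bar_chart_spec(rows, "category", "count"), indent=2)
print(spec_json)
```

The point of the grammar approach is that the chart is described as data (which fields map to which visual channels) rather than drawn step by step, so the same description can be stored, shared, or generated by other programs.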

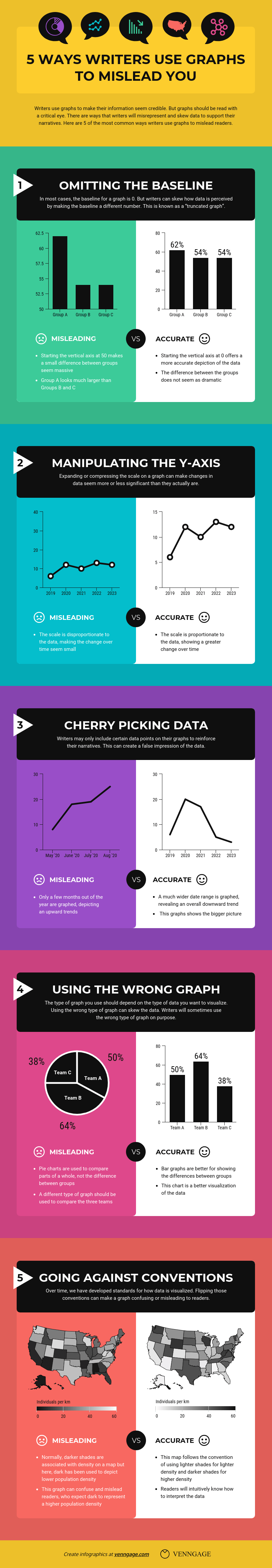

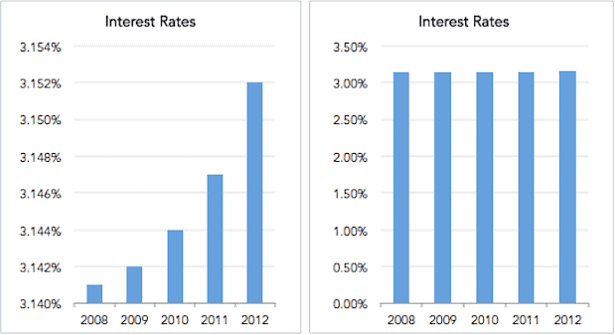

With so many data visualization tools readily available, there has also been a rise in ineffective information visualization. Visual communication should be simple and deliberate to ensure that your data visualization helps your target audience arrive at your intended insight or conclusion. The following best practices can help ensure your data visualization is useful and clear:

Set the context: It’s important to provide general background information to ground the audience around why this particular data point is important. For example, if e-mail open rates were underperforming, we may want to illustrate how a company’s open rate compares to the overall industry, demonstrating that the company has a problem within this marketing channel. To drive an action, the audience needs to understand how current performance compares to something tangible, like a goal, benchmark, or other key performance indicators (KPIs).

Know your audience(s): Think about who your visualization is designed for and then make sure your data visualization fits their needs. What is that person trying to accomplish? What kind of questions do they care about? Does your visualization address their concerns? You’ll want the data that you provide to motivate people to act within the scope of their role. If you’re unsure if the visualization is clear, present it to one or two people within your target audience to get feedback, allowing you to make additional edits prior to a large presentation.

Choose an effective visual: Specific visuals are designed for specific types of datasets. For instance, scatter plots display the relationship between two variables well, while line graphs display time series data well. Ensure that the visual actually assists the audience in understanding your main takeaway. A mismatch between chart and data can do the opposite, confusing your audience rather than providing clarity.