
Forecasting COVID-19: Vector Autoregression-Based Model

Khairan Rajab

1 Najran University, Najran, Kingdom of Saudi Arabia

Firuz Kamalov

2 Canadian University Dubai, Dubai, UAE

Aswani Kumar Cherukuri

3 Vellore Institute of Technology, Vellore, India

Forecasting the spread of COVID-19 infection is an important aspect of public health management. In this paper, we propose an approach to forecasting the spread of the pandemic based on the vector autoregressive model. Concretely, we combine the time series for the number of new cases and the number of new deaths to obtain a joint forecasting model. We apply the proposed model to forecast the number of new cases and deaths in the UAE, Saudi Arabia, and Kuwait. Test results based on out-of-sample forecast show that the proposed model achieves a high level of accuracy that is superior to many existing methods. Concretely, our model achieves mean absolute percentage error (MAPE) of 0.35%, 2.03%, and 3.75% in predicting the number of daily new cases for the three countries, respectively. Furthermore, interpolating our predictions to forecast the cumulative number of cases, we obtain MAPE of 0.0017%, 0.002%, and 0.024%, respectively. The strong performance of the proposed approach indicates that it could be a valuable tool in managing the pandemic.

Introduction

The COVID-19 pandemic has brought tremendous challenges to the governments and public health authorities around the globe. One of the key aspects to managing the governments’ response to the pandemic is forecasting the spread of the infection. Accurate forecast of the expected number of new cases can help the authorities better plan their policies and actions to achieve the optimal outcome. In this paper, we propose a vector autoregressive (VAR) model to forecast the daily number of new cases and deaths. The proposed algorithm produces accurate results and outperforms the existing state-of-the-art forecasting models.

The ability to accurately forecast the spread of the infection allows governments to make smart and informed decisions. If the number of infections is expected to rise sharply, the government may consider imposing a lockdown in order to stop the spread of the virus. On the other hand, if the number of new cases is expected to decline the government may consider easing some of the social and economic restrictions to improve the quality of life. Similarly, accurate forecast allows public health authorities to better manage their limited resources.

Forecasting COVID-19 infection has received a considerable amount of attention among researchers. However, our method differs from the existing approaches in two important ways. First, we consider the number of new cases in conjunction with the number of deaths. We believe that the two time series are related. Consequently, the information about one time series can be used to forecast the other time series. Using the VAR model, we can take into account the cross-correlation between the series and achieve more accurate forecasts. Second, we use data from some of the most extensively tested populations in the world—the UAE, Saudi Arabia, and Kuwait—to train our model. So the data accurately represent the true prevalence of the infection in each country. Additionally, our data cover a 12-month period which is considerably longer than many existing studies. We believe that the quality and depth of the data lead to more reliable results.

Although vector autoregression was used to forecast COVID-19 in the past [1], its application has been sparse. Our approach is based on a careful study of the time series plots, correlation plots, and information criteria. Remarkably, our analysis produced models of nearly the same order for each country (29, 29, and 28). This indicates that the proposed approach can be adopted to forecast the infection rates in other countries. To test the efficacy of our approach, we measured the model accuracy based on a 10-day-ahead forecast. The mean absolute percentage error in forecasting the cumulative number of cases for the three countries is 0.0017%, 0.002%, and 0.024%, respectively.

Our paper is structured as follows. In Sect. 2, we briefly discuss the existing efforts in the literature to forecast the COVID-19 infection. In Sect. 3, we provide the required theoretical background about the VAR model. In Sect. 4, we construct and apply the proposed model to forecast the infection rates in the UAE, Saudi Arabia, and Kuwait. We conclude the paper with a few closing remarks in Sect. 5.

The topic of forecasting COVID-19 has recently attracted a significant amount of attention in the literature. A number of different forecasting approaches have been explored. The results vary depending on the model and data used in the study. Despite the considerable volume of research dedicated to the subject, the results have sometimes been criticized as inadequate [ 2 ]. One of the issues with the existing attempts in the literature is the size and the quality of the training data. The data are often too little or originate from countries with low testing rates [ 3 ]. To address this issue in our paper, we employ a 12-month dataset from rigorously tested countries. Another issue is the vulnerability of the underlying assumptions of the model. Most of the forecasting models are based on certain assumptions about the time series. If the underlying assumptions are not satisfied, then the model is not technically sound.

The majority of the existing forecasting methods can be grouped into three categories: autoregressive integrated moving average (ARIMA) models, mathematical growth models, and machine learning (ML) models. In an ARIMA model, the values of an individual time series are forecasted based on a linear combination of the past values and random shocks. Formally, ARIMA models are denoted ARIMA(\(p, d, q\)), where \(p\) is the number of time lags of the autoregressive model, \(d\) is the degree of differencing, and \(q\) is the order of past shocks. The authors in [4] find ARIMA(0,1,0) to be the best fit for predicting the trend of daily confirmed COVID-19 cases in Malaysia. The authors use the data from January 22 to March 31, 2020, for training and April 1 to April 17, 2020, for testing. The test results show MAPE of 16.01%. In a similar study [5], the authors attempted to estimate the total daily infected cases in the top five countries: US, Brazil, India, Russia, and Spain. The authors obtained different optimal ARIMA models for each country: (4,2,4), (3,1,2), (3,0,0), (4,2,4), and (1,2,1), respectively. Model specifications were estimated using the Hannan and Rissanen algorithm [6]. The data for the study were taken from February 15 to June 30, 2020, for training and July 1 to July 18, 2020, for testing. The MAPE for each country is 3.701%, 1.844%, 1.090%, 0.832%, and 2.885%, respectively.

The mathematical growth (contagion) models are based on differential equations that model the spread of infection. In [7], the authors forecast the total number of daily confirmed and death cases in India using several models based on gene expression programming. The data for the study are taken from April 7 to May 5, 2020. The authors report root-mean-squared errors on the training data of 5.5574 and 90.1863 for the confirmed and death cases, respectively. The authors do not provide out-of-sample forecasting and testing. In [8], the authors forecast the total number of daily infected individuals in Brazil, the UK, and South Korea using a discrete-time-evolution model based on a set of four equations. The results show MAPE of 5.25% for Brazil, 4% for the UK, and 3.75% for South Korea.

Machine learning models have been used for forecasting in a variety of applications including finance [9, 10], energy [11], education [12], temperature [13], and many others. A number of authors have employed ML methods such as regularized linear regression (LASSO) and recurrent neural networks (RNN) to forecast the spread of the infection [14]. In [15], the authors compared RNN, long short-term memory (LSTM), bidirectional LSTM (BiLSTM), gated recurrent units (GRU), and variational autoencoders (VAE) to forecast the total number of daily cases in Italy, Spain, France, China, the USA, and Australia. The study used data from January 22 to June 1, 2020, for training and June 1 to June 17, 2020, for testing. The VAE model achieved the best MAPE values: 5.90%, 2.19%, 1.88%, 0.128%, 0.236%, and 2.04%, respectively. Similar studies using LASSO, support vector machines (SVM), logistic regression, and others have also been carried out in [16, 17].

Vector autoregression is used to model the joint dynamic behavior of a collection of time series. It was used in [ 18 ] to forecast mortality rates, where mortality rates of each age depend on the historical values of itself and the neighboring ages. The VAR model is commonly used in spatiotemporal settings. For instance, in [ 19 ] wind power forecast at multiple plants at different locations was done within a single framework using LASSO vector autoregression. The predicted output from each plant in the model is based on its own past values and the past values of the other plants included in the model. Similarly, LASSO vector autoregression was applied for wind power prediction in [ 20 ]. Vector autoregression has also been applied in a number of other fields including finance [ 21 ], tourism [ 22 ], and commodity prices [ 23 ].

The application of the vector autoregression model in the context of COVID-19 has been limited. For instance, in [1] the VAR model was studied together with linear regression and multilayer perceptron. The authors in [24] used a VAR model to forecast the infection, hospitalization, and ICU bed numbers in Italy. Neither of the two studies includes any measure of accuracy to evaluate the models.

Vector Autoregressive Model

The VAR process is traditionally used to jointly model two or more related time series. In the case of COVID-19, the number of new cases is related to the number of deaths. As the number of new cases increases, so does the number of deaths. Therefore, information about the former can help predict the latter. The VAR process allows us to incorporate both the number of new cases and deaths into a single model, producing a more powerful forecasting paradigm. In addition, the VAR process requires minimal assumptions about the nature of the time series. As will be shown in Sect. 4.3, modeling the number of new cases in conjunction with the number of deaths is more effective than modeling the series individually.

A good introduction to vector autoregression can be found in [25]. Let \(\mathbf{x}_t = \begin{bmatrix} x_{t,1} \\ x_{t,2} \\ \vdots \\ x_{t,k} \end{bmatrix}\) be a vector-valued time series consisting of \(k\) individual time series. Assume that \(\mathbf{x}_t\) is stationary, i.e., the cross-covariance function \(\mathrm{Cov}(x_{t,i}, x_{s,j})\) depends only on \(s - t\). Then, the VAR(\(p\)) model is given by the following equation:

\[\mathbf{x}_t = \sum_{j=1}^{p} \Phi_j \mathbf{x}_{t-j} + \mathbf{w}_t,\]

where \(\Phi_j\) are \(k \times k\) matrices of coefficients and \(\mathbf{w}_t\) is the vector Gaussian white noise with \(\mathrm{Cov}(\mathbf{w}_t, \mathbf{w}_s) = 0\) for \(s \neq t\). In our paper, we examine a vector of two time series, so the corresponding VAR(\(p\)) model is given by the following equation:

\[\begin{bmatrix} x_t \\ y_t \end{bmatrix} = \sum_{j=1}^{p} \Phi_j \begin{bmatrix} x_{t-j} \\ y_{t-j} \end{bmatrix} + \mathbf{w}_t,\]

where \(x_t\) and \(y_t\) are the numbers of new cases and deaths at time \(t\), respectively. The coefficients of each matrix \(\Phi_j = \begin{bmatrix} \Phi_{11} & \Phi_{12} \\ \Phi_{21} & \Phi_{22} \end{bmatrix}\) are estimated by maximum likelihood. In other words, the matrix coefficients are calculated to maximize the likelihood of obtaining the sample. In our paper, we use the statsmodels package in Python [26] to implement the VAR model. The order \(p\) of the VAR model is chosen based on a combination of different factors including the time series plots, the correlation plots, and information criteria. In addition, residual analysis is employed to confirm the model assumptions about normality and independence.
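To make the estimation step concrete, the following is a minimal sketch, not the authors' implementation. It fits a VAR(\(p\)) without intercept by ordinary least squares using NumPy, which for Gaussian noise coincides with conditional maximum likelihood for the coefficient matrices. The function name `fit_var_ols`, the simulated bivariate series, and the matrix `phi_true` are illustrative assumptions.

```python
import numpy as np

def fit_var_ols(data, p):
    """Estimate VAR(p) coefficient matrices by least squares (no intercept).

    data: array of shape (T, k). Returns [Phi_1, ..., Phi_p], each (k, k).
    """
    T, k = data.shape
    # The regressor row for time t stacks x_{t-1}, ..., x_{t-p} side by side.
    X = np.hstack([data[p - j:T - j] for j in range(1, p + 1)])
    Y = data[p:]
    coef, *_ = np.linalg.lstsq(X, Y, rcond=None)  # shape (k * p, k)
    return [coef[(j - 1) * k:j * k].T for j in range(1, p + 1)]

# Hypothetical stationary bivariate VAR(1) used only to check the estimator.
rng = np.random.default_rng(0)
phi_true = np.array([[0.5, 0.1],
                     [0.2, 0.3]])
n_obs = 5000
series = np.zeros((n_obs, 2))
for t in range(1, n_obs):
    series[t] = phi_true @ series[t - 1] + rng.standard_normal(2)

phi_hat = fit_var_ols(series, p=1)[0]
print(np.round(phi_hat, 2))
```

With statsmodels, the analogous step would be roughly `VAR(data).fit(p)` from `statsmodels.tsa.api`; note that its default specification includes a constant term, which this sketch omits.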

Model Construction and Forecasting

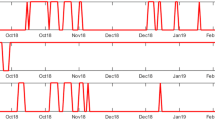

The data used in this study consist of the daily number of new cases and deaths. The data are collected for three countries: the UAE, Saudi Arabia, and Kuwait. The countries were chosen due to rigorous COVID-19 testing conducted within the populations [ 3 ]. The data range over a 12-month period starting from March 20, 2020, to March 20, 2021. We used the data until March 10, 2021, for training and the data from March 11 to March 20, 2021, for testing. The data are sourced from OurWorldInData.org [ 27 ]. The time series plots for the number of new cases and deaths for each country are presented in Fig. 1 .

The original time series data for the three countries

Data Preprocessing

According to the European Centre for Disease Prevention and Control, “the daily number of cases is frequently subject to retrospective corrections, delays in reporting and/or clustered reporting of data for several days.” Therefore, raw daily variations in the number of cases are unreliable for effective forecasting. To obtain a smoother and more dependable time series, we employ a 7-day rolling mean. As shown in Fig. 2, using the rolling mean produces a more reliable time series. We can also see from the plots that the time series for the number of cases and the number of deaths are correlated with an approximately 30-day lag. For instance, in the case of the UAE, the peak for the number of new cases occurs at the end of January, while the peak for the number of new deaths occurs at the end of February.

The 7-day rolling mean of the number of new cases and deaths
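The smoothing step can be sketched as follows. This is an illustration under assumptions: the paper does not state whether the window is trailing or centered, so a trailing window is assumed here, and the daily counts are hypothetical.

```python
def rolling_mean(values, window=7):
    """Trailing rolling mean; positions without a full window are None."""
    out = [None] * len(values)
    running = 0.0
    for i, v in enumerate(values):
        running += v
        if i >= window:
            running -= values[i - window]  # drop the value leaving the window
        if i >= window - 1:
            out[i] = running / window
    return out

# Hypothetical daily counts with reporting gaps followed by clustered reports.
daily = [10, 0, 0, 25, 12, 9, 14, 11, 0, 30]
smoothed = rolling_mean(daily)
print(smoothed)
```

The zero-count days and the catch-up spikes are averaged out, which is the effect the rolling mean is meant to achieve.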

The plots in Fig. 2 show that the time series are not stationary. Let \(x_t\) be the value of the time series at time \(t\). To obtain a more stationary time series, we take the first difference of the time series values:

\[\nabla x_t = x_t - x_{t-1}.\]

As shown in Fig. 3, the resulting series is more stationary with a constant mean around zero. There remain spikes in the variance, especially at the end of the period (Fig. 3a), which can be attributed to the stochastic realization of the time series. Nevertheless, the transformed series appears stable enough to proceed with our analysis.

Taking the first difference of the series helps achieve stationarity
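As a small illustration of the differencing step and its inverse, the sketch below uses hypothetical smoothed values. The inverse is needed because a model fitted on differences produces forecasts of differences, which must be accumulated to return to the level of the series.

```python
def first_difference(series):
    """Return x_t - x_{t-1} for t = 1, ..., len(series) - 1."""
    return [b - a for a, b in zip(series, series[1:])]

def undo_difference(last_level, diffs):
    """Accumulate differences onto the last known level of the series."""
    levels, level = [], last_level
    for d in diffs:
        level += d
        levels.append(level)
    return levels

smoothed = [100.0, 104.0, 103.0, 110.0]  # hypothetical 7-day rolling means
diffs = first_difference(smoothed)
restored = undo_difference(smoothed[0], diffs)
print(diffs, restored)
```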

Building the Forecasting Model

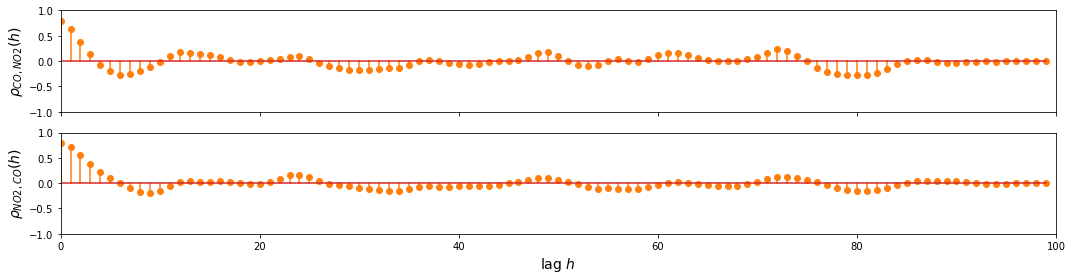

The key to building an effective VAR model is determining the correct order. To identify the order of the model, we consider three factors: time series plots, correlation plots, and information criteria. We illustrate our approach to building a model in the case of the UAE. The models for Saudi Arabia and Kuwait are constructed in a similar fashion. Visual examination of the time series in Fig. 1a shows that the time series for the new cases and the time series for new deaths are correlated with an approximately 30-day lag. Next, we consider the autocorrelation and cross-correlation plots. As shown in Fig. 4, there exists nontrivial correlation in both time series. Concretely, the autocorrelation plot for the new cases (upper left) contains nonzero values up to lag 28, while for the new deaths (lower right) it is nonzero up to lag 17. The cross-correlation function is not even, i.e., \(\rho_{xy}(s, t) \neq \rho_{yx}(s, t)\). Therefore, the off-diagonal cross-correlation plots are different. Since the number of new cases leads the number of new deaths, we consider the cross-correlation plot on the upper right. The cross-correlation plot shows nontrivial correlation, which indicates that the number of new cases has a lagged correlation with the number of new deaths. So the use of the VAR model is statistically justified. In addition, the nonzero value at lag 29 is consistent with our visual observations in Fig. 1a.

Correlation plots for the UAE. The upper left and lower right plots represent autocorrelations for the number of cases and deaths, respectively. The off-diagonal plots are cross-correlations. The dashed horizontal lines represent the confidence limits
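The idea of reading a lead-lag relationship off the cross-correlation can be sketched as below. This is a simplified illustration, not the procedure used for Fig. 4: it computes a Pearson correlation on the overlapping segments at each lag, and the two series are synthetic, with a lag of 29 built in by construction.

```python
import numpy as np

def cross_correlation(x, y, lag):
    """Sample correlation between x[t] and y[t + lag], for lag >= 0."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    if lag > 0:
        x, y = x[:-lag], y[lag:]
    return float(np.corrcoef(x, y)[0, 1])

# Synthetic example: y follows x with a 29-step delay plus noise.
rng = np.random.default_rng(1)
x = rng.standard_normal(400)
y = 0.2 * rng.standard_normal(400)
y[29:] += 0.8 * x[:-29]

best_lag = max(range(0, 41), key=lambda s: cross_correlation(x, y, s))
print(best_lag)
```

Because the leading series is placed first, only nonnegative lags are scanned, which mirrors the choice of the upper-right panel in the text.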

As the final step in identifying the order of the model, we study information criteria metrics. In particular, we calculate the Akaike information criterion (AIC) of the VAR model for orders up to 33. The AIC is given by the following equation:

\[\mathrm{AIC} = 2k - 2\ln(\hat{L}),\]

where \(k\) is the number of parameters in the model and \(\hat{L}\) is the maximum sample likelihood for a model. The model with the lowest AIC is considered optimal. As shown in Table 1, the minimum AIC is achieved at lag 29. This is consistent with our earlier observations. Recall that the plots in Fig. 1a show an approximately 30-day lag between the two time series. In addition, the cross-correlation plot had a maximum nonzero value at lag 29. We conclude that the optimal order for our VAR model is \(p = 29\).

AIC values for different orders p of the VAR model

| p | AIC | p | AIC | p | AIC | p | AIC | p | AIC | p | AIC |
|---|---|---|---|---|---|---|---|---|---|---|---|
| 0 | 5.693 | 6 | 4.785 | 12 | 4.337 | 18 | 4.106 | 24 | 3.990 | 30 | 3.968 |
| 1 | 4.911 | 7 | 4.624 | 13 | 4.292 | 19 | 4.113 | 25 | 3.990 | 31 | 3.998 |
| 2 | 4.877 | 8 | 4.436 | 14 | 4.198 | 20 | 4.088 | 26 | 3.986 | 32 | 4.008 |
| 3 | 4.824 | 9 | 4.442 | 15 | 4.081 | 21 | 4.019 | 27 | 3.999 | 33 | 4.017 |
| 4 | 4.841 | 10 | 4.310 | 16 | 4.089 | 22 | 3.977 | 28 | 3.997 | | |
| 5 | 4.826 | 11 | 4.325 | 17 | 4.102 | 23 | 3.978 | 29 | 3.951 | | |
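The selection rule amounts to picking the order with the smallest AIC. The short sketch below applies it to the values reported in Table 1; the variable names are illustrative.

```python
# AIC values from Table 1, indexed by the order p = 0, 1, ..., 33.
aic_values = [
    5.693, 4.911, 4.877, 4.824, 4.841, 4.826,
    4.785, 4.624, 4.436, 4.442, 4.310, 4.325,
    4.337, 4.292, 4.198, 4.081, 4.089, 4.102,
    4.106, 4.113, 4.088, 4.019, 3.977, 3.978,
    3.990, 3.990, 3.986, 3.999, 3.997, 3.951,
    3.968, 3.998, 4.008, 4.017,
]

# The optimal order is the one with the lowest AIC.
best_order = min(range(len(aic_values)), key=aic_values.__getitem__)
print(best_order, aic_values[best_order])
```

The AIC curve is fairly flat beyond order 22, so the minimum at 29 is supported here mainly by its agreement with the time series and cross-correlation plots.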

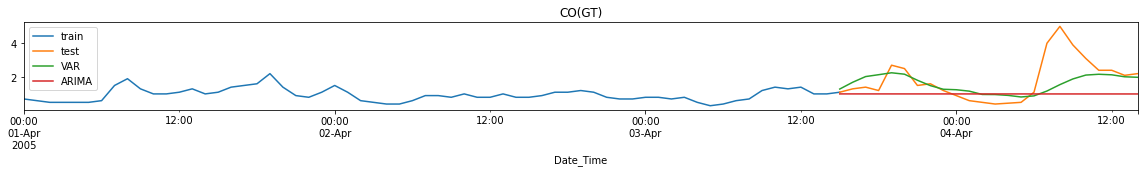

We split the data into training and testing subsets. The training subset encompasses the period March 20, 2020–March 10, 2021, while the testing subset includes the period March 11–March 20, 2021. We fit the VAR(29) model on the training. Then, we use the fitted model to forecast the new daily number of cases and deaths for the testing period. As shown in Fig. 5 a, the forecasted numbers of new cases match almost perfectly with the actual numbers. Similarly, it can be seen from Fig. 5 b, the forecasted numbers of new deaths match closely with the actual numbers.

The actual and forecast values for the number of new cases and deaths in the UAE

To make the comparison between the forecasted and actual values more precise, we calculate the root-mean-squared error (RMSE), the mean absolute error (MAE), and the mean absolute percentage error (MAPE) of the model forecast. Note that RMSE and MAE depend on the scale of the series and should be used with caution for comparison between countries with significantly different numbers of cases. While our model is designed to forecast the number of new cases, it can be easily used to forecast the total number of cases. To obtain the forecast for the total number of cases, we simply add the forecast for the new number of cases to the current total number of cases. The results presented in Table 2 show that the proposed model produces highly accurate forecasts. The RMSE, MAE, and MAPE columns in Table 2 correspond to the daily new counts, while the \(\mathrm{MAPE}_T\) column corresponds to the cumulative totals. Observe that the MAPE relative to the number of new cases is 0.35%, while the MAPE relative to the total number of cases is 0.0017%. The model is less accurate in predicting the number of deaths. The lower accuracy on the number of deaths can be attributed to two primary factors: insufficient data and the complexity of the factors that determine mortality. On the other hand, the MAE for new deaths is 0.86, which means that the forecast is accurate within 1 count on average.

10-day-ahead forecast accuracy for the UAE

| | RMSE | MAE | MAPE | MAPE_T |
|---|---|---|---|---|
| New cases | 9.65 | 7.45 | 0.35% | 0.0017% |
| New deaths | 1.02 | 0.86 | 10.75% | 0.0612% |
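The error measures, and the conversion from daily forecasts to cumulative totals, can be sketched as follows. All numbers are hypothetical and chosen only to show why the MAPE on totals is much smaller than the MAPE on daily counts: the same absolute errors are divided by a far larger base.

```python
import math

def rmse(actual, forecast):
    return math.sqrt(sum((a - f) ** 2 for a, f in zip(actual, forecast)) / len(actual))

def mae(actual, forecast):
    return sum(abs(a - f) for a, f in zip(actual, forecast)) / len(actual)

def mape(actual, forecast):
    """Mean absolute percentage error in percent; actual values must be nonzero."""
    return 100.0 * sum(abs(a - f) / abs(a) for a, f in zip(actual, forecast)) / len(actual)

def cumulative(start_total, new_counts):
    """Running totals obtained by adding daily counts to the current total."""
    totals, total = [], start_total
    for n in new_counts:
        total += n
        totals.append(total)
    return totals

# Hypothetical daily new cases and forecasts over a 3-day horizon.
actual_new = [2000, 2100, 2050]
forecast_new = [1990, 2110, 2060]
last_total = 400000  # hypothetical cumulative count on the last training day

mape_new = mape(actual_new, forecast_new)
mape_total = mape(cumulative(last_total, actual_new),
                  cumulative(last_total, forecast_new))
print(rmse(actual_new, forecast_new), mae(actual_new, forecast_new), mape_new, mape_total)
```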

Indeed, the results are substantially better than for many current models in the literature. As shown in Table 3 , the proposed model outperforms existing approaches from a range of fields including ARIMA, mathematical modeling, and ML. In particular, the MAPE for predicting the total number of cases using our method is 0.0017% which is substantially smaller than the MAPE values presented in Table 3 . Although comparison with Table 3 is not ideal due to the differences in studies, it does provide a useful benchmark for our proposed model.

Accuracy results of the existing methods for forecasting the number of cases

| Source | Singh et al. [ ] | Singh et al. [ ] | Curado et al. [ ] | Zeroual et al. [ ] |
|---|---|---|---|---|
| Method | ARIMA | ARIMA | Mathematical model | Machine learning |
| Results | MAPE: 16.01% | MAPE: 0.8–3.7% | MAPE: 3–5% | MAPE: 0.128–5.90% |

To further validate the use of the VAR model, we compare its performance against the basic AR model. To this end, we fitted and tested the AR(30) model on the data. We obtain \(\mathrm{MAPE}_T\) of 0.0063%, which is more than three times the \(\mathrm{MAPE}_T\) of 0.0017% for the VAR model. We conclude that the vector-based approach to forecasting COVID-19 infection is more effective than the univariate approach.

Saudi Arabia and Kuwait

To demonstrate the effectiveness of the proposed approach, we apply it to the case of Saudi Arabia and Kuwait. To construct the forecasting models for the two countries, we follow the same steps as in the case of the UAE. We consider the time series plots, correlation plots, and AIC. Our analysis yields VAR(29) and VAR(28) as the optimal models for Saudi Arabia and Kuwait, respectively. After fitting the models on the training data, we forecast the number of new cases and deaths for the test period March 11–March 20, 2021. The results are illustrated in Figs. 6 and 7. As shown in Fig. 6, the forecasted number of cases in Saudi Arabia matches very closely with the actual number of cases. Furthermore, the forecasted number of deaths is nearly identical to the actual numbers. Similarly, as shown in Fig. 7, the forecasted values in Kuwait are not too far from the actual values.

The actual and forecast values for the number of new cases and deaths in Saudi Arabia

The actual and forecast values for the number of new cases and deaths in Kuwait

As shown in Tables 2 and 4 , the proposed forecasting approach achieves a high degree of accuracy in forecasting the number of new cases and deaths. The 10-day-ahead forecast of the number of new cases in the UAE is accurate within 0.35%. The results attained by the proposed VAR model are significantly better than the results in the existing literature (Table 3 ). The success of the proposed approach lies in the simplified assumptions that underlie the VAR model together with the high quality of the time series data. Given the performance of the proposed model, it can be similarly applied to other countries and help health officials combat the pandemic.

10-day-ahead forecast accuracy for Saudi Arabia and Kuwait

| | Saudi RMSE | Saudi MAE | Saudi MAPE | Saudi MAPE_T | Kuwait RMSE | Kuwait MAE | Kuwait MAPE | Kuwait MAPE_T |
|---|---|---|---|---|---|---|---|---|
| New cases | 8.59 | 7.41 | 2.03% | 0.002% | 64.49 | 49.78 | 3.75% | 0.024% |
| New deaths | 0.10 | 0.07 | 1.29% | 0.001% | 0.85 | 0.74 | 10.55% | 0.063% |

In this paper, we investigated the use of vector autoregression to forecast the spread of COVID-19 infection. In particular, we applied the VAR model to jointly forecast the number of new cases and deaths in the UAE, Saudi Arabia, and Kuwait. The results show a high level of accuracy of the proposed model. Indeed, the MAPE of the model is substantially lower than that of the existing models in the literature. The success of the proposed approach shows that it can also be used for forecasting in other countries.

Despite the high accuracy of the model, there remains room for improvement. Although taking the first difference stabilized the mean of the series around zero, additional transformations can be applied to stabilize the variance. The model may benefit from including additional quantities such as the percentage of tested population, the number of recoveries, and others. Alternative VAR models such as LASSO VAR and VARCH may also be investigated in future research. The proposed approach has great potential to be a valuable tool in managing the government response to COVID-19.

This work was supported in part by the Najran University under Grant NU/-/SERC/10/597.

Contributor Information

Firuz Kamalov, Email: firuz@cud.ac.ae.

Aswani Kumar Cherukuri, Email: cherukuri@acm.org.


An Introduction to Structural Vector Autoregression (SVAR)

Vector autoregressive (VAR) models constitute a rather general approach to modelling multivariate time series. A critical drawback of those models in their standard form is their inability to describe contemporaneous relationships between the analysed variables. This becomes a central issue in the impulse response analysis for such models, where it is important to know the contemporaneous effects of a shock to the economy. Usually, researchers address this by using orthogonal impulse responses, where the correlation between the errors is obtained from the (lower) Cholesky decomposition of the error covariance matrix. This requires them to arrange the variables of the model in a suitable order. An alternative to this approach is to use so-called structural vector autoregressive (SVAR) models, where the relationship between contemporaneous variables is modelled more directly. This post provides an introduction to the concept of SVAR models and how they can be estimated in R.

What does the term “structural” mean?

To understand what a structural VAR model is, let’s repeat the main characteristics of a standard reduced form VAR model:

\[y_{t} = A_{1} y_{t-1} + u_t \ \ \text{with} \ \ u_{t} \sim (0, \Sigma),\] where \(y_{t}\) is a \(k \times 1\) vector of \(k\) variables in period \(t\), \(A_1\) is a \(k \times k\) coefficient matrix and \(u_t\) is a \(k \times 1\) vector of errors, which have a multivariate normal distribution with zero mean and a \(k \times k\) variance-covariance matrix \(\Sigma\).

To understand SVAR models, it is important to look more closely at the variance-covariance matrix \(\Sigma\). It contains the variances of the errors on its diagonal elements and the covariances of the errors on the off-diagonal elements. The covariances contain information about the contemporaneous effects each variable has on the others. The covariance matrices of standard VAR models are symmetric, i.e. the elements to the top-right of the diagonal (the “upper triangular”) mirror the elements to the bottom-left of the diagonal (the “lower triangular”). This reflects the idea that the relations between the endogenous variables only reflect correlations and do not allow statements about causal relationships.

Contemporaneous causality or, more precisely, the structural relationships between the variables are analysed in the context of SVAR models, which impose special restrictions on the covariance matrix and, depending on the model, on other coefficient matrices as well. The drawback of this approach is that it depends on the more or less subjective assumptions made by the researcher. For many researchers this is too much subjective information, even if sound economic theory is used to justify the assumptions. However, SVAR models can be useful tools, and that is why it is worth looking into them.

Lütkepohl (2007) mentions four approaches to model structural relationships between the endogenous variables of a VAR model: The A-model, the B-model, the AB-model and long-run restrictions à la Blanchard and Quah (1989).

The A-model

The A-model assumes that the covariance matrix is diagonal - i.e. it only contains the variances of the error term - and contemporaneous relationships between the observable variables are described by an additional matrix \(A\) so that

\[ A y_{t} = A^*_{1} y_{t-1} + ... + A^*_{p} y_{t-p} + \epsilon_t,\] where \(A^*_j = A A_j\) and \(\epsilon_{t} = A u_t \sim (0, \Sigma_\epsilon = A \Sigma_u A^{\prime})\) .

Matrix \(A\) has the special form:

\[ A = \begin{bmatrix} 1 & 0 & \cdots & 0 \\ a_{21} & 1 & & 0 \\ \vdots & & \ddots & \vdots \\ a_{K1} & a_{K2} & \cdots & 1 \\ \end{bmatrix} \]

Besides the normalisation that is achieved by setting the diagonal elements of \(A\) to one, the matrix contains \(K(K - 1) / 2\) further restrictions, which are needed to obtain unique estimates of the structural coefficients. If fewer restrictions were provided, the model would not be identified, i.e. the structural coefficients could not be determined uniquely. In the above example, the upper triangular elements of the matrix are set to zero and the elements below the diagonal are freely estimated. However, it would also be possible to estimate a coefficient in the upper triangular area, if a value in the lower triangular area were set to zero. Furthermore, it would be possible to set more than \(K(K - 1) / 2\) elements of \(A\) to zero. In this case the model is said to be over-identified.
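As a worked count, take \(K = 3\) as an illustrative assumption. The matrix \(A\) then has nine elements, of which three are fixed by the normalisation of the diagonal, three are set to zero by the restrictions, and three remain to be estimated:

\[ \underbrace{K^2}_{9 \text{ elements}} - \underbrace{K}_{3 \text{ ones on the diagonal}} - \underbrace{\frac{K(K - 1)}{2}}_{3 \text{ zero restrictions}} = 3 \text{ free coefficients: } a_{21}, a_{31}, a_{32}. \]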

The B-model

The B-model describes the structural relationships of the errors directly by adding a matrix \(B\) to the error term and normalises the error variances to unity so that

\[ y_{t} = A_{1} y_{t-1} + ... + A_{p} y_{t-p} + B \epsilon_t,\] where \(u_{t} = B \epsilon_t\) and \(\epsilon_t \sim (0, I_K)\). Again, \(B\) must contain at least \(K(K - 1) / 2\) restrictions.

The AB-model

The AB-model is a mixture of the A- and B-model, where the errors of the VAR are modelled as

\[ A u_t = B \epsilon_t \text{ with } \epsilon_t \sim (0, I_K).\]

This general form would require specifying more restrictions than in the A- or B-model. Thus, one of the matrices is usually replaced by an identity matrix and the other contains the necessary restrictions. This model can be useful to estimate, for example, a system of equations which describes the IS-LM model.

Long-run restrictions à la Blanchard-Quah

Blanchard and Quah (1989) propose an approach which does not require directly imposing restrictions on the structural matrices \(A\) or \(B\). Instead, structural innovations can be identified by looking at the accumulated effects of shocks and placing zero restrictions on those accumulated relationships which die out and become zero in the long run.

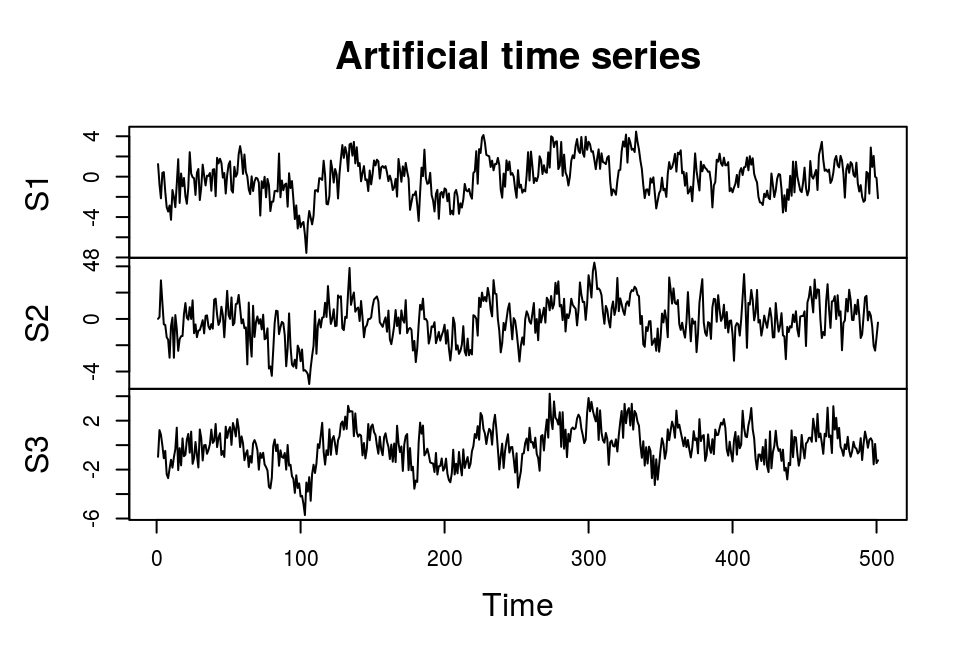

For this illustration we generate an artificial data set with three endogenous variables, which follow the data generating process

\[y_t = A_1 y_{t - 1} + B \epsilon_t,\]

\[ A_1 = \begin{bmatrix} 0.3 & 0.12 & 0.69 \\ 0 & 0.3 & 0.48 \\ 0.24 & 0.24 & 0.3 \end{bmatrix} \text{, } B = \begin{bmatrix} 1 & 0 & 0 \\ -0.14 & 1 & 0 \\ -0.06 & 0.39 & 1 \end{bmatrix} \text{ and } \epsilon_t \sim N(0, I_3). \]
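The data generating process above can be simulated as in the following sketch. It is written in Python with NumPy rather than R, and the sample size, burn-in length, seed, and the function name `simulate_svar` are assumptions made for illustration. A least-squares fit of the reduced-form VAR(1) is included to check that the simulated data recover \(A_1\) and the error covariance \(B B^{\prime}\).

```python
import numpy as np

A1 = np.array([[0.30, 0.12, 0.69],
               [0.00, 0.30, 0.48],
               [0.24, 0.24, 0.30]])
B = np.array([[1.00, 0.00, 0.0],
              [-0.14, 1.00, 0.0],
              [-0.06, 0.39, 1.0]])

def simulate_svar(A1, B, n_obs, burn_in=200, seed=0):
    """Simulate y_t = A1 y_{t-1} + B eps_t with eps_t ~ N(0, I)."""
    rng = np.random.default_rng(seed)
    k = A1.shape[0]
    y = np.zeros((n_obs + burn_in, k))
    for t in range(1, n_obs + burn_in):
        y[t] = A1 @ y[t - 1] + B @ rng.standard_normal(k)
    return y[burn_in:]  # discard the burn-in so the start value has no influence

data = simulate_svar(A1, B, n_obs=5000)

# Reduced-form VAR(1) by least squares: regress y_t on y_{t-1}.
X, Y = data[:-1], data[1:]
A1_hat = np.linalg.lstsq(X, Y, rcond=None)[0].T
residuals = Y - X @ A1_hat.T
sigma_hat = residuals.T @ residuals / len(residuals)
print(np.round(A1_hat, 2))
print(np.round(sigma_hat, 2))
```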

The vars package (Pfaff, 2008) provides functions to estimate structural VARs in R. The workflow is divided into two steps, where the first consists in estimating a standard VAR model using the VAR function:

The estimated coefficients are reasonably close to the true coefficients. In the next step the resulting object is used in function SVAR to estimate the various structural models.

The A-model requires specifying a matrix Amat, which contains the \(K (K - 1) / 2\) restrictions. In the following example, we create a diagonal matrix with ones as diagonal elements and zeros in its upper triangle. The lower triangular elements are set to NA, which indicates that they should be estimated.

The result is not equal to matrix \(B\) , because we estimated an A-model. In order to translate it into the structural coefficients of the B-model, we only have to obtain the inverse of the matrix:
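The relationship between the two parameterisations can be checked numerically with the true matrix \(B\) of the data generating process. The sketch below is in Python with NumPy and is not the post's R code. It shows that the implied \(A = B^{-1}\) is again lower triangular with a unit diagonal, that inverting it returns \(B\), and that \(B\) equals the lower Cholesky factor of the reduced-form error covariance \(B B^{\prime}\).

```python
import numpy as np

# True structural matrix B of the data generating process.
B = np.array([[1.00, 0.00, 0.0],
              [-0.14, 1.00, 0.0],
              [-0.06, 0.39, 1.0]])

A = np.linalg.inv(B)        # A-model coefficients implied by B
B_back = np.linalg.inv(A)   # inverting A returns the B-model coefficients

sigma_u = B @ B.T                     # reduced-form error covariance
chol = np.linalg.cholesky(sigma_u)    # lower-triangular Cholesky factor

print(np.round(A, 3))
print(np.round(chol, 3))
```

This also illustrates why a recursive (lower-triangular) SVAR and orthogonal impulse responses based on the Cholesky decomposition rely on the same ordering assumption.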

Confidence intervals for the structural coefficients can be obtained by directly accessing the respective element in svar_est_a :

B-models are estimated in a similar way as A-models by specifying a matrix Bmat, which contains restrictions on the structural matrix \(B\). In the following example Bmat is equal to Amat above.

Again, confidence intervals of the structural coefficients can be obtained by directly accessing the respective element in svar_est_b :

Blanchard, O., & Quah, D. (1989). The dynamic effects of aggregate demand and supply disturbances. American Economic Review 79 , 655-673.

Lütkepohl, H. (2007). New Introduction to Multiple Time Series Analysis (2nd ed.). Berlin: Springer.

Pfaff, B. (2008). VAR, SVAR and SVEC Models: Implementation Within R Package vars. Journal of Statistical Software, 27 (4).

Sims, C. (1980). Macroeconomics and Reality. Econometrica, 48 (1), 1-48.


Title: Visual Autoregressive Modeling: Scalable Image Generation via Next-Scale Prediction

Abstract: We present Visual AutoRegressive modeling (VAR), a new generation paradigm that redefines the autoregressive learning on images as coarse-to-fine "next-scale prediction" or "next-resolution prediction", diverging from the standard raster-scan "next-token prediction". This simple, intuitive methodology allows autoregressive (AR) transformers to learn visual distributions fast and generalize well: VAR, for the first time, makes GPT-like AR models surpass diffusion transformers in image generation. On the ImageNet 256x256 benchmark, VAR significantly improves the AR baseline, improving Frechet inception distance (FID) from 18.65 to 1.73 and inception score (IS) from 80.4 to 350.2, with around 20x faster inference speed. It is also empirically verified that VAR outperforms the Diffusion Transformer (DiT) in multiple dimensions including image quality, inference speed, data efficiency, and scalability. Scaling up VAR models exhibits clear power-law scaling laws similar to those observed in LLMs, with linear correlation coefficients near -0.998 as solid evidence. VAR further showcases zero-shot generalization ability in downstream tasks including image in-painting, out-painting, and editing. These results suggest VAR has initially emulated the two important properties of LLMs: Scaling Laws and zero-shot task generalization. We have released all models and codes to promote the exploration of AR/VAR models for visual generation and unified learning.

| Comments: | Demo website: https://var.vision/ |

| Subjects: | Computer Vision and Pattern Recognition (cs.CV); Artificial Intelligence (cs.AI) |


Time Series Analysis Handbook

Chapter 3: Vector Autoregressive Methods ¶

Prepared by: Maria Eloisa Ventura

Previously, we introduced the classical approaches to forecasting single/univariate time series, such as the ARIMA (autoregressive integrated moving-average) model and the simple linear regression model. We learned that stationarity is a necessary condition when using ARIMA, while it need not be imposed when using the linear regression model. In this notebook, we extend the forecasting problem to a more general framework where we deal with multivariate time series, that is, time series with more than one time-dependent variable. More specifically, we introduce vector autoregressive (VAR) models and show how they can be used in forecasting multivariate time series.

Multivariate Time Series Model ¶

As shown in the previous chapters, one of the main advantages of using simple univariate methods (e.g., ARIMA) is the ability to forecast future values of one variable by only using past values of itself. However, we know that most if not all of the variables that we observe are actually dependent on other variables. Most of the time, the information that we gather is limited by our capacity to measure the variables of interest. For example, if we want to study weather in a particular city, we could measure temperature, humidity and precipitation over time. But if we only have a thermometer, then we’ll only be able to collect data for temperature, effectively reducing our dataset to a univariate time series.

Now, in cases where we have multiple time series (longitudinal measurements of more than one variable), we can actually use multivariate time series models to understand the relationships of the different variables over time. By utilizing the additional information available from related series, these models can often provide better forecasts than the univariate time series models.

In this section, we cover the foundational information needed to understand Vector Autoregressive Models, a class of multivariate time series models, by using a framework similar to univariate time series laid out in the previous chapters, and extending it to the multivariate case.

Definition: Univariate vs Multivariate Time Series ¶

Time series can either be univariate or multivariate. A univariate time series consists of single observations recorded sequentially over equal time increments. When dealing with a univariate time series model (e.g., ARIMA), we usually refer to a model that uses lagged values of the series itself as the independent variables.

On the other hand, a multivariate time series has more than one time-dependent variable. For a multivariate process, several related time series are observed simultaneously over time. As an extension of the univariate case, the multivariate time series model involves two or more input variables, and leverages the interrelationship among the different time series variables.

Example 1: Multivariate Time Series ¶

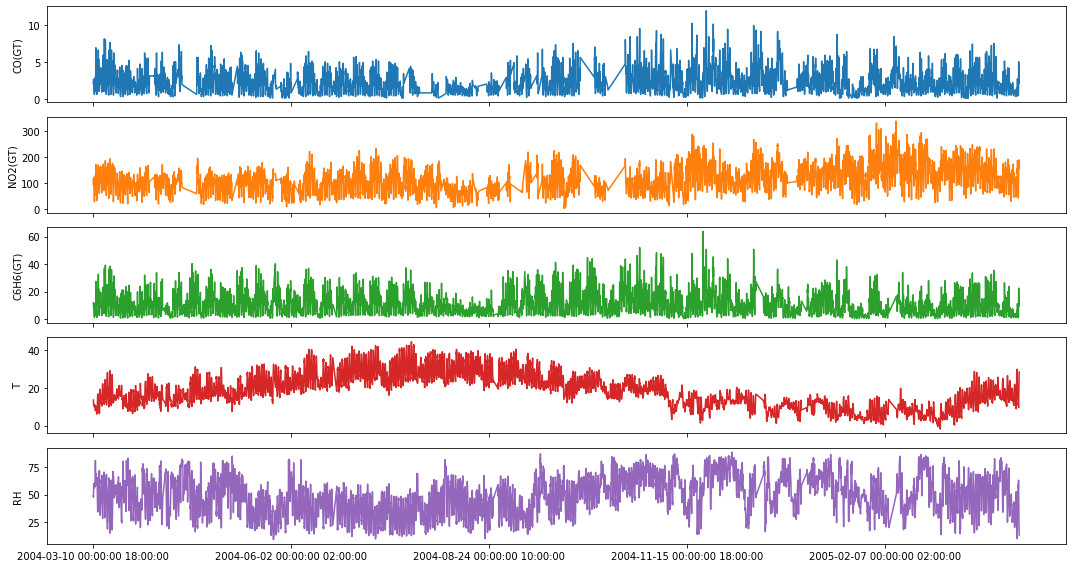

A. Air Quality Data from UCI ¶

The dataset contains hourly averaged measurements obtained from an Air Quality Chemical Multisensor Device, which was located in the field in a polluted area of an Italian city. The dataset can be downloaded here .

| Date_Time | CO(GT) | PT08.S1(CO) | NMHC(GT) | C6H6(GT) | PT08.S2(NMHC) | NOx(GT) | PT08.S3(NOx) | NO2(GT) | PT08.S4(NO2) | PT08.S5(O3) | T | RH | AH | Unnamed: 15 | Unnamed: 16 |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 2004-03-10 00:00:00 18:00:00 | 2.6 | 1360.00 | 150.0 | 11.881723 | 1045.50 | 166.0 | 1056.25 | 113.0 | 1692.00 | 1267.50 | 13.6 | 48.875001 | 0.757754 | NaN | NaN |

| 2004-03-10 00:00:00 19:00:00 | 2.0 | 1292.25 | 112.0 | 9.397165 | 954.75 | 103.0 | 1173.75 | 92.0 | 1558.75 | 972.25 | 13.3 | 47.700000 | 0.725487 | NaN | NaN |

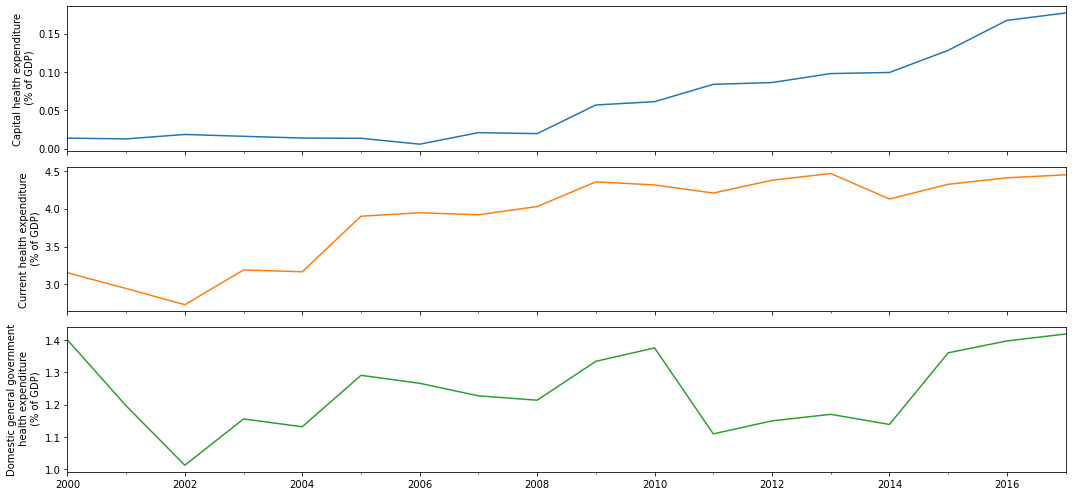

B. Global Health from The World Bank ¶

This dataset combines key health statistics from a variety of sources to provide a look at global health and population trends. It includes information on nutrition, reproductive health, education, immunization, and diseases from over 200 countries. The dataset can be downloaded here .

| indicator_code | Capital_health_expenditure | Current_health_expenditure | Domestic_general_government_health_expenditure |

|---|---|---|---|

| 2000-12-31 | 0.013654 | 3.154818 | 1.400685 |

| 2001-12-31 | 0.012675 | 2.947059 | 1.196554 |

| 2002-12-31 | 0.018476 | 2.733301 | 1.012481 |

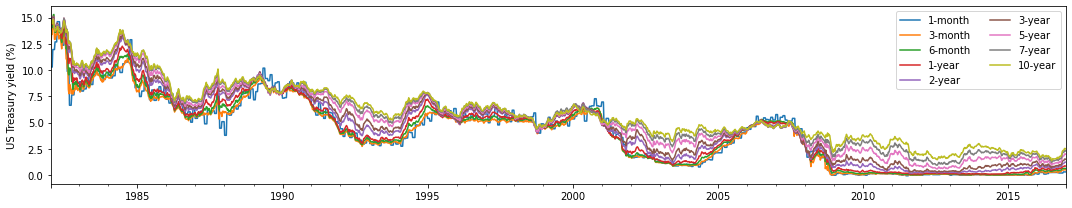

C. US Treasury Rates ¶

January, 1982 – December, 2016 (Weekly) https://essentialoftimeseries.com/data/ This sample dataset contains weekly data of US Treasury rates from January 1982 to December 2016. The dataset can be downloaded here .

| Date | 1-month | 3-month | 6-month | 1-year | 2-year | 3-year | 5-year | 7-year | 10-year | Excess CRSP Mkt Returns | 10-year Treasury Returns | Term spread | Change in term spread | 5-year Treasury Returns | Unnamed: 15 | Excess 10-year Treasury Returns | Term Spread | VXO | Delta VXO |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 1982-01-08 | 10.296 | 12.08 | 13.36 | 13.80 | 14.12 | 14.32 | 14.46 | 14.54 | 14.47 | -1.632 | NaN | 2.39 | NaN | NaN | NaN | -0.286662 | 1.729559 | 20.461911 | -0.003106 |

| 1982-01-15 | 10.296 | 12.72 | 13.89 | 14.39 | 14.67 | 14.73 | 14.79 | 14.84 | 14.76 | -2.212 | -2.9 | 2.04 | -0.35 | 1.65 | -2.556 | -3.758000 | 4.464000 | NaN | NaN |

| 1982-01-22 | 10.296 | 13.47 | 14.30 | 14.72 | 14.93 | 14.92 | 14.81 | 14.80 | 14.73 | -0.202 | 0.3 | 1.26 | -0.78 | 0.10 | 0.049 | -0.558000 | 4.434000 | NaN | NaN |

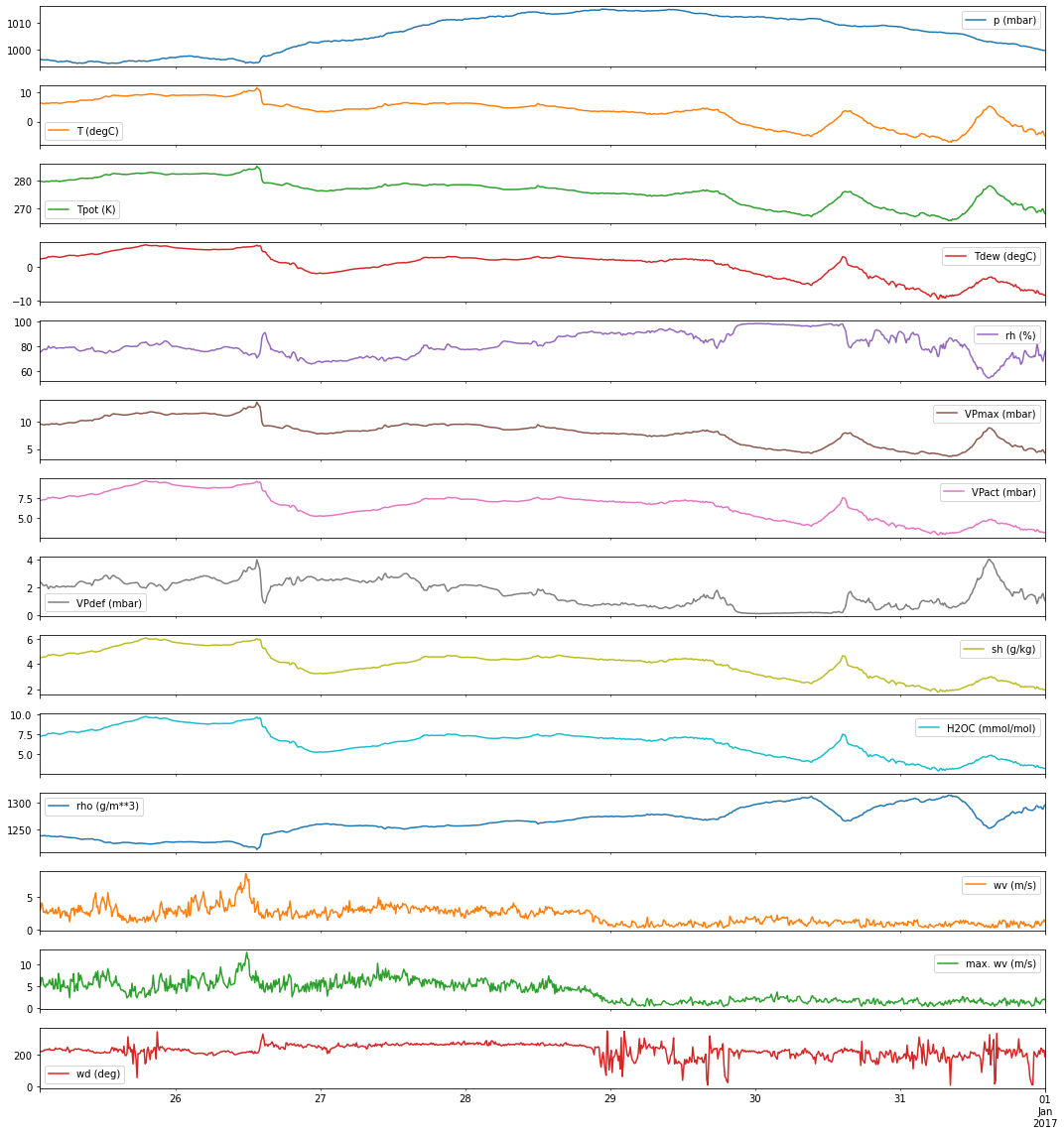

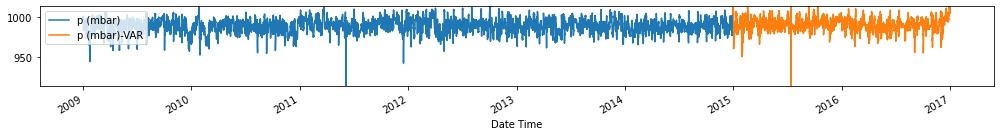

D. Jena Climate Data ¶

Time series dataset recorded at the Weather Station at the Max Planck Institute for Biogeochemistry in Jena, Germany from 2009 to 2016. It contains 14 different quantities (e.g., air temperature, atmospheric pressure, humidity, wind direction, and so on) that were recorded every 10 minutes. You can download the data here .

Foundations ¶

Before we discuss VARs, we outline some fundamental concepts below that we will need to understand the model.

Weak Stationarity of Multivariate Time Series ¶

As in the univariate case, one of the requirements that we need to satisfy before we can apply VAR models is stationarity–in particular, weak stationarity. Both in the univariate and multivariate case, the first two moments of the time series are time-invariant. More formally, we describe weak stationarity below.

Consider a \(N\) -dimensional time series, \(\mathbf{y}_t = \left[y_{1,t}, y_{2,t}, ..., y_{N,t}\right]^\prime\) . This is said to be weakly stationary if the first two moments are finite and constant through time, that is,

\(E\left[\mathbf{y}_t\right] = \boldsymbol{\mu}\)

\(E\left[(\mathbf{y}_t-\boldsymbol{\mu})(\mathbf{y}_t-\boldsymbol{\mu})^\prime\right] \equiv \boldsymbol\Gamma_0 < \infty\) for all \(t\)

\(E\left[(\mathbf{y}_t-\boldsymbol{\mu})(\mathbf{y}_{t-h}-\boldsymbol{\mu})^\prime\right] \equiv \boldsymbol\Gamma_h\) for all \(t\) and \(h\)

where the expectations are taken element-by-element over the joint distribution of \(\mathbf{y}_t\) :

\(\boldsymbol{\mu}\) is the vector of means \(\boldsymbol\mu = \left[\mu_1, \mu_2, ..., \mu_N \right]\)

\(\boldsymbol\Gamma_0\) is the \(N\times N\) covariance matrix where the \(i\) th diagonal element is the variance of \(y_{i,t}\) , and the \((i, j)\) th element is the covariance between \(y_{i,t}\) and \({y_{j,t}}\)

\(\boldsymbol\Gamma_h\) is the cross-covariance matrix at lag \(h\)

Obtaining Cross-Correlation Matrix from Cross-Covariance Matrix ¶

When dealing with a multivariate time series, we can examine the predictability of one variable on another by looking at the relationship between them using the cross-covariance function (CCVF) and cross-correlation function (CCF). To do this, we begin by defining the cross-covariance between two variables, then we estimate the cross-correlation between one variable and another variable that is time-shifted. This informs us whether one time series may be related to the past lags of the other. In other words, CCF is used for identifying lags of one variable that might be useful as a predictor of the other variable.

Let \(\boldsymbol\Gamma_0\) be the covariance matrix at lag 0 and \(\mathbf D\) be an \(N\times N\) diagonal matrix containing the standard deviations \(\sigma_i\) of \(y_{i,t}\) for \(i=1, ..., N\) . The correlation matrix of \(\mathbf{y}_t\) is defined as

\[\boldsymbol\rho_0 = \mathbf D^{-1}\boldsymbol\Gamma_0\mathbf D^{-1},\]

where the \((i, j)\) th element of \(\boldsymbol\rho_0\) is the correlation coefficient between \(y_{i,t}\) and \(y_{j,t}\) at time \(t\) :

\[\rho_{i,j}(0) = \frac{Cov\left[y_{i,t}, y_{j,t}\right]}{\sigma_i \sigma_j}.\]

Let \(\boldsymbol\Gamma_h = E\left[(\mathbf{y}_t-\boldsymbol{\mu})(\mathbf{y}_{t-h}-\boldsymbol{\mu})^\prime\right]\) be the lag- \(h\) cross-covariance matrix of \(\mathbf y_{t}\) . The lag- \(h\) cross-correlation matrix is defined as

\[\boldsymbol\rho_h = \mathbf D^{-1}\boldsymbol\Gamma_h\mathbf D^{-1}.\]

The \((i,j)\) th element of \(\boldsymbol\rho_h\) is the correlation coefficient between \(y_{i,t}\) and \(y_{j,t-h}\) :

\[\rho_{i,j}(h) = \frac{Cov\left[y_{i,t}, y_{j,t-h}\right]}{\sigma_i \sigma_j}.\]
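As an illustration of these definitions, the following NumPy sketch estimates the cross-covariance and cross-correlation matrices from a sample. The helper names `cross_cov` and `cross_corr` and the toy data are assumptions made for this example; dividing by the full sample size is one common convention among several.

```python
import numpy as np

def cross_cov(y, h):
    """Sample estimate of Gamma_h = E[(y_t - mu)(y_{t-h} - mu)'] for h >= 0."""
    y = np.asarray(y, dtype=float)
    n_obs = y.shape[0]
    yc = y - y.mean(axis=0)
    # Entry (i, j) sums y_{i,t} * y_{j,t-h} over the available t.
    return yc[h:].T @ yc[:n_obs - h] / n_obs

def cross_corr(y, h):
    """Sample estimate of rho_h = D^{-1} Gamma_h D^{-1}, D = diag of standard deviations."""
    d = np.sqrt(np.diag(cross_cov(y, 0)))
    return cross_cov(y, h) / np.outer(d, d)

rng = np.random.default_rng(0)
x = rng.standard_normal(2001)
# Toy data: the second series is driven by the previous value of the first.
y = np.column_stack([x[1:], x[:-1] + 0.5 * rng.standard_normal(2000)])

rho_0 = cross_corr(y, 0)
rho_1 = cross_corr(y, 1)   # rho_1[1, 0]: series 2 at t versus series 1 at t-1
```

In this toy example `rho_1[1, 0]` is large while `rho_1[0, 1]` is near zero, which is the pattern of a one-directional lead-lag relationship.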

What do we get from this?

| Correlation Coefficient | Interpretation |

|---|---|

| \(\rho_{i,j}(0)\neq0\) | \(y_{i,t}\) and \(y_{j,t}\) are contemporaneously correlated |

| \(\rho_{i,j}(h)=\rho_{j,i}(h)=0\) for all \(h\geq0\) | \(y_{i,t}\) and \(y_{j,t}\) share no linear relationship |

| \(\rho_{i,j}(h)=0\) and \(\rho_{j,i}(h)=0\) for all \(h>0\) | \(y_{i,t}\) and \(y_{j,t}\) are said to be linearly uncoupled |

| \(\rho_{i,j}(h)=0\) for all \(h>0\), but \(\rho_{j,i}(q)\neq0\) for at least some \(q>0\) | There is a unidirectional relationship between \(y_{i,t}\) and \(y_{j,t}\), where \(y_{i,t}\) does not depend on past values of \(y_{j,t}\), but \(y_{j,t}\) depends on (some) lagged values of \(y_{i,t}\) |

| \(\rho_{i,j}(h)\neq0\) for at least some \(h>0\) and \(\rho_{j,i}(q)\neq0\) for at least some \(q>0\) | There is a feedback (bidirectional) relationship between \(y_{i,t}\) and \(y_{j,t}\) |

Vector Autoregressive (VAR) Models ¶

The vector autoregressive (VAR) model is one of the most successful models for analysis of multivariate time series. It has been demonstrated to be successful in describing relationships and forecasting economic and financial time series, and providing more accurate forecasts than the univariate time series models and theory-based simultaneous equations models.

The structure of the VAR model is just the generalization of the univariate AR model. It is a system regression model that treats all the variables as endogenous, and allows each of the variables to depend on \(p\) lagged values of itself and of all the other variables in the system.

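A VAR model of order \(p\) can be represented as

\[\mathbf y_t = \mathbf a_0 + \mathbf A_1 \mathbf y_{t-1} + \mathbf A_2 \mathbf y_{t-2} + ... + \mathbf A_p \mathbf y_{t-p} + \mathbf u_t,\]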

where \(\mathbf y_t\) is a \(N\times 1\) vector containing \(N\) endogenous variables, \(\mathbf a_0\) is a \(N\times 1\) vector of constants, \(\mathbf A_1, \mathbf A_2, ..., \mathbf A_p\) are the \(p\) \(N\times N\) matrices of autoregressive coefficients, and \(\mathbf u_t\) is a \(N\times 1\) vector of white noise disturbances.

VAR(1) Model ¶

To illustrate, let’s consider the simplest VAR model where we have \(p=1\) .

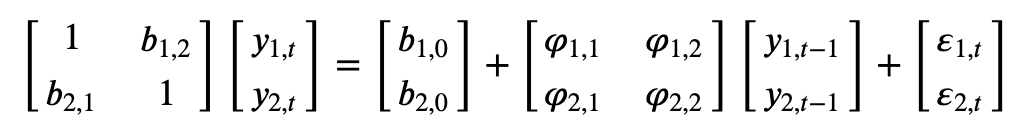

Structural and Reduced Forms of the VAR model ¶

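Consider the following bivariate system, written in matrix form as

\[\mathbf B \mathbf y_t = \mathbf Q_0 + \mathbf Q_1 \mathbf y_{t-1} + \boldsymbol\varepsilon_t, \qquad \mathbf B = \begin{bmatrix} 1 & b_{1,2} \\ b_{2,1} & 1 \end{bmatrix},\]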

where both \(y_{1,t}\) and \(y_{2,t}\) are assumed to be stationary, and \(\varepsilon_{1,t}\) and \(\varepsilon_{2,t}\) are the uncorrelated error terms with standard deviation \(\sigma_{1,t}\) and \(\sigma_{2,t}\) , respectively.

Structural VAR (VAR in primitive form) ¶

Described by equation above

Captures contemporaneous feedback effects ( \(b_{1,2}, b_{2,1}\) )

Not very practical to use

Contemporaneous terms cannot be used in forecasting

Needs further manipulation to make it more useful (e.g. multiplying the matrix equation by \(\mathbf B^{-1}\) )

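Multiplying the matrix equation by \(\mathbf B^{-1}\) , we get

\[\mathbf y_t = \mathbf a_0 + \mathbf A_1 \mathbf y_{t-1} + \mathbf u_t,\]

where \(\mathbf a_0 = \mathbf B^{-1}\mathbf Q_0\) , \(\mathbf A_1 = \mathbf B^{-1}\mathbf Q_1\) , and \(\mathbf u_t = \mathbf B^{-1}\boldsymbol\varepsilon_t\) . Equivalently, using the lag/backshift operator \(L\) ,

\[(\mathbf I_N - \mathbf A_1 L)\,\mathbf y_t = \mathbf a_0 + \mathbf u_t.\]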

Reduced-form VAR (VAR in standard form) ¶

Only dependent on lagged endogenous variables (no contemporaneous feedback)

Can be estimated using ordinary least squares (OLS)
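The mapping from the structural to the reduced form can be checked numerically. The sketch below assumes the structural system has the form \(\mathbf B \mathbf y_t = \mathbf Q_0 + \mathbf Q_1 \mathbf y_{t-1} + \boldsymbol\varepsilon_t\), as implied by the relations \(\mathbf a_0 = \mathbf B^{-1}\mathbf Q_0\) and \(\mathbf A_1 = \mathbf B^{-1}\mathbf Q_1\); all numerical values are hypothetical.

```python
import numpy as np

# Hypothetical structural parameters for B y_t = Q0 + Q1 y_{t-1} + eps_t.
B = np.array([[1.0, 0.4],
              [0.2, 1.0]])       # off-diagonals play the role of b_{1,2} and b_{2,1}
Q0 = np.array([1.0, 0.5])
Q1 = np.array([[0.5, 0.1],
               [0.2, 0.3]])

a0 = np.linalg.solve(B, Q0)      # a_0 = B^{-1} Q_0
A1 = np.linalg.solve(B, Q1)      # A_1 = B^{-1} Q_1

# Uncorrelated structural errors become correlated reduced-form errors:
# u_t = B^{-1} eps_t, so Sigma_u = B^{-1} Sigma_eps B^{-T}.
sigma_eps = np.diag([1.0, 0.25])
B_inv = np.linalg.inv(B)
sigma_u = B_inv @ sigma_eps @ B_inv.T
```

The nonzero off-diagonal entries of `sigma_u` show how the contemporaneous feedback terms are absorbed into the covariance of the reduced-form errors.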

VMA infinite representation and Stationarity ¶

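Consider the reduced form, standard VAR(1) model

\[\mathbf y_t = \mathbf a_0 + \mathbf A_1 \mathbf y_{t-1} + \mathbf u_t.\]

Assuming that the process is weakly stationary and taking the expectation of \(\mathbf y_t\) , we have

\[\boldsymbol\mu = E\left[\mathbf y_t\right] = \mathbf a_0 + \mathbf A_1 \boldsymbol\mu \quad\Rightarrow\quad \boldsymbol\mu = (\mathbf I_N - \mathbf A_1)^{-1}\mathbf a_0,\]

where \(E\left[\mathbf u_t\right]=0.\) If we let \(\tilde{\mathbf y}_{t}\equiv \mathbf y_t - \boldsymbol \mu\) be the mean-corrected time series, we can write the model as

\[\tilde{\mathbf y}_t = \mathbf A_1 \tilde{\mathbf y}_{t-1} + \mathbf u_t.\]

Substituting \(\tilde{\mathbf y}_{t-1} = \mathbf A_1 \tilde{\mathbf y}_{t-2} + \mathbf u_{t-1}\) ,

\[\tilde{\mathbf y}_t = \mathbf A_1^2 \tilde{\mathbf y}_{t-2} + \mathbf A_1 \mathbf u_{t-1} + \mathbf u_t.\]

If we keep iterating, we get

\[\tilde{\mathbf y}_t = \sum_{i=0}^{\infty} \mathbf A_1^i \mathbf u_{t-i}.\]

Letting \(\boldsymbol\Theta_i\equiv \mathbf A_1^i\) , we get the VMA infinite representation

\[\tilde{\mathbf y}_t = \sum_{i=0}^{\infty} \boldsymbol\Theta_i \mathbf u_{t-i}.\]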

Stationarity of the VAR(1) model ¶

All \(N\) eigenvalues of the matrix \(A_1\) must be less than 1 in modulus; otherwise the coefficient matrix \(A_1^j\) would either explode or converge to a nonzero matrix as \(j\) goes to infinity.

Provided that the covariance matrix of \(u_t\) exists, the requirement that all the eigenvalues of \(A_1\) are less than one in modulus is a necessary and sufficient condition for \(y_t\) to be stable, and thus, stationary.

All roots of \(det\left(\mathbf I_N - \mathbf A_1 z\right)=0\) must lie outside the unit circle.

VAR(p) Model ¶

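Consider the VAR(p) model described by

\[\mathbf y_t = \mathbf a_0 + \mathbf A_1 \mathbf y_{t-1} + ... + \mathbf A_p \mathbf y_{t-p} + \mathbf u_t.\]

Using the lag operator \(L\) , we get

\[\tilde{\mathbf A}(L)\,\mathbf y_t = \mathbf a_0 + \mathbf u_t,\]

where \(\tilde{\mathbf A} (L) = (\mathbf I_N - A_1 L - ... - A_p L^p)\) . Assuming that \(\mathbf y_t\) is weakly stationary, we obtain that

\[\boldsymbol\mu = E\left[\mathbf y_t\right] = (\mathbf I_N - \mathbf A_1 - ... - \mathbf A_p)^{-1}\mathbf a_0.\]

Defining \(\tilde{\mathbf y}_t=\mathbf y_t -\boldsymbol\mu\) , we have

\[\tilde{\mathbf A}(L)\,\tilde{\mathbf y}_t = \mathbf u_t.\]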

Properties ¶

\(Cov[\mathbf y_t, \mathbf u_t] = \Sigma_u\) , the covariance matrix of \(\mathbf u_t\)

\(Cov[\mathbf y_{t-h}, \mathbf u_t] = \mathbf 0\) for any \(h>0\)

\(\boldsymbol\Gamma_h = \mathbf A_1 \boldsymbol\Gamma_{h-1} +...+\mathbf A_p \boldsymbol\Gamma_{h-p}\) for \(h>0\)

\(\boldsymbol\rho_h = \boldsymbol \Psi_1 \boldsymbol\rho_{h-1} +...+\boldsymbol \Psi_p \boldsymbol\rho_{h-p}\) for \(h>0\) , where \(\boldsymbol \Psi_i = \mathbf D^{-1}\mathbf A_i \mathbf D\) and \(\mathbf D\) is the diagonal matrix of standard deviations defined earlier

Stationarity of VAR(p) model ¶

All roots of \(det\left(\mathbf I_N - \mathbf A_1 z - ...- \mathbf A_p z^p\right)=0\) must lie outside the unit circle.
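The root condition is equivalent to requiring that all eigenvalues of the companion matrix of the VAR(p) lie strictly inside the unit circle, which is easier to check numerically. The sketch below builds the companion matrix with NumPy; the helper names and the example coefficient matrices are assumptions chosen for illustration.

```python
import numpy as np

def companion_matrix(coefs):
    """Stack A_1..A_p (each N x N) into the (N*p) x (N*p) companion matrix."""
    p = len(coefs)
    n = coefs[0].shape[0]
    top = np.hstack(coefs)
    if p == 1:
        return top
    # Identity block shifts y_{t-1}, ..., y_{t-p+1} down by one lag.
    bottom = np.hstack([np.eye(n * (p - 1)), np.zeros((n * (p - 1), n))])
    return np.vstack([top, bottom])

def is_stable(coefs):
    """True if every eigenvalue of the companion matrix has modulus below 1."""
    eig = np.linalg.eigvals(companion_matrix(coefs))
    return bool(np.all(np.abs(eig) < 1.0))

# Illustrative VAR(2) coefficients (not taken from the air quality example).
A1 = np.array([[0.5, 0.1], [0.4, 0.5]])
A2 = np.array([[0.0, 0.0], [0.25, 0.0]])
stable = is_stable([A1, A2])

# A bivariate random walk has unit eigenvalues and fails the check.
unit_root = is_stable([np.eye(2)])
```

For \(p=1\) the companion matrix is just \(\mathbf A_1\), so the same function covers the VAR(1) condition stated earlier.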

Specification of a VAR model: Choosing the order p ¶

Fitting a VAR model involves the selection of a single parameter: the model order or lag length \(p\) . The most common approach in selecting the best model is choosing the \(p\) that minimizes one or more information criteria evaluated over a range of model orders. The criterion consists of two terms: the first one characterizes the entropy rate or prediction error, and second one characterizes the number of freely estimated parameters in the model. Minimizing both terms will allow us to identify a model that accurately models the data while preventing overfitting (due to too many parameters).

Some of the commonly used information criteria include the Akaike Information Criterion (AIC), Schwarz’s Bayesian Information Criterion (BIC), the Hannan-Quinn Criterion (HQ), and Akaike’s Final Prediction Error Criterion (FPE). The equation for each is shown below.

Akaike’s information criterion

\[MAIC(p) = \ln\left|\tilde{\boldsymbol\Sigma}_u\right| + \frac{2k}{T}\]

Schwarz’s Bayesian information criterion

\[MBIC(p) = \ln\left|\tilde{\boldsymbol\Sigma}_u\right| + \frac{k \ln T}{T}\]

Hannan-Quinn’s information criterion

\[MHQ(p) = \ln\left|\tilde{\boldsymbol\Sigma}_u\right| + \frac{2k \ln(\ln T)}{T}\]

Final prediction error

\[MFPE(p) = \left(\frac{T + Np + 1}{T - Np - 1}\right)^{N} \left|\tilde{\boldsymbol\Sigma}_u\right|\]

where \(M\) stands for multivariate, \(\tilde{\boldsymbol\Sigma}_u\) is the estimated covariance matrix of the residuals, \(T\) is the number of observations in the sample, and \(k\) is the total number of estimated coefficients in the VAR( \(p\) ) (i.e. \(N^2p + N\) where \(N\) is the number of equations and \(p\) is the number of lags).

Among the metrics above, AIC and BIC are the most widely used criteria, but BIC penalizes higher orders more. For moderate and large sample sizes, AIC and FPE are essentially equivalent, but FPE may outperform AIC for small sample sizes. HQ also penalizes higher order models more heavily than AIC, but less than BIC. However, under small sample conditions, AIC/FPE may outperform BIC and/or HQ in selecting true model order.

There are cases when AIC and FPE show no clear minimum over a range of model orders or lag lengths. In this case, there may be a clear “elbow” when we plot the values over model order. This may indicate a suitable model order.
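As a rough sketch of order selection, the block below fits VAR(p) models by least squares for several values of \(p\) and evaluates AIC, BIC and HQ in their usual log-determinant-plus-penalty form, with \(k = N^2p + N\) estimated coefficients. The helper names, the simulated bivariate VAR(1) and the maximum lag of 4 are assumptions for illustration; this is not the routine used to produce the results in this chapter.

```python
import numpy as np

def fit_var_ols(y, p):
    """OLS fit of a VAR(p) with intercept; returns (coefficients, residuals)."""
    n_obs = y.shape[0]
    # Regressors: a constant, then y_{t-1}, ..., y_{t-p}.
    X = np.hstack([np.ones((n_obs - p, 1))] +
                  [y[p - i:n_obs - i] for i in range(1, p + 1)])
    Y = y[p:]
    beta = np.linalg.lstsq(X, Y, rcond=None)[0]
    return beta, Y - X @ beta

def info_criteria(y, p, max_p):
    """AIC, BIC and HQ for lag order p, evaluated on a common sample."""
    y_used = y[max_p - p:]            # every order uses the same T effective observations
    _, resid = fit_var_ols(y_used, p)
    T, N = resid.shape
    k = N * N * p + N                 # number of estimated coefficients
    log_det = np.linalg.slogdet(resid.T @ resid / T)[1]
    return {"aic": log_det + 2 * k / T,
            "bic": log_det + k * np.log(T) / T,
            "hq": log_det + 2 * k * np.log(np.log(T)) / T}

# Toy data from a VAR(1), so the true order is known.
rng = np.random.default_rng(1)
A = np.array([[0.5, 0.2], [0.1, 0.4]])
y = np.zeros((2000, 2))
for t in range(1, 2000):
    y[t] = A @ y[t - 1] + rng.standard_normal(2)

max_p = 4
scores = {p: info_criteria(y, p, max_p) for p in range(1, max_p + 1)}
best_bic = min(scores, key=lambda p: scores[p]["bic"])
```

Evaluating every candidate order on the same effective sample keeps the criteria comparable across \(p\).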

Building a VAR model ¶

In this section, we show how we can use the VAR model to forecast the air quality data. We follow these steps:

Check for stationarity.

Split data into train and test sets.

Select the VAR order p that gives the lowest information criterion values.

Fit VAR model of order p on the train set.

Generate forecast.

Evaluate model performance.

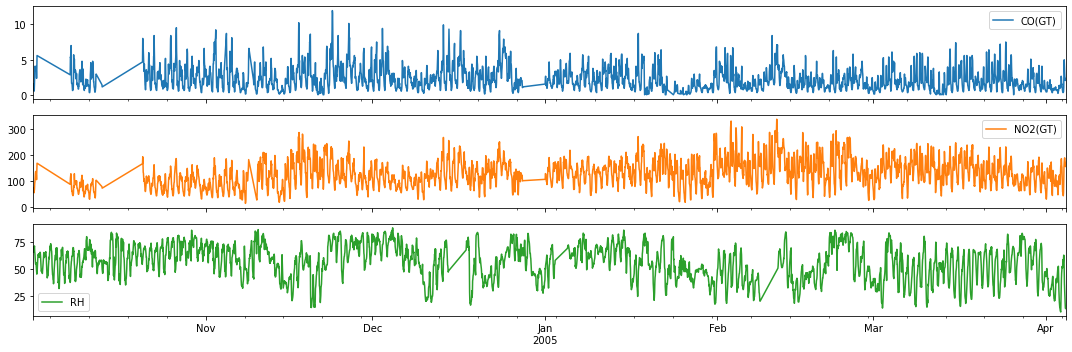

Example 2: Forecasting Air Quality Data (CO, NO2 and RH) ¶

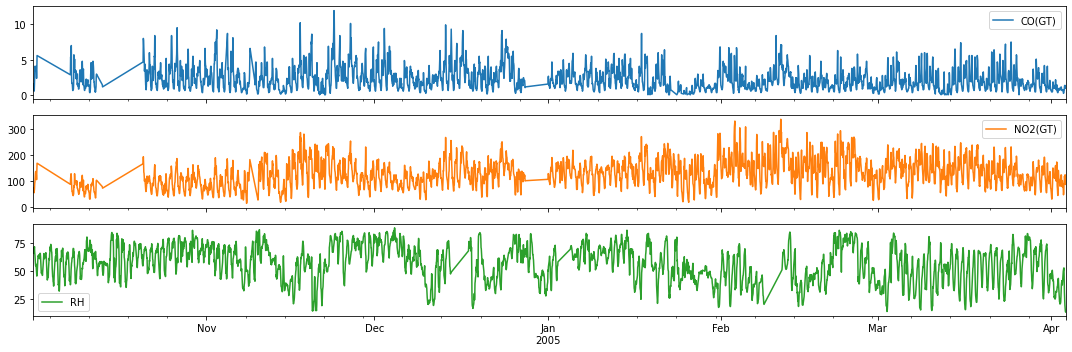

For illustration, we consider the carbon monoxide, nitrogen dioxide and relative humidity time series from the Air Quality Dataset from 1 October 2004.

Quick inspection before we proceed with modeling…

To find out whether the multivariate approach is better than treating the signals separately as univariate time series, we examine the relationship between the variables using CCF. The sample below shows the CCF for the last 100 data points of the Air quality data for CO, NO2 and RH.

Observation/s :

As shown in the plot above, we can see that there’s a relationship between:

CO and some lagged values of RH and NO2

NO2 and some lagged values of RH and CO

RH and some lagged values of CO and NO2

This shows that we can benefit from the multivariate approach, so we proceed with building the VAR model.

1. Check stationarity ¶

To check for stationarity, we use the Kwiatkowski–Phillips–Schmidt–Shin (KPSS) test and the Augmented Dickey-Fuller (ADF) test. For the data to be suitable for VAR modelling, we need each of the variables in the multivariate time series to be stationary. In both tests, we need the test statistic to be less than the critical values to conclude that a time series (a variable) is stationary.

Kwiatkowski–Phillips–Schmidt–Shin (KPSS) test ¶

Recall: Null hypothesis is that an observable time series is stationary around a deterministic trend (i.e. trend-stationary) against the alternative of a unit root.

| | CO(GT) | NO2(GT) | RH |

|---|---|---|---|

| Test statistic | 0.0702 | 0.3239 | 0.1149 |

| p-value | 0.1000 | 0.0100 | 0.1000 |

| Critical value - 1% | 0.2160 | 0.2160 | 0.2160 |

| Critical value - 2.5% | 0.2160 | 0.2160 | 0.2160 |

| Critical value - 5% | 0.1460 | 0.1460 | 0.1460 |

| Critical value - 10% | 0.1190 | 0.1190 | 0.1190 |

From the KPSS test, CO and RH are stationary, while the test statistic for NO2 exceeds all the listed critical values.

Augmented Dickey-Fuller (ADF) test ¶

Recall: Null hypothesis is that a unit root is present in a time series sample against the alternative that the time series is stationary.

| | CO(GT) | NO2(GT) | RH |

|---|---|---|---|

| Test statistic | -7.0195 | -6.7695 | -6.8484 |

| p-value | 0.0000 | 0.0000 | 0.0000 |

| Critical value - 1% | -3.4318 | -3.4318 | -3.4318 |

| Critical value - 5% | -2.8622 | -2.8622 | -2.8622 |

| Critical value - 10% | -2.5671 | -2.5671 | -2.5671 |

From the ADF test, CO, NO2 and RH are stationary.
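The decision rule can be applied directly to the reported numbers. The sketch below copies the test statistics and the 5% critical values from the two tables above and compares them; the function and variable names are illustrative, and the choice of the 5% level is an assumption.

```python
# Test statistics and 5% critical values copied from the KPSS and ADF tables above.
kpss_stat = {"CO(GT)": 0.0702, "NO2(GT)": 0.3239, "RH": 0.1149}
adf_stat = {"CO(GT)": -7.0195, "NO2(GT)": -6.7695, "RH": -6.8484}
KPSS_CRIT_5 = 0.1460
ADF_CRIT_5 = -2.8622

def stationary_by_kpss(stat, crit):
    """KPSS null is stationarity: a statistic below the critical value fails to reject it."""
    return stat < crit

def stationary_by_adf(stat, crit):
    """ADF null is a unit root: a statistic below the critical value rejects it."""
    return stat < crit

kpss_verdict = {name: stationary_by_kpss(s, KPSS_CRIT_5) for name, s in kpss_stat.items()}
adf_verdict = {name: stationary_by_adf(s, ADF_CRIT_5) for name, s in adf_stat.items()}
```

The comparison has the same direction in both tests, but the reasoning differs: a small KPSS statistic means the stationarity null is retained, while a strongly negative ADF statistic means the unit-root null is rejected.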

2. Split data into train and test sets ¶

We use the dataset from 01 October 2004 to predict the last 24 points (24 hrs/1 day) in the dataset.

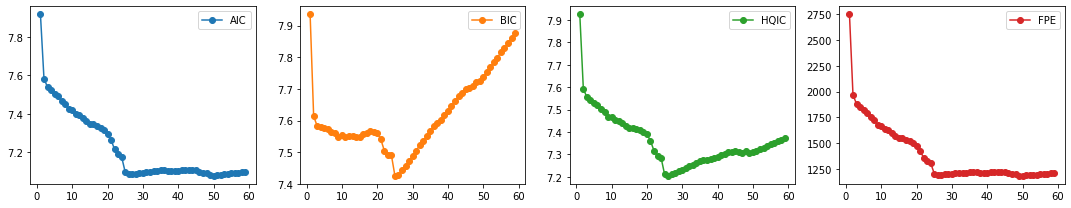

3. Select order p ¶

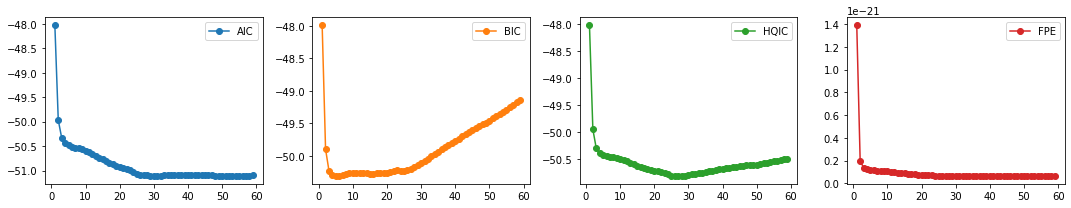

We compute the different multivariate information criteria (AIC, BIC, HQIC), and FPE. We pick the set of order parameters that correspond to the lowest values.

We find BIC and HQIC to be lowest at \(p=26\) , and we also observe an elbow in the plots for AIC, and FPE, so we choose the number of lags to be 26.

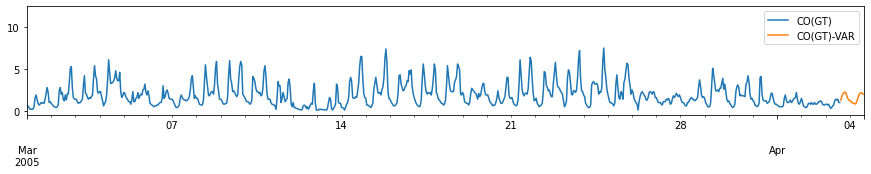

4. Fit VAR model with chosen order ¶

5. Get forecast ¶
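The split, fit and forecast steps can be sketched with plain NumPy as follows. This is a minimal illustration on simulated data, not the code that produced the results in this chapter; the helper names `fit_var` and `forecast_var`, the toy coefficients and the lag order of 1 are all assumptions.

```python
import numpy as np

def fit_var(y, p):
    """OLS estimates (a0, [A_1..A_p]) of a VAR(p) with intercept."""
    n_obs, n = y.shape
    X = np.hstack([np.ones((n_obs - p, 1))] +
                  [y[p - i:n_obs - i] for i in range(1, p + 1)])
    beta = np.linalg.lstsq(X, y[p:], rcond=None)[0]
    a0 = beta[0]
    # Each block of n rows holds the transpose of one lag matrix.
    coefs = [beta[1 + i * n:1 + (i + 1) * n].T for i in range(p)]
    return a0, coefs

def forecast_var(y, a0, coefs, steps):
    """Recursive forecasts: each predicted value is fed back in as a lag."""
    history = list(y[-len(coefs):])
    out = []
    for _ in range(steps):
        pred = a0 + sum(A @ history[-i] for i, A in enumerate(coefs, start=1))
        out.append(pred)
        history.append(pred)
    return np.array(out)

# Toy bivariate data standing in for the real series.
rng = np.random.default_rng(2)
A_true = np.array([[0.6, 0.2], [0.1, 0.5]])
data = np.zeros((524, 2))
for t in range(1, 524):
    data[t] = np.array([1.0, 2.0]) + A_true @ data[t - 1] + rng.standard_normal(2)

train, test = data[:-24], data[-24:]       # hold out the last 24 observations
a0_hat, coefs_hat = fit_var(train, p=1)
pred = forecast_var(train, a0_hat, coefs_hat, steps=24)
```

The held-out `test` block is only used afterwards to score `pred`, so no information from the forecast window leaks into the fit.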

Performance Evaluation: Comparison with ARIMA model ¶

When using ARIMA, we treat each variable as a univariate time series, and we perform the forecasting for each variable: 1 for CO, 1 for NO2, and 1 for RH

| Date_Time | CO(GT)-ARIMA | NO2(GT)-ARIMA | RH-ARIMA |

|---|---|---|---|

| 2005-04-03 15:00:00 | 0.999865 | 87.002935 | 14.502893 |

| 2005-04-03 16:00:00 | 0.999729 | 87.005870 | 16.743239 |

| 2005-04-03 17:00:00 | 0.999594 | 87.008806 | 19.253414 |

| 2005-04-03 18:00:00 | 0.999458 | 87.011741 | 21.715709 |

| 2005-04-03 19:00:00 | 0.999323 | 87.014676 | 23.974483 |

Performance Metrics

| | CO(GT)-VAR | NO2(GT)-VAR | RH-VAR | CO(GT)-ARIMA | NO2(GT)-ARIMA | RH-ARIMA |

|---|---|---|---|---|---|---|

| MAE | 0.685005 | 31.687612 | 9.748883 | 1.057097 | 59.283376 | 16.239017 |

| MSE | 1.180781 | 1227.046604 | 111.996306 | 2.163021 | 4404.904459 | 333.622140 |

| MAPE | 43.508500 | 29.811066 | 35.565987 | 56.960617 | 46.190671 | 51.199023 |

MAE: VAR forecasts have lower errors than ARIMA forecasts for all variables (CO, NO2 and RH), as shown in the table above.

MSE: VAR forecasts have lower errors for all variables (CO, NO2 and RH).

MAPE: VAR forecasts have lower errors for all variables (CO, NO2 and RH).

Training time is significantly reduced when using VAR compared to ARIMA (<0.1s run time for VAR while ~20s for ARIMA)
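For reference, the three error metrics in the table can be computed as in the sketch below. The function names and the small example arrays are illustrative; MAPE is expressed in percent here, which is consistent with the magnitudes reported in the table, and it is undefined when an actual value is zero.

```python
import numpy as np

def mae(y_true, y_pred):
    """Mean absolute error."""
    return float(np.mean(np.abs(y_true - y_pred)))

def mse(y_true, y_pred):
    """Mean squared error."""
    return float(np.mean((y_true - y_pred) ** 2))

def mape(y_true, y_pred):
    """Mean absolute percentage error, in percent (requires nonzero y_true)."""
    return float(np.mean(np.abs((y_true - y_pred) / y_true)) * 100)

# Tiny hand-checkable example.
y_true = np.array([1.0, 2.0, 4.0])
y_pred = np.array([1.0, 3.0, 2.0])
scores = {"MAE": mae(y_true, y_pred),
          "MSE": mse(y_true, y_pred),
          "MAPE": mape(y_true, y_pred)}
```

MSE penalizes large misses more heavily than MAE, which is why the two metrics can rank models differently.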

Structural VAR Analysis ¶

In addition to forecasting, VAR models are also used for structural inference and policy analysis. In macroeconomics, this structural analysis has been extensively employed to investigate the transmission mechanisms of macroeconomic shocks (e.g., monetary shocks, financial shocks) and test economic theories. Particular assumptions are imposed about the causal structure of the dataset, and the resulting causal impacts of unexpected shocks (also called innovations or perturbations) to a specific variable on the different variables in the model are summarized. In this section, we cover two of the common methods for summarizing these causal impacts: (1) impulse response functions, and (2) forecast error variance decompositions.

Impulse Response Function (IRF) ¶

Coefficients of the VAR models are often difficult to interpret so practitioners often estimate the impulse response function.

IRFs trace out the time path of the effects of an exogenous shock to one (or more) of the endogenous variables on some or all of the other variables in a VAR system.

IRF traces out the response of the dependent variable of the VAR system to shocks (also called innovations or impulses) in the error terms.

IRF in the VAR system for Air Quality ¶

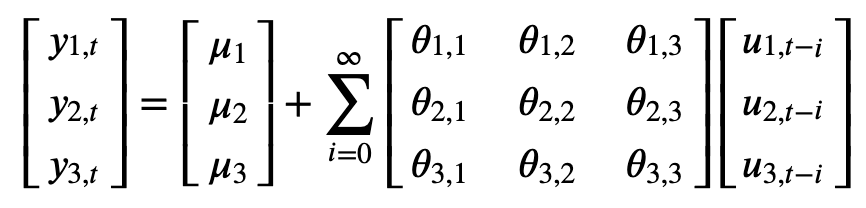

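Let \(y_{1,t}\) , \(y_{2,t}\) and \(y_{3,t}\) be the time series corresponding to CO signal, NO2 signal, and RH signal, respectively. Consider the moving average representation of the system shown below:

\[\mathbf y_t = \boldsymbol\mu + \sum_{i=0}^{\infty} \boldsymbol\Theta_i \mathbf u_{t-i}, \qquad \mathbf y_t = \left[y_{1,t}, y_{2,t}, y_{3,t}\right]^\prime, \quad \mathbf u_t = \left[u_{1,t}, u_{2,t}, u_{3,t}\right]^\prime\]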

Suppose \(u_1\) in the first equation increases by a value of one standard deviation.

This shock will change \(y_1\) in the current as well as the future periods.

This shock will also have an impact on \(y_2\) and \(y_3\) .

Suppose \(u_2\) in the second equation increases by a value of one standard deviation.

This shock will change \(y_2\) in the current as well as the future periods.

This shock will also have an impact on \(y_1\) and \(y_3\) .

Suppose \(u_3\) in the third equation increases by a value of one standard deviation.

This shock will change \(y_3\) in the current as well as the future periods.

This shock will also have an impact on \(y_1\) and \(y_2\) .
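The propagation of a one-standard-deviation shock can be sketched for a VAR(1) as follows. The block assumes orthogonalized shocks obtained from a Cholesky factor of the residual covariance, which is one common convention and not necessarily the one used for the plots in this chapter; the coefficient and covariance values are hypothetical.

```python
import numpy as np

def orth_irf(A1, sigma_u, horizon):
    """Responses to one-standard-deviation orthogonalized shocks in a VAR(1).

    Entry [h, i, j] is the response of variable i, h periods after a shock to variable j.
    """
    P = np.linalg.cholesky(sigma_u)
    out = np.empty((horizon + 1,) + A1.shape)
    theta = np.eye(A1.shape[0])           # Theta_0 = I
    for h in range(horizon + 1):
        out[h] = theta @ P
        theta = A1 @ theta                # Theta_{h+1} = A1 Theta_h
    return out

# Hypothetical three-variable system (placeholders for CO, NO2 and RH).
A1 = np.array([[0.5, 0.1, 0.0],
               [0.2, 0.4, 0.1],
               [0.0, 0.1, 0.6]])
sigma_u = np.array([[1.0, 0.3, 0.1],
                    [0.3, 1.0, 0.2],
                    [0.1, 0.2, 1.0]])
irf = orth_irf(A1, sigma_u, horizon=24)
```

Because the system is stable, the responses shrink toward zero as the horizon grows, matching the decaying curves typically seen in IRF plots.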

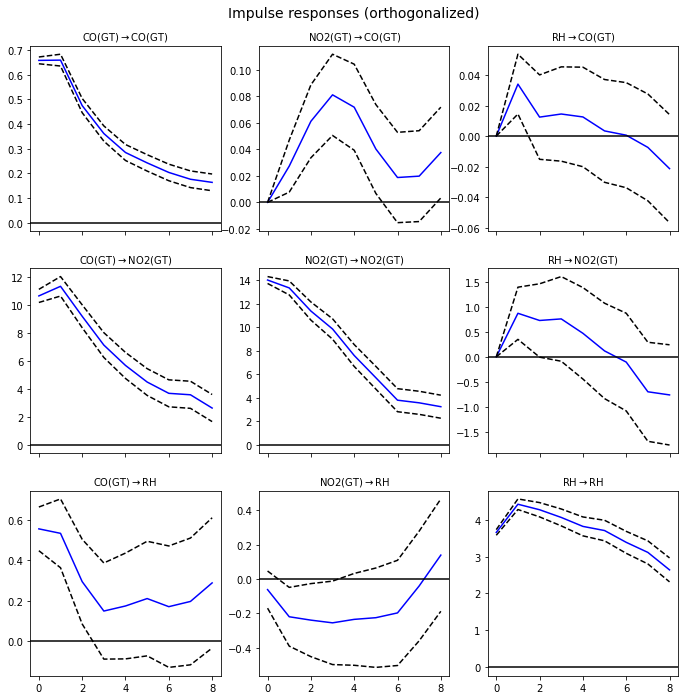

Observation/s:

Effects of exogenous perturbation/shocks (1SD) of a variable on itself:

CO \(\rightarrow\) CO: A shock in the value of CO has a large effect on CO in the early hours, but this decays over time.

NO2 \(\rightarrow\) NO2: A shock in the value of NO2 has a large effect on NO2 in the early hours, but this decays over time.

RH \(\rightarrow\) RH: A shock in the value of RH has its largest effect on RH after 1 hour, and this effect decays over time.

Effects of exogenous perturbation/shocks of a variable on another:

CO \(\rightarrow\) NO2: A shock in the value of CO has its largest effect on NO2 after 1 hour, and this effect decays over time.

CO \(\rightarrow\) RH: A shock in the value of CO has an immediate effect on the value of RH. However, the effect decreases after an hour, and the value seems to stay at around 0.2.

NO2 \(\rightarrow\) CO: A shock in NO2 only causes a small effect on the values of CO. There seems to be a delayed effect, peaking after 3 hours, but the magnitude is still small.

NO2 \(\rightarrow\) RH: A shock in NO2 causes a small (negative) effect on the values of RH. The magnitude seems to decline further after 6 hours. The value of the IRF reaches zero in about 7 hours.

RH \(\rightarrow\) CO: A shock in RH only causes a small effect on the values of CO.

RH \(\rightarrow\) NO2: A shock in the value of RH has its largest effect on NO2 after 1 hour, and this effect decays over time. The value of the IRF reaches zero after 6 hours.

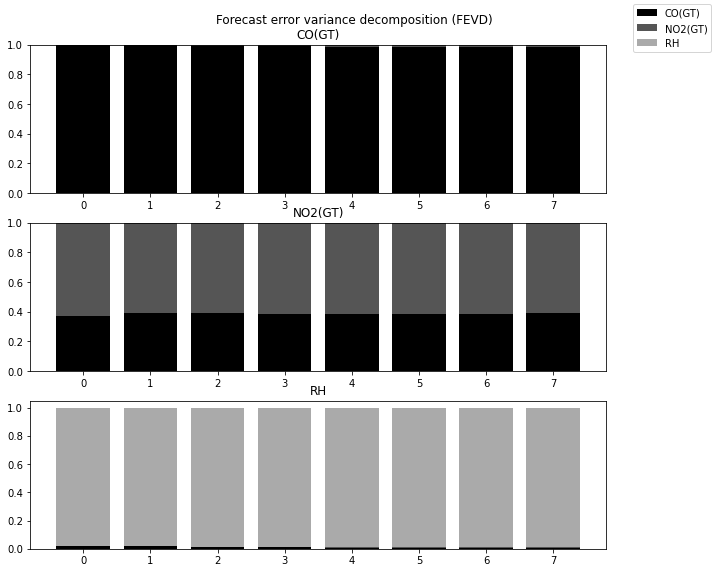

Forecast Error Variance Decomposition (FEVD) ¶

FEVD indicates the amount of information each variable contributes to the other variables in the autoregression

While impulse response functions trace the effects of a shock to one endogenous variable on to the other variables in the VAR, variance decomposition separates the variation in an endogenous variable into the component shocks to the VAR.

It determines how much of the forecast error variance of each of the variables can be explained by exogenous shocks to the other variables.

For CO, the variance is mostly explained by exogenous shocks to CO. This decreases over time but only by a small amount.

For NO2, the variance is mostly explained by exogenous shocks to NO2 and CO.

For RH, the variance is mostly explained by exogenous shocks to RH. Over time, the contribution of the exogenous shocks to CO increases.
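The decomposition can be sketched from orthogonalized impulse responses: the share of the forecast error variance of variable \(i\) attributed to shock \(j\) is the cumulative sum of squared responses to \(j\), divided by the total over all shocks. The function name `fevd` and the bivariate VAR(1) values are assumptions for illustration, as is the Cholesky ordering.

```python
import numpy as np

def fevd(irf):
    """Forecast error variance shares from orthogonalized impulse responses.

    irf[h, i, j] is the response of variable i to shock j at horizon h.
    The result has the same shape; entry [h, i, j] is the share of the
    (h+1)-step forecast error variance of variable i due to shock j.
    """
    contrib = np.cumsum(irf ** 2, axis=0)
    return contrib / contrib.sum(axis=2, keepdims=True)

# Hypothetical bivariate VAR(1) with Cholesky-orthogonalized shocks.
A1 = np.array([[0.5, 0.1], [0.3, 0.4]])
sigma_u = np.array([[1.0, 0.5], [0.5, 2.0]])
P = np.linalg.cholesky(sigma_u)
irf = np.array([np.linalg.matrix_power(A1, h) @ P for h in range(10)])
shares = fevd(irf)
```

With a Cholesky ordering, the first variable's one-step forecast error is attributed entirely to its own shock, so the ordering of the variables matters for the interpretation.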

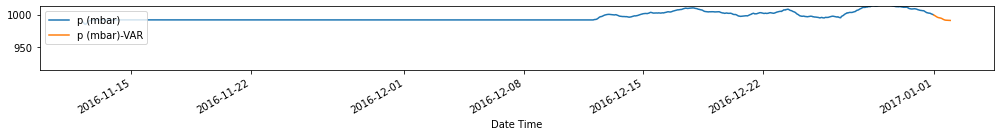

Example 3: Forecasting the Jena climate data ¶

We try to forecast the Jena climate data using the method outlined above. We will train the VAR model using hourly weather measurements from January 1, 2009 (00:10) up to December 29, 2014 (18:10). The performance of the model will be evaluated on the test set which contains data from December 29, 2014 (19:10) to December 31, 2014 (23:20) which is equivalent to 17,523 data points for each of the variables.

Load dataset ¶

Check stationarity of each variable using ADF test ¶

| | p (mbar) | T (degC) | Tpot (K) | Tdew (degC) | rh (%) | VPmax (mbar) | VPact (mbar) | VPdef (mbar) | sh (g/kg) | H2OC (mmol/mol) | rho (g/m**3) | wv (m/s) | max. wv (m/s) | wd (deg) |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Test statistic | -15.5867 | -7.9586 | -8.3354 | -8.5750 | -17.7069 | -9.1945 | -9.0103 | -13.5492 | -9.0827 | -9.0709 | -9.3980 | -24.2424 | -24.3052 | -19.8394 |

| p-value | 0.0000 | 0.0000 | 0.0000 | 0.0000 | 0.0000 | 0.0000 | 0.0000 | 0.0000 | 0.0000 | 0.0000 | 0.0000 | 0.0000 | 0.0000 | 0.0000 |

| Critical value - 1% | -3.4305 | -3.4305 | -3.4305 | -3.4305 | -3.4305 | -3.4305 | -3.4305 | -3.4305 | -3.4305 | -3.4305 | -3.4305 | -3.4305 | -3.4305 | -3.4305 |

| Critical value - 5% | -2.8616 | -2.8616 | -2.8616 | -2.8616 | -2.8616 | -2.8616 | -2.8616 | -2.8616 | -2.8616 | -2.8616 | -2.8616 | -2.8616 | -2.8616 | -2.8616 |

| Critical value - 10% | -2.5668 | -2.5668 | -2.5668 | -2.5668 | -2.5668 | -2.5668 | -2.5668 | -2.5668 | -2.5668 | -2.5668 | -2.5668 | -2.5668 | -2.5668 | -2.5668 |

From the values above, all the components of the Jena climate data are stationary, so we’ll use all the variables in our VAR model.

Select order p ¶

The model order that yields the minimum value differs across the information criteria, so there is no clear minimum. We see an elbow at \(p=5\) , but if we look at AIC and HQIC we observe another elbow/local minimum at \(p=26\) . So, we choose \(p=26\) as our lag length.

Train VAR model using the training and validation data ¶

Forecast 24-hour weather measurements and evaluate performance on test set ¶

Evaluate forecasts

| | p (mbar)-VAR | T (degC)-VAR | Tpot (K)-VAR | Tdew (degC)-VAR | rh (%)-VAR | VPmax (mbar)-VAR | VPact (mbar)-VAR | VPdef (mbar)-VAR | sh (g/kg)-VAR | H2OC (mmol/mol)-VAR | rho (g/m**3)-VAR | wv (m/s)-VAR | max. wv (m/s)-VAR | wd (deg)-VAR |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| MAE | 2.681284 | 2.542025 | 2.603187 | 1.797102 | 10.899944 | 2.526237 | 1.171625 | 2.379343 | 0.744665 | 1.186948 | 12.164486 | 3.136091 | 4.414089 | 66.420597 |

| MSE | 238.321753 | 652.733627 | 618.458262 | 102.257346 | 9581.665059 | 540.666240 | 39.837241 | 490.874048 | 15.612470 | 39.678736 | 9986.126326 | 17352.964659 | 23404.318476 | 87581.698748 |

Observation/s : The VAR(26) model outperformed the naive (MAE= 3.18), seasonal naive (MAE= 2.61) and ARIMA (MAE= 3.19) models.
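The naive and seasonal naive baselines mentioned here are not shown in this chapter, so the sketch below gives one common way to define them. The function names and the toy array are hypothetical, and the season length (for example 24 for hourly data with a daily cycle) is an assumption that has to be chosen for the data at hand.

```python
import numpy as np

def naive_forecast(train, steps):
    """Repeat the last observed row for every forecast step."""
    return np.repeat(train[-1:], steps, axis=0)

def seasonal_naive_forecast(train, steps, season_length):
    """Repeat the last full season, cycling if steps exceeds season_length."""
    last_season = train[-season_length:]
    idx = np.arange(steps) % season_length
    return last_season[idx]

# Toy multivariate series with 6 time steps and 2 variables.
train = np.arange(12.0).reshape(6, 2)
naive = naive_forecast(train, steps=3)
seasonal = seasonal_naive_forecast(train, steps=3, season_length=2)
```

Baselines like these are cheap to compute and give a floor that any fitted model should beat before its extra complexity is justified.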

Forecast 24 hours beyond test set

VAR methods are useful when dealing with multivariate time series, as they allow us to use the relationships between the different variables to forecast.

These models allow us to forecast the different variables simultaneously, with the added benefit of easy (only 1 hyperparameter) and fast training.

Using the fitted VAR model, we can also explain the relationship between variables, and how the perturbation in one variable affects the others by getting the impulse response functions and the variance decomposition of the forecasts.
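As an illustration of the impulse response idea, the responses of a VAR(p) follow from its lag coefficient matrices \(A_1, \dots, A_p\) through the recursion \(\Phi_0 = I\), \(\Phi_h = \sum_{j=1}^{\min(h,p)} \Phi_{h-j} A_j\). The sketch below computes these non-orthogonalized responses; the function name and the coefficient matrix are hypothetical and are not estimates from the Jena data.

```python
import numpy as np

def impulse_responses(coefs, horizon):
    """Non-orthogonalized impulse responses of a VAR(p).

    coefs: list of (k, k) lag matrices [A_1, ..., A_p].
    Returns an array of shape (horizon + 1, k, k) whose entry [h, i, j]
    is the response of variable i, h steps after a unit shock to variable j.
    """
    p = len(coefs)
    k = coefs[0].shape[0]
    phi = np.zeros((horizon + 1, k, k))
    phi[0] = np.eye(k)
    for h in range(1, horizon + 1):
        for j in range(1, min(h, p) + 1):
            phi[h] += phi[h - j] @ coefs[j - 1]
    return phi

# Hypothetical VAR(1): variable 2 feeds into variable 1, but not the reverse
A = np.array([[0.5, 0.1], [0.0, 0.3]])
phi = impulse_responses([A], horizon=5)
print(phi[2])
```

For a VAR(1) the recursion reduces to matrix powers, \(\Phi_h = A_1^h\), which gives a quick sanity check. Orthogonalized responses would additionally require a factorization of the residual covariance matrix, which this sketch omits.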

However, the application of these models is limited due to the stationarity requirement for ALL the variables in the multivariate time series. This method won’t work well if there is at least one variable that’s non-stationary. When dealing with non-stationary multivariate time series, one can explore the use of vector error correction models (VECM).

Preview to the Next Chapter

In the next chapter, we further extend the use of VAR models to explain the relationships between variables in a multivariate time series using Granger causality, which is one of the most common ways to describe causal mechanisms in time series data.

References

Main references

Lütkepohl, H. (2005). New introduction to multiple time series analysis. Berlin: Springer.

Kilian, L., & Lütkepohl, H. (2018). Structural vector autoregressive analysis. Cambridge: Cambridge University Press.



Evaluating variable selection methods for multivariable regression models: A simulation study protocol

Roles Conceptualization, Methodology, Software, Visualization, Writing – original draft, Writing – review & editing

Affiliation Institute of Clinical Biometrics, Center for Medical Data Science, Medical University of Vienna, Vienna, Austria

Roles Conceptualization, Funding acquisition, Methodology, Supervision, Writing – original draft, Writing – review & editing

Roles Writing – review & editing

Affiliation Institute of Biometry and Clinical Epidemiology, Charité – Universitätsmedizin Berlin, corporate member of Freie Universität Berlin and Humboldt-Universität zu Berlin, Berlin, Germany

Roles Software, Writing – review & editing

Affiliations Institute of Clinical Biometrics, Center for Medical Data Science, Medical University of Vienna, Vienna, Austria, Austrian Agency for Health and Food Safety (AGES), Vienna, Austria


¶ Membership list can be found in the Acknowledgments section.

- Theresa Ullmann,

- Georg Heinze,

- Lorena Hafermann,

- Christine Schilhart-Wallisch,

- Daniela Dunkler,

- for TG2 of the STRATOS initiative

- Published: August 9, 2024

- https://doi.org/10.1371/journal.pone.0308543


Researchers often perform data-driven variable selection when modeling the associations between an outcome and multiple independent variables in regression analysis. Variable selection may improve the interpretability, parsimony and/or predictive accuracy of a model. Yet variable selection can also have negative consequences, such as false exclusion of important variables or inclusion of noise variables, biased estimation of regression coefficients, underestimated standard errors and invalid confidence intervals, as well as model instability. While the potential advantages and disadvantages of variable selection have been discussed in the literature for decades, few large-scale simulation studies have neutrally compared data-driven variable selection methods with respect to their consequences for the resulting models. We present the protocol for a simulation study that will evaluate different variable selection methods: forward selection, stepwise forward selection, backward elimination, augmented backward elimination, univariable selection, univariable selection followed by backward elimination, and penalized likelihood approaches (Lasso, relaxed Lasso, adaptive Lasso). These methods will be compared with respect to false inclusion and/or exclusion of variables, consequences on bias and variance of the estimated regression coefficients, the validity of the confidence intervals for the coefficients, the accuracy of the estimated variable importance ranking, and the predictive performance of the selected models. We consider both linear and logistic regression in a low-dimensional setting (20 independent variables with 10 true predictors and 10 noise variables). The simulation will be based on real-world data from the National Health and Nutrition Examination Survey (NHANES). Publishing this study protocol ahead of performing the simulation increases transparency and allows integrating the perspective of other experts into the study design.

Citation: Ullmann T, Heinze G, Hafermann L, Schilhart-Wallisch C, Dunkler D, for TG2 of the STRATOS initiative (2024) Evaluating variable selection methods for multivariable regression models: A simulation study protocol. PLoS ONE 19(8): e0308543. https://doi.org/10.1371/journal.pone.0308543

Editor: Suyan Tian, The First Hospital of Jilin University, CHINA

Received: February 7, 2024; Accepted: July 25, 2024; Published: August 9, 2024

Copyright: © 2024 Ullmann et al. This is an open access article distributed under the terms of the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original author and source are credited.

Data Availability: This manuscript is a protocol of a simulation study. We intend to share the software code after the study has been conducted and published. This will allow recreating our data and reproducing our simulation study.

Funding: This research was funded in part by the Austrian Science Fund (FWF, https://www.fwf.ac.at/en/) [I-4739-B] (for T.U. and C.W.) and by the German Research Foundation (DFG, https://www.dfg.de/en) [RA 2347/8-1] (for L.H.). For open access purposes, the author has applied a CC BY public copyright license to any author accepted manuscript version arising from this submission. The funders did not and will not have any role in study design, data collection and analysis, decision to publish, or preparation of the manuscript.

Competing interests: The authors have declared that no competing interests exist.

1 Introduction

Data-driven variable selection is frequently performed when modeling the associations between an outcome and multiple independent variables (sometimes also referred to as explanatory variables, covariates or predictors). Variable selection may help to generate parsimonious and interpretable models, and may also yield models with increased predictive accuracy. Despite these potential advantages, data-driven variable selection can also have unintended negative consequences that many researchers are not fully aware of. Variable selection induces additional uncertainty in the estimation process and may cause biased estimation of regression coefficients, model instability (i.e., models that are not robust with respect to small perturbations of the data set), and issues with post-selection inference such as underestimated standard errors and invalid confidence intervals [ 1 – 5 ].

A recent review [ 1 ] provided guidance about variable selection and gave an overview of possible consequences of variable selection. However, there are few systematic simulation studies that compare different variable selection methods with respect to their consequences for the resulting models (for some exceptions, see [ 6 – 10 ]). While many articles proposing new variable selection methods include a comparison with existing methods (based on simulated or real data), these comparisons are typically somewhat limited, often comparing the new method to only one to three competitors, even though there are many more existing methods. Moreover, these articles are inherently biased towards demonstrating superiority of the new methods. In particular, such studies cannot be considered as neutral. A neutral comparison study is a study whose authors do not have a vested interest in one of the competing methods, and are (as a group) approximately equally familiar with all considered methods [ 11 , 12 ]. More neutral comparison studies about existing variable selection methods are needed to better understand their properties, a viewpoint that aligns with the goals of the STRATOS initiative (STRengthening Analytical Thinking for Observational Studies [ 13 ]). The STRATOS initiative is an international consortium of biostatistical experts, and aims to provide guidance in the design and analysis of observational studies for specialist and non-specialist audiences. This perspective motivates our comprehensive simulation study.

We will focus on descriptive modeling (i.e., describing the relationship between the outcome and the independent variables in a parsimonious manner) and predictive modeling (i.e., predicting the outcome as accurately as possible) [ 14 ]. Our setting is multivariable regression analysis with one outcome variable. The outcome is either continuous (linear regression) or binary (logistic regression). We simulate data in a low-dimensional scenario (20 variables consisting of 10 true predictors and 10 noise variables). Different variable selection methods with multiple parameter settings are compared: forward selection, stepwise forward selection, backward elimination, augmented backward elimination [ 15 ], univariable selection, univariable selection followed by backward elimination, the Lasso [ 16 ], the relaxed Lasso [ 9 , 17 ], and the adaptive Lasso [ 18 ]. We compare the performances of these methods with respect to false inclusion and/or exclusion of variables, consequences on bias and variance of the estimated regression coefficients, the validity of the confidence intervals for the coefficients, the accuracy of the estimated variable importance ranking, and finally the predictive performance of the selected models.
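To make the setting concrete, the following is a minimal sketch of one simulation replicate with 10 true predictors and 10 noise variables, followed by backward elimination and a count of false inclusions and exclusions. Several choices here are assumptions made purely for illustration and are not taken from the protocol: independent standard normal predictors (the protocol bases distributions and correlations on NHANES), a common true coefficient of 0.5, a sample size of 500, and AIC as the elimination criterion.

```python
import numpy as np

def aic_linear(X, y):
    """AIC (up to an additive constant) of an OLS fit with intercept."""
    n = len(y)
    Xc = np.column_stack([np.ones(n), X])
    beta, *_ = np.linalg.lstsq(Xc, y, rcond=None)
    rss = np.sum((y - Xc @ beta) ** 2)
    return n * np.log(rss / n) + 2 * Xc.shape[1]

def backward_elimination(X, y):
    """Drop one variable at a time as long as doing so lowers the AIC."""
    selected = list(range(X.shape[1]))
    best = aic_linear(X[:, selected], y)
    while selected:
        # AIC of every candidate model with exactly one variable removed
        scores = [
            (aic_linear(X[:, [v for v in selected if v != d]], y), d)
            for d in selected
        ]
        cand_aic, drop = min(scores)
        if cand_aic >= best:
            break  # no removal improves the criterion
        selected.remove(drop)
        best = cand_aic
    return selected

# One hypothetical replicate: columns 0-9 are true predictors, 10-19 are noise
rng = np.random.default_rng(1)
n, n_true, n_noise = 500, 10, 10
X = rng.standard_normal((n, n_true + n_noise))
beta = np.concatenate([np.full(n_true, 0.5), np.zeros(n_noise)])
y = X @ beta + rng.standard_normal(n)

selected = backward_elimination(X, y)
false_inclusions = sum(v >= n_true for v in selected)
false_exclusions = sum(v not in selected for v in range(n_true))
print(selected, false_inclusions, false_exclusions)
```

A full simulation would repeat this over many replicates and scenarios and would also track the other performance measures listed above, such as coefficient bias and confidence interval coverage.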

Using simulated instead of real data allows us to a) know the true data generating process and b) systematically vary several data characteristics [ 19 , 20 ]. For example, we will include varying sample sizes and \(R^2\), as the consequences of variable selection depend on these parameters. To ensure that the simulation results are practically relevant, we use real data as the starting point for our simulation. The distributions and correlation structure of the variables are based on data from the National Health and Nutrition Examination Survey (NHANES) [ 21 ]. The choice of variables and true regression coefficients is inspired by an applied study about predicting the difference between ambulatory/home and clinic blood pressure readings [ 22 ]. Our simulated data thus mimics real cardiovascular data.
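One common way to vary the population \(R^2\) in a linear-regression simulation is to fix the regression coefficients and choose the residual standard deviation so that \(R^2 = \mathrm{Var}(X\beta) / (\mathrm{Var}(X\beta) + \sigma^2)\), i.e. \(\sigma^2 = \mathrm{Var}(X\beta)(1 - R^2)/R^2\). The sketch below illustrates this; it is not necessarily how the protocol sets the residual variance, and the function name, coefficients, and predictors are hypothetical.

```python
import numpy as np

def residual_sd_for_target_r2(linear_predictor, r2):
    """Residual SD that yields the target R^2 for a given linear predictor."""
    return float(np.sqrt(np.var(linear_predictor) * (1.0 - r2) / r2))

rng = np.random.default_rng(42)
n = 100_000
X = rng.standard_normal((n, 3))          # hypothetical predictors
beta = np.array([1.0, -0.5, 0.25])       # hypothetical true coefficients
lp = X @ beta

target_r2 = 0.3
sd = residual_sd_for_target_r2(lp, target_r2)
y = lp + rng.normal(0.0, sd, size=n)

# Share of outcome variance explained by the true linear predictor
achieved_r2 = 1.0 - np.var(y - lp) / np.var(y)
print(round(sd, 3), round(achieved_r2, 3))
```

With a large sample the achieved value should sit close to the target, which makes it easy to check a scenario definition before running the full simulation.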

Our focus is on low-dimensional data, which is reflected in our simulation setting with twenty independent variables. Data of this type frequently appears in medicine and other application fields, and researchers often apply variable selection in this context. For example, a systematic review of models for COVID-19 prognosis [ 23 , 24 ] identified 236 newly developed regression models for prediction. Data-driven variable selection was applied (and reported) for 196 models. In 165 models both the number of candidate predictors (i.e., the predictors considered at the start of data-driven selection) and the number of predictors in the final model were reported; the median numbers were 28 (range 4–130), and 6 (range 1–38), respectively. This demonstrates that low- to medium-dimensional data played an important role in COVID-19 prediction research. Of course, data-driven variable selection is also relevant for high-dimensional data. Comparing variable selection methods for high-dimensional data would require a different study design and is not the purpose of this planned simulation study.

As mentioned above, neutrality is an important goal when conducting systematic comparison studies. “Perfect” neutrality may be the ultimate goal, but this ideal can be difficult to achieve in practice. While we aim to be as neutral as possible, we disclose (for the purpose of full transparency) that one of the methods for variable selection included in our comparison, namely augmented backwards elimination, was originally proposed by two authors of the present study protocol [ 15 ]. Our goal was to not let this fact influence our choice of study design, though unconscious biases can never be fully excluded. Striving for as much neutrality as possible motivated us to publish this study protocol. This will allow us to integrate the comments of reviewers before performing the simulation. For the design of our study, results from previous smaller simulation studies and pilot studies were taken into account [ 1 ]; however, the study outlined in this protocol has not yet been run and analyzed. Preregistration of study protocols for simulation studies/methodological studies is still very rare (for an exception, see [ 25 ]). However, this practice could offer similar advantages to those discussed for preregistration in applied research, such as increased transparency and prevention of “hindsight bias” [ 26 ]. Potential advantages of preregistering protocols for simulation studies, but also possible limitations and challenges, are discussed more extensively elsewhere [ 27 ].

A specific goal of our simulation study is to evaluate previously published recommendations about variable selection [ 1 ], which we discuss in Section 2. We then describe our simulation design in Section 3, explain the planned code review in Section 4, and conclude the protocol with some final remarks in Section 5.

2 Previous variable selection recommendations