How Does Artificial Intelligence Solve Problems? An In-Depth Look at Problem Solving in AI

What is problem solving in artificial intelligence? It is a complex process of finding solutions to challenging problems using computational algorithms and techniques. Artificial intelligence, or AI, refers to the development of intelligent systems that can perform tasks typically requiring human intelligence.

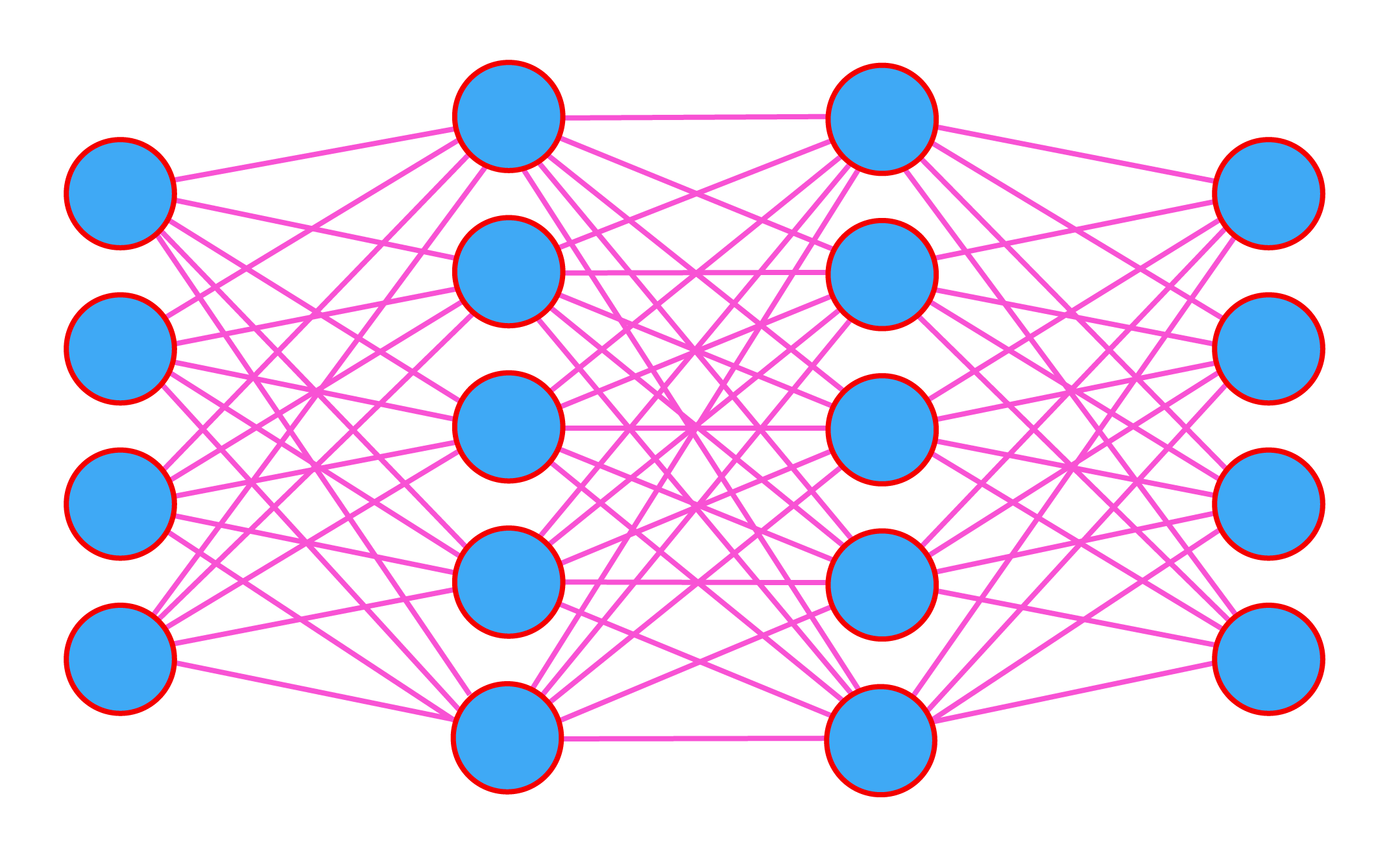

Solving problems in AI involves the use of various algorithms and models that are designed to mimic human cognitive processes. These algorithms analyze and interpret data, generate possible solutions, and evaluate the best course of action. Through machine learning and deep learning, AI systems can continuously improve their problem-solving abilities.

Artificial intelligence problem solving is not limited to a specific domain or industry. It can be applied in various fields such as healthcare, finance, manufacturing, and transportation. AI-powered systems can analyze vast amounts of data, identify patterns, and make predictions to solve complex problems efficiently.

Understanding and developing problem-solving capabilities in artificial intelligence is crucial for the advancement of AI technologies. By improving problem-solving algorithms and models, researchers and developers can create more efficient and intelligent AI systems that can address real-world challenges and contribute to technological progress.

What is Artificial Intelligence?

Artificial intelligence (AI) can be defined as the simulation of human intelligence in machines that are programmed to think and learn like humans. It is a branch of computer science that deals with the creation and development of intelligent machines that can perform tasks that normally require human intelligence.

AI is achieved through the use of algorithms and data that allow machines to learn from and adapt to new information. These machines can then use their knowledge and reasoning abilities to solve problems, make decisions, and even perform tasks that were previously thought to require human intelligence.

Types of Artificial Intelligence

There are two main types of AI: narrow or weak AI and general or strong AI.

Narrow AI refers to AI systems that are designed to perform specific tasks, such as language translation, image recognition, or playing chess. These systems are trained to excel in their specific tasks but lack the ability to generalize their knowledge to other domains.

General AI, on the other hand, refers to AI systems that have the ability to understand, learn, and apply knowledge across a wide range of tasks and domains. These systems are capable of reasoning, problem-solving, and adapting to new situations in a way that is similar to human intelligence.

The Role of Problem Solving in Artificial Intelligence

Problem solving is a critical component of artificial intelligence. It involves the ability of AI systems to identify problems, analyze information, and develop solutions to those problems. AI algorithms are designed to imitate human problem-solving techniques, such as searching for solutions, evaluating options, and making decisions based on available information.

AI systems use various problem-solving techniques, including algorithms such as search algorithms, heuristic algorithms, and optimization algorithms, to find the best solution to a given problem. These techniques allow AI systems to solve complex problems efficiently and effectively.

In conclusion, artificial intelligence is the field of study that focuses on creating intelligent machines that can perform tasks that normally require human intelligence. Problem-solving is a fundamental aspect of AI and involves the use of algorithms and data to analyze information and develop solutions. AI has the potential to revolutionize many aspects of our lives, from healthcare and transportation to business and entertainment.

Problem solving is a critical component of artificial intelligence (AI). AI systems are designed to solve complex, real-world problems by employing various problem-solving techniques and algorithms.

One of the main goals of AI is to create intelligent systems that can solve problems in a way that mimics human problem-solving abilities. This involves using algorithms to search through a vast amount of data and information to find the best available solution.

Problem solving in AI involves breaking down a problem into smaller, more manageable sub-problems. These sub-problems are then solved individually and combined to solve the larger problem at hand. This approach allows AI systems to tackle complex problems that would be impractical for a human to solve manually.

AI problem-solving techniques can be classified into two main categories: algorithmic problem-solving and heuristic problem-solving. Algorithmic problem-solving involves using predefined rules and algorithms to solve a problem. These algorithms are based on logical reasoning and can be programmed into AI systems to provide step-by-step instructions for solving a problem.

Heuristic problem-solving, on the other hand, involves using heuristics or rules of thumb to guide the problem-solving process. Heuristics are not guaranteed to find the optimal solution, but they can provide a good enough solution in a reasonable amount of time.

Problem solving in AI is not limited to just finding a single solution to a problem. AI systems can also generate multiple solutions and evaluate them based on predefined criteria. This allows AI systems to explore different possibilities and find the best solution among them.

In conclusion, problem solving is a fundamental aspect of artificial intelligence. AI systems use problem-solving techniques and algorithms to tackle complex real-world problems. Through algorithmic and heuristic problem solving, AI systems can find optimal or good-enough solutions and generate multiple solutions for evaluation. As AI continues to advance, problem-solving abilities will play an increasingly important role in the development of intelligent systems.

Problem Solving Approaches in Artificial Intelligence

In the field of artificial intelligence, problem solving is a fundamental aspect. Artificial intelligence (AI) is the intelligence exhibited by machines or computer systems. It aims to mimic human intelligence in solving complex problems that require reasoning and decision-making.

What is problem solving?

Problem solving refers to the cognitive process of finding solutions to difficult or complex issues. It involves identifying the problem, gathering relevant information, analyzing possible solutions, and selecting the most effective one. Problem solving is an essential skill for both humans and AI systems to achieve desired goals.

Approaches in problem solving in AI

Artificial intelligence employs various approaches to problem solving. Some of the commonly used approaches are:

- Search algorithms: These algorithms explore a problem space to find a solution. They can use different search strategies such as depth-first search, breadth-first search, and heuristic search.

- Knowledge-based systems: These systems store and utilize knowledge to solve problems. They rely on rules, facts, and heuristics to guide their problem-solving process.

- Logic-based reasoning: This approach uses logical reasoning to solve problems. It involves representing the problem as a logical formula and applying deduction rules to reach a solution.

- Machine learning: Machine learning algorithms enable AI systems to learn from data and improve their problem-solving capabilities. They can analyze patterns, make predictions, and adjust their behavior based on feedback.

Each approach has its strengths and weaknesses, and the choice of approach depends on the problem domain and available resources. By combining these approaches, AI systems can effectively tackle complex problems and provide valuable solutions.

Search Algorithms in Problem Solving

Problem solving is a critical aspect of artificial intelligence, as it involves the ability to find a solution to a given problem or goal. Search algorithms play a crucial role in problem solving by systematically exploring the search space to find an optimal solution.

What is a Problem?

A problem in the context of artificial intelligence refers to a task or challenge that requires a solution. It can be a complex puzzle, a decision-making problem, or any situation that requires finding an optimal solution.

What is an Algorithm?

An algorithm is a step-by-step procedure or set of rules for solving a problem. In the context of search algorithms, it refers to the systematic exploration of the search space, where each step narrows down the possibilities to find an optimal solution.

Search algorithms in problem solving aim to efficiently explore the search space to find a solution. There are several types of search algorithms, each with its own characteristics and trade-offs.

One commonly used search algorithm is the Breadth-First Search (BFS) algorithm. BFS explores the search space by systematically expanding all possible paths from the initial state to find the goal state. It explores the search space in a breadth-first manner, meaning that it visits all nodes at the same depth level before moving to the next level.
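The level-by-level expansion described above can be sketched in a few lines of Python. This is a minimal illustration, not a reference implementation: the `bfs` function and the small `GRAPH` adjacency dictionary are hypothetical names chosen for this example, and the graph is assumed to be unweighted.

```python
from collections import deque


def bfs(graph, start, goal):
    """Return a path with the fewest edges from start to goal, or None."""
    frontier = deque([start])   # FIFO queue: oldest (shallowest) node first
    parent = {start: None}      # doubles as the visited set
    while frontier:
        node = frontier.popleft()
        if node == goal:
            # Walk the parent links back to the start to rebuild the path.
            path = []
            while node is not None:
                path.append(node)
                node = parent[node]
            return path[::-1]
        for neighbor in graph.get(node, []):
            if neighbor not in parent:
                parent[neighbor] = node
                frontier.append(neighbor)
    return None


# Hypothetical example graph (adjacency lists).
GRAPH = {
    "A": ["B", "C"],
    "B": ["D"],
    "C": ["D", "E"],
    "D": ["F"],
    "E": ["F"],
    "F": [],
}

print(bfs(GRAPH, "A", "F"))
```

Because the queue is first-in, first-out, every node at depth k is expanded before any node at depth k + 1, which is why the first path found uses the fewest edges.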

Another popular search algorithm is the Depth-First Search (DFS) algorithm. Unlike BFS, DFS explores the search space by diving deep into a path until it reaches a dead-end or the goal state. It explores the search space in a depth-first manner, meaning that it explores the deepest paths first before backtracking.
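A recursive sketch of this dive-then-backtrack behaviour is shown below. The `dfs` function and the `GRAPH` dictionary are illustrative names assumed for this example; the graph is deliberately built so that the first path found is not the shortest one.

```python
def dfs(graph, node, goal, visited=None):
    """Return some path from node to goal found depth-first, or None."""
    if visited is None:
        visited = set()
    visited.add(node)
    if node == goal:
        return [node]
    for neighbor in graph.get(node, []):
        if neighbor not in visited:
            sub_path = dfs(graph, neighbor, goal, visited)
            if sub_path is not None:
                return [node] + sub_path
    # Dead end: returning None makes the caller try its next neighbor.
    return None


# Hypothetical example graph: the short route A -> C -> F exists,
# but DFS follows the first branch (via B) all the way down first.
GRAPH = {
    "A": ["B", "C"],
    "B": ["D"],
    "C": ["F"],
    "D": ["E"],
    "E": ["F"],
    "F": [],
}

print(dfs(GRAPH, "A", "F"))
```

The path returned here has four edges even though a two-edge path exists, which illustrates that plain depth-first search finds a solution but not necessarily the shortest one.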

Other search algorithms include the A* algorithm, which orders its search by the path cost accumulated so far plus a heuristic estimate of the remaining cost to the goal; the Greedy Best-First Search, which prioritizes paths based on the heuristic evaluation alone; and the Hill Climbing algorithm, which iteratively improves the current solution by making small changes.
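As an illustration of A*, the sketch below searches a small 4-connected grid where every move costs 1. The grid layout, the function names `a_star` and `manhattan`, and the choice of Manhattan distance as the heuristic are all assumptions made for this example. Manhattan distance never overestimates the remaining cost on such a grid, which is the condition under which A* returns a shortest path.

```python
import heapq


def manhattan(a, b):
    """Heuristic: grid distance ignoring walls (never overestimates)."""
    return abs(a[0] - b[0]) + abs(a[1] - b[1])


def a_star(grid, start, goal):
    """Return the length of a shortest path on the grid, or None."""
    rows, cols = len(grid), len(grid[0])
    # Heap entries are (f = g + h, g = cost so far, cell).
    frontier = [(manhattan(start, goal), 0, start)]
    best_cost = {start: 0}
    while frontier:
        _, cost, cell = heapq.heappop(frontier)
        if cost > best_cost[cell]:
            continue  # stale entry: a cheaper route to this cell was found
        if cell == goal:
            return cost
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0:
                new_cost = cost + 1
                if new_cost < best_cost.get((nr, nc), float("inf")):
                    best_cost[(nr, nc)] = new_cost
                    priority = new_cost + manhattan((nr, nc), goal)
                    heapq.heappush(frontier, (priority, new_cost, (nr, nc)))
    return None


# Hypothetical grid: 0 is free, 1 is a wall.
GRID = [
    [0, 0, 0, 0],
    [1, 1, 0, 1],
    [0, 0, 0, 0],
    [0, 1, 1, 0],
]

print(a_star(GRID, (0, 0), (3, 3)))
```

Dropping the `new_cost` term from the priority would turn this into Greedy Best-First Search, which is often faster but no longer guarantees a shortest path.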

Search algorithms in problem solving are essential in the field of artificial intelligence as they enable systems to find solutions efficiently and, with an appropriate algorithm, optimal ones. By understanding and implementing different search algorithms, developers and researchers can design intelligent systems capable of solving complex problems.

| Search Algorithm | Description |
|---|---|
| Breadth-First Search (BFS) | Explores all possible paths at the same depth level before moving to the next level |
| Depth-First Search (DFS) | Explores a path until it reaches a dead-end or the goal state, then backtracks |
| A* Algorithm | Orders the search by path cost so far plus a heuristic estimate of the remaining cost |
| Greedy Best-First Search | Prioritizes paths based on a heuristic evaluation |
| Hill Climbing | Iteratively improves the current solution by making small changes |

Heuristic Functions in Problem Solving

In the field of artificial intelligence, problem-solving is a crucial aspect of creating intelligent systems. One key component in problem-solving is the use of heuristic functions.

A heuristic function is a function that guides an intelligent system in making decisions about how to solve a problem. At any given point in the problem-solving process, it uses the available information to estimate how promising a candidate is, for example how far a given state is from a solution.

What is a Heuristic Function?

A heuristic function is designed to provide a quick, yet informed, estimate of the most promising solution out of a set of possible solutions. It helps the intelligent system prioritize its search and focus on the most likely path to success.

Heuristic functions are especially useful in problems that have a large number of possible solutions and where an exhaustive search through all possibilities would be impractical or inefficient.

How Does a Heuristic Function Work?

Heuristic functions take into account various factors and considerations that are relevant to the problem being solved. These factors could include knowledge about the problem domain, past experience, or rules and constraints specific to the problem.

The heuristic function assigns a value to each candidate state or solution based on these factors. Depending on the convention, that value is either a score, where higher means more promising, or an estimated cost to reach the goal, where lower means more promising. The intelligent system then uses this information to guide its search for the best solution.

A good heuristic function strikes a balance between accuracy and efficiency. It should be accurate enough to guide the search towards the best solution but should also be computationally efficient to prevent excessive computation time.
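The trade-off between accuracy and cost can be seen in two classic heuristics for the 8-puzzle, sketched below. The puzzle itself, the tuple encoding of the board (row by row, with 0 as the blank), and the function names are assumptions chosen for illustration. Both functions follow the cost-estimate convention, so lower values mark states that look closer to the goal.

```python
# Goal layout of the 3x3 board, read row by row; 0 is the blank.
GOAL = (1, 2, 3, 4, 5, 6, 7, 8, 0)


def misplaced_tiles(state, goal=GOAL):
    """Cheap heuristic: count tiles that are not in their goal position."""
    return sum(1 for s, g in zip(state, goal) if s != 0 and s != g)


def manhattan_distance(state, goal=GOAL):
    """More informed heuristic: sum of each tile's row and column offsets."""
    total = 0
    for index, tile in enumerate(state):
        if tile == 0:
            continue
        goal_index = goal.index(tile)
        total += abs(index // 3 - goal_index // 3)
        total += abs(index % 3 - goal_index % 3)
    return total


# Hypothetical state: tiles 1 and 8 have swapped places.
state = (8, 2, 3, 4, 5, 6, 7, 1, 0)
print(misplaced_tiles(state), manhattan_distance(state))
```

For this state the first heuristic only notices that two tiles are out of place, while the second also accounts for how far each one has to travel, so it gives the search a sharper estimate at the price of slightly more computation.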

| Advantages of Heuristic Functions | Limitations of Heuristic Functions |
|---|---|
| 1. Speeds up the problem-solving process | 1. May lead to suboptimal solutions in certain cases |
| 2. Reduces the search space | 2. Relies on available information, which may be incomplete or inaccurate |
| 3. Allows for efficient exploration of the solution space | 3. Requires careful design and calibration for optimal performance |

Overall, heuristic functions play a crucial role in problem-solving in artificial intelligence. They provide a way for intelligent systems to efficiently navigate complex problem domains and find near-optimal solutions.

Constraint Satisfaction in Problem Solving

Problem solving is a key component of artificial intelligence, as it involves using computational methods to find solutions to complex issues. However, understanding how to solve these problems efficiently is essential for developing effective AI systems. And this is where constraint satisfaction comes into play.

Constraint satisfaction is a technique used in problem solving to ensure that all solution candidates satisfy a set of predefined constraints. These constraints can be thought of as rules or conditions that must be met for a solution to be considered valid.

So, what is a constraint? A constraint is a limitation or restriction on the values that variables can take. For example, in a scheduling problem, constraints can include time availability, resource limitations, or precedence relationships between tasks.

The goal of constraint satisfaction in problem-solving is to find a solution that satisfies all the given constraints. This is achieved by exploring the space of possible solutions and eliminating those that violate the constraints.

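Constraint satisfaction problems (CSPs) can be solved using various algorithms, such as backtracking search, often combined with constraint propagation. Backtracking assigns values to variables one at a time and checks whether the constraints are still satisfied. If a constraint is violated, the algorithm backtracks and tries a different value for the most recently assigned variable. Constraint propagation complements this by removing values from the domains of unassigned variables that can no longer be part of any valid solution.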
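A minimal backtracking solver for a map-coloring problem is sketched below. The region names, the `solve_csp` function, and the single constraint type (neighboring regions must receive different colors) are assumptions made for this example. The sketch performs plain backtracking only and does not include constraint propagation.

```python
def solve_csp(variables, domains, neighbors, assignment=None):
    """Assign a value to every variable so that neighbors differ, or return None."""
    if assignment is None:
        assignment = {}
    if len(assignment) == len(variables):
        return assignment
    # Pick the next unassigned variable in the given order.
    var = next(v for v in variables if v not in assignment)
    for value in domains[var]:
        # Constraint check: no already-assigned neighbor has this value.
        if all(assignment.get(n) != value for n in neighbors[var]):
            assignment[var] = value
            result = solve_csp(variables, domains, neighbors, assignment)
            if result is not None:
                return result
            del assignment[var]  # backtrack and try the next value
    return None


# Hypothetical map with four regions.
VARIABLES = ["North", "East", "South", "West"]
DOMAINS = {v: ["red", "green", "blue"] for v in VARIABLES}
NEIGHBORS = {
    "North": ["East", "West"],
    "East": ["North", "South", "West"],
    "South": ["East", "West"],
    "West": ["North", "East", "South"],
}

print(solve_csp(VARIABLES, DOMAINS, NEIGHBORS))
```

When no value fits the current variable, the function returns `None` to its caller, which then undoes its own choice. That is the backtracking step described above.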

One advantage of using constraint satisfaction in problem solving is that it provides a systematic way to represent and solve problems with complex constraints. By breaking down the problem into smaller constraints, it becomes easier to reason about the problem and find a solution.

In conclusion, constraint satisfaction is an important technique in problem solving for artificial intelligence. By defining and enforcing constraints, AI systems can efficiently search for valid solutions. Incorporating constraint satisfaction techniques into AI algorithms can greatly improve problem-solving capabilities and contribute to the development of more intelligent systems.

Genetic Algorithms in Problem Solving

Artificial intelligence (AI) is a branch of computer science that focuses on creating intelligent machines capable of performing tasks that typically require human intelligence. One aspect of AI is problem solving, which involves finding solutions to complex problems. Genetic algorithms are a type of problem-solving method used in artificial intelligence.

So, what are genetic algorithms? In simple terms, genetic algorithms are inspired by the process of natural selection and evolution. They are a type of optimization algorithm that uses concepts from genetics and biology to find the best solution to a problem. Instead of relying on a predefined set of rules or instructions, genetic algorithms work by evolving a population of potential solutions over multiple generations.

The process of genetic algorithms involves several key steps. First, an initial population of potential solutions is generated. Each solution is represented as a set of variables or “genes.” These solutions are then evaluated based on their fitness or how well they solve the problem at hand.

Next, the genetic algorithm applies operators such as selection, crossover, and mutation to the current population. Selection involves choosing the fittest solutions to become the parents for the next generation. Crossover involves combining the genes of two parents to create offspring with a mix of their characteristics. Mutation introduces small random changes in the offspring’s genes to introduce genetic diversity.

The new population is then evaluated, and the process continues until a stopping criterion is met, such as finding a solution that meets a certain fitness threshold or reaching a maximum number of generations. Over time, the population tends to converge towards good solutions, much like how natural selection leads to the evolution of species, although convergence to the best possible solution is not guaranteed.
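The loop of evaluation, selection, crossover, and mutation can be sketched on a toy problem. The example below assumes the "OneMax" task, in which fitness is simply the number of 1 bits in a list of genes. The function names, the parameter defaults, and the decision to carry the best individual over unchanged (elitism) are illustrative choices, not part of any standard API.

```python
import random


def fitness(genes):
    """Toy fitness: the number of 1 bits (the OneMax problem)."""
    return sum(genes)


def evolve(length=20, pop_size=30, generations=40, mutation_rate=0.02, seed=0):
    """Run a simple genetic algorithm; return (best individual, best fitness per generation)."""
    rng = random.Random(seed)
    population = [[rng.randint(0, 1) for _ in range(length)]
                  for _ in range(pop_size)]
    history = []
    for _ in range(generations):
        population.sort(key=fitness, reverse=True)
        history.append(fitness(population[0]))
        if history[-1] == length:       # stopping criterion: perfect score
            break
        parents = population[: pop_size // 2]   # selection: keep the fitter half
        children = [population[0][:]]           # elitism: best survives unchanged
        while len(children) < pop_size:
            mother, father = rng.sample(parents, 2)
            cut = rng.randrange(1, length)      # one-point crossover
            child = mother[:cut] + father[cut:]
            # Mutation: flip each gene with a small probability.
            child = [1 - g if rng.random() < mutation_rate else g for g in child]
            children.append(child)
        population = children
    return max(population, key=fitness), history


best, history = evolve()
print(fitness(best), history)
```

Because the best individual is copied into each new generation, the recorded best fitness never decreases, but nothing in the procedure guarantees that the maximum is reached within the generation limit.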

Genetic algorithms have been successfully applied to a wide range of problem-solving tasks, including optimization, machine learning, and scheduling. They have been used to solve problems in areas such as engineering, finance, and biology. Because they can explore a large solution space and often find near-optimal solutions, genetic algorithms are frequently considered when traditional methods fail or are not feasible.

In conclusion, genetic algorithms are a powerful tool in the field of artificial intelligence and problem solving. By mimicking the process of natural selection and evolution, they provide a way to find good solutions to complex problems. Their ability to explore a wide search space and adapt to changing environments makes them well-suited for a variety of problem-solving tasks. As AI continues to advance, genetic algorithms will likely play an increasingly important role in solving real-world problems.

Logical Reasoning in Problem Solving

Problem solving is a fundamental aspect of artificial intelligence. It involves finding a solution to a given problem by using logical reasoning. Logical reasoning is the process of using valid arguments and deductions to make inferences and arrive at a logical conclusion. In the context of problem solving, logical reasoning is used to analyze the problem, identify potential solutions, and evaluate their feasibility.

Logical reasoning is one of the capabilities that make AI systems useful problem solvers. AI systems can process far larger volumes of data and enumerate far more possibilities than a human problem solver could in the same time. Given a suitable representation of the problem, they can also separate relevant from irrelevant information and use it to make informed decisions.

Types of Logical Reasoning

There are several types of logical reasoning that AI systems employ in problem solving:

- Deductive Reasoning: Deductive reasoning involves drawing specific conclusions from general principles or premises. It uses a top-down approach, starting from general knowledge and applying logical rules to derive specific conclusions.

- Inductive Reasoning: Inductive reasoning involves drawing general conclusions or patterns from specific observations or examples. It uses a bottom-up approach, where specific instances are used to make generalizations.

- Abductive Reasoning: Abductive reasoning involves inferring the most plausible explanation or hypothesis for the available evidence. Unlike deduction, its conclusion is not guaranteed to be true; it is the best available explanation and may be revised when new evidence arrives.
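Of the three types, deductive reasoning is the most straightforward to sketch in code. The example below assumes a tiny rule base of "if all premises hold, then the conclusion holds" rules and applies them repeatedly until nothing new can be derived, a procedure usually called forward chaining. The rule contents and the `forward_chain` name are hypothetical; inductive and abductive reasoning are not covered by this sketch.

```python
def forward_chain(facts, rules):
    """Derive every fact that follows from the given facts and rules."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for premises, conclusion in rules:
            # Fire a rule when all its premises are already known.
            if conclusion not in known and set(premises) <= known:
                known.add(conclusion)
                changed = True
    return known


# Hypothetical rule base: (premises, conclusion) pairs.
RULES = [
    (("has_flu_symptoms",), "should_rest"),
    (("has_fever", "has_cough"), "has_flu_symptoms"),
]

print(sorted(forward_chain({"has_fever", "has_cough"}, RULES)))
```

The second rule fires first and makes the first rule applicable on the next pass, so the specific conclusion `should_rest` is reached purely by applying general rules to known facts.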

Importance of Logical Reasoning in Problem Solving

Logical reasoning is crucial in problem solving as it ensures that the solutions generated by AI systems are sound, valid, and reliable. Without logical reasoning, AI systems may produce incorrect or nonsensical solutions that are of no use in practical applications.

Furthermore, logical reasoning helps AI systems analyze complex problems systematically and break them down into smaller, more manageable sub-problems. By applying logical rules and deductions, AI systems can generate possible solutions, evaluate their feasibility, and select the best one.

In conclusion, logical reasoning plays a vital role in problem solving in artificial intelligence. It enables AI systems to analyze problems, consider multiple possibilities, and arrive at logical conclusions. By employing various types of logical reasoning, AI systems can generate accurate and effective solutions to a wide range of problems.

Planning and Decision Making in Problem Solving

Planning and decision making play crucial roles in the field of artificial intelligence when it comes to problem solving. A fundamental aspect of problem solving is understanding what the problem actually is and how it can be solved.

Planning refers to the process of creating a sequence of actions or steps to achieve a specific goal. In the context of artificial intelligence, planning involves creating a formal representation of the problem and finding a sequence of actions that will lead to a solution. This can be done by using various techniques and algorithms, such as heuristic search or constraint satisfaction.
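To make the idea of a formal representation concrete, the sketch below models the classic two-jug puzzle: a state is the pair of water levels, actions are fill, empty, and pour, and the plan is the sequence of actions that reaches the goal. The puzzle, the jug capacities, and the names `successors` and `plan` are assumptions for this example, and the planner is an uninformed breadth-first search rather than the heuristic search or constraint satisfaction mentioned above.

```python
from collections import deque

CAPACITY = (4, 3)  # hypothetical jug sizes: A holds 4 litres, B holds 3


def successors(state):
    """List (action name, resulting state) pairs for every possible action."""
    a, b = state
    cap_a, cap_b = CAPACITY
    pour_ab = min(a, cap_b - b)   # amount that fits when pouring A into B
    pour_ba = min(b, cap_a - a)   # amount that fits when pouring B into A
    return [
        ("fill A", (cap_a, b)),
        ("fill B", (a, cap_b)),
        ("empty A", (0, b)),
        ("empty B", (a, 0)),
        ("pour A into B", (a - pour_ab, b + pour_ab)),
        ("pour B into A", (a + pour_ba, b - pour_ba)),
    ]


def plan(start, is_goal):
    """Return a shortest list of actions leading from start to a goal state."""
    frontier = deque([start])
    came_from = {start: None}     # state -> (action, previous state)
    while frontier:
        state = frontier.popleft()
        if is_goal(state):
            actions = []
            while came_from[state] is not None:
                action, previous = came_from[state]
                actions.append(action)
                state = previous
            return actions[::-1]
        for action, nxt in successors(state):
            if nxt not in came_from:
                came_from[nxt] = (action, state)
                frontier.append(nxt)
    return None


# Goal: exactly 2 litres in jug A, starting with both jugs empty.
print(plan((0, 0), lambda s: s[0] == 2))
```

Separating the problem description (`successors` and the goal test) from the search procedure (`plan`) means the same planner can be reused for a different problem by swapping in a different successor function.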

Decision making, on the other hand, is the process of selecting the best course of action among several alternatives. In problem solving, decision making is essential at every step, from determining the initial state to selecting the next action to take. Decision making is often based on evaluation and comparison of different options, taking into consideration factors such as feasibility, cost, efficiency, and the desired outcome.

Both planning and decision making are closely intertwined in problem solving. Planning helps in breaking down a problem into smaller, manageable sub-problems and devising a strategy to solve them. Decision making, on the other hand, guides the selection of actions or steps at each stage of the problem-solving process.

In conclusion, planning and decision making are integral components of the problem-solving process in artificial intelligence. Understanding the problem at hand, creating a plan, and making informed decisions are essential for achieving an effective and efficient solution.

Challenges in Problem Solving in Artificial Intelligence

Problem solving is at the core of what artificial intelligence is all about. It involves using intelligent systems to find solutions to complex problems, often with limited information or resources. While artificial intelligence has made great strides in recent years, there are still several challenges that need to be overcome in order to improve problem solving capabilities.

Limited Data and Information

One of the main challenges in problem solving in artificial intelligence is the availability of limited data and information. Many problems require a large amount of data to be effective, but gathering and organizing that data can be time-consuming and difficult. Additionally, there may be cases where the necessary data simply doesn’t exist, making it even more challenging to find a solution.

Complexity and Uncertainty

Another challenge is the complexity and uncertainty of many real-world problems. Artificial intelligence systems need to be able to handle ambiguous, incomplete, or contradictory information in order to find appropriate solutions. This requires advanced algorithms and models that can handle uncertainty and make decisions based on probabilistic reasoning.

Intelligent Decision-Making

In problem solving, artificial intelligence systems need to be able to make intelligent decisions based on the available information. This involves understanding the problem at hand, identifying potential solutions, and evaluating the best course of action. Intelligent decision-making requires not only advanced algorithms but also the ability to learn from past experiences and adapt to new situations.

In conclusion, problem solving in artificial intelligence is a complex and challenging task. Limited data and information, complexity and uncertainty, and the need for intelligent decision-making are just a few of the challenges that need to be addressed. However, with continued research and advancement in the field, it is hoped that these challenges can be overcome, leading to even more effective problem solving in artificial intelligence.

Complexity of Problems

Artificial intelligence (AI) is transforming many aspects of our lives, including problem solving. But what exactly is the complexity of the problems that AI is capable of solving?

The complexity of a problem refers to the level of difficulty involved in finding a solution. In the context of AI, it often refers to the computational complexity of solving a problem using algorithms.

AI is known for its ability to handle complex problems that would be difficult or time-consuming for humans to solve. This is because AI can process and analyze large amounts of data quickly, allowing it to explore different possibilities and find optimal solutions.

One of the key factors that determines the complexity of a problem is the size of the problem space. The problem space refers to the set of all possible states or configurations of a problem. The larger the problem space, the more complex the problem is.

Another factor that influences the complexity of a problem is the nature of the problem itself. Some problems are inherently more difficult to solve than others. For example, problems that involve combinatorial optimization or probabilistic reasoning are often more complex.

Furthermore, the complexity of a problem can also depend on the available resources and the algorithms used to solve it. Certain problems may require significant computational power or specialized algorithms to find optimal solutions.

In conclusion, the complexity of problems that AI is capable of solving is determined by various factors, including the size of the problem space, the nature of the problem, and the available resources. AI’s ability to handle complex problems is one of the key reasons why it is transforming many industries and becoming an essential tool in problem solving.

Incomplete or Uncertain Information

One of the challenges in problem solving in artificial intelligence is dealing with incomplete or uncertain information. In many real-world scenarios, AI systems have to make decisions based on incomplete or uncertain knowledge. This can happen due to various reasons, such as missing data, conflicting information, or uncertain predictions.

When faced with incomplete information, AI systems need to rely on techniques that can handle uncertainty. One such technique is probabilistic reasoning, which allows AI systems to assign probabilities to different possible outcomes and make decisions based on these probabilities. By using probabilistic models, AI systems can estimate the most likely outcomes and use this information to guide problem-solving processes.

In addition to probabilistic reasoning, AI systems can also utilize techniques like fuzzy logic and Bayesian networks to handle incomplete or uncertain information. Fuzzy logic allows for the representation and manipulation of uncertain or vague concepts, while Bayesian networks provide a graphical representation of uncertain relationships between variables.
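A small worked example of probabilistic reasoning is an update with Bayes' rule. The sketch below assumes a hypothetical diagnostic test; the numbers (a 1% prior, a 90% true-positive rate, and a 5% false-positive rate) are made up for illustration, as is the `posterior` function name.

```python
def posterior(prior, true_positive_rate, false_positive_rate):
    """Bayes' rule: probability the hypothesis is true given a positive observation."""
    # Total probability of seeing a positive observation at all.
    evidence = true_positive_rate * prior + false_positive_rate * (1 - prior)
    return true_positive_rate * prior / evidence


# Hypothetical numbers: the condition is rare, the test is fairly reliable.
p = posterior(prior=0.01, true_positive_rate=0.9, false_positive_rate=0.05)
print(round(p, 3))
```

Even with a fairly reliable test, the updated probability stays at roughly 15% because the condition is rare to begin with. This is the kind of conclusion a system reaches by weighing uncertain evidence instead of treating an observation as certain.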

Overall, dealing with incomplete or uncertain information is an important aspect of problem solving in artificial intelligence. AI systems need to be equipped with techniques and models that can handle uncertainty and make informed decisions based on incomplete or uncertain knowledge. By incorporating these techniques, AI systems can overcome limitations caused by incomplete or uncertain information and improve problem-solving capabilities.

Dynamic Environments

In the field of artificial intelligence, problem solving is a fundamental task. However, in order to solve a problem, it is important to understand what the problem is and what intelligence is required to solve it.

What is a problem?

A problem can be defined as a situation in which an individual or system faces a challenge and needs to find a solution. Problems can vary in complexity and can be static or dynamic in nature.

What is dynamic intelligence?

Dynamic intelligence refers to the ability of an individual or system to adapt and respond to changing environments or situations. In the context of problem solving in artificial intelligence, dynamic environments play a crucial role.

In dynamic environments, the problem or the conditions surrounding the problem can change over time. This requires the problem-solving system to be able to adjust its approach or strategy in order to find a solution.

Dynamic environments can be found in various domains, such as robotics, autonomous vehicles, and game playing. For example, in a game, the game board or the opponent’s moves can change, requiring the player to adapt their strategy.

To solve problems in dynamic environments, artificial intelligence systems need to possess the ability to perceive changes, learn from past experiences, and make decisions based on the current state of the environment.

In conclusion, understanding dynamic environments is essential for problem solving in artificial intelligence. By studying how intelligence can adapt and respond to changing conditions, researchers can develop more efficient and effective problem-solving algorithms.

Optimization vs. Satisficing

In the field of artificial intelligence and problem solving, there are two main approaches: optimization and satisficing. These approaches differ in their goals and strategies for finding solutions to problems.

What is optimization?

Optimization is the process of finding the best solution to a problem, typically defined as maximizing or minimizing a certain objective function. In the context of artificial intelligence, this often involves finding the optimal values for a set of variables that satisfy a given set of constraints. The goal is to find the solution that maximizes or minimizes the objective function while satisfying all the constraints. Optimization algorithms, such as gradient descent or genetic algorithms, are often used to search for the best solution.

What is satisficing?

Satisficing, on the other hand, focuses on finding solutions that are good enough to meet a certain set of criteria or requirements. The goal is not to find the absolute best solution, but rather to find a solution that satisfies a sufficient level of performance. Satisficing algorithms often trade off between the quality of the solution and the computational resources required to find it. These algorithms aim to find a solution that meets the requirements while minimizing the computational effort.

Both optimization and satisficing have their advantages and disadvantages. Optimization is typically used when the problem has a clear objective function and the goal is to find the best possible solution. However, it can be computationally expensive and time-consuming, especially for complex problems. Satisficing, on the other hand, is often used when the problem is ill-defined or there are multiple conflicting objectives. It allows for faster and less resource-intensive solutions, but the quality of the solution may be compromised to some extent.
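The difference between the two strategies can be sketched with a list of candidate routes and a scoring function. Everything in this example is hypothetical: the route names, the travel times, and the "36 minutes is good enough" threshold. Both functions also return how many candidates they evaluated, to make the computational trade-off visible.

```python
def optimize(candidates, score):
    """Evaluate every candidate; return (best candidate, evaluations used)."""
    best, best_score, evaluations = None, float("-inf"), 0
    for candidate in candidates:
        evaluations += 1
        current = score(candidate)
        if current > best_score:
            best, best_score = candidate, current
    return best, evaluations


def satisfice(candidates, score, threshold):
    """Stop at the first candidate that is good enough; return (candidate, evaluations used)."""
    evaluations = 0
    for candidate in candidates:
        evaluations += 1
        if score(candidate) >= threshold:
            return candidate, evaluations
    return None, evaluations


# Hypothetical routes with travel times in minutes; shorter is better.
ROUTES = [("highway", 42), ("main_street", 35), ("back_roads", 31), ("toll_road", 28)]


def route_score(route):
    return -route[1]  # negate so that a higher score means a shorter trip


print(optimize(ROUTES, route_score))
print(satisfice(ROUTES, route_score, threshold=-36))
```

The optimizer inspects all four routes to find the fastest one, while the satisficer stops after two evaluations with a route that meets the requirement but is seven minutes slower.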

In conclusion, the choice between optimization and satisficing depends on the specific problem at hand and the trade-offs between the desired solution quality and computational resources. Understanding these approaches can help in developing effective problem-solving strategies in the field of artificial intelligence.

Ethical Considerations in Problem Solving

Intelligence is the ability to understand and learn from experiences, solve problems, and adapt to new situations. Artificial intelligence (AI) is a field that aims to develop machines and algorithms that possess these abilities. Problem solving is a fundamental aspect of intelligence, as it involves finding solutions to challenges and achieving desired outcomes.

The Role of Ethics

However, it is essential to consider the ethical implications of problem solving in the context of AI. What is considered a suitable solution for a problem and how it is obtained can have significant ethical consequences. AI systems and algorithms should be designed in a way that promotes fairness, transparency, and accountability.

Fairness: AI systems should not discriminate against any individuals or groups based on characteristics such as race, gender, or religion. The solutions generated should be fair and unbiased, taking into account diverse perspectives and circumstances.

Transparency: AI algorithms should be transparent in their decision-making process. The steps taken to arrive at a solution should be understandable and explainable, enabling humans to assess the algorithm’s reliability and correctness.

The Impact of AI Problem Solving

Problem solving in AI can have various impacts, both positive and negative, on individuals and society as a whole. AI systems can help address complex problems and make processes more efficient, leading to advancements in fields such as healthcare, transportation, and finance.

On the other hand, there can be ethical concerns regarding the use of AI in problem solving:

– Privacy: AI systems may collect and analyze vast amounts of data, raising concerns about privacy invasion and potential misuse of personal information.

– Job displacement: As AI becomes more capable of problem solving, there is a possibility of job displacement for certain professions. It is crucial to consider the societal impact and explore ways to mitigate the negative effects.

In conclusion, ethical considerations play a vital role in problem solving in artificial intelligence. It is crucial to design AI systems that are fair, transparent, and accountable. Balancing the potential benefits of AI problem solving with its ethical implications is necessary to ensure the responsible and ethical development of AI technologies.

Question-answer:

What is problem solving in artificial intelligence?

Problem solving in artificial intelligence refers to the process of finding solutions to complex problems using computational systems or algorithms. It involves defining and structuring the problem, formulating a plan or strategy to solve it, and executing the plan to reach the desired solution.

What are the steps involved in problem solving in artificial intelligence?

The steps involved in problem solving in artificial intelligence typically include problem formulation, creating a search space, search strategy selection, executing the search, and evaluating the solution. Problem formulation involves defining the problem and its constraints, while creating a search space involves representing all possible states and actions. The search strategy selection determines the approach used to explore the search space, and executing the search involves systematically exploring the space to find a solution. Finally, the solution is evaluated based on predefined criteria.
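These steps can be sketched on a classic toy problem. The example below is an assumption chosen for illustration, not something the article specifies: two jugs with capacities 4 and 3, and the goal of measuring exactly 2 units in the larger jug. The formulation is the start state, the goal test, and the `successors` function; the search strategy is breadth-first search; the evaluation is simply the number of actions in the returned path.

```python
from collections import deque

CAPACITIES = (4, 3)  # hypothetical jug sizes
START = (0, 0)       # both jugs empty


def is_goal(state):
    """Goal test: the larger jug holds exactly 2 units."""
    return state[0] == 2


def successors(state):
    """All states reachable in one action: fill, empty, or pour."""
    a, b = state
    cap_a, cap_b = CAPACITIES
    pour_ab = min(a, cap_b - b)  # amount that can move from jug A to jug B
    pour_ba = min(b, cap_a - a)  # amount that can move from jug B to jug A
    return {
        (cap_a, b), (a, cap_b),      # fill A, fill B
        (0, b), (a, 0),              # empty A, empty B
        (a - pour_ab, b + pour_ab),  # pour A into B
        (a + pour_ba, b - pour_ba),  # pour B into A
    } - {state}


def breadth_first_search(start):
    """Explore the state space level by level; return a shortest path."""
    frontier = deque([start])
    parent = {start: None}
    while frontier:
        state = frontier.popleft()
        if is_goal(state):
            path = []
            while state is not None:
                path.append(state)
                state = parent[state]
            return path[::-1]
        for nxt in successors(state):
            if nxt not in parent:
                parent[nxt] = state
                frontier.append(nxt)
    return None


path = breadth_first_search(START)
print(len(path) - 1, "actions:", path)
```

Because breadth-first search expands states in order of depth, the path it returns uses the fewest actions, although several equally short paths may exist.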

What are some common techniques used for problem solving in artificial intelligence?

There are several common techniques used for problem solving in artificial intelligence, including uninformed search algorithms (such as breadth-first search and depth-first search), heuristic search algorithms (such as A* search), constraint satisfaction algorithms, and machine learning algorithms. Each technique has its own advantages and is suited for different types of problems.
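As a rough illustration of heuristic search, here is a minimal A* sketch on a small grid. The grid layout, the unit step cost, and the choice of Manhattan distance as the heuristic are all assumptions made for this example.

```python
import heapq

# Hypothetical map: S = start, G = goal, # = wall, . = free cell.
GRID = [
    "S.#.",
    "..#.",
    "...G",
]


def a_star(grid):
    """Return the length of a shortest 4-connected path from S to G, or None."""
    rows, cols = len(grid), len(grid[0])

    def find(ch):
        return next((r, c) for r in range(rows) for c in range(cols)
                    if grid[r][c] == ch)

    start, goal = find("S"), find("G")

    def heuristic(cell):
        # Manhattan distance never overestimates on a unit-cost grid.
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    frontier = [(heuristic(start), 0, start)]  # (estimated total, cost so far, cell)
    best_cost = {start: 0}
    while frontier:
        _, cost, cell = heapq.heappop(frontier)
        if cell == goal:
            return cost
        if cost > best_cost[cell]:
            continue  # stale queue entry; a cheaper route was found later
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] != "#":
                new_cost = cost + 1
                if new_cost < best_cost.get((nr, nc), float("inf")):
                    best_cost[(nr, nc)] = new_cost
                    heapq.heappush(
                        frontier,
                        (new_cost + heuristic((nr, nc)), new_cost, (nr, nc)),
                    )
    return None


print(a_star(GRID))
```

Setting the heuristic to zero would turn this into uniform-cost search, which finds the same path length but typically expands more cells.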

Can problem solving in artificial intelligence be applied to real-world problems?

Yes, problem solving in artificial intelligence can be applied to real-world problems. It has been successfully used in various domains, such as robotics, healthcare, finance, and transportation. By leveraging computational power and advanced algorithms, artificial intelligence can provide efficient and effective solutions to complex problems.

What are the limitations of problem solving in artificial intelligence?

Problem solving in artificial intelligence has certain limitations. It heavily relies on the quality of input data and the accuracy of algorithms. In cases where the problem space is vast and complex, finding an optimal solution may be computationally expensive or even infeasible. Additionally, problem solving in artificial intelligence may not always capture human-like reasoning and may lack common sense knowledge, which can limit its ability to solve certain types of problems.

Problem solving in artificial intelligence is the process of finding solutions to complex problems using computer algorithms. It involves using various techniques and methods to analyze a problem, break it down into smaller sub-problems, and then develop a step-by-step approach to solving it.

How does artificial intelligence solve problems?

Artificial intelligence solves problems by employing different algorithms and approaches. These include search algorithms, heuristic methods, constraint satisfaction techniques, genetic algorithms, and machine learning. The choice of the specific algorithms depends on the nature of the problem and the available data.

What are the steps involved in problem solving using artificial intelligence?

The steps involved in problem solving using artificial intelligence typically include problem analysis, formulation, search or exploration of possible solutions, evaluation of the solutions, and finally, selecting the best solution. These steps may be repeated iteratively until a satisfactory solution is found.

What are some real-life applications of problem solving in artificial intelligence?

Problem solving in artificial intelligence has various real-life applications. It is used in areas such as robotics, natural language processing, computer vision, data analysis, expert systems, and autonomous vehicles. For example, self-driving cars use problem-solving techniques to navigate and make decisions on the road.


Problem-solving is the process of achieving an objective or resolving a particular situation. In computer science, the term refers to artificial intelligence methods that may include formulating the problem appropriately, applying algorithms, and conducting root-cause analyses to identify reasonable solutions. Problem-solving in AI often involves investigating potential solutions through reasoning techniques, making use of polynomial and differential equations, and carrying them out with modelling frameworks. The same problem can have several solutions, each reached by a different algorithm, while some problems have a unique solution. Everything depends on how the particular situation is framed.

Programmers around the world use artificial intelligence to automate systems and to manage both resources and time effectively. Games and puzzles are among the most common problems, and AI algorithms can tackle them effectively. Various problem-solving methods are used to solve complex puzzles, including mathematical challenges such as crypto-arithmetic and magic squares, logical puzzles such as Boolean formulae and N-Queens, and well-known games such as Sudoku and Chess. These are among the most common problems that artificial intelligence has been used to solve.

Five main types of artificial intelligence agents are in use today, distinguished by their degree of perceived intelligence. These agents make it easier to map states to actions. They frequently make mistakes when moving on to the next phase of a complicated problem, hence the need for standardized problem-solving criteria in such cases. Agents that employ artificial intelligence can tackle problems using methods such as B-trees and heuristic algorithms.

The effectiveness of its approaches makes artificial intelligence useful for resolving complicated problems. The fundamental problem-solving methods used in AI are described below.

The heuristic approach relies on experimentation and trial-and-error procedures to understand a problem and create a solution. Heuristics do not always provide the optimal answer to a particular problem, but they do offer an effective means of achieving short-term objectives. Developers therefore turn to them when conventional techniques cannot solve the problem efficiently. Because heuristics offer quick alternatives at the cost of precision, they are combined with optimization algorithms to improve efficiency.

Searching is one of the fundamental ways in which AI solves problems. Rational agents, or problem-solving agents, use searching algorithms to select the most appropriate answers. These agents use atomic representations and are typically goal-based. Depending on the quality of the solutions they produce, searching algorithms are characterised by completeness, optimality, time complexity, and space complexity.

Evolutionary computation draws on the well-established theory of evolution, which rests on the idea of "survival of the fittest". According to this theory, when a creature successfully reproduces in a tough or changing environment, its coping mechanisms are passed down to later generations, eventually giving rise to new species. These mutated animals are not mere clones of their ancestors; they combine several traits suited to that harsh environment. The most notable example is humanity, which developed through the accumulation of advantageous mutations over countless generations.

Genetic algorithms are based on this evolutionary theory. These programs employ a technique called directed random search. Developers first gather a population of candidate solutions and assess each individual, calculating a fitness value according to how well it matches the intended requirement. The two fittest candidates are then combined to produce a desirable offspring, and a variety of methods are used to retain the best individuals.
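The genetic-algorithm loop described above (assess a population, combine fit parents, retain the best individuals) can be sketched as follows. The bit-string encoding, the "count the ones" fitness function, and every parameter value are assumptions made for this illustration rather than details from the text.

```python
import random


def fitness(bits):
    """Toy fitness: the number of 1s in the bit string (hypothetical objective)."""
    return sum(bits)


def evolve(length=20, pop_size=30, generations=40, mutation_rate=0.02, seed=0):
    """Run a small elitist genetic algorithm; return (best, initial best fitness)."""
    rng = random.Random(seed)
    population = [[rng.randint(0, 1) for _ in range(length)]
                  for _ in range(pop_size)]
    initial_best = max(fitness(ind) for ind in population)

    for _ in range(generations):
        # Assess every individual and retain the fittest fifth unchanged.
        population.sort(key=fitness, reverse=True)
        elite = population[: pop_size // 5]

        children = []
        while len(elite) + len(children) < pop_size:
            mother, father = rng.sample(elite, 2)  # pick two fit parents
            cut = rng.randrange(1, length)         # single-point crossover
            child = mother[:cut] + father[cut:]
            # Flip each bit with a small probability (mutation).
            child = [bit ^ 1 if rng.random() < mutation_rate else bit
                     for bit in child]
            children.append(child)
        population = elite + children

    return max(population, key=fitness), initial_best


best, initial_best = evolve()
print(initial_best, "->", fitness(best))
```

Because the elite individuals are carried over unchanged, the best fitness in the population never decreases from one generation to the next. How close the run gets to the optimum depends on the parameters and the random seed.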


Stanford Online

Artificial Intelligence: Principles and Techniques

Stanford School of Engineering

Artificial Intelligence (AI) applications are embedded in products and services in nearly every industry, from search engines, to speech recognition, medical devices, financial services, and even toys. In this course you will gain a broad understanding of the modern AI landscape.

You will learn how machines can engage in problem solving, reasoning, learning, and interaction, and you’ll apply your knowledge as you design, test, and implement new algorithms. You will gain the confidence and skills to analyze and solve new AI problems you encounter in your career.

- Get a solid understanding of foundational artificial intelligence principles and techniques, such as machine learning, state-based models, variable-based models, and logic.

- Implement search algorithms to find the shortest paths, plan robot motions, and perform machine translation.

- Find optimal policies in uncertain situations using Markov decision processes.

- Design agents and optimize strategies in adversarial games, such as Pac-Man.

- Adapt to preferences and limitations using constraint satisfaction problems (CSPs).

- Predict likelihoods of causes with Bayesian networks.

- Define logic in your algorithms with syntax, semantics, and inference rules.

Core Competencies

- Bayesian Networks

- Constraint Satisfaction Problems

- Graphical Models

- Machine Learning

- Markov Decision Processes

- Planning and Game Playing

What You Need to Get Started

Prior to enrolling in your first course in the AI Professional Program, you must complete a short application (15 min) to demonstrate:

- Proficiency in Python : Coding assignments will be in Python. Some assignments will require familiarity with basic Linux command line workflows.

- College Calculus and Linear Algebra : You should be comfortable taking (multivariable) derivatives and understand matrix/vector notation and operations.

- Probability Theory : You should be familiar with basic probability distributions (Continuous, Gaussian, Bernoulli, etc.) and be able to define concepts for both continuous and discrete random variables: Expectation, independence, probability distribution functions, and cumulative distribution functions.

Groups and Teams

Special Pricing

Have a group of five or more? Enroll as a group and learn together! By participating together, your group will develop a shared knowledge, language, and mindset to tackle challenges ahead. We can advise you on the best options to meet your organization’s training and development goals.

Teaching Team

Percy Liang

Associate Professor Computer Science

Percy Liang is an Associate Professor in the Computer Science department. He works on methods that infer representations of meaning from sentences given limited supervision. What's particularly exciting to him is the interface between rich semantic representations (e.g., programs or logical forms) for capturing deep linguistic phenomena, and probabilistic modeling for allowing these representations to be learned from data. More generally, he is interested in modeling both natural and programming languages, and exploring the semantic and pragmatic connections between the two.

Dorsa Sadigh

Assistant Professor

Computer Science

Dorsa Sadigh is an Assistant Professor in the Computer Science Department and Electrical Engineering Department at Stanford University. Her work is focused on the design of algorithms for autonomous systems that safely and reliably interact with people.


MIT Technology Review


What is AI?

Everyone thinks they know but no one can agree. And that’s a problem.

By Will Douglas Heaven

Internet nastiness, name-calling, and other not-so-petty, world-altering disagreements

AI is sexy, AI is cool. AI is entrenching inequality, upending the job market, and wrecking education. AI is a theme-park ride, AI is a magic trick. AI is our final invention, AI is a moral obligation. AI is the buzzword of the decade, AI is marketing jargon from 1955. AI is humanlike, AI is alien. AI is super-smart and as dumb as dirt. The AI boom will boost the economy, the AI bubble is about to burst. AI will increase abundance and empower humanity to maximally flourish in the universe. AI will kill us all.

What the hell is everybody talking about?

Artificial intelligence is the hottest technology of our time. But what is it? It sounds like a stupid question, but it’s one that’s never been more urgent. Here’s the short answer: AI is a catchall term for a set of technologies that make computers do things that are thought to require intelligence when done by people. Think of recognizing faces, understanding speech, driving cars, writing sentences, answering questions, creating pictures. But even that definition contains multitudes.

And that right there is the problem. What does it mean for machines to understand speech or write a sentence? What kinds of tasks could we ask such machines to do? And how much should we trust the machines to do them?

As this technology moves from prototype to product faster and faster, these have become questions for all of us. But (spoilers!) I don’t have the answers. I can’t even tell you what AI is. The people making it don’t know what AI is either. Not really. “These are the kinds of questions that are important enough that everyone feels like they can have an opinion,” says Chris Olah, chief scientist at the San Francisco–based AI lab Anthropic. “I also think you can argue about this as much as you want and there’s no evidence that’s going to contradict you right now.”

But if you’re willing to buckle up and come for a ride, I can tell you why nobody really knows, why everybody seems to disagree, and why you’re right to care about it.

Let’s start with an offhand joke.

Back in 2022, partway through the first episode of Mystery AI Hype Theater 3000, a party-pooping podcast in which the irascible cohosts Alex Hanna and Emily Bender have a lot of fun sticking “the sharpest needles” into some of Silicon Valley’s most inflated sacred cows, they make a ridiculous suggestion. They’re hate-reading aloud from a 12,500-word Medium post by a Google VP of engineering, Blaise Agüera y Arcas, titled “Can machines learn how to behave?” Agüera y Arcas makes a case that AI can understand concepts in a way that’s somehow analogous to the way humans understand concepts—concepts such as moral values. In short, perhaps machines can be taught to behave.

Hanna and Bender are having none of it. They decide to replace the term “AI” with “mathy math”—you know, just lots and lots of math.

The irreverent phrase is meant to collapse what they see as bombast and anthropomorphism in the sentences being quoted. Pretty soon Hanna, a sociologist and director of research at the Distributed AI Research Institute, and Bender, a computational linguist at the University of Washington (and internet-famous critic of tech industry hype), open a gulf between what Agüera y Arcas wants to say and how they choose to hear it.

“How should AIs, their creators, and their users be held morally accountable?” asks Agüera y Arcas.

How should mathy math be held morally accountable? asks Bender.

“There’s a category error here,” she says. Hanna and Bender don’t just reject what Agüera y Arcas says; they claim it makes no sense. “Can we please stop it with the ‘an AI’ or ‘the AIs’ as if they are, like, individuals in the world?” Bender says.

It might sound as if they’re talking about different things, but they’re not. Both sides are talking about large language models, the technology behind the current AI boom. It’s just that the way we talk about AI is more polarized than ever. In May, OpenAI CEO Sam Altman teased the latest update to GPT-4, his company’s flagship model, by tweeting, “Feels like magic to me.”

There’s a lot of road between math and magic.

AI has acolytes, with a faith-like belief in the technology’s current power and inevitable future improvement. Artificial general intelligence is in sight, they say; superintelligence is coming behind it. And it has heretics, who pooh-pooh such claims as mystical mumbo-jumbo.

The buzzy popular narrative is shaped by a pantheon of big-name players, from Big Tech marketers in chief like Sundar Pichai and Satya Nadella to edgelords of industry like Elon Musk and Altman to celebrity computer scientists like Geoffrey Hinton. Sometimes these boosters and doomers are one and the same, telling us that the technology is so good it’s bad.

As AI hype has ballooned, a vocal anti-hype lobby has risen in opposition, ready to smack down its ambitious, often wild claims. Pulling in this direction are a raft of researchers, including Hanna and Bender, and also outspoken industry critics like influential computer scientist and former Googler Timnit Gebru and NYU cognitive scientist Gary Marcus. All have a chorus of followers bickering in their replies.

In short, AI has come to mean all things to all people, splitting the field into fandoms. It can feel as if different camps are talking past one another, not always in good faith.

Maybe you find all this silly or tiresome. But given the power and complexity of these technologies—which are already used to determine how much we pay for insurance, how we look up information, how we do our jobs, etc. etc. etc.—it’s about time we at least agreed on what it is we’re even talking about.

Yet in all the conversations I’ve had with people at the cutting edge of this technology, no one has given a straight answer about exactly what it is they’re building. (A quick side note: This piece focuses on the AI debate in the US and Europe, largely because many of the best-funded, most cutting-edge AI labs are there. But of course there’s important research happening elsewhere, too, in countries with their own varying perspectives on AI, particularly China.) Partly, it’s the pace of development. But the science is also wide open. Today’s large language models can do amazing things. The field just can’t find common ground on what’s really going on under the hood.

These models are trained to complete sentences. They appear to be able to do a lot more—from solving high school math problems to writing computer code to passing law exams to composing poems. When a person does these things, we take it as a sign of intelligence. What about when a computer does it? Is the appearance of intelligence enough?

These questions go to the heart of what we mean by “artificial intelligence,” a term people have actually been arguing about for decades. But the discourse around AI has become more acrimonious with the rise of large language models that can mimic the way we talk and write with thrilling/chilling (delete as applicable) realism.

We have built machines with humanlike behavior but haven’t shrugged off the habit of imagining a humanlike mind behind them. This leads to over-egged evaluations of what AI can do; it hardens gut reactions into dogmatic positions, and it plays into the wider culture wars between techno-optimists and techno-skeptics.

Add to this stew of uncertainty a truckload of cultural baggage, from the science fiction that I’d bet many in the industry were raised on, to far more malign ideologies that influence the way we think about the future. Given this heady mix, arguments about AI are no longer simply academic (and perhaps never were). AI inflames people’s passions and makes grownups call each other names.

“It’s not in an intellectually healthy place right now,” Marcus says of the debate. For years Marcus has pointed out the flaws and limitations of deep learning, the tech that launched AI into the mainstream, powering everything from LLMs to image recognition to self-driving cars. His 2001 book The Algebraic Mind argued that neural networks, the foundation on which deep learning is built, are incapable of reasoning by themselves. (We’ll skip over it for now, but I’ll come back to it later and we’ll see just how much a word like “reasoning” matters in a sentence like this.)

Marcus says that he has tried to engage Hinton—who last year went public with existential fears about the technology he helped invent—in a proper debate about how good large language models really are. “He just won’t do it,” says Marcus. “He calls me a twit.” (Having talked to Hinton about Marcus in the past, I can confirm that. “ChatGPT clearly understands neural networks better than he does,” Hinton told me last year.) Marcus also drew ire when he wrote an essay titled “Deep learning is hitting a wall.” Altman responded to it with a tweet: “Give me the confidence of a mediocre deep learning skeptic.”

At the same time, banging his drum has made Marcus a one-man brand and earned him an invitation to sit next to Altman and give testimony last year before the US Senate’s AI oversight committee.

And that’s why all these fights matter more than your average internet nastiness. Sure, there are big egos and vast sums of money at stake. But more than that, these disputes matter when industry leaders and opinionated scientists are summoned by heads of state and lawmakers to explain what this technology is and what it can do (and how scared we should be). They matter when this technology is being built into software we use every day, from search engines to word-processing apps to assistants on your phone. AI is not going away. But if we don’t know what we’re being sold, who’s the dupe?

“It is hard to think of another technology in history about which such a debate could be had—a debate about whether it is everywhere, or nowhere at all,” Stephen Cave and Kanta Dihal write in Imagining AI, a 2023 collection of essays about how different cultural beliefs shape people’s views of artificial intelligence. “That it can be held about AI is a testament to its mythic quality.”

Above all else, AI is an idea—an ideal—shaped by worldviews and sci-fi tropes as much as by math and computer science. Figuring out what we are talking about when we talk about AI will clarify many things. We won’t agree on them, but common ground on what AI is would be a great place to start talking about what AI should be.

What is everyone really fighting about, anyway?

In late 2022, soon after OpenAI released ChatGPT, a new meme started circulating online that captured the weirdness of this technology better than anything else. In most versions, a Lovecraftian monster called the Shoggoth, all tentacles and eyeballs, holds up a bland smiley-face emoji as if to disguise its true nature. ChatGPT presents as humanlike and accessible in its conversational wordplay, but behind that façade lie unfathomable complexities—and horrors. (“It was a terrible, indescribable thing vaster than any subway train—a shapeless congeries of protoplasmic bubbles,” H.P. Lovecraft wrote of the Shoggoth in his 1936 novella At the Mountains of Madness.)

For years one of the best-known touchstones for AI in pop culture was The Terminator, says Dihal. But by putting ChatGPT online for free, OpenAI gave millions of people firsthand experience of something different. “AI has always been a sort of really vague concept that can expand endlessly to encompass all kinds of ideas,” she says. But ChatGPT made those ideas tangible: “Suddenly, everybody has a concrete thing to refer to.” What is AI? For millions of people the answer was now: ChatGPT.

The AI industry is selling that smiley face hard. Consider how The Daily Show recently skewered the hype, as expressed by industry leaders. Silicon Valley’s VC in chief, Marc Andreessen: “This has the potential to make life much better … I think it’s honestly a layup.” Altman: “I hate to sound like a utopic tech bro here, but the increase in quality of life that AI can deliver is extraordinary.” Pichai: “AI is the most profound technology that humanity is working on. More profound than fire.”

Jon Stewart: “Yeah, suck a dick, fire!”

But as the meme points out, ChatGPT is a friendly mask. Behind it is a monster called GPT-4, a large language model built from a vast neural network that has ingested more words than most of us could read in a thousand lifetimes. During training, which can last months and cost tens of millions of dollars, such models are given the task of filling in blanks in sentences taken from millions of books and a significant fraction of the internet. They do this task over and over again. In a sense, they are trained to be supercharged autocomplete machines. The result is a model that has turned much of the world’s written information into a statistical representation of which words are most likely to follow other words, captured across billions and billions of numerical values.
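To make the "supercharged autocomplete" idea concrete, here is a drastically simplified sketch. It is not how GPT-4 works: real large language models are neural networks trained on vast corpora, whereas this toy (with a made-up corpus and hypothetical function names) merely counts which word follows which and predicts the most frequent follower.

```python
from collections import Counter, defaultdict

# Tiny made-up corpus; a real model would see vastly more text.
CORPUS = "the cat sat on the mat . the cat ate the fish ."


def train_bigrams(text):
    """Count, for every word, how often each other word follows it."""
    counts = defaultdict(Counter)
    words = text.split()
    for current, following in zip(words, words[1:]):
        counts[current][following] += 1
    return counts


def most_likely_next(counts, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    if word not in counts:
        return None
    return counts[word].most_common(1)[0][0]


counts = train_bigrams(CORPUS)
print(most_likely_next(counts, "the"))  # "cat" follows "the" most often here
```

The table of counts plays the role of the "statistical representation" in the passage above, except that a large language model stores it implicitly in billions of learned numerical values rather than in an explicit lookup table.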

It’s math—a hell of a lot of math. Nobody disputes that. But is it just that, or does this complex math encode algorithms capable of something akin to human reasoning or the formation of concepts?

Many of the people who answer yes to that question believe we’re close to unlocking something called artificial general intelligence, or AGI, a hypothetical future technology that can do a wide range of tasks as well as humans can. A few of them have even set their sights on what they call superintelligence, sci-fi technology that can do things far better than humans. This cohort believes AGI will drastically change the world—but to what end? That’s yet another point of tension. It could fix all the world’s problems—or bring about its doom.

kinda mad how the so called godfathers of AI managed to convince seemingly smart people within AI field & many regulators to buy into the absurd idea that a sophisticated curve fitting (to a dataset) machine can have the urge to exterminate humans — Abeba Birhane (@Abebab) June 30, 2024

Today AGI appears in the mission statements of the world’s top AI labs. But the term was invented in 2007 as a niche attempt to inject some pizzazz into a field that was then best known for applications that read handwriting on bank deposit slips or recommended your next book to buy. The idea was to reclaim the original vision of an artificial intelligence that could do humanlike things (more on that soon).

It was really an aspiration more than anything else, Google DeepMind cofounder Shane Legg, who coined the term, told me last year: “I didn’t have an especially clear definition.”

AGI became the most controversial idea in AI. Some talked it up as the next big thing: AGI was AI but, you know, much better. Others claimed the term was so vague that it was meaningless.

“AGI used to be a dirty word,” Ilya Sutskever told me, before he resigned as chief scientist at OpenAI.

But large language models, and ChatGPT in particular, changed everything. AGI went from dirty word to marketing dream.

Which brings us to what I think is one of the most illustrative disputes of the moment—one that sets up the sides of the argument and the stakes in play.

Seeing magic in the machine

A few months before the public launch of OpenAI’s large language model GPT-4 in March 2023, the company shared a prerelease version with Microsoft, which wanted to use the new model to revamp its search engine Bing.

At the time, Sebastian Bubeck was studying the limitations of LLMs and was somewhat skeptical of their abilities. In particular, Bubeck, the vice president of generative AI research at Microsoft Research in Redmond, Washington, had been trying and failing to get the technology to solve middle school math problems. Things like: x – y = 0; what are x and y? “My belief was that reasoning was a bottleneck, an obstacle,” he says. “I thought that you would have to do something really fundamentally different to get over that obstacle.”

Then he got his hands on GPT-4. The first thing he did was try those math problems. “The model nailed it,” he says. “Sitting here in 2024, of course GPT-4 can solve linear equations. But back then, this was crazy. GPT-3 cannot do that.”

But Bubeck’s real road-to-Damascus moment came when he pushed it to do something new.

The thing about middle school math problems is that they are all over the internet, and GPT-4 may simply have memorized them. “How do you study a model that may have seen everything that human beings have written?” asks Bubeck. His answer was to test GPT-4 on a range of problems that he and his colleagues believed to be novel.

Playing around with Ronen Eldan, a mathematician at Microsoft Research, Bubeck asked GPT-4 to give, in verse, a mathematical proof that there are an infinite number of primes.

Here’s a snippet of GPT-4’s response: “If we take the smallest number in S that is not in P / And call it p, we can add it to our set, don’t you see? / But this process can be repeated indefinitely. / Thus, our set P must also be infinite, you’ll agree.”

Cute, right? But Bubeck and Eldan thought it was much more than that. “We were in this office,” says Bubeck, waving at the room behind him via Zoom. “Both of us fell from our chairs. We couldn’t believe what we were seeing. It was just so creative and so, like, you know, different.”

The Microsoft team also got GPT-4 to generate the code to add a horn to a cartoon picture of a unicorn drawn in LaTeX, a document typesetting system. Bubeck thinks this shows that the model could read the existing LaTeX code, understand what it depicted, and identify where the horn should go.

“There are many examples, but a few of them are smoking guns of reasoning,” he says—reasoning being a crucial building block of human intelligence.

Bubeck, Eldan, and a team of other Microsoft researchers described their findings in a paper that they called “Sparks of Artificial General Intelligence”: “We believe that GPT-4’s intelligence signals a true paradigm shift in the field of computer science and beyond.” When Bubeck shared the paper online, he tweeted: “time to face it, the sparks of #AGI have been ignited.”

The Sparks paper quickly became infamous, and a touchstone for AI boosters. Agüera y Arcas and Peter Norvig, a former director of research at Google and coauthor of Artificial Intelligence: A Modern Approach, perhaps the most popular AI textbook in the world, cowrote an article called “Artificial General Intelligence Is Already Here.” Published in Noema, a magazine backed by an LA think tank called the Berggruen Institute, their argument uses the Sparks paper as a jumping-off point: “Artificial General Intelligence (AGI) means many different things to different people, but the most important parts of it have already been achieved by the current generation of advanced AI large language models,” they wrote. “Decades from now, they will be recognized as the first true examples of AGI.”

Since then, the hype has continued to balloon. Leopold Aschenbrenner, who at the time was a researcher at OpenAI focusing on superintelligence, told me last year: “AI progress in the last few years has been just extraordinarily rapid. We’ve been crushing all the benchmarks, and that progress is continuing unabated. But it won’t stop there. We’re going to have superhuman models, models that are much smarter than us.” (He was fired from OpenAI in April because, he claims, he raised security concerns about the tech he was building and “ruffled some feathers.” He has since set up a Silicon Valley investment fund.)

In June, Aschenbrenner put out a 165-page manifesto arguing that AI will outpace college graduates by “2025/2026” and that “we will have superintelligence, in the true sense of the word” by the end of the decade. But others in the industry scoff at such claims. When Aschenbrenner tweeted a chart to show how fast he thought AI would continue to improve given how fast it had improved in the last few years, the tech investor Christian Keil replied that by the same logic, his baby son, who had doubled in size since he was born, would weigh 7.5 trillion tons by the time he was 10.
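
Keil’s joke rests on simple compounding: assume a quantity keeps doubling at its early rate and the numbers turn absurd fast. Here is a minimal Python sketch of that arithmetic. The birth weight and doubling period are illustrative assumptions of mine, not figures from Keil’s reply, and the function name is hypothetical, so the result is not meant to reproduce his number.

```python
def extrapolated_weight_kg(birth_kg, doubling_months, age_months):
    """Naively assume weight keeps doubling every `doubling_months` months."""
    doublings = age_months / doubling_months
    return birth_kg * 2 ** doublings


# Assumed inputs, chosen only for illustration.
BIRTH_KG = 3.5          # hypothetical birth weight
DOUBLING_MONTHS = 3     # hypothetical time taken to double in size

for age_years in (1, 2, 5, 10):
    kg = extrapolated_weight_kg(BIRTH_KG, DOUBLING_MONTHS, age_years * 12)
    print(f"age {age_years:>2}: {kg:,.0f} kg")
```

Ten years of doubling every three months is 40 doublings, a factor of about a trillion. The curve fits the first data points perfectly and says nothing reliable about what comes next, which is the skeptics’ point.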

It’s no surprise that “sparks of AGI” has also become a byword for over-the-top buzz. “I think they got carried away,” says Marcus, speaking about the Microsoft team. “They got excited, like ‘Hey, we found something! This is amazing!’ They didn’t vet it with the scientific community.” Bender refers to the Sparks paper as a “fan fiction novella.”

Not only was it provocative to claim that GPT-4’s behavior showed signs of AGI, but Microsoft, which uses GPT-4 in its own products, has a clear interest in promoting the capabilities of the technology. “This document is marketing fluff masquerading as research,” one tech COO posted on LinkedIn.

Some also felt the paper’s methodology was flawed. Its evidence is hard to verify because it comes from interactions with a version of GPT-4 that was not made available outside OpenAI and Microsoft. The public version has guardrails that restrict the model’s capabilities, admits Bubeck. This made it impossible for other researchers to re-create his experiments.

One group tried to re-create the unicorn example with a coding language called Processing, which GPT-4 can also use to generate images. They found that the public version of GPT-4 could produce a passable unicorn but not flip or rotate that image by 90 degrees. It may seem like a small difference, but such things really matter when you’re claiming that the ability to draw a unicorn is a sign of AGI.

The key thing about the examples in the Sparks paper, including the unicorn, is that Bubeck and his colleagues believe they are genuine examples of creative reasoning. This means the team had to be certain that examples of these tasks, or ones very like them, were not included anywhere in the vast data sets that OpenAI amassed to train its model. Otherwise, the results could be interpreted instead as instances where GPT-4 reproduced patterns it had already seen.

Bubeck insists that they set the model only tasks that would not be found on the internet. Drawing a cartoon unicorn in LaTeX was surely one such task. But the internet is a big place. Other researchers soon pointed out that there are indeed online forums dedicated to drawing animals in LaTeX. “Just fyi we knew about this,” Bubeck replied on X. “Every single query of the Sparks paper was thoroughly looked for on the internet.”

(This didn’t stop the name-calling: “I’m asking you to stop being a charlatan,” Ben Recht, a computer scientist at the University of California, Berkeley, tweeted back before accusing Bubeck of “being caught flat-out lying.”)

Bubeck insists the work was done in good faith, but he and his coauthors admit in the paper itself that their approach was not rigorous—notebook observations rather than foolproof experiments.

Still, he has no regrets: “The paper has been out for more than a year and I have yet to see anyone give me a convincing argument that the unicorn, for example, is not a real example of reasoning.”

That’s not to say he can give me a straight answer to the big question—though his response reveals what kind of answer he’d like to give. “What is AI?” Bubeck repeats back to me. “I want to be clear with you. The question can be simple, but the answer can be complex.”

“There are many simple questions out there to which we still don’t know the answer. And some of those simple questions are the most profound ones,” he says. “I’m putting this on the same footing as, you know, What is the origin of life? What is the origin of the universe? Where did we come from? Big, big questions like this.”

Seeing only math in the machine

Before Bender became one of the chief antagonists of AI’s boosters, she made her mark on the AI world as a coauthor on two influential papers. (Both peer-reviewed, she likes to point out, unlike the Sparks paper and many of the others that get much of the attention.) The first, written with Alexander Koller, a fellow computational linguist at Saarland University in Germany, and published in 2020, was called “Climbing towards NLU” (NLU is natural-language understanding).

“The start of all this for me was arguing with other people in computational linguistics whether or not language models understand anything,” she says. (Understanding, like reasoning, is typically taken to be a basic ingredient of human intelligence.)

Bender and Koller argue that a model trained exclusively on text will only ever learn the form of a language, not its meaning. Meaning, they argue, consists of two parts: the words (which could be marks or sounds) plus the reason those words were uttered. People use language for many reasons, such as sharing information, telling jokes, flirting, warning somebody to back off, and so on. Stripped of that context, the text used to train LLMs like GPT-4 lets them mimic the patterns of language well enough for many sentences generated by the LLM to look exactly like sentences written by a human. But there’s no meaning behind them, no spark. It’s a remarkable statistical trick, but completely mindless.

They illustrate their point with a thought experiment. Imagine two English-speaking people stranded on neighboring deserted islands. There is an underwater cable that lets them send text messages to each other. Now imagine that an octopus, which knows nothing about English but is a whiz at statistical pattern matching, wraps its suckers around the cable and starts listening in to the messages. The octopus gets really good at guessing what words follow other words. So good that when it breaks the cable and starts replying to messages from one of the islanders, she believes that she is still chatting with her neighbor. (In case you missed it, the octopus in this story is a chatbot.)

The person talking to the octopus would stay fooled for a reasonable amount of time, but could that last? Does the octopus understand what comes down the wire?

Imagine that the islander now says she has built a coconut catapult and asks the octopus to build one too and tell her what it thinks. The octopus cannot do this. Without knowing what the words in the messages refer to in the world, it cannot follow the islander’s instructions. Perhaps it guesses a reply: “Okay, cool idea!” The islander will probably take this to mean that the person she is speaking to understands her message. But if so, she is seeing meaning where there is none. Finally, imagine that the islander gets attacked by a bear and sends calls for help down the line. What is the octopus to do with these words?

Bender and Koller believe that this is how large language models learn and why they are limited. “The thought experiment shows why this path is not going to lead us to a machine that understands anything,” says Bender. “The deal with the octopus is that we have given it its training data, the conversations between those two people, and that’s it. But then here’s something that comes out of the blue and it won’t be able to deal with it because it hasn’t understood.”
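
To make the octopus concrete, here is a toy Python sketch of a system that does nothing but guess which word follows which. It is a simple bigram counter, far cruder than any real large language model, and the overheard messages and function names are invented for illustration.

```python
import random
from collections import Counter, defaultdict


def train_bigrams(messages):
    """Count, for each word, which words were seen immediately after it."""
    follows = defaultdict(Counter)
    for message in messages:
        words = message.lower().split()
        for current, nxt in zip(words, words[1:]):
            follows[current][nxt] += 1
    return follows


def guess_reply(follows, first_word, max_words=8, seed=0):
    """Extend first_word by sampling followers in proportion to their counts."""
    rng = random.Random(seed)
    words = [first_word]
    # Stop when the length limit is hit or the last word has no known follower.
    while len(words) < max_words and follows.get(words[-1]):
        candidates = follows[words[-1]]
        nxt = rng.choices(list(candidates), weights=list(candidates.values()))[0]
        words.append(nxt)
    return " ".join(words)


# Invented examples of what the octopus might overhear on the cable.
overheard = [
    "the tide is high today",
    "the fish are biting today",
    "the tide is low tonight",
]
model = train_bigrams(overheard)

print(guess_reply(model, "the"))       # a plausible-looking chain of seen word pairs
print(guess_reply(model, "catapult"))  # never seen: nothing to continue with
```

Every reply is stitched together from word pairs the model has already seen. Hand it a word that never came down the wire, such as “catapult,” and it has nothing to go on, which is the limit Bender and Koller describe. Whether today’s far larger models share that limit is exactly what the two camps dispute.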

The other paper Bender is known for, “On the Dangers of Stochastic Parrots,” highlights a series of harms that she and her coauthors believe the companies making large language models are ignoring. These include the huge computational costs of making the models and their environmental impact; the racist, sexist, and other abusive language the models entrench; and the dangers of building a system that could fool people by “haphazardly stitching together sequences of linguistic forms … according to probabilistic information about how they combine, but without any reference to meaning: a stochastic parrot.”

Google senior management wasn’t happy with the paper, and the resulting conflict led two of Bender’s coauthors, Timnit Gebru and Margaret Mitchell, to be forced out of the company, where they had led the AI Ethics team. It also made “stochastic parrot” a popular put-down for large language models—and landed Bender right in the middle of the name-calling merry-go-round.

The bottom line for Bender and for many like-minded researchers is that the field has been taken in by smoke and mirrors: “I think that they are led to imagine autonomous thinking entities that can make decisions for themselves and ultimately be the kind of thing that could actually be accountable for those decisions.”

Always the linguist, Bender is now at the point where she won’t even use the term AI “without scare quotes,” she tells me. Ultimately, for her, it’s a Big Tech buzzword that distracts from the many associated harms. “I’ve got skin in the game now,” she says. “I care about these issues, and the hype is getting in the way.”

Extraordinary evidence?