
Machine learning model stacking in Python: find out how stacking can be used to improve model performance.

Suyash Maheshwari

Stacking is a type of ensemble learning wherein multiple layers of models are used to make the final predictions. More specifically, we predict the train set (in a CV-like fashion) and the test set using some first-level models, and then use these predictions as features for a second-level model.
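The out-of-fold procedure described above can be sketched with plain scikit-learn. This is a minimal illustration under stated assumptions: the breast cancer dataset is a stand-in (the article's dataset is not named), the two first-level models are arbitrary, and the helper `build_stack_features` is hypothetical. Here the test-set features come from refitting each model on the full training set; another common variant averages the test predictions of the per-fold models instead.

```python
import numpy as np
from sklearn.datasets import load_breast_cancer
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_predict, train_test_split
from sklearn.neighbors import KNeighborsClassifier
from sklearn.tree import DecisionTreeClassifier


def build_stack_features(models, X_train, y_train, X_test, n_folds=5):
    """Hypothetical helper: one column of positive-class probabilities per model."""
    train_cols, test_cols = [], []
    for model in models:
        # Out-of-fold predictions: every training row is scored by a copy of the
        # model fitted on the other folds, so it never saw that row
        train_cols.append(
            cross_val_predict(model, X_train, y_train, cv=n_folds, method="predict_proba")[:, 1])
        # Test-set features: refit on the whole training set, then predict
        test_cols.append(model.fit(X_train, y_train).predict_proba(X_test)[:, 1])
    return np.column_stack(train_cols), np.column_stack(test_cols)


X, y = load_breast_cancer(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, stratify=y, random_state=0)

first_level = [DecisionTreeClassifier(max_depth=3, random_state=0),
               KNeighborsClassifier(n_neighbors=15)]
S_train, S_test = build_stack_features(first_level, X_train, y_train, X_test)

# Second-level model trained only on the first-level predictions
second_level = LogisticRegression().fit(S_train, y_train)
print(S_train.shape, S_test.shape, second_level.score(S_test, y_test))
```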

We can do this in Python using a library called vecstack. The library was developed by Igor Ivanov and released in 2016. In this article, we will look at a basic implementation with this library for a classification problem.

The library can be installed with pip (pip install vecstack).

Next, we import it:

First, we create the individual models and perform hyperparameter tuning to find the best parameters for each of them. To avoid overfitting, we use cross-validation: we split the data into 5 folds and compute the mean ROC AUC score.

1. Decision Tree Classifier

2. Random Forest Classifier

3. Multilayer Perceptron Classifier

4. K-Nearest Neighbors Classifier

5. Support Vector Machine Classifier
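The tuning code for the five models is not shown. A hedged sketch of the same idea (5-fold cross-validation ranked by mean ROC AUC) with scikit-learn's GridSearchCV follows; the dataset is a stand-in and the small grids are hypothetical, not the ones used in the article. Scale-sensitive models (MLP, KNN, SVM) are wrapped in a StandardScaler pipeline so that each fold is scaled using only its own training data.

```python
from sklearn.datasets import load_breast_cancer
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import GridSearchCV
from sklearn.neighbors import KNeighborsClassifier
from sklearn.neural_network import MLPClassifier
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC
from sklearn.tree import DecisionTreeClassifier

X, y = load_breast_cancer(return_X_y=True)

# (estimator, hypothetical parameter grid); pipeline step names are the
# lowercased class names that make_pipeline assigns
candidates = {
    "tree": (DecisionTreeClassifier(random_state=0), {"max_depth": [3, 5, None]}),
    "forest": (RandomForestClassifier(random_state=0), {"n_estimators": [50, 100]}),
    "mlp": (make_pipeline(StandardScaler(), MLPClassifier(max_iter=1000, random_state=0)),
            {"mlpclassifier__hidden_layer_sizes": [(16,), (32,)]}),
    "knn": (make_pipeline(StandardScaler(), KNeighborsClassifier()),
            {"kneighborsclassifier__n_neighbors": [5, 15]}),
    "svm": (make_pipeline(StandardScaler(), SVC()), {"svc__C": [0.1, 1, 10]}),
}

best_models, cv_scores = {}, {}
for name, (estimator, grid) in candidates.items():
    # 5-fold CV, ranking parameter settings by mean ROC AUC
    search = GridSearchCV(estimator, grid, cv=5, scoring="roc_auc")
    search.fit(X, y)
    best_models[name] = search.best_estimator_
    cv_scores[name] = search.best_score_
    print(f"{name}: mean ROC AUC = {search.best_score_:.4f} with {search.best_params_}")
```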

Next, we create a base layer for our stacking model by passing in all of the above-mentioned models. We predict the train set and the test set with these first-level models and then use the predictions as features for the second-level model. Any model can be used as a first-level or a second-level model.

Next, we pass the predictions of these models as input to our layer-2 model, which is the MLP classifier in this case. We also perform hyperparameter tuning and cross-validation for this model.
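The second level can be sketched as follows, assuming the first-level features are out-of-fold probabilities as described at the start of this article. The dataset is a stand-in, only two base models are used for brevity, and the MLP grid is hypothetical. Because the level-1 features were built from the same training rows, the cross-validated score of the level-2 model can be slightly optimistic, so the sketch also reports ROC AUC on a held-out test split.

```python
import numpy as np
from sklearn.datasets import load_breast_cancer
from sklearn.ensemble import RandomForestClassifier
from sklearn.metrics import roc_auc_score
from sklearn.model_selection import GridSearchCV, cross_val_predict, train_test_split
from sklearn.neural_network import MLPClassifier
from sklearn.tree import DecisionTreeClassifier

X, y = load_breast_cancer(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(X, y, stratify=y, random_state=0)

base_models = [DecisionTreeClassifier(max_depth=4, random_state=0),
               RandomForestClassifier(n_estimators=100, random_state=0)]

# Level-1 features: out-of-fold probabilities on train, full-fit probabilities on test
S_train = np.column_stack([
    cross_val_predict(m, X_train, y_train, cv=5, method="predict_proba")[:, 1]
    for m in base_models])
S_test = np.column_stack([
    m.fit(X_train, y_train).predict_proba(X_test)[:, 1] for m in base_models])

# Level-2 model: an MLP tuned with 5-fold CV on the stacked features, scored by ROC AUC
search = GridSearchCV(
    MLPClassifier(max_iter=2000, random_state=0),
    {"hidden_layer_sizes": [(4,), (8,)], "alpha": [1e-4, 1e-2]},
    cv=5, scoring="roc_auc")
search.fit(S_train, y_train)
print("mean CV ROC AUC:", search.best_score_)
print("held-out ROC AUC:", roc_auc_score(y_test, search.predict_proba(S_test)[:, 1]))
```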

The mean ROC AUC score for the final model is 0.9321746030659209.

Conclusion:

Therefore, using stacking we were able to improve the performance of the model by at least 7%! Stacking is a way to combine the strengths of multiple models into a single powerful model. Having said this, stacking may not always be the best option: it can require significant computational resources, and the decision to use it should be based on the business case, time, and money. A huge shoutout to Igor Ivanov, developer of this library, for such amazing work.

Written by Suyash Maheshwari

MS in Business Analytics ’22, WP Carey, Arizona State University | Experience in Data Science & Machine Learning



Title: Bayesian hierarchical stacking: Some models are (somewhere) useful

Abstract: Stacking is a widely used model averaging technique that asymptotically yields optimal predictions among linear averages. We show that stacking is most effective when model predictive performance is heterogeneous in inputs, and we can further improve the stacked mixture with a hierarchical model. We generalize stacking to Bayesian hierarchical stacking. The model weights are varying as a function of data, partially-pooled, and inferred using Bayesian inference. We further incorporate discrete and continuous inputs, other structured priors, and time series and longitudinal data. To verify the performance gain of the proposed method, we derive theory bounds, and demonstrate on several applied problems.
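For context, the standard (non-hierarchical) stacking of predictive distributions that this paper generalizes chooses weights w on the simplex to maximize the summed log predictive density of held-out points, Σ_i log Σ_k w_k p(y_i | y_{-i}, M_k). The sketch below implements only that baseline with SciPy, assuming the pointwise leave-one-out log densities have already been computed elsewhere. The function name is hypothetical, and the paper's hierarchical version, where the weights vary with the inputs and are partially pooled, is not implemented here.

```python
import numpy as np
from scipy.optimize import minimize
from scipy.special import logsumexp, softmax
from scipy.stats import norm


def stacking_weights(log_dens):
    """Simplex weights w maximizing sum_i log(sum_k w_k * p_ik).

    log_dens[i, k] is the log predictive density of held-out point i under model k.
    """
    n_models = log_dens.shape[1]

    def neg_log_score(z):
        log_w = z - logsumexp(z)  # log-softmax keeps the weights on the simplex
        # log(sum_k w_k p_ik), computed stably in log space for every point i
        return -np.sum(logsumexp(log_dens + log_w, axis=1))

    result = minimize(neg_log_score, np.zeros(n_models), method="BFGS")
    return softmax(result.x)


# Toy check: data from N(0, 1); model A predicts N(0, 1), model B predicts N(2, 1)
rng = np.random.default_rng(0)
y_obs = rng.normal(0.0, 1.0, size=500)
log_dens = np.column_stack([norm.logpdf(y_obs, 0.0, 1.0), norm.logpdf(y_obs, 2.0, 1.0)])
w = stacking_weights(log_dens)
print(np.round(w, 3))
```

In this toy case almost all of the weight should go to model A, since it is the data-generating distribution.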

Comments: minor revision

Subjects: Methodology (stat.ME); Machine Learning (cs.LG); Machine Learning (stat.ML)

Journal reference: Bayesian Analysis (2021)


Intro to Model Stacking in Python

Dilyan Kovachev

“I am pretty happy with my basic model’s performance!” …said no Data Scientist ever…

If you don’t get down with that 50% accuracy rate, and you often find yourself tweaking your Machine Learning models until 3:00 am just so you can squeeze out that extra 1% of performance, or if you’re just curious to learn what it takes to win major Kaggle contests, then this article is for you.

What is Model Stacking?

Model stacking is a Data Science ensemble method that relies on the “wisdom of the crowd” premise: a diverse selection of combined “weaker” learners working together will often outperform a single “strong” model. The winners of most major Kaggle competitions over the past 4–5 years have used some configuration of Model Stacking in their final winning models.

Model Stacking is analogous to real-world examples such as building successful human teams in business, science, sports, etc. If all the members of a team were really good at the exact same task, the team would crush any challenge that requires that one specific skill, but it would fail miserably when handling complex real-life problems that require a plethora of diverse skills, mindsets, and approaches. I do not know much about American football, but even with my limited knowledge, it is pretty obvious to me that you cannot win a football game with a team that consists of only quarterbacks, even if those quarterbacks are the best in the league. That is why optimal sports teams and successful business units consist of a diverse group of individuals with a wide range of strengths and weaknesses.

Cool story bro…but how does this work in practice?

Below you can see an example of the top winning model of a recent major Kaggle competition:

Note: It is very important to have a sufficient amount of data in order to perform robust Model Stacking. To avoid overfitting, you need to perform cross-validation at each stacking/training stage, keep some data aside as a “holdout” set for the testing stage, and make sure that there isn’t a huge discrepancy between the model’s performance on train and test data.
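One way to run the check described in this note is to compare a cross-validated score on the development data with the score on an untouched holdout split. This is a minimal sketch, assuming scikit-learn's StackingClassifier (which trains its meta-learner on internally cross-validated predictions) and the breast cancer dataset as a stand-in; the model choices are arbitrary.

```python
from sklearn.datasets import load_breast_cancer
from sklearn.ensemble import RandomForestClassifier, StackingClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_score, train_test_split
from sklearn.neighbors import KNeighborsClassifier
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

X, y = load_breast_cancer(return_X_y=True)
# The holdout split is never used for training or model selection
X_dev, X_hold, y_dev, y_hold = train_test_split(
    X, y, test_size=0.2, stratify=y, random_state=42)

stack = StackingClassifier(
    estimators=[("forest", RandomForestClassifier(n_estimators=100, random_state=0)),
                ("knn", make_pipeline(StandardScaler(), KNeighborsClassifier()))],
    final_estimator=LogisticRegression(max_iter=1000),
    cv=5,  # internal CV: the meta-learner is fit on out-of-fold base predictions
)

# Cross-validated estimate on the development data only
cv_acc = cross_val_score(stack, X_dev, y_dev, cv=5).mean()
# Final fit on all development data, scored once on the holdout
holdout_acc = stack.fit(X_dev, y_dev).score(X_hold, y_hold)
print(f"CV: {cv_acc:.3f}  holdout: {holdout_acc:.3f}  gap: {abs(cv_acc - holdout_acc):.3f}")
```

A small gap suggests the stacked model is not badly overfitting; a large one is a signal to revisit the splits at each stage.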

- Initial Stage: you run a variety of different standalone models and spend some time analyzing their individual performance metrics and thinking about where some models might have done better than others.

- Stage 1 Ensemble: you select a small “team” of those models, making sure there is low correlation between their predictions, so that your Stacked Model can benefit from the different strengths of its weaker members. You take the average of their predictions and construct a new table, which will be fed into a new, smaller team of models in Stage 2.

- Stage 2 Ensemble: you run a new ensemble of models, which use the averaged predictions from Stage 1 as features in order to learn new information about the relationships between the original variables.

- Stage 3 Ensemble: you repeat the same process, feeding the averaged predictions of the models from Stage 2 into a final “meta-learner” model, which should be chosen to fit the type of problem you're trying to solve. In the example above, the competitors used Linear Regression for their final model, probably because it was one of the models that performed best as a standalone model during the initial stage.
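Stage 1 asks for a team whose predictions have low correlation. The sketch below shows one way to measure that, assuming "low correlation between predictions" means the Pearson correlation between the candidates' out-of-fold predicted probabilities. The dataset and candidate models are stand-ins, not those from the competition.

```python
from itertools import combinations

import numpy as np
import pandas as pd
from sklearn.datasets import load_breast_cancer
from sklearn.ensemble import AdaBoostClassifier, RandomForestClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_predict
from sklearn.naive_bayes import GaussianNB
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

X, y = load_breast_cancer(return_X_y=True)
candidates = {
    "logreg": make_pipeline(StandardScaler(), LogisticRegression(max_iter=1000)),
    "forest": RandomForestClassifier(n_estimators=100, random_state=0),
    "adaboost": AdaBoostClassifier(random_state=0),
    "naive_bayes": GaussianNB(),
}

# Out-of-fold positive-class probability for every candidate, one column each
oof = pd.DataFrame({
    name: cross_val_predict(model, X, y, cv=5, method="predict_proba")[:, 1]
    for name, model in candidates.items()})

corr = oof.corr()  # Pearson correlation between the candidates' predictions
pair_corr = {(a, b): corr.loc[a, b] for a, b in combinations(corr.columns, 2)}
least_pair = min(pair_corr, key=pair_corr.get)

print(corr.round(2))
print("mean pairwise correlation:", round(float(np.mean(list(pair_corr.values()))), 3))
print("least correlated pair:", least_pair, round(pair_corr[least_pair], 3))
```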

Should you try this at home?

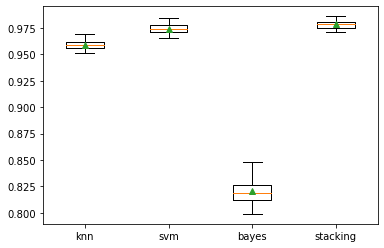

Absolutely! For my first experiment with Model Stacking, I decided to expand on my latest Data Science project, which was a Natural Language Processing model that aims to predict a song’s genre from its lyrics. For that project, we preprocessed a list of 16,000 song lyrics from eight different genres — Hip-Hop, Country, Pop, Rock, Metal, Electronic, Jazz, and R&B. We made sure to include 2000 songs per genre in our dataset to avoid the issue of class imbalance. The first step was to run a diverse set of basic models with a low correlation between their predictive methods in order to be able to build our Model Stacking teams accordingly. Please see results below:

Next, we decided to take the top 3 models and do some hyperparameter optimization via an extensive grid search to get a sense of the highest accuracy that our top standalone model can achieve. Results below:

As you can see from the graph below, our top performer achieved a testing accuracy of 50%, which at first sight doesn’t seem very high. However, given that our model is trying to predict which of 8 possible genres a song belongs to, this accuracy is exactly four times better than random guessing (probability of a random guess = 1/8, or 12.5%). This means that about half the time our model predicts exactly what genre a song belongs to, based only on that song’s lyrics, and that 50% testing accuracy was achieved on a testing set of over 3000 songs… not bad at all!

OK, Morpheus, let’s see how deep the rabbit hole goes…

First, I opted to combine and average the predictions of my three weakest learners (Random Forest, AdaBoost, and KNN classifiers) and construct a new data frame of features that I can feed into my strong learners.

Each column in the table above holds the average prediction coefficient for one of the eight genres, and each row is a song lyric with a corresponding true genre, stored in a separate y-variable as a list of class labels.
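The author's code for this step is not shown. A hedged sketch of the idea: average the class-probability outputs of Random Forest, AdaBoost, and KNN into a data frame with one column per genre. Synthetic 8-class data from make_classification stands in for the vectorized lyrics, the genre column names are illustrative, and the use of out-of-fold probabilities (so training rows never score themselves) is an assumption rather than the article's confirmed method.

```python
import numpy as np
import pandas as pd
from sklearn.datasets import make_classification
from sklearn.ensemble import AdaBoostClassifier, RandomForestClassifier
from sklearn.model_selection import cross_val_predict
from sklearn.neighbors import KNeighborsClassifier

# Synthetic stand-in for the lyrics features: 8 roughly balanced classes
X, y = make_classification(n_samples=1600, n_features=20, n_informative=10,
                           n_classes=8, random_state=0)
genres = ["hiphop", "country", "pop", "rock", "metal", "electronic", "jazz", "rnb"]

weak_learners = [RandomForestClassifier(n_estimators=100, random_state=0),
                 AdaBoostClassifier(random_state=0),
                 KNeighborsClassifier(n_neighbors=15)]

# Out-of-fold class probabilities from each weak learner, each of shape (1600, 8)
probas = [cross_val_predict(m, X, y, cv=5, method="predict_proba") for m in weak_learners]

# Average the three probability matrices: one column per genre, one row per song
stage1 = pd.DataFrame(np.mean(probas, axis=0), columns=genres)
print(stage1.head())
```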

Next, I split the data into a train and a test set and ran Stage 2 of the Model Stacking, where my strong learners, Gradient Boosting and Naive Bayes, used the combined predictions from the weak learners to generate a new set of predictions. Then, I combined the predicted results from Stage 2 into one final data frame, which I wanted to feed into my final meta-learner, a neural network (NN).

I opted to go with a Neural Network for the final stage of my Model Stacking because NNs tend to perform very well on multi-class classification problems and are pretty good at finding hidden links and figuring out complex relationships between dependent and independent variables. I used “softmax” as my output layer activation function because we’re trying to predict eight classes. Lo and behold, results below…
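The three-stage pipeline can be sketched end to end. Assumptions: synthetic 8-class data, a hypothetical helper `averaged_probas`, out-of-fold predictions at each stage, and scikit-learn's MLPClassifier in place of the author's neural network, whose framework and architecture are not given. For multiclass targets, MLPClassifier uses a softmax output layer, which matches the activation choice described above.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.ensemble import (AdaBoostClassifier, GradientBoostingClassifier,
                              RandomForestClassifier)
from sklearn.model_selection import cross_val_predict, train_test_split
from sklearn.naive_bayes import GaussianNB
from sklearn.neighbors import KNeighborsClassifier
from sklearn.neural_network import MLPClassifier


def averaged_probas(models, X_tr, y_tr, X_te, cv=5):
    """Hypothetical helper: mean predict_proba, out-of-fold on train, refit-on-train for test."""
    train = np.mean([cross_val_predict(m, X_tr, y_tr, cv=cv, method="predict_proba")
                     for m in models], axis=0)
    test = np.mean([m.fit(X_tr, y_tr).predict_proba(X_te) for m in models], axis=0)
    return train, test


# Synthetic 8-class stand-in for the lyrics data
X, y = make_classification(n_samples=1600, n_features=20, n_informative=10,
                           n_classes=8, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, stratify=y, random_state=0)

# Stage 1: average the weak learners' class probabilities (8 features per row)
s1_train, s1_test = averaged_probas(
    [RandomForestClassifier(n_estimators=100, random_state=0),
     AdaBoostClassifier(random_state=0),
     KNeighborsClassifier(n_neighbors=15)],
    X_train, y_train, X_test)

# Stage 2: each strong learner sees only the Stage 1 features; outputs kept side by side
stage2 = [averaged_probas([model], s1_train, y_train, s1_test)
          for model in (GradientBoostingClassifier(random_state=0), GaussianNB())]
s2_train = np.hstack([train for train, _ in stage2])
s2_test = np.hstack([test for _, test in stage2])

# Stage 3: neural-network meta-learner; MLPClassifier switches to a softmax
# output layer automatically when the target has more than two classes
meta = MLPClassifier(hidden_layer_sizes=(32,), max_iter=2000, random_state=0)
meta.fit(s2_train, y_train)
print("output activation:", meta.out_activation_)
print("holdout accuracy:", meta.score(s2_test, y_test))
```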

Final Thoughts

I felt exactly like the guy above when I saw the results of this Model Stacking experiment on my initial NLP project. The 80% accuracy was generated on a “holdout” set of test data, which consisted of 3600 song lyrics that the NN model had never seen before. The training accuracy of 86% with cross-validation was also very impressive, and what made me especially happy was the fact that there wasn’t a significant difference between the training set and the testing set performance, so there didn’t seem to be much overfitting.

I checked my code and my math multiple times to make sure that my cross-validations and train/test splits were valid at each stage, since a 30-percentage-point boost in performance (from 50% to 80% accuracy) seemed “too good to be true,” but I couldn’t find any mistakes in my code. Thus, I feel very happy with my first attempt at using Model Stacking, but I still recommend taking everything in this article with a grain of salt, since I could have made a beginner’s mistake somewhere in the process. That being said, I recommend that you give Model Stacking a try and see if it generates a similar boost in performance for some of your projects.

What are you waiting for?….You’re up!

I tried to keep this guide as simple as possible since I am in no way an expert on Model Stacking and I just wanted to provide a basic example of my first attempt at using this fancy Data Science approach. If you’re interested in doing a deeper dive on the subject, I recommend starting with the two sites below and if they spark your interest, you can try to read as much material by Marios Michailidis as you can find, including watching his YouTube videos:

- Stacking Made Easy: An Introduction to StackNet by Competitions Grandmaster Marios Michailidis… (blog.kaggle.com)

- Why do stacked ensemble models win data science competitions? (blogs.sas.com)

Written by Dilyan Kovachev

A curious mind with an affinity for numbers, trying to understand the world through Data Science


Bagging, Boosting, and Stacking in Machine Learning

Last updated: June 5, 2024


1. Introduction

Bagging, boosting, and stacking belong to a class of machine learning algorithms known as ensemble learning algorithms. Ensemble learning involves combining the predictions of multiple models into one to increase prediction performance.

In this tutorial, we’ll review the differences between bagging, boosting, and stacking.

2. Bagging

Bagging, also known as bootstrap aggregation, is an ensemble learning technique that combines the benefits of bootstrapping and aggregation to yield a stable model and improve the prediction performance of a machine learning model.

In bagging, we first sample equal-sized subsets of data from a dataset with bootstrapping, i.e., we sample with replacement. Then, we use those subsets to train several weak models independently. A weak model is one with low prediction accuracy. In contrast, strong models are very accurate. To get a strong model, we aggregate the predictions from all the weak models:

So, there are three steps:

- Sample equal-sized subsets with replacement

- Train weak models on each of the subsets independently and in parallel

- Combine the results from each of the weak models by averaging or voting to get a final result

The results are aggregated by averaging the results for regression tasks or by picking the majority class in classification tasks.

2.1. Algorithms That Use Bagging

The main idea behind bagging is to reduce the variance of a model’s predictions, ensuring that the model is robust and not overly influenced by specific samples in the dataset.

For this reason, bagging is mainly applied to high-variance, tree-based machine learning models such as decision trees, and it is the core idea behind random forests.

2.2. Pros and Cons of Bagging

Here’s a quick summary of bagging:

| Pros | Cons |
|---|---|
| Reduces overall variance | A high number of weak models may reduce model interpretability |
| Increases models’ robustness to noise in the data | |

2.3. Implementing Bagging (Almost) From Scratch

In this section, we’ll implement bagging. For simplicity’s sake, we’ll use scikit-learn to access a well-known learning dataset, the base learner model, and some utility functions for tasks like splitting the dataset. We’ll also use NumPy to handle the data as arrays, including randomly selecting a subset of the data to train each weak learner.

We initialize the SimpleBag with the following parameters:

- base_estimator: we use DecisionTreeClassifier by default, but any weak learner adhering to the scikit-learn interface can be used. Decision trees are known for their high variance. Still, by training multiple models on different subsets of the data and aggregating their predictions, we reduce the overall variance, because averaging many partially decorrelated predictions smooths out their individual fluctuations.

- n_estimators: the number of base estimators in the ensemble. More estimators typically improve performance up to a plateau, at the cost of more computation; adding more bagged estimators does not, by itself, increase overfitting.

- subset_size: the fraction of the training dataset used to bootstrap each weak learner. This controls the size of the subsets and is a key parameter in bagging, as it affects the diversity of the models in the ensemble.

In the fit() method, we use random sampling with replacement (np.random.choice()) to create a bootstrapped subset of the training data. Then, we clone the base estimator and train the new instance on the bootstrapped subset. Finally, we add the newly trained model to the base_learners list.

In the predict() method, we use each trained model to predict the input. Then, we aggregate the predictions to make a final prediction. In this case, we collect all predictions in an array and choose the most common one as our overall result. We use SciPy’s mode function (scipy.stats.mode) to simplify finding the most common prediction.
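The SimpleBag code itself is not reproduced here. The following is a reconstruction sketch from the description above, not the original code: it uses NumPy's Generator.choice (the modern equivalent of np.random.choice) for sampling with replacement, sklearn.base.clone for fresh learners, and scipy.stats.mode for the majority vote. The random_state argument is an addition for reproducibility.

```python
import numpy as np
from scipy import stats
from sklearn.base import clone
from sklearn.datasets import load_iris
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier


class SimpleBag:
    def __init__(self, base_estimator=None, n_estimators=10, subset_size=1.0, random_state=None):
        # Default weak learner: a decision tree (high variance, so it benefits from bagging)
        self.base_estimator = base_estimator if base_estimator is not None else DecisionTreeClassifier()
        self.n_estimators = n_estimators
        self.subset_size = subset_size
        self.rng = np.random.default_rng(random_state)

    def fit(self, X, y):
        X, y = np.asarray(X), np.asarray(y)
        n_draws = max(1, int(self.subset_size * len(X)))
        self.base_learners = []
        for _ in range(self.n_estimators):
            # Bootstrap sample: row indices drawn with replacement
            idx = self.rng.choice(len(X), size=n_draws, replace=True)
            # Fresh, unfitted copy of the base estimator trained on this bag
            self.base_learners.append(clone(self.base_estimator).fit(X[idx], y[idx]))
        return self

    def predict(self, X):
        # votes has shape (n_estimators, n_samples)
        votes = np.array([learner.predict(X) for learner in self.base_learners])
        # Majority vote down each column (keepdims requires SciPy >= 1.9)
        return stats.mode(votes, axis=0, keepdims=False).mode


X, y = load_iris(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.3, random_state=0)

stump = DecisionTreeClassifier(max_depth=1, max_features=1)
single_acc = stump.fit(X_train, y_train).score(X_test, y_test)
bag = SimpleBag(stump, n_estimators=50, random_state=0).fit(X_train, y_train)
bag_acc = np.mean(bag.predict(X_test) == y_test)
print(f"single stump: {single_acc:.2f}, SimpleBag: {bag_acc:.2f}")
```

The stump has no fixed random_state, so both accuracies can change from run to run.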

Now, using the Iris dataset, we’ll compare the performance of our completed ensemble model with that of a single decision tree.

The single DecisionTreeClassifier model, configured with max_depth=1 and max_features=1, yields an accuracy of 0.63. This is typical for such a heavily constrained tree on a small dataset like the one we’re using; moreover, decision trees are known for their high variance and tendency to overfit.

In our SimpleBag ensemble, we used multiple decision trees trained on different bags of the training data, which reduces the overall variance of our predictions. If we run the code multiple times, we will notice that our results vary significantly, ranging from matching a single weak learner to achieving perfect accuracy. This is due to the small dataset and our simplistic sampling process, but it’s enough to illustrate the bagging approach.

2.4. Using Existing Bagging Models

Instead of implementing the algorithm from scratch, we should leverage models from well-established libraries. Not only is it less work, but a library with a broad user base is likely to be of higher quality, faster, and less buggy than in-house code.

Scikit-learn is the most popular Python library for basic ML algorithms. It offers a comprehensive suite of tools and algorithm implementations, including one for bagging known as BaggingClassifier.

In this example, we replicate the setup of our custom bagging approach but utilize the BaggingClassifier provided by scikit-learn. We specify a DecisionTreeClassifier with max_depth=2 and max_features=1 as the base estimator to match our earlier experiment closely. The BaggingClassifier handles the complexities of training each base estimator on bootstrapped samples and aggregating their predictions, streamlining the process into a few lines of code.

3. Boosting

In boosting, we train a sequence of models. Each model is trained on a weighted training set. We assign weights based on the errors of the previous models in the sequence.

The main idea behind sequential training is to have each model correct the errors of its predecessor. This continues until the predefined number of trained models or some other criteria are met.

During training, instances that are classified incorrectly are assigned higher weights to give some form of priority when trained with the following model:

Additionally, weaker models are assigned lower weights than strong models when combining their predictions into the final output.

So, we first initialize data weights to the same value and then perform the following steps iteratively:

- Train a model on all instances

- Calculate the error on model output over all instances

- Assign a weight to the model (high for good performance and vice-versa)

- Update data weights: give higher weights to samples with high errors

- Repeat the previous steps until the performance is satisfactory or another stopping condition is met

Finally, we combine the models into the one we use for prediction.

3.1. Algorithms That Use Boosting

Boosting generally improves the accuracy of a machine learning model by combining many weak learners, each one improving on the errors of its predecessors. Popular boosting algorithms include XGBoost, CatBoost, and AdaBoost.

These algorithms apply different boosting techniques and are most noted for achieving excellent performance.

3.2. Pros and Cons of Boosting

Boosting has many advantages but isn’t without shortcomings:

| Pros | Cons |
|---|---|
| Improves overall accuracy | Can be computationally expensive |
| Reduces overall bias by improving on the weakness of the previous model | Sensitive to noisy data |
| | Model dependency may allow for replication of errors |

The decision to use boosting depends on how noisy the data are and on our computational capabilities.

3.3. Implementing Boosting (Almost) From Scratch

There are various boosting algorithms, all based on adjusting the training of the next learner according to the performance of the previously trained learners.

Gradient Boosting, eXtreme Gradient Boosting, and Light Gradient Boosting algorithms fit each new learner to the residual errors of the current ensemble (more precisely, to the negative gradient of the loss function), which amounts to gradient descent on the loss. However, they make different tradeoffs between speed and performance, in their use of regularization, and in their ability to deal with sparse or large amounts of data. They are usable for both classification and regression scenarios.

Categorical Boosting (CatBoost) specializes in handling categorical features. It can be used without requiring data preprocessing, such as converting the categories to one-hot encoding. It’s also designed to be resistant to overfitting.

Adaptive Boosting (AdaBoost) was initially developed for classification tasks, but it has been extended to regression problems. It uses changing sample weights to direct the training of new learners toward the training samples on which the previous learners performed poorly. We will now implement a basic adaptive boosting algorithm on top of scikit-learn models.

Because we need to pass the sample weights to the underlying models, we can only use base models whose fit() method accepts a sample_weight argument; in scikit-learn, Decision Trees, Logistic Regression, Ridge Classifier, and Support Vector Machines all support this.

In the above implementation, we start by encoding the labels using scikit-learn’s LabelEncoder, and then we proceed to train the weak learners, adjusting the weights at each step:

- At the start, we assign an equal weight to each sample. For a dataset with N samples, each sample’s initial weight is set to 1/N.

- The current weak learner is trained on the training data using the current sample weights.

- After training, we evaluate the learner’s performance, calculating its weighted error rate.

- Then, we use the weighted error rate to calculate the weight we’ll give to this learner’s predictions when making the overall prediction of our ensemble. If the learner performs no better than random guessing, we stop the training and do not add more learners to the ensemble.

- Finally, we update the weights based on the predictions of the current learner. Samples the learner incorrectly classified are given more weight, and samples that were correctly classified have their weights reduced.

To find the final prediction of our ensemble, we have to aggregate the individual predictions of the weak learners, but we have to weigh the votes and not simply count as we did in the bagging example:

- We make predictions using all the weak learners and store the results in a NumPy array.

- Then, we calculate the overall prediction. We start by creating an array with N×C dimensions, where N is the number of entries we’re classifying and C is the number of possible categories. For each sample, we add the learner’s weight to the category it predicted.

- We choose the class that receives the highest total weight across all learners as the final prediction for the input.
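The boosting implementation is not shown either. Below is a reconstruction sketch of the steps above using the SAMME variant of AdaBoost: learner weight log((1 - err)/err) + log(C - 1), and training stops when the weighted error reaches 1 - 1/C, the random-guessing level for C classes. The class name SimpleBoost and these exact formulas are assumptions; the article only describes the procedure.

```python
import numpy as np
from sklearn.base import clone
from sklearn.datasets import load_iris
from sklearn.model_selection import train_test_split
from sklearn.preprocessing import LabelEncoder
from sklearn.tree import DecisionTreeClassifier


class SimpleBoost:
    """Hypothetical SAMME-style boosting; the base model's fit() must accept sample_weight."""

    def __init__(self, base_estimator=None, n_estimators=50):
        self.base_estimator = (base_estimator if base_estimator is not None
                               else DecisionTreeClassifier(max_depth=1))
        self.n_estimators = n_estimators

    def fit(self, X, y):
        self.encoder_ = LabelEncoder()
        y_enc = self.encoder_.fit_transform(y)           # labels become 0..C-1
        n_classes = len(self.encoder_.classes_)
        weights = np.full(len(y_enc), 1.0 / len(y_enc))  # every sample starts at 1/N
        self.learners_, self.alphas_ = [], []
        for _ in range(self.n_estimators):
            learner = clone(self.base_estimator).fit(X, y_enc, sample_weight=weights)
            wrong = learner.predict(X) != y_enc
            error = weights[wrong].sum() / weights.sum()  # weighted error rate
            if error >= 1.0 - 1.0 / n_classes:            # no better than random guessing: stop
                break
            error = max(error, 1e-10)                     # guard against log(0) for a perfect fit
            alpha = np.log((1.0 - error) / error) + np.log(n_classes - 1)
            self.learners_.append(learner)
            self.alphas_.append(alpha)
            # Up-weight misclassified samples; after renormalizing, the correctly
            # classified ones end up with relatively smaller weights
            weights = weights * np.exp(alpha * wrong)
            weights /= weights.sum()
        return self

    def predict(self, X):
        preds = np.array([m.predict(X) for m in self.learners_])         # (n_learners, N)
        votes = np.zeros((preds.shape[1], len(self.encoder_.classes_)))  # N x C table
        rows = np.arange(preds.shape[1])
        for alpha, p in zip(self.alphas_, preds):
            votes[rows, p] += alpha  # add the learner's weight to its predicted class
        return self.encoder_.inverse_transform(votes.argmax(axis=1))


X, y = load_iris(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.3, random_state=0)
stump_acc = DecisionTreeClassifier(max_depth=1).fit(X_train, y_train).score(X_test, y_test)
boost = SimpleBoost(n_estimators=50).fit(X_train, y_train)
boost_acc = np.mean(boost.predict(X_test) == y_test)
print(f"single stump: {stump_acc:.2f}, SimpleBoost: {boost_acc:.2f}")
```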

When comparing our ensemble to a single weak learner, we can see the improved performance. Another interesting point is that the performance is more stable across multiple runs than with our simple bagging implementation:

The base learner achieves an accuracy ranging from 0.43 to 0.63, while our simple boosting ensemble achieves a consistent accuracy of 0.93. This consistency contrasts sharply with the variability of our bagging implementation; in simple terms, our boosting implementation doesn’t use random sampling of the training data when training the model.

3.4. Using Existing Boosting Models

As with bagging, it’s almost always better to use a library implementing these algorithms. Scikit-learn includes implementations of different boosting strategies: AdaBoostClassifier, AdaBoostRegressor, GradientBoostingClassifier, and GradientBoostingRegressor.

Since our simple implementation is a simplified Adaptive Boosting algorithm, we can compare it to scikit-learn’s AdaBoostClassifier.

Running it repeatedly, we obtain a stable 1.0 accuracy. Besides the higher accuracy, we also get an extensively tested model and more options that we can use to tweak how we train our model.

4. Stacking

In stacking, the predictions of base models are fed as input to a meta-model (or meta-learner). The job of the meta-model is to take the predictions of the base models and make a final prediction:

The base and meta-models don’t have to be of the same type. For example, we can pair a decision tree with a support vector machine (SVM).

Here are the steps:

- Construct base models on different portions of the training data

- Train a meta-model on the predictions from the base models

4.1. Pros and Cons of Stacking

We can summarize stacking as follows:

| Pros | Cons |
|---|---|
| Combines the benefits of different models into one | May take a longer time to train and aggregate the predictions of different types of models |
| Increases overall accuracy | Training several base models and a meta-model increases complexity |

4.2. Implementing Stacking (Almost) From Scratch

As before, we can use the base models in scikit-learn to write a simple stacking implementation:

Similarly to the previous ensembles, we train a set of base learners. In this case, we require the user of our class to pass a list of instantiated base learners. We train each learner independently, as we did in bagging, but here we use all the data to train each model. The main difference from before is that we use the prediction of each base learner as a feature to train a meta-learner.

In this approach, each base learner and the meta-learner can use different algorithms; for example, we can use a Decision Tree and Logistic Regression as base models and a Support Vector Machine as the meta-learner.

Our simple stacking ensemble achieves 1.0 accuracy on the Iris dataset, but it has weaknesses that will limit its performance on more complex problems. Since we’re training the meta-learner directly on the base learners’ predictions for the same data they were trained on, we might end up overfitting the training data. A common way to address this problem is to generate the meta-learner’s training inputs with cross-validated predictions (cross_val_predict), so that no base learner predicts on data it was trained on.

Another issue is that we used the final class predictions from the base learners; this drops uncertainty information because it treats the base learners as certain about their predictions. This is easier to fix: we use predict_proba(), which assigns a probability to each possible category, and feed these probabilities to the meta-learner.
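A from-scratch stacker that includes both fixes (cross-validated meta-features and predict_proba inputs) could look like this. It is a hedged sketch: the class name SimpleStacking is hypothetical, and the learners mirror the example above (a Decision Tree and Logistic Regression as base models, an SVM as the meta-learner).

```python
import numpy as np
from sklearn.base import clone
from sklearn.datasets import load_iris
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_predict, train_test_split
from sklearn.svm import SVC
from sklearn.tree import DecisionTreeClassifier


class SimpleStacking:
    """Hypothetical from-scratch stacker with cross-validated, probabilistic meta-features."""

    def __init__(self, base_learners, meta_learner, cv=5):
        self.base_learners = base_learners
        self.meta_learner = meta_learner
        self.cv = cv

    def fit(self, X, y):
        # Fix 1: out-of-fold predictions, so no base learner scores rows it was trained on.
        # Fix 2: method="predict_proba" keeps each learner's uncertainty.
        meta_X = np.hstack([
            cross_val_predict(clone(m), X, y, cv=self.cv, method="predict_proba")
            for m in self.base_learners])
        self.meta_ = clone(self.meta_learner).fit(meta_X, y)
        # Refit every base learner on all the data for use at prediction time
        self.fitted_base_ = [clone(m).fit(X, y) for m in self.base_learners]
        return self

    def meta_features(self, X):
        # Concatenated class probabilities from the refitted base learners
        return np.hstack([m.predict_proba(X) for m in self.fitted_base_])

    def predict(self, X):
        return self.meta_.predict(self.meta_features(X))


X, y = load_iris(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.3, random_state=0)

stacker = SimpleStacking(
    base_learners=[DecisionTreeClassifier(max_depth=3, random_state=0),
                   LogisticRegression(max_iter=1000)],
    meta_learner=SVC(),
).fit(X_train, y_train)
accuracy = np.mean(stacker.predict(X_test) == y_test)
print("SimpleStacking accuracy:", accuracy)
```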

We can see how adding these improvements would gradually complicate our code, which is why we should favor using the models from a library in practice.

4.3. Stacking With SciKit-Learn

Using the StackingClassifier is as simple as using any of the other ensembles: we first instantiate the base and meta-learners, since the stacking class takes them as arguments.

Not only is this classifier more sophisticated than our straightforward approach, but it’s also flexible. We can use its constructor arguments to choose whether the base learners pass prediction probabilities or class labels to the meta-learner. On top of that, we can also use the raw input features, feeding them to the meta-learner alongside the results of the base learners.
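A minimal StackingClassifier sketch on Iris, assuming the same base and meta-learners as the from-scratch example (a Decision Tree and Logistic Regression as base models, an SVM as the meta-learner). In scikit-learn, `stack_method` selects probabilities versus class labels, and `passthrough=True` feeds the raw features to the meta-learner alongside the base learners' outputs.

```python
from sklearn.datasets import load_iris
from sklearn.ensemble import StackingClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split
from sklearn.svm import SVC
from sklearn.tree import DecisionTreeClassifier

X, y = load_iris(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.3, random_state=0)

base_learners = [
    ("tree", DecisionTreeClassifier(max_depth=3, random_state=0)),
    ("logreg", LogisticRegression(max_iter=1000)),
]
stack = StackingClassifier(
    estimators=base_learners,
    final_estimator=SVC(),
    cv=5,                          # meta-learner is trained on out-of-fold predictions
    stack_method="predict_proba",  # pass probabilities rather than hard class labels
    passthrough=True,              # also feed the raw features to the meta-learner
)
stack.fit(X_train, y_train)
print("StackingClassifier accuracy:", stack.score(X_test, y_test))
```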

5. Differences Between Bagging, Boosting, and Stacking

The main differences between bagging, boosting, and stacking are in the approach, base models, subset selection, goals, and model combination:

| Criteria | Bagging | Boosting | Stacking |
|---|---|---|---|
| Approach | Parallel training of weak models | Sequential training of weak models | Aggregates the predictions of multiple models into a meta-model |
| Base Models | Homogeneous | Homogeneous | Can be heterogeneous |
| Subset Selection | Random sampling with replacement | Subsets are not required | Subsets are not required |
| Goal | Reduce variance | Reduce bias | Reduce variance and bias |
| Model Combination | Majority voting or averaging | Weighted majority voting or averaging | Using an ML model |

The selection of the technique to use depends on the overall objective and task at hand. Bagging is best when the goal is to reduce variance, whereas boosting is the choice for reducing bias. If the goal is to reduce variance and bias and improve overall performance, we should use stacking.

6. Other Libraries and Implementations

Scikit-learn is the most popular library that implements foundational machine learning models in Python; besides those foundational models, it also implements several ensemble models, including bagging, different boosting strategies, and stacking.

Other popular Python libraries, like XGBoost, LightGBM, or CatBoost, focus on gradient boosting models and do not have a stand-alone implementation of bagging models. However, they all include parameters to control subsampling when training weak learners, adding the core benefit of bagging to boosting algorithms.

H2O is a general-purpose platform that implements multiple algorithms on the JVM. Besides its own interfaces for training and using models and a native integration with Spark, it provides a REST API that can be accessed from Python, R, or Spark. Although it does not have a general bagging model, it implements Random Forest. It also offers subsampling control for other ensemble models.

Weka is another Java ML library that is mainly used for academic purposes. The project was started at the University of Waikato.

7. Conclusions

In this article, we provided an overview of bagging, boosting, and stacking. Bagging trains multiple weak models in parallel. Boosting trains multiple homogenous weak models in sequence, with each successor improving on its predecessor’s errors. Stacking trains multiple models (that can be heterogeneous) to obtain a meta-model.

The choice of ensemble technique depends on the goal and task, as all three techniques aim to improve the overall performance by combining the power of multiple models.


Stacking in Machine Learning

Stacking: Stacking is a way of ensembling classification or regression models that consists of two layers of estimators. The first layer consists of all the baseline models, which are used to predict the outputs on the test datasets. The second layer consists of a meta-classifier or meta-regressor, which takes all the predictions of the baseline models as input and generates new predictions.

Stacking Architecture

mlxtend: Mlxtend (machine learning extensions) is a Python library of useful tools for day-to-day data science tasks. It provides many tools that are useful for data science and machine learning tasks, for example:

- Feature Selection

- Feature Extraction

- Visualization

and many more. This article explains how to implement Stacking Classifier on the classification dataset. Why Stacking? Most of the Machine-Learning and Data science competitions are won by using Stacked models. They can improve the existing accuracy that is shown by individual models. We can get most of the Stacked models by choosing diverse algorithms in the first layer of architecture as different algorithms capture different trends in training data by combining both of the models can give better and accurate results. Installation of libraries on the system:

pip install mlxtend

pip install pandas

pip install -U scikit-learn

Code: Import Required Libraries:

Code: Loading the dataset


Code: Splitting Data into Train and Test

Code: Standardizing Data

Code: Building First Layer Estimators

Let's train and evaluate the first-layer estimators on their own, so that we can compare the performance of the individual models with that of the stacked model.

Code: Training KNeighborsClassifier

Code: Evaluation of KNeighborsClassifier

Code: Training Naive Bayes Classifier

Code: Evaluation of Naive Bayes Classifier
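The code for the steps above (loading, splitting, standardizing, and training and evaluating the two first-layer estimators) is not reproduced here. A minimal sketch of those steps with scikit-learn follows. It assumes scikit-learn's bundled breast-cancer dataset as a stand-in for the article's dataset and Gaussian Naive Bayes as the Naive Bayes variant, so its accuracies will differ from the article's.

```python
from sklearn.datasets import load_breast_cancer
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split
from sklearn.naive_bayes import GaussianNB
from sklearn.neighbors import KNeighborsClassifier
from sklearn.preprocessing import StandardScaler

# Assumption: stand-in dataset (the article's own dataset is not shown).
X, y = load_breast_cancer(return_X_y=True)

# Split into train and test sets, keeping the class ratio in both.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=42, stratify=y
)

# Standardize: learn mean/std on the training set only, then apply to both sets.
scaler = StandardScaler()
X_train = scaler.fit_transform(X_train)
X_test = scaler.transform(X_test)

# First-layer estimators, trained and evaluated individually as baselines.
baselines = {
    "knn": KNeighborsClassifier(n_neighbors=5),
    "naive_bayes": GaussianNB(),
}
baseline_scores = {}
for name, model in baselines.items():
    model.fit(X_train, y_train)
    baseline_scores[name] = accuracy_score(y_test, model.predict(X_test))
    print(f"{name}: accuracy {baseline_scores[name]:.3f}")
```

Fitting the scaler on the training split only keeps test-set statistics out of the preprocessing.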

Code: Implementing Stacking Classifier

- use_probas=True means the stacking classifier uses the predicted probabilities as input instead of the predicted class labels.

- use_features_in_secondary=True means the stacking classifier takes not only the predictions as input but also the original features of the dataset when predicting on new data.
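For readers who prefer to stay within scikit-learn, its own `StackingClassifier` offers close analogues of these two options: `stack_method="predict_proba"` passes class probabilities to the meta-model (like `use_probas=True`), and `passthrough=True` also passes the original features (like `use_features_in_secondary=True`). This sketch is not the mlxtend code from the article. It assumes scikit-learn's bundled breast-cancer dataset as a stand-in and logistic regression as the meta-classifier, a hypothetical choice since the article's meta-classifier is not shown. Note that scikit-learn trains the meta-model on cross-validated base-model predictions (the `cv` parameter).

```python
from sklearn.datasets import load_breast_cancer
from sklearn.ensemble import StackingClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split
from sklearn.naive_bayes import GaussianNB
from sklearn.neighbors import KNeighborsClassifier
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

X, y = load_breast_cancer(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=42, stratify=y
)

stack = make_pipeline(
    StandardScaler(),
    StackingClassifier(
        estimators=[("knn", KNeighborsClassifier(n_neighbors=5)), ("nb", GaussianNB())],
        final_estimator=LogisticRegression(max_iter=1000),  # hypothetical meta-classifier
        stack_method="predict_proba",  # analogue of use_probas=True
        passthrough=True,              # analogue of use_features_in_secondary=True
        cv=5,                          # meta-model sees out-of-fold predictions
    ),
)
stack.fit(X_train, y_train)
stack_accuracy = accuracy_score(y_test, stack.predict(X_test))
print(f"Stacked accuracy: {stack_accuracy:.3f}")
```

Putting `StandardScaler` ahead of the stack in the pipeline means the passthrough features reach the meta-model already scaled.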

Code: Training Stacking Classifier

Code: Evaluating Stacking Classifier

Both individual models score an accuracy of nearly 80%, while our stacked model reaches an accuracy of nearly 84%. By combining the two individual models, we got a significant performance improvement.



Stacking in Machine Learning


"When there is Unity, there is always victory"

Ensemble modeling is a powerful way to improve the performance of your model. It is a machine learning paradigm in which multiple models are trained to solve the same problem and combined to get better results. It is also the art of combining a diverse set of learners to improve the stability and predictive power of the model.

The most common types of ensemble methods are:

- Bagging

- Boosting

- Stacking

In this article, we will focus on Stacking. By the end of this article, you will know about:

- What is Stacking?

- The general architecture of Stacking

- Steps to implement Stacking

- Basic code implementation using Scikit-Learn

- How Stacking differs from Bagging and Boosting

Stacking (a.k.a. Stacked Generalization) is an ensemble technique that uses meta-learning to generate predictions. It can harness the capabilities of both well-performing and weakly performing models on a classification or regression task, and it can often make predictions that perform better than any single model in the ensemble.

It is an extension of the model averaging ensemble technique, in which multiple sub-models contribute equally, or according to performance-based weights, to a combined prediction. In stacking, an entirely new model is trained to combine the contributions of the sub-models and produce the best predictions. This final model is said to be stacked on top of the others, hence the name.

The Architecture of Stacking:

- Original Data - The original data is split into n folds

- Base Models - Level 1 individual Models

- Level 1 Predictions - Predictions generated by base models on original data

- Level 2 Model - Meta-Learner, the model which combines the Level 1 predictions to generate best final Predictions

Stacking can have more than one level of base learners.
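A sketch of a stack with an extra level, using scikit-learn, where a `StackingClassifier` can itself serve as the `final_estimator` of another one. The dataset (scikit-learn's bundled breast-cancer data) and the learners at each level are illustrative assumptions.

```python
from sklearn.datasets import load_breast_cancer
from sklearn.ensemble import RandomForestClassifier, StackingClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split
from sklearn.naive_bayes import GaussianNB
from sklearn.neighbors import KNeighborsClassifier
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.tree import DecisionTreeClassifier

X, y = load_breast_cancer(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=0, stratify=y
)

# Upper layer: learners trained on the level-1 predictions,
# combined in turn by a logistic-regression meta-learner.
upper_layer = StackingClassifier(
    estimators=[
        ("lr", LogisticRegression(max_iter=1000)),
        ("tree", DecisionTreeClassifier(max_depth=3, random_state=0)),
    ],
    final_estimator=LogisticRegression(max_iter=1000),
)

# Level-1 base learners; their out-of-fold predictions feed the upper layer.
multi_level = make_pipeline(
    StandardScaler(),
    StackingClassifier(
        estimators=[
            ("knn", KNeighborsClassifier()),
            ("nb", GaussianNB()),
            ("rf", RandomForestClassifier(n_estimators=50, random_state=0)),
        ],
        final_estimator=upper_layer,
    ),
)
multi_level.fit(X_train, y_train)
multi_level_accuracy = multi_level.score(X_test, y_test)
print(f"Multi-level stack accuracy: {multi_level_accuracy:.3f}")
```

Each extra level adds its own round of internal cross-validation, so training cost grows quickly with depth.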

Steps of Implementation

The following steps are involved in implementation:

- The Original Train data is split into n-folds using the RepeatedStratifiedKFold.

- Then the base learner (Model 1) is fitted on the first n-1 folds and predictions are made for the nth part.

- This prediction is added to the x1_train list.

- Steps 2 & 3 are repeated for the rest of the n-1 parts and we obtain x1_train array of size n

where x1_train[i] is the prediction on the (i+1)th part when Model 1 is fitted on parts 1, ..., i, i+2, ..., n

- Now, train the model on all the n parts and make predictions for test data. Store this prediction in y1_test.

- Similarly, we obtain x2_train, y2_test, x3_train and y3_test by training Model 2 and Model 3 respectively, which gives the remaining Level 1 predictions.

- Now we train a Meta Learner on Level 1 Predictions (using these predictions as features for the model).

- The Meta learner is now used to make predictions on test data.
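The steps above can be sketched in a few lines of scikit-learn. Column j of `x_train_meta` plays the role of x1_train, x2_train, x3_train, and column j of `x_test_meta` the role of y1_test, y2_test, y3_test. Two deliberate deviations, both assumptions: a plain `StratifiedKFold` is used instead of `RepeatedStratifiedKFold`, because repeated splits would give each training row more than one out-of-fold prediction; and each base model contributes its predicted probability of the positive class rather than a hard label. The dataset and models are stand-ins.

```python
import numpy as np
from sklearn.base import clone
from sklearn.datasets import load_breast_cancer
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import accuracy_score
from sklearn.model_selection import StratifiedKFold, train_test_split
from sklearn.naive_bayes import GaussianNB
from sklearn.neighbors import KNeighborsClassifier
from sklearn.tree import DecisionTreeClassifier

X, y = load_breast_cancer(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=1, stratify=y
)

base_models = [  # Model 1, Model 2, Model 3
    GaussianNB(),
    KNeighborsClassifier(),
    DecisionTreeClassifier(max_depth=4, random_state=1),
]
folds = StratifiedKFold(n_splits=5, shuffle=True, random_state=1)

# Column j: out-of-fold train predictions (x1_train, ...) and test predictions (y1_test, ...).
x_train_meta = np.zeros((len(X_train), len(base_models)))
x_test_meta = np.zeros((len(X_test), len(base_models)))

for j, model in enumerate(base_models):
    for fit_idx, holdout_idx in folds.split(X_train, y_train):
        # Fit on n-1 folds and predict the held-out fold.
        fold_model = clone(model).fit(X_train[fit_idx], y_train[fit_idx])
        x_train_meta[holdout_idx, j] = fold_model.predict_proba(X_train[holdout_idx])[:, 1]
    # Refit on all n folds and predict the test data.
    full_model = clone(model).fit(X_train, y_train)
    x_test_meta[:, j] = full_model.predict_proba(X_test)[:, 1]

# Meta-learner trained on the level-1 predictions, then applied to the test data.
meta_learner = LogisticRegression().fit(x_train_meta, y_train)
meta_accuracy = accuracy_score(y_test, meta_learner.predict(x_test_meta))
print(f"Meta-learner accuracy: {meta_accuracy:.3f}")
```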

Code Implementation

It can be seen that the stacked ensemble model gives the highest accuracy among all the models.
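The original code for this comparison is not shown. One way to run such a comparison is sketched here with scikit-learn and repeated stratified k-fold cross-validation. The dataset and the four base models are assumptions, and whether the stack actually comes out on top depends on the data.

```python
from sklearn.datasets import load_breast_cancer
from sklearn.ensemble import StackingClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import RepeatedStratifiedKFold, cross_val_score
from sklearn.naive_bayes import GaussianNB
from sklearn.neighbors import KNeighborsClassifier
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.tree import DecisionTreeClassifier

X, y = load_breast_cancer(return_X_y=True)

# Hypothetical level-1 models (scaled where the algorithm is scale-sensitive).
level1 = [
    ("lr", make_pipeline(StandardScaler(), LogisticRegression(max_iter=1000))),
    ("knn", make_pipeline(StandardScaler(), KNeighborsClassifier())),
    ("tree", DecisionTreeClassifier(random_state=1)),
    ("nb", GaussianNB()),
]
models = dict(level1)
models["stacking"] = StackingClassifier(
    estimators=level1, final_estimator=LogisticRegression(max_iter=1000), cv=5
)

# Evaluate every model with the same repeated stratified k-fold splits.
cv = RepeatedStratifiedKFold(n_splits=5, n_repeats=2, random_state=1)
results = {}
for name, model in models.items():
    scores = cross_val_score(model, X, y, scoring="accuracy", cv=cv)
    results[name] = (scores.mean(), scores.std())
    print(f"{name:>8}: {scores.mean():.3f} (+/- {scores.std():.3f})")
```

Using the same `cv` object for every model makes the comparison fair, since all models are scored on identical splits.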

Difference between Bagging, Boosting and Stacking

Now, let's see how stacking differs from bagging and boosting.

Basic Definition

- Bagging: Generally, it is used to reduce the variance of a model. Here, we create several randomly selected, overlapping subsets of data from the training sample (bootstrap samples). Each subset is used to train a model, and in this way we get an ensemble of different models.

- Boosting: Boosting uses multiple predictors. It is a sequential ensemble method that builds strong predictive models by decreasing bias error. In this technique, data samples are weighted so that each upcoming model focuses more on the important (generally misclassified) data points. During training, weights are also allocated to the models, so models that perform better have higher weights.

- Stacking: As with boosting, we apply several models to the original data. The difference is that we don't allocate fixed weights; instead, we introduce a meta level, i.e., another model that takes the outputs of every base model (optionally together with the original input) and learns how to weight and combine them.

Working Principle

- Bagging: Here we build several models independently and then average their predictions to get the final predictions.

- Boosting: In boosting, we develop models sequentially and try to reduce bias with each iteration.

- Stacking: The predictions of the base learners are used as features to obtain the final prediction from the meta-learner, which is stacked on top of all the base learners.
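To see the three approaches side by side, here is a minimal scikit-learn sketch. It assumes the bundled breast-cancer dataset and scikit-learn's default decision trees as the bagged and boosted learners, with AdaBoost as the boosting example (gradient boosting would work similarly). The stacked learners are a hypothetical heterogeneous mix.

```python
from sklearn.datasets import load_breast_cancer
from sklearn.ensemble import AdaBoostClassifier, BaggingClassifier, StackingClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_score
from sklearn.naive_bayes import GaussianNB
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.tree import DecisionTreeClassifier

X, y = load_breast_cancer(return_X_y=True)

ensembles = {
    # Bagging: full decision trees on bootstrap samples, built independently and averaged.
    "bagging": BaggingClassifier(n_estimators=50, random_state=0),
    # Boosting: decision stumps built sequentially, each on reweighted samples.
    "boosting": AdaBoostClassifier(n_estimators=50, random_state=0),
    # Stacking: heterogeneous base learners combined by a logistic-regression meta-learner.
    "stacking": StackingClassifier(
        estimators=[
            ("tree", DecisionTreeClassifier(max_depth=4, random_state=0)),
            ("nb", GaussianNB()),
            ("lr", make_pipeline(StandardScaler(), LogisticRegression(max_iter=1000))),
        ],
        final_estimator=LogisticRegression(max_iter=1000),
    ),
}

cv_accuracy = {
    name: cross_val_score(model, X, y, cv=5).mean() for name, model in ensembles.items()
}
for name, acc in cv_accuracy.items():
    print(f"{name:>8}: {acc:.3f}")
```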

| | Bagging | Boosting | Stacking |
|---|---|---|---|
| Splitting of data | random | weighted preference | n-folds |
| Target | reduce variance | reduce bias | reduce bias and variance |
| Implemented in... | random subspace | gradient boosting | blending |
| Method to combine predictions | weighted average | weighted majority vote | logistic regression |

As a final check of your learning, try to answer the following question:

TRUE or FALSE

The meta learner is used to extract features from the original data.

Stacking has proved to be highly effective in Kaggle competitions. So next time you participate in a competition, you will have a powerful weapon in your hand ;)

Wanna dig deeper? Look at the given links:

- Kaggle->Ensemble Learning: Bagging, Boosting & Stacking


Simple Model Stacking, Explained And Automated

November 2, 2021 by Jen Wadkins

An overview of Model Stacking

In model stacking, we don’t use one single model to make our predictions — instead, we make predictions with several different models, and then use those predictions as features for a higher-level meta model. It can work especially well with varied types of lower-level learners, all contributing different strengths to the meta model. Model stacks can be built in many ways, and there isn’t one “correct” way to use stacking. It can be made more complex than today’s example with multiple levels, weights, averaging, etc. The basic model stack we will make today looks like this:

In our stack, we will make non-leaky predictions on our train data using a series of intermediary models, and then use those as features in conjunction with the original training features on a meta model.

If this sounds complicated, don’t be deterred. I’ll show you a painless way to automate this process including selecting the best meta model, selecting the best stack models, putting all of that data together, and making the final predictions on your test data.

Today we are working with a Regression problem using the King County Housing dataset located on Kaggle.


Starting Positions

Sanity check: This article doesn’t cover any preprocessing or model tuning. Your dataset should be clean and ready to use, and your potential models should be hyperparameter-tuned if desired. You should also have your data split into a train/test set or a train/validate/test set. This writeup also assumes basic familiarity with cross-validation.

We start by instantiating our tuned potential models with any optional hyperparameters. The key to a fun and successful stack is the more the merrier — try as many models as you want! You can save time if you run a cross-validated spot check beforehand with all of your potential models, to avoid models that are clearly not informative. But you might be surprised how much a poor base model can contribute to a stack. Just keep in mind that every potential model you add to the possibilities will take time. But there is NO limit to the number of models that can be tried in your stack, and the key to our tutorial is that we will ultimately use only the best ones, without having to select anything manually.

For our example I’ll instantiate only five potential models. Here we go:

Next, we’re going to create empty lists to store each potential’s training set predictions (which we’ll obtain in a non-leaky fashion). You’ll need a list for each potential model, and a consistent naming convention will help stay organized.

Finally, we’re putting the instantiated models and their empty prediction lists into a storage dictionary, which we’ll be using with our various stack functions. The format of each dictionary entry is “label” : [model instance, prediction list], like so:

Sanity check: Prepare your train/test sets in array format for the following functions. Your X features should be an array of shape (n, m), where n is the number of samples and m is the number of features, and your y targets should be an array of shape (n,). If they are DataFrames, convert them with np.array(df).
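The code for these setup steps is not shown. A minimal sketch of what they might look like, assuming a synthetic regression dataset from `make_regression` in place of the King County data and five hypothetical scikit-learn models (the article's five are not named here):

```python
import numpy as np
from sklearn.datasets import make_regression
from sklearn.ensemble import RandomForestRegressor
from sklearn.linear_model import LinearRegression, Ridge
from sklearn.model_selection import train_test_split
from sklearn.neighbors import KNeighborsRegressor
from sklearn.tree import DecisionTreeRegressor

# Hypothetical stand-in for the cleaned King County features and prices.
X, y = make_regression(n_samples=500, n_features=8, noise=20.0, random_state=0)
x_train, x_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=0)

# Five hypothetical (already tuned) potential models.
lr = LinearRegression()
ridge = Ridge(alpha=1.0)
tree = DecisionTreeRegressor(max_depth=6, random_state=0)
forest = RandomForestRegressor(n_estimators=100, random_state=0)
knn = KNeighborsRegressor(n_neighbors=7)

# One empty list per potential model for its out-of-fold train predictions.
lr_yhat, ridge_yhat, tree_yhat, forest_yhat, knn_yhat = [], [], [], [], []

# Storage dictionary in the format "label": [model instance, prediction list].
models = {
    "lr": [lr, lr_yhat],
    "ridge": [ridge, ridge_yhat],
    "tree": [tree, tree_yhat],
    "forest": [forest, forest_yhat],
    "knn": [knn, knn_yhat],
}

# Array format: X of shape (n, m), y of shape (n,); np.array(df) works for DataFrames too.
x_train, x_test = np.array(x_train), np.array(x_test)
y_train, y_test = np.array(y_train).ravel(), np.array(y_test).ravel()
```

Because each dictionary entry holds a reference to its list, anything that appends to `models["ridge"][1]` also fills `ridge_yhat`.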

Getting Out-Of-Fold Predictions

What does it mean to have an out-of-fold or out-of-sample prediction? In model stacking, we use predictions made on the train data itself in order to train the meta model. The way to properly include these predictions is to divide our train data into folds, just like with cross-validation, and to predict each fold with a model trained on the remaining folds. In this way we will have a full set of predictions for our train data but without any data leakage, which is what would occur were we to simply train and then predict on the same set.

Here is our first function, for getting our out-of-fold predictions. This function was adapted from the great out-of-fold predictions tutorial at Machine Learning Mastery:

Now we’re ready to get the out-of-fold predictions, using the model dictionary that we made earlier. The function defaults to verbose and will give status updates about its progress. Keep in mind that getting OOF predictions can take a long time if you have a large dataset or a lot of models to try in your stack!

Run the out-of-fold predictions function:

Sanity check: Check for consistent output from this out-of-fold function. All of the yhats in the dictionaries should come back as plain lists of numbers, without any arrays.

We now have a data_x and data_y, which are the same data as our x_train and y_train, but re-ordered to match the order of the potentials’ yhat predictions. Our returned trained_models dictionary has yhat predictions for the entire train set, for each potential model.
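The function itself is not reproduced here. Below is a minimal sketch of an out-of-fold prediction function in the same spirit. The name `get_oof_predictions`, its parameters, the shuffled 5-fold `KFold` split, and the stand-in data and models are all assumptions, not the author's code. It returns the re-ordered `data_x`/`data_y` along with the dictionary whose lists now hold the out-of-fold predictions.

```python
import numpy as np
from sklearn.base import clone
from sklearn.datasets import make_regression
from sklearn.linear_model import Ridge
from sklearn.model_selection import KFold
from sklearn.neighbors import KNeighborsRegressor
from sklearn.tree import DecisionTreeRegressor


def get_oof_predictions(x, y, models, n_splits=5, verbose=True):
    """Fill each model's prediction list with out-of-fold predictions.

    Returns data_x and data_y re-ordered to match the prediction order,
    plus the models dictionary ("label": [model, yhat list]).
    """
    data_x, data_y = [], []
    kfold = KFold(n_splits=n_splits, shuffle=True, random_state=1)
    for fold, (fit_idx, oof_idx) in enumerate(kfold.split(x), start=1):
        if verbose:
            print(f"Fold {fold}/{n_splits}")
        # Held-out rows are collected in the same order as their predictions.
        data_x.extend(x[oof_idx])
        data_y.extend(y[oof_idx])
        for label, (model, yhat) in models.items():
            fold_model = clone(model).fit(x[fit_idx], y[fit_idx])
            # Store plain floats so every yhat stays a flat list of numbers.
            yhat.extend(float(p) for p in np.ravel(fold_model.predict(x[oof_idx])))
            if verbose:
                print(f"  {label}: {len(yhat)} predictions so far")
    return np.array(data_x), np.array(data_y), models


# Usage with stand-in data and three hypothetical models.
X, y = make_regression(n_samples=300, n_features=6, noise=15.0, random_state=0)
models = {
    "ridge": [Ridge(), []],
    "tree": [DecisionTreeRegressor(max_depth=5, random_state=0), []],
    "knn": [KNeighborsRegressor(), []],
}
data_x, data_y, trained_models = get_oof_predictions(X, y, models, verbose=False)
```

Cloning each model per fold keeps the instances in the dictionary unfitted, so they can still be fitted on the full training set later.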

Running the Stack Selector

Time for the Stack Selector. Here’s our next function. This one was based on the feature selection forward-backward selector written by David Dale here:

Sanity check: My function is scoring on mean absolute error, but you can edit this for whichever score metric you most prefer, such as R2 or RMSE.

We will ultimately run this function with each of our potential models as the function’s meta model. When we run the function we send it the data_x and data_y that we got from our out-of-fold function, as well as a single instantiated model to try as the meta model, and our dictionary with all of the out-of-fold predictions. The function then runs forward selection, using the out-of-fold predictions as features.

For the model that is being tried as the meta model, we get a baseline score (using CV) on the train set. For each other potential model, we iteratively append the potential’s yhat predictions to the feature set and re-score the meta model using that additional feature. If our meta model score improves with the addition of any feature, the single best scoring potential’s predictions are permanently appended to the feature set and the improved score becomes the baseline. The function then loops, once more trying the addition of each potential that isn’t already in the stack, until no potential additions improve the score. The function then reports the optimal included models for this meta model, and the best score achieved.

Sanity check: This model selector is written to optimize on a Train set using CV. If you are fortunate enough to have a Validation set, you could rewrite and perform the selection on that. It will be much faster — but watch out for overfitting!

How do we pick our meta model for this task? Here comes the fun part — we’re going to try ALL of our models as the meta model. Keep in mind that this function may take a LONG time to run. Keeping verbose set to True will give frequent progress reports.

We make a dictionary to store all of the scores that we’ll get from our testing. Then we run the stack selector on each of our trained models, using that model as the meta model:

Afterward we’ll have the scores of how each model performs as the meta model, along with its best stacked additions. We turn that dictionary into a dataframe and sort our results on our score metric:
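Neither the selector function nor the scoring loop is reproduced here. Below is a compact sketch of the forward selection described above, scoring on cross-validated MAE, followed by a loop that tries each model as the meta model. Several details are assumptions: the function names (`cv_mae`, `stack_selector`), the `exclude` parameter (which skips the meta model's own predictions, following "each other potential model"), the stand-in data and models, and the use of `cross_val_predict` to produce the out-of-fold predictions. Results are sorted in a plain list rather than a pandas DataFrame to keep the sketch dependency-light.

```python
import numpy as np
from sklearn.base import clone
from sklearn.datasets import make_regression
from sklearn.ensemble import RandomForestRegressor
from sklearn.linear_model import Ridge
from sklearn.model_selection import KFold, cross_val_predict, cross_val_score
from sklearn.neighbors import KNeighborsRegressor
from sklearn.tree import DecisionTreeRegressor


def cv_mae(model, features, target, cv=5):
    """Cross-validated mean absolute error on the train set (lower is better)."""
    scores = cross_val_score(clone(model), features, target, cv=cv,
                             scoring="neg_mean_absolute_error")
    return -scores.mean()


def stack_selector(meta_model, data_x, data_y, trained_models, exclude=(), verbose=True):
    """Greedy forward selection of stacked models for one candidate meta model."""
    best_score = cv_mae(meta_model, data_x, data_y)  # baseline: original features only
    features, included = data_x, []
    while True:
        # Score the meta model with each not-yet-included model's OOF predictions added.
        trials = {
            label: cv_mae(meta_model, np.column_stack([features, yhat]), data_y)
            for label, (_, yhat) in trained_models.items()
            if label not in included and label not in exclude
        }
        if not trials:
            break
        best_label = min(trials, key=trials.get)
        if trials[best_label] >= best_score:
            break  # no remaining addition improves the score
        included.append(best_label)
        features = np.column_stack([features, trained_models[best_label][1]])
        best_score = trials[best_label]
        if verbose:
            print(f"  added {best_label}: MAE {best_score:.2f}")
    return included, best_score


# Hypothetical stand-in data and candidate models.
data_x, data_y = make_regression(n_samples=300, n_features=6, noise=15.0, random_state=0)
kfold = KFold(n_splits=5, shuffle=True, random_state=1)
candidates = {
    "ridge": Ridge(),
    "tree": DecisionTreeRegressor(max_depth=5, random_state=0),
    "forest": RandomForestRegressor(n_estimators=50, random_state=0),
    "knn": KNeighborsRegressor(n_neighbors=7),
}
# "label": [model instance, out-of-fold predictions]; cross_val_predict keeps row order.
trained_models = {
    label: [model, list(cross_val_predict(model, data_x, data_y, cv=kfold))]
    for label, model in candidates.items()
}

# Try every model as the meta model and rank by the best stacked score.
scores = {}
for label, (model, _) in trained_models.items():
    included, score = stack_selector(model, data_x, data_y, trained_models,
                                     exclude=(label,), verbose=False)
    scores[label] = (score, included)

ranking = sorted(scores.items(), key=lambda item: item[1][0])
for label, (score, included) in ranking:
    print(f"meta={label:<7} MAE={score:8.2f} stacked={included}")
```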

Now we can see exactly which meta model and stacked models performed the best.

Putting It All Together

We’re almost done! Soon we’ll be making predictions on our Test set. We’ve selected a meta model (probably the one that performed the best on the Stack Selector!) and the stacked models that we’ll include.

Before we fit our meta model to our stacked data, try the model without the added features on the test set so you can get a comparison for your stacked model’s improvements! Fit and predict your meta model on your original train/test set. This is the baseline we’re expecting to beat with a stacked model.

Take a step back and fit all the models that we will be using in our stack on our ORIGINAL training dataset. We do this because we’ll be predicting on the test set (same as with a single model!) and adding the predictions to our test set as features for our meta model.

Now we prepare our model stack. First, manually make a list storing only the out-of-fold predictions for models that we are using in our final stack. We get these predictions from the ‘trained_models’ dictionary that we produced earlier using our out-of-fold predictions function:

Time for one more function. This one takes in our re-ordered train data_x, and the list holding yhat predictions, and puts them together into a single meta train set. We do this in a function because we’ll be doing it again for the test data later.

Now call this function passing data_x and predictions list to create a meta training set for our meta model:

The meta training set consists of our original predictive features along with the stacked models’ out-of-fold predictions as additional features.

Make a list to hold the fitted model instances; we’ll be using that with our next and last function. Make sure this is in the same order as you listed the yhat predictions for your meta stacker!

Time for our last function. This function takes in our test set and the fitted models, uses the fitted models to make predictions on the test set, and then adds those predictions as features to the test set. It sends back a complete meta_x set for the meta model to predict on.

Here we call the function, sending the test set and the fitted models:

Final Model Evaluation

The moment of truth is finally here. Time to predict with your stacked model!

In our stacking example, we reduced the MAE on our test set from 53130 to 47205 — an 11.15% improvement!
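Below is a condensed sketch of this final stage. It assumes synthetic data, a random forest as the selected meta model, and Ridge plus k-nearest neighbours as the selected stack models (all hypothetical choices). `make_meta_set` and `make_test_meta` are hypothetical names for the two helper functions described above. The improvement is computed the same way as the article's figure: (53130 - 47205) / 53130 ≈ 11.15%.

```python
import numpy as np
from sklearn.base import clone
from sklearn.datasets import make_regression
from sklearn.ensemble import RandomForestRegressor
from sklearn.linear_model import Ridge
from sklearn.metrics import mean_absolute_error
from sklearn.model_selection import KFold, cross_val_predict, train_test_split
from sklearn.neighbors import KNeighborsRegressor


def make_meta_set(data_x, yhat_list):
    """Original features plus one column per stacked model's predictions."""
    return np.column_stack([data_x] + [np.asarray(yhat) for yhat in yhat_list])


def make_test_meta(x_test, fitted_models):
    """Predict the test set with each fitted stack model and append as features."""
    return make_meta_set(x_test, [m.predict(x_test) for m in fitted_models])


X, y = make_regression(n_samples=600, n_features=8, noise=25.0, random_state=0)
x_train, x_test, y_train, y_test = train_test_split(X, y, test_size=0.25, random_state=0)

# Hypothetical selection result: random forest as meta model, Ridge and KNN stacked.
meta_model = RandomForestRegressor(n_estimators=200, random_state=0)
stack_models = [Ridge(), KNeighborsRegressor(n_neighbors=7)]

# Baseline: the meta model alone on the original features.
baseline = clone(meta_model).fit(x_train, y_train)
baseline_mae = mean_absolute_error(y_test, baseline.predict(x_test))

# Out-of-fold train predictions (cross_val_predict keeps row order, so data_x is x_train).
kfold = KFold(n_splits=5, shuffle=True, random_state=1)
yhat_list = [cross_val_predict(m, x_train, y_train, cv=kfold) for m in stack_models]
meta_x_train = make_meta_set(x_train, yhat_list)

# Refit each stack model on the ORIGINAL training data, in the same order as yhat_list.
fitted_models = [clone(m).fit(x_train, y_train) for m in stack_models]
meta_x_test = make_test_meta(x_test, fitted_models)

stacked = clone(meta_model).fit(meta_x_train, y_train)
stacked_mae = mean_absolute_error(y_test, stacked.predict(meta_x_test))
improvement = 100 * (baseline_mae - stacked_mae) / baseline_mae
print(f"MAE {baseline_mae:.1f} -> {stacked_mae:.1f} ({improvement:.2f}% improvement)")
```

The train-side meta features come from out-of-fold predictions, while the test-side ones come from models refitted on the full training set, mirroring the procedure above.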

I hope you see improvements to your model scores, and can see the utility of trying stacked models! I trust you’ve learned some valuable tools to add to your kit for automating model selection and stacking.

References:

- How to Use Out-of-Fold Predictions in Machine Learning by Jason Brownlee

- Forward-Backward Feature Selection by David Dale

This article was originally published on Towards Data Science and re-published to TOPBOTS with permission from the author.


About Jen Wadkins

Jen is a machine learning enthusiast, casual Kaggler, and board game fanatic.


What is model stacking in neural networks in R

This recipe explains what model stacking in neural networks is. Last Updated: 03 Aug 2022

Recipe Objective - What is model stacking in neural networks?

Model stacking is a model ensembling technique, defined as a meta-algorithm that aims to combine different neural network models to get a better prediction. Model stacking involves training the base-level models on the complete training set; the meta-model is then trained on features that are the outputs of the base-level models. The base level often consists of different learning algorithms, so stacking ensembles are often heterogeneous. In model stacking, the base models are typically different (e.g., not all decision trees) and are fit on the same dataset, and a single meta-model learns how to best combine the predictions of the contributing models.

This recipe explains what model stacking is, how it benefits neural network models, and how it can be executed.


Explanation of Model Stacking.

Model stacking mostly considers heterogeneous weak learners, learns them in parallel, and combines them by training a meta-model to output a prediction based on the different weak models' predictions. Model stacking mostly tries to produce strong models that are less biased than their components (even though variance can also be reduced).

To build a stacking model, both the L learners to be fitted and the meta-model that combines them need to be defined.

To build a stacking model composed of L weak learners, first split the training data into two folds (k-fold cross-validation can also be used). Then choose L weak learners and fit them to the data of the first fold. Next, each of the L weak learners makes predictions for the observations in the second fold. Finally, fit the meta-model on the second fold, using the predictions made by the weak learners as inputs.
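The two-fold procedure just described (often called blending) can be sketched in Python with scikit-learn, even though the recipe itself targets R. The dataset, the half-and-half split of the training data, and the choice of weak learners (including a small `MLPClassifier` as the neural-network member) are assumptions for illustration.

```python
import numpy as np
from sklearn.datasets import load_breast_cancer
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split
from sklearn.naive_bayes import GaussianNB
from sklearn.neighbors import KNeighborsClassifier
from sklearn.neural_network import MLPClassifier
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.tree import DecisionTreeClassifier

X, y = load_breast_cancer(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=0, stratify=y
)

# Split the training data into two folds.
X_fold1, X_fold2, y_fold1, y_fold2 = train_test_split(
    X_train, y_train, test_size=0.5, random_state=0, stratify=y_train
)

# L weak learners (hypothetical choice), fitted on the first fold only.
weak_learners = [
    GaussianNB(),
    DecisionTreeClassifier(max_depth=2, random_state=0),
    make_pipeline(StandardScaler(), KNeighborsClassifier(n_neighbors=15)),
    make_pipeline(StandardScaler(),
                  MLPClassifier(hidden_layer_sizes=(16,), max_iter=1000, random_state=0)),
]
for learner in weak_learners:
    learner.fit(X_fold1, y_fold1)


def level1_features(X_part):
    """One column per weak learner: its predicted probability of class 1."""
    return np.column_stack([m.predict_proba(X_part)[:, 1] for m in weak_learners])


# Meta-model fitted on the second fold, using the weak learners' predictions as inputs.
meta_model = LogisticRegression().fit(level1_features(X_fold2), y_fold2)
blend_accuracy = meta_model.score(level1_features(X_test), y_test)
print(f"Blended accuracy: {blend_accuracy:.3f}")
```

The trade-off versus k-fold stacking is that each half of the training data is used for only one of the two layers.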



Stacking - Appropriate base and meta models

When implementing stacking for model building and prediction (For example using sklearn's StackingRegressor function) what is the appropriate choice of models for the base models and final meta model?

Should weak/linear models be used as the base models and an ensemble model as the final meta model (for example: Lasso, Ridge and ElasticNet as base models, and XGBoost as the meta model)? Or should non-linear/ensemble models be used as base models and linear regression as the final meta model (for example: XGBoost, Random Forest and LightGBM as the base models, and Ridge as the final meta model)?

- scikit-learn

- ensemble-modeling

- ensemble-learning

- meta-learning

- I think this depends on the data and problem. However, it is important to have "diverse" models as base learners. So "weak" linear models may be okay as well as "diverse" boosted or tree-based models (e.g. different objectives in boosting or so). The choice of the meta learner still depends on the structure of the data after stacking. You could check some quick linear models first. – Peter, Sep 9, 2020 at 15:36
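One practical way to settle the question for a given dataset is to cross-validate both configurations. Below is a sketch using scikit-learn's `StackingRegressor` on the bundled diabetes dataset. scikit-learn's `GradientBoostingRegressor` and `RandomForestRegressor` stand in for XGBoost and LightGBM so the example needs no extra packages, and all hyperparameters are illustrative.

```python
from sklearn.datasets import load_diabetes
from sklearn.ensemble import GradientBoostingRegressor, RandomForestRegressor, StackingRegressor
from sklearn.linear_model import ElasticNet, Lasso, Ridge, RidgeCV
from sklearn.model_selection import KFold, cross_val_score
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

X, y = load_diabetes(return_X_y=True)

# Option 1: linear base models, tree ensemble as the meta model.
linear_base = [
    ("lasso", make_pipeline(StandardScaler(), Lasso(alpha=0.1))),
    ("ridge", make_pipeline(StandardScaler(), Ridge(alpha=1.0))),
    ("enet", make_pipeline(StandardScaler(), ElasticNet(alpha=0.1, l1_ratio=0.5))),
]
option_1 = StackingRegressor(
    estimators=linear_base, final_estimator=GradientBoostingRegressor(random_state=0)
)

# Option 2: tree ensembles as base models, linear meta model.
tree_base = [
    ("gbr", GradientBoostingRegressor(random_state=0)),
    ("rf", RandomForestRegressor(n_estimators=50, random_state=0)),
]
option_2 = StackingRegressor(estimators=tree_base, final_estimator=RidgeCV())

# Compare both configurations on identical folds (lower MAE is better).
cv = KFold(n_splits=5, shuffle=True, random_state=0)
mae = {
    name: -cross_val_score(model, X, y, cv=cv, scoring="neg_mean_absolute_error").mean()
    for name, model in [("linear->boosting", option_1), ("trees->ridge", option_2)]
}
for name, score in mae.items():
    print(f"{name}: MAE {score:.2f}")
```

A linear meta model over a few strongly correlated base predictions is a common, cheap starting point, in line with the comment's suggestion to try quick linear models first.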


Unlike boosting, in stacking, a single model is used to learn how to best combine the predictions from the contributing models (e.g. instead of a sequence of models that correct the predictions of prior models). The architecture of a stacking model involves two or more base models, often referred to as level-0 models, and a meta-model that ...


Abstract: In this paper, we study the usage of the stacking approach for building ensembles of machine learning models. The cases of time series forecasting and logistic regression have been considered. The results show that using stacking techniques we can improve the performance of predictive models in the considered cases.

Stacking is a widely used model averaging technique that asymptotically yields optimal predictions among linear averages. We show that stacking is most effective when model predictive performance is heterogeneous in inputs, and we can further improve the stacked mixture with a hierarchical model. We generalize stacking to Bayesian hierarchical stacking. The model weights are varying as a ...

Model stacking is a Data Science ensemble method, which relies on the "wisdom of the crowd" premise that a diverse selection of combined "weaker" learners working together, will often outperform a single "strong" model. The winners of most major Kaggle competitions over the past 4-5 years have used some configuration of Model ...

Yuling Yao, Gregor Pirš, Aki Vehtari, and I write: Stacking is a widely used model averaging technique that asymptotically yields optimal predictions among linear averages. We show that stacking is most effective when model predictive performance is heterogeneous in inputs, and we can further improve the stacked mixture with a hierarchical ...

Stacking is a way to ensemble multiple classification or regression models. There are many ways to ensemble models; the most widely known are bagging and boosting. Bagging averages multiple similar models with high variance to decrease variance. Boosting builds multiple incremental models to decrease the bias, while keeping variance ...

The selection of the technique to use depends on the overall objective and task at hand. Bagging is best when the goal is to reduce variance, whereas boosting is the choice for reducing bias. If the goal is to reduce variance and bias and improve overall performance, we should use stacking.
