IEEE Account

- Change Username/Password

- Update Address

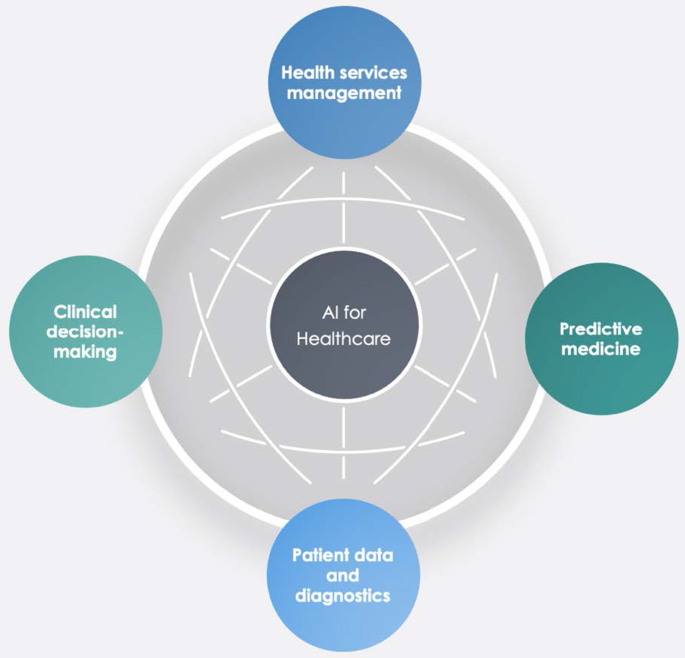

Purchase Details

- Payment Options

- Order History

- View Purchased Documents

Profile Information

- Communications Preferences

- Profession and Education

- Technical Interests

- US & Canada: +1 800 678 4333

- Worldwide: +1 732 981 0060

- Contact & Support

- About IEEE Xplore

- Accessibility

- Terms of Use

- Nondiscrimination Policy

- Privacy & Opting Out of Cookies

A not-for-profit organization, IEEE is the world's largest technical professional organization dedicated to advancing technology for the benefit of humanity. © Copyright 2024 IEEE - All rights reserved. Use of this web site signifies your agreement to the terms and conditions.

An official website of the United States government

The .gov means it’s official. Federal government websites often end in .gov or .mil. Before sharing sensitive information, make sure you’re on a federal government site.

The site is secure. The https:// ensures that you are connecting to the official website and that any information you provide is encrypted and transmitted securely.

- Publications

- Account settings

- My Bibliography

- Collections

- Citation manager

Save citation to file

Email citation, add to collections.

- Create a new collection

- Add to an existing collection

Add to My Bibliography

Your saved search, create a file for external citation management software, your rss feed.

- Search in PubMed

- Search in NLM Catalog

- Add to Search

Artificial Intelligence in Health Care: Current Applications and Issues

Affiliations.

- 1 Department of Orthopedic Surgery, Samsung Medical Center, School of Medicine, Sungkyunkwan University, Seoul, Korea.

- 2 Division of Pulmonary and Critical Care Medicine, Department of Medicine, Samsung Medical Center, School of Medicine, Sungkyunkwan University, Seoul, Korea.

- 3 Department of Surgery, Asan Medical Center, University of Ulsan College of Medicine, Seoul, Korea.

- 4 Department of Radiology, Severance Hospital, Yonsei University College of Medicine, Seoul, Korea.

- 5 Department of Radiology, Seoul National University College of Medicine, Seoul, Korea.

- 6 Division of Gastroenterology, Department of Medicine, Samsung Medical Center, School of Medicine, Sungkyunkwan University, Seoul, Korea.

- 7 Department of R&D Planning, Korea Health Industry Development Institute (KHIDI), Cheongju, Korea.

- 8 Health Innovation Big Data Center, Asan Institute for Life Science, Asan Medical Center, Seoul, Korea.

- 9 Department of Radiology, Seoul St. Mary's Hospital, College of Medicine, The Catholic University of Korea, Seoul, Korea.

- 10 Department of Bio and Brain Engineering, Korea Advanced Institute of Science and Technology (KAIST), Daejeon, Korea.

- 11 Protocol Engineering Center, Electronics and Telecommunications Research Institute (ETRI), Daejeon, Korea.

- 12 Department of Radiology, Asan Medical Center, University of Ulsan College of Medicine, Seoul, Korea.

- 13 Division of Geriatrics, Department of Internal Medicine, Severance Hospital, Yonsei University College of Medicine, Seoul, Korea.

- 14 VUNO Inc., Seoul, Korea.

- 15 Department of Convergence Medicine, Asan Medical Center, University of Ulsan College of Medicine, Seoul, Korea.

- 16 Lunit Inc., Seoul, Korea.

- 17 Big Data Research Center, Samsung Medical Center, School of Medicine, Sungkyunkwan University, Seoul, Korea.

- 18 Digital Healthcare Partners, Seoul, Korea.

- 19 Center for Bionics, Korea Institute of Science and Technology (KIST), Seoul, Korea.

- 20 Department of Biomedical Engineering, Seoul National University College of Medicine, Seoul, Korea.

- PMID: 33140591

- PMCID: PMC7606883

- DOI: 10.3346/jkms.2020.35.e379

- Erratum: Correction of Author Name and Affiliation in the Article "Artificial Intelligence in Health Care: Current Applications and Issues". Park CW, Seo SW, Kang N, Ko B, Choi BW, Park CM, Chang DK, Kim H, Kim H, Lee H, Jang J, Ye JC, Jeon JH, Seo JB, Kim KJ, Jung KH, Kim N, Paek S, Shin SY, Yoo S, Choi YS, Kim Y, Yoon HJ. J Korean Med Sci. 2020 Dec 14;35(48):e425. doi: 10.3346/jkms.2020.35.e425. PMID: 33316861.

In recent years, artificial intelligence (AI) technologies have advanced greatly and become a reality in many areas of daily life. In health care, numerous efforts are under way to apply AI technology to practical medical treatment. With rapid developments in machine learning algorithms and improvements in hardware performance, AI technology is expected to play an important role in effectively analyzing and utilizing large amounts of health and medical data. However, AI technology has various unique characteristics that distinguish it from existing health care technologies. Consequently, a number of areas within the current health care system need to be supplemented for AI to be used more effectively and frequently in health care. In addition, the number of medical practitioners and members of the public who accept AI in health care is still low, and there are various concerns regarding the safety and reliability of AI implementations. Therefore, this paper aims to introduce the current research and application status of AI technology in health care and to discuss the issues that need to be resolved.

Keywords: Application; Artificial Intelligence; Health Care; Issue; Machine Learning.

© 2020 The Korean Academy of Medical Sciences.

Conflict of interest statement

The authors have no potential conflicts of interest to disclose.

Fig. 1. Research and development strategic plan of artificial intelligence.

AI = artificial intelligence.



AI in Medicine—JAMA’s Focus on Clinical Outcomes, Patient-Centered Care, Quality, and Equity

- 1 Associate Editor, JAMA; and Yale School of Medicine, New Haven, Connecticut

- 2 Editorial Board member, JAMA; and University of California, San Francisco

- 3 Electronic Editor, JAMA and JAMA Network

- 4 Associate Editor, JAMA; and University of California, San Francisco

- 5 Executive Managing Editor, JAMA and JAMA Network

- 6 Managing Director of Strategy and Planning, JAMA and JAMA Network

- 7 Executive Editor, JAMA and JAMA Network

- 8 Editor in Chief, JAMA and JAMA Network


The transformative role of artificial intelligence (AI) in health care has been forecast for decades,1 but only recently have technological advances appeared to capture some of the complexity of health and disease and how health care is delivered.2 The recent emergence of large language models (LLMs) in highly visible and interactive applications3 has ignited interest in how new AI technologies can improve medicine and health for patients, the public, clinicians, health systems, and more. The rapidity of these developments, their potential impact on health care, and JAMA’s mission to publish the best science that advances medicine and public health compel the journal to renew its commitment to facilitating the rigorous scientific development, evaluation, and implementation of AI in health care.

JAMA editors are committed to promoting discoveries in AI science, rigorously evaluating new advances for their impact on the health of patients and populations, assessing the value such advances bring to health systems and society nationally and globally, and examining progress toward equity, fairness, and the reduction of historical medical bias. Moreover, JAMA’s mission is to ensure that these scientific advances are clearly communicated in a manner that enhances the collective understanding of the domain for all stakeholders in medicine and public health.4 For the scientific development of AI to be most effective in improving medicine and public health, it requires a platform that recognizes and supports the vision of rapid cycle innovation and is also fundamentally grounded in the principles of reliable and reproducible clinical research that is ethically sound, respectful of rights to privacy, and representative of diverse populations.2,3,5

Scientific development in AI can be viewed through the framework used to describe other health-related sciences.6 In these domains, scientific discoveries begin with identifying biological mechanisms of disease. Then inventions that target these mechanisms are tested in progressively larger groups of people with and without diseases to assess the effectiveness and safety of these interventions. These are then scaled to large studies evaluating outcomes for individuals and populations with the disease. This well-established scientific development framework can work for research in AI as well, with reportable stages as inventions and findings move from one stage to the next.

The editors seek original science that focuses on developing, testing, and deploying AI in studies that improve understanding of its effects on the health outcomes of patients and populations. The starting point is original research rigorously examining the challenges and potential solutions to optimizing clinical care with AI. In addition, to ensure our readers remain abreast of major scientific developments across the entire continuum of scientific innovation, we invite reviews, special communications, and opinion articles, written for our journal’s broad readership, that summarize the potential health care applications of emerging technology.

While highlighting new developments, JAMA will focus on these essential areas (Figure):

Clinical care and outcomes: JAMA’s key interest is in clinically impactful science, and we will be most interested in studies demonstrating the effective translation of novel AI technologies to improve clinical care and outcomes. The potential for clinical impact will represent an important yardstick in our evaluation of all AI studies.

Patient-centered care: Early phases of scientific development have focused on directly measurable outcomes, reflecting the broader availability of data on these outcomes. However, how algorithmic care may shape the care experience of individuals and outcomes of interest to patients remains an understudied domain.7 Implementing novel technology to enhance patient care and experience can only achieve its intended effect when patients believe that it offers them an advance, whether through more time with their clinicians, more accessible information on their care decisions, or personalized interventions that target the outcomes of interest to them. We encourage studies that consider domains of autonomy, mobility, comfort, education, or other aspects of health not measured in traditional outcome assessments.

Health care quality: Advances in modern medicine are often stymied by the inability to translate evidence-based care to all patients. As clinicians increasingly provide care for more complex patient conditions in an ever-expanding therapeutic landscape, AI can play a crucial role in alleviating current challenges in optimizing clinical care,8 if stewarded appropriately when positioned in the medical enterprise.9 We are interested in studies that assess the potential for AI technologies to improve access to high-quality health care for all patients.

Fairness in AI algorithms: We encourage the explicit assessment of the fairness of algorithms and their potential effect on health inequities. Through development on biased data sources or restricted deployment in privileged health care settings, algorithms can potentially exacerbate health outcome gaps across socioeconomic and sociocultural axes.9 We are interested in studies that assess the fairness of algorithms, their potential impact on health disparities, and strategies to mitigate biases.

Medical education and clinician experience: In addition to patient-facing science, we seek investigations into the role of AI in addressing the challenges clinicians face in medical training and in the practice of medicine. The information overload through digital health technologies has posed an increasing burden on clinicians, with unintended consequences for their health and well-being. This remains a central area to target for AI in health. The investigations in this domain will evaluate the use of AI to enable a health care team and its members to function to the highest and best use of their expertise.

Global solutions: To advance health care beyond well-resourced countries, critical technologies need to adapt to the infrastructural, technological, and health care milieu of settings across the globe. We invite investigators to submit science that demonstrates and evaluates AI applications that enhance care within the limitations of low-resource settings. AI-driven methods that make low-cost tools even more effective at diagnosis and treatment, and those that guide the fair and appropriate allocation of limited resources, may move the needle on bridging health disparities between societies worldwide.

JAMA is one of the most widely circulated general medicine journals in the world and the flagship journal of the JAMA Network, which includes 11 specialty journals and JAMA Network Open. Submissions are welcome to all the JAMA Network journals. The Network also offers the advantage of coordinated publications, as well as amplification of findings to specific audiences of interest. With a mission to reach clinicians, scientists, patients, policymakers, and the general public globally, the value of JAMA and the JAMA Network for authors and readers interested in AI in medicine is clear.

We seek to engage scientists and other thought leaders advancing AI and medicine across clinical, computational, health policy, and public health domains. We invite authors to communicate directly with the editors about topics they believe can impact health care delivery and to discuss further the development of their science and our approach to its evaluation; such engagement is critical in this rapidly evolving field. We are committed to including diverse opinions and voices in the journal and urge experts from across the career spectrum and the globe to participate in the discourse. The editors are committed to communicating science effectively to a broad range of stakeholders across our digital, multimedia, and social media avenues. As AI promises to enable major health care transformation, JAMA and the JAMA Network are positioned to serve as a platform for the publication of this transformative work.

Corresponding Author: Kirsten Bibbins-Domingo, PhD, MD, MAS, JAMA.

Published Online: August 11, 2023. doi:10.1001/jama.2023.15481

Conflict of Interest Disclosures: Dr Khera reported receiving grants from NHLBI, Doris Duke Charitable Foundation, Bristol Myers Squibb, and Novo Nordisk, and serving as cofounder of Evidence2Health, outside the submitted work. Dr Butte reported being a cofounder and consultant to Personalis and NuMedii; consultant to Mango Tree Corporation, Samsung, 10x Genomics, Helix, Pathway Genomics, and Verinata (Illumina); has served on paid advisory panels or boards for Geisinger Health, Regenstrief Institute, Gerson Lehman Group, AlphaSights, Covance, Novartis, Genentech, Merck, and Roche; is a shareholder in Personalis and NuMedii; is a minor shareholder in Apple, Meta (Facebook), Alphabet (Google), Microsoft, Amazon, Snap, 10x Genomics, Illumina, Regeneron, Sanofi, Pfizer, Royalty Pharma, Moderna, Sutro, Doximity, BioNtech, Invitae, Pacific Biosciences, Editas Medicine, Nuna Health, Assay Depot, and Vet24seven, and several other nonhealth-related companies and mutual funds; and has received honoraria and travel reimbursement for invited talks from Johnson & Johnson, Roche, Genentech, Pfizer, Merck, Lilly, Takeda, Varian, Mars, Siemens, Optum, Abbott, Celgene, AstraZeneca, AbbVie, Westat, and many academic institutions, medical, or disease-specific foundations and associations, and health systems; receives royalty payments through Stanford University for several patents and other disclosures licensed to NuMedii and Personalis; and has had research funded by NIH, Peraton, Genentech, Johnson & Johnson, FDA, Robert Wood Johnson Foundation, Leon Lowenstein Foundation, Intervalien Foundation, Chan Zuckerberg Initiative, the Barbara and Gerson Bakar Foundation, and in the recent past, the March of Dimes, Juvenile Diabetes Research Foundation, California Governor’s Office of Planning and Research, California Institute for Regenerative Medicine, L’Oreal, and Progenity. No other disclosures were reported.

Khera R, Butte AJ, Berkwits M, et al. AI in Medicine—JAMA’s Focus on Clinical Outcomes, Patient-Centered Care, Quality, and Equity. JAMA. 2023;330(9):818–820. doi:10.1001/jama.2023.15481

Integrating artificial intelligence into biomedical science curricula: advancing healthcare education.

1. Introduction

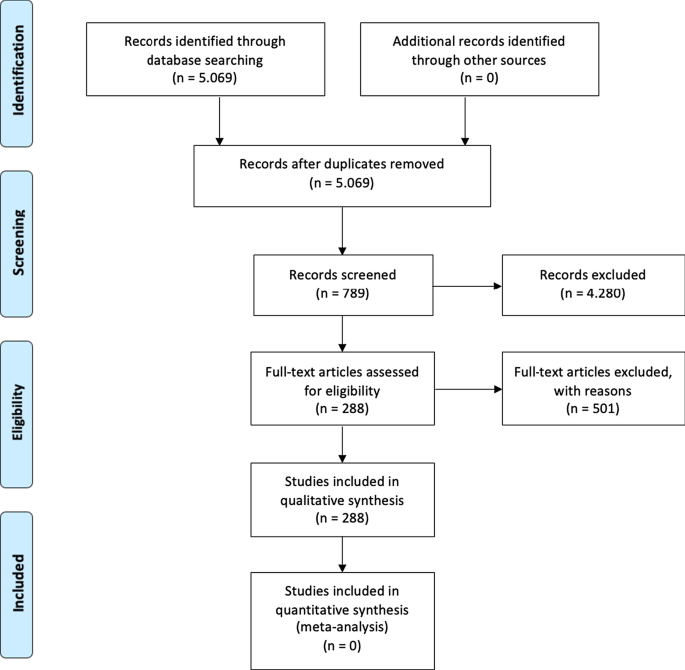

2. Methods and Search Strategy

3. AI in Healthcare

4. Impact of AI on Laboratory Settings During COVID-19

5. AI in Biomedical Sciences

6. How to Reform Biomedical Education

7. Challenges in Incorporating AI into Biomedical Science Curricula

8. Ethics and AI

9. Discussion

10. Limitations

11. Conclusions

Author Contributions

Conflicts of Interest


- Eggers, W.D.; Schatsky, D.; Viechnicki, P. AI-Augmented Government. Using Cognitive Technologies to Redesign Public Sector Work. Deloitte Cent. Gov. Insights 2017 , 1 , 24. [ Google Scholar ]

- Chen, J.; See, K.C. Artificial Intelligence for COVID-19: Rapid Review. J. Med. Internet Res. 2020 , 22 , e21476. [ Google Scholar ] [ CrossRef ]

- Stead, W.W.; Searle, J.R.; Fessler, H.E.; Smith, J.W.; Shortliffe, E.H. Biomedical Informatics: Changing What Physicians Need to Know and How They Learn. Acad. Med. 2011 , 86 , 429–434. [ Google Scholar ] [ CrossRef ]

- Wartman, S.A.; Combs, C.D. Medical Education Must Move From the Information Age to the Age of Artificial Intelligence. Acad. Med. 2018 , 93 , 1107–1109. [ Google Scholar ] [ CrossRef ]

- Durant, T.J.S.; Peaper, D.R.; Ferguson, D.; Schulz, W.L. Impact of COVID-19 Pandemic on Laboratory Utilization. J. Appl. Lab. Med. 2020 , 5 , 1194–1205. [ Google Scholar ] [ CrossRef ] [ PubMed ]

- Considerations for Development and Use of AI in Response to COVID-19 | Elsevier Enhanced Reader. Available online: https://www.sciencedirect.com/science/article/pii/S026840122030949X (accessed on 13 March 2023).

- Hirani, R.; Noruzi, K.; Khuram, H.; Hussaini, A.S.; Aifuwa, E.I.; Ely, K.E.; Lewis, J.M.; Gabr, A.E.; Smiley, A.; Tiwari, R.K.; et al. Artificial Intelligence and Healthcare: A Journey through History, Present Innovations, and Future Possibilities. Life 2024 , 14 , 557. [ Google Scholar ] [ CrossRef ]

- Rubí, J.N.S.; Gondim, P.R.d.L. Interoperable Internet of Medical Things Platform for E-Health Applications. Int. J. Distrib. Sens. Netw. 2020 , 16 , 1550147719889591. [ Google Scholar ] [ CrossRef ]

- Introduction to the Practice of Telemedicine—John Craig, Victor Petterson. 2005. Available online: https://journals.sagepub.com/doi/abs/10.1177/1357633X0501100102 (accessed on 28 February 2024).

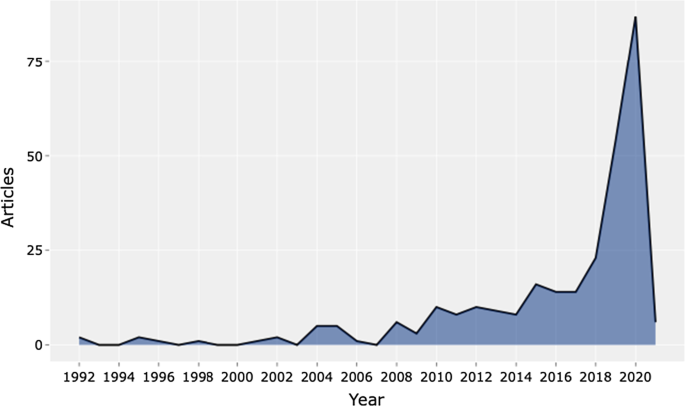

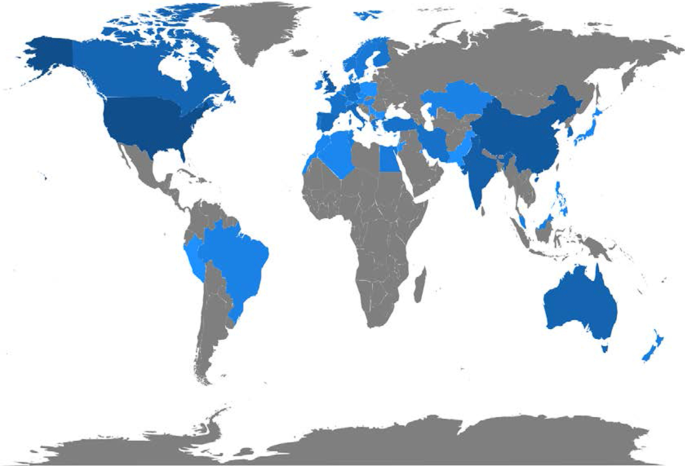

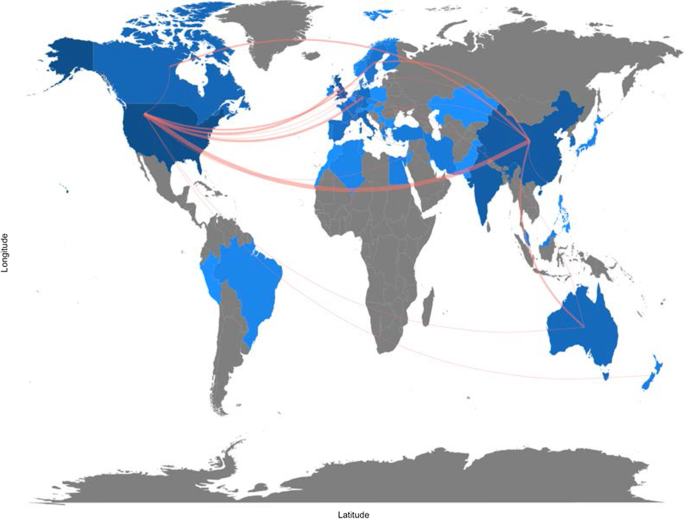

- Tran, B.X.; Vu, G.T.; Ha, G.H.; Vuong, Q.-H.; Ho, M.-T.; Vuong, T.-T.; La, V.-P.; Ho, M.-T.; Nghiem, K.-C.P.; Nguyen, H.L.T.; et al. Global Evolution of Research in Artificial Intelligence in Health and Medicine: A Bibliometric Study. J. Clin. Med. 2019 , 8 , 360. [ Google Scholar ] [ CrossRef ]

- Mueller, J.M. The ABCs of Assured Autonomy. In Proceedings of the 2019 IEEE International Symposium on Technology and Society (ISTAS), Medford, MA, USA, 15–16 November 2019; pp. 1–5. [ Google Scholar ]

- Yapo, A.; Weiss, J. Ethical Implications of Bias in Machine Learning. In Proceedings of the 51st Hawaii International Conference on System Sciences (HICSS-51), Hilton Waikoloa Village, HI, USA, 3–6 January 2018. [ Google Scholar ]

- Sarwar, S.; Dent, A.; Faust, K.; Richer, M.; Djuric, U.; Van Ommeren, R.; Diamandis, P. Physician Perspectives on Integration of Artificial Intelligence into Diagnostic Pathology. NPJ Digit. Med. 2019 , 2 , 28. [ Google Scholar ] [ CrossRef ]

- Paranjape, K.; Schinkel, M.; Nannan Panday, R.; Car, J.; Nanayakkara, P. Introducing Artificial Intelligence Training in Medical Education. JMIR Med. Educ. 2019 , 5 , e16048. [ Google Scholar ] [ CrossRef ]

- Weng, S.F.; Vaz, L.; Qureshi, N.; Kai, J. Prediction of Premature All-Cause Mortality: A Prospective General Population Cohort Study Comparing Machine-Learning and Standard Epidemiological Approaches. PLoS ONE 2019 , 14 , e0214365. [ Google Scholar ] [ CrossRef ]

- Topol, E.J. High-Performance Medicine: The Convergence of Human and Artificial Intelligence. Nat. Med. 2019 , 25 , 44–56. [ Google Scholar ] [ CrossRef ]

- Sharma, A.; Abunada, T.; Said, S.S.; Kurdi, R.M.; Abdallah, A.M.; Abu-Madi, M. Clinical Practicum Assessment for Biomedical Science Program from Graduates’ Perspective. Int. J. Environ. Res. Public Health 2022 , 19 , 12420. [ Google Scholar ] [ CrossRef ] [ PubMed ]

- Nurses Say Distractions Cut Bedside Time by 25% | HealthLeaders Media. Available online: https://www.healthleadersmedia.com/nursing/nurses-say-distractions-cut-bedside-time-25 (accessed on 5 March 2023).

- Goel, A.K.; Polepeddi, L. Jill Watson: A Virtual Teaching Assistant for Online Education. In Learning Engineering for Online Education ; Routledge: London, UK, 2018. [ Google Scholar ]

- Ching, T.; Himmelstein, D.S.; Beaulieu-Jones, B.K.; Kalinin, A.A.; Do, B.T.; Way, G.P.; Ferrero, E.; Agapow, P.-M.; Zietz, M.; Hoffman, M.M.; et al. Opportunities and Obstacles for Deep Learning in Biology and Medicine. J. R. Soc. Interface 2018 , 15 , 20170387. [ Google Scholar ] [ CrossRef ] [ PubMed ]

- McCoy, L.G.; Nagaraj, S.; Morgado, F.; Harish, V.; Das, S.; Celi, L.A. What Do Medical Students Actually Need to Know about Artificial Intelligence? NPJ Digit. Med. 2020 , 3 , 86. [ Google Scholar ] [ CrossRef ]

- Price, W.N.; Cohen, I.G. Privacy in the Age of Medical Big Data. Nat. Med. 2019 , 25 , 37–43. [ Google Scholar ] [ CrossRef ]

- Stahnisch, F.W.; Verhoef, M. The Flexner Report of 1910 and Its Impact on Complementary and Alternative Medicine and Psychiatry in North America in the 20th Century. Evid. Based Complement Altern. Med 2012 , 2012 , 647896. [ Google Scholar ] [ CrossRef ] [ PubMed ]

- Prober, C.G.; Khan, S. Medical Education Reimagined: A Call to Action. Acad. Med. 2013 , 88 , 1407–1410. [ Google Scholar ] [ CrossRef ]

- NAACLS—National Accrediting Agency for Clinical Laboratory Science—Starting a NAACLS Accredited Program. Available online: https://www.naacls.org/Program-Directors/Fees/Procedures-for-Review-Initial-and-Continuing-Accre.aspx (accessed on 5 June 2022).

- Scanlan, P.M. A Review of Bachelor’s Degree Medical Laboratory Scientist Education and Entry Level Practice in the United States. EJIFCC 2013 , 24 , 5–13. [ Google Scholar ]

- Board of Certification. Available online: https://www.ascp.org/content/board-of-certification# (accessed on 22 May 2022).

- Alexander, G.L.; Powell, K.R.; Deroche, C.B. An Evaluation of Telehealth Expansion in U.S. Nursing Homes. J. Am. Med. Inform. Assoc. 2021 , 28 , 342–348. [ Google Scholar ] [ CrossRef ]

- Meskó, B.; Görög, M. A Short Guide for Medical Professionals in the Era of Artificial Intelligence. NPJ Digit. Med. 2020 , 3 , 126. [ Google Scholar ] [ CrossRef ] [ PubMed ]

- Davenport, T.; Kalakota, R. The Potential for Artificial Intelligence in Healthcare. Future Health J. 2019 , 6 , 94–98. [ Google Scholar ] [ CrossRef ] [ PubMed ]

- Grunhut, J.; Marques, O.; Wyatt, A.T.M. Needs, Challenges, and Applications of Artificial Intelligence in Medical Education Curriculum. JMIR Med. Educ. 2022 , 8 , e35587. [ Google Scholar ] [ CrossRef ] [ PubMed ]

- Erickson, B.J.; Korfiatis, P.; Akkus, Z.; Kline, T.L. Machine Learning for Medical Imaging. Radiographics 2017 , 37 , 505. [ Google Scholar ] [ CrossRef ] [ PubMed ]

- Chen, J. Playing to Our Human Strengths to Prepare Medical Students for the Future. Korean J. Med. Educ. 2017 , 29 , 193–197. [ Google Scholar ] [ CrossRef ] [ PubMed ]

- Teng, M.; Singla, R.; Yau, O.; Lamoureux, D.; Gupta, A.; Hu, Z.; Hu, R.; Aissiou, A.; Eaton, S.; Hamm, C.; et al. Health Care Students’ Perspectives on Artificial Intelligence: Countrywide Survey in Canada. JMIR Med. Educ. 2022 , 8 , e33390. [ Google Scholar ] [ CrossRef ] [ PubMed ]

- Mennella, C.; Maniscalco, U.; De Pietro, G.; Esposito, M. Ethical and Regulatory Challenges of AI Technologies in Healthcare: A Narrative Review. Heliyon 2024 , 10 , e26297. [ Google Scholar ] [ CrossRef ] [ PubMed ]

- Chen, X.-W.; Lin, X. Big Data Deep Learning: Challenges and Perspectives. IEEE Access 2014 , 2 , 514–525. [ Google Scholar ] [ CrossRef ]

- Noorbakhsh-Sabet, N.; Zand, R.; Zhang, Y.; Abedi, V. Artificial Intelligence Transforms the Future of Health Care. Am. J. Med. 2019 , 132 , 795–801. [ Google Scholar ] [ CrossRef ]

- Kluge, E.-H.W. Artificial Intelligence in Healthcare: Ethical Considerations. In Proceedings of the Healthcare Management Forum; SAGE Publications Sage CA: Los Angeles, CA, USA, 2020; Volume 33, pp. 47–49. [ Google Scholar ]

- Kassam, A.; Kassam, N. Artificial Intelligence in Healthcare: A Canadian Context. Health Manag. Forum 2020 , 33 , 5–9. [ Google Scholar ] [ CrossRef ]

- Borenstein, J.; Howard, A. Emerging Challenges in AI and the Need for AI Ethics Education. AI Ethics 2021 , 1 , 61–65. [ Google Scholar ] [ CrossRef ]

- Azencott, C.-A. Machine Learning and Genomics: Precision Medicine versus Patient Privacy. Philos. Trans. R. Soc. A Math. Phys. Eng. Sci. 2018 , 376 , 20170350. [ Google Scholar ] [ CrossRef ] [ PubMed ]

- Taylor, Z.W.; Charran, C.; Childs, J. Using Big Data for Educational Decisions: Lessons from the Literature for Developing Nations. Educ. Sci. 2023 , 13 , 439. [ Google Scholar ] [ CrossRef ]

- Kingston, J.K. Artificial Intelligence and Legal Liability. In Proceedings of the International Conference on Innovative Techniques and Applications of Artificial Intelligence; Springer: Berlin/Heidelberg, Germany, 2016; pp. 269–279. [ Google Scholar ]

- Lee, T.T.; Kesselheim, A.S.U.S. Food and Drug Administration Precertification Pilot Program for Digital Health Software: Weighing the Benefits and Risks. Ann. Intern. Med. 2018 , 168 , 730–732. [ Google Scholar ] [ CrossRef ] [ PubMed ]

- Hussey, P.; Adams, E.; Shaffer, F.A. Nursing Informatics and Leadership, an Essential Competency for a Global Priority: eHealth. Nurse Lead. 2015 , 13 , 52–57. [ Google Scholar ] [ CrossRef ]

- Atique, S.; Bautista, J.R.; Block, L.J.; Lee, J.J.; Lozada-Perezmitre, E.; Nibber, R.; O’Connor, S.; Peltonen, L.-M.; Ronquillo, C.; Tayaben, J.; et al. A Nursing Informatics Response to COVID-19: Perspectives from Five Regions of the World. J. Adv. Nurs. 2020 , 76 , 2462–2468. [ Google Scholar ] [ CrossRef ]

- Ahmad, M.N.; Abdallah, S.A.; Abbasi, S.A.; Abdallah, A.M. Student Perspectives on the Integration of Artificial Intelligence into Healthcare Services. Digit. Health 2023 , 9 , 205520762311740. [ Google Scholar ] [ CrossRef ]

- Sit, C.; Srinivasan, R.; Amlani, A.; Muthuswamy, K.; Azam, A.; Monzon, L.; Poon, D.S. Attitudes and Perceptions of UK Medical Students towards Artificial Intelligence and Radiology: A Multicentre Survey. Insights Imaging 2020 , 11 , 14. [ Google Scholar ] [ CrossRef ]

- Abdullah, R.; Fakieh, B. Health Care Employees’ Perceptions of the Use of Artificial Intelligence Applications: Survey Study. J. Med. Internet Res. 2020 , 22 , e17620. [ Google Scholar ] [ CrossRef ]


| Diagnostic accuracy | The incorporation of AI in diagnostics during COVID-19 increased the accuracy of diagnosis through faster and more accurate image analysis |
| Laboratory workflow support | AI reduced the workload for healthcare providers by providing automation support for sample analysis and, later, speedy data analysis |
| Research and development | The incorporation of AI into healthcare served as the most efficient tool to discover and apply antiviral drugs and vaccines through the interpretation of big patient data |
| Patient triage optimization | AI supported patient triage by prioritizing critical cases depending on the available data |
| Quality assurance | The accuracy and reliability of testing were monitored by AI, reducing system error and delivering high-quality work in laboratories |

| Radiology imaging and diagnostic approach | AI and machine learning training can support biomedical science students in using algorithms to read X-ray, CT, and MRI images to reach an accurate diagnosis |
| Precision medicine | Biomedical science students can support precision medicine by analyzing big datasets and providing tailored medicinal or genetic approaches to patients |
| Reducing workload | Efficient AI incorporation in healthcare can help future biomedical science students to reduce their workload as they will be trained in the automation of databases, imaging, etc. |
| Research and innovation | AI-trained biomedical science students can be an integral part of new innovations related to research or drug discovery |
| Career opportunities | AI-trained biomedical science students can explore more career and job opportunities in research and administrative healthcare settings |
| Strategy planning and disease controls | AI-trained healthcare professionals with a biomedical background can detect diseases at their early stages and estimate their potential to spread. This AI approach supports healthcare in planning strategies to control diseases |
| Ethical considerations | Ethical considerations are the biggest challenge in applying AI to patients and can be resolved by training biomedical students on the ethics related to AI in patient care |

| Curriculum Additions | Recommendations to Achieve AI Expertise |
|---|---|
| Biomedical engineering and computational data certification | Collaboration with computer/software engineering college and work on certification. Theoretical knowledge can be supplemented with practical experience with AI. |
| AI learning groups and open journal clubs | Interested students can receive hands-on training and learning from computer or data science students by sharing common learning groups. Students can exchange views and answer each other’s questions. |
| AI: fundamental concepts | Students should enhance their basic AI skills such as data handling, analysis, data visualization, and understanding. |
| AI: research opportunities | Interested students should be exposed to private–public AI services and should be involved in AI research projects. These projects would help students to apply new approaches to their work. |

| Field of Challenge | Challenge | Mitigation |
|---|---|---|
| Faculty expertise | The lack of faculty expertise represents the biggest challenge to incorporating AI into existing biomedical coursework. Many faculty members have only limited knowledge of AI methodologies and their applications. This lack of expertise acts as a barrier to teaching AI to biomedical students | Training the trainers is the solution to this challenge. Continuing professional development (CPD) on AI should be offered through workshops, seminars, and short-term interdisciplinary AI courses to enhance their AI skills |
| Curriculum restructuring and AI integration | Amending the current curriculum with additional AI coursework is a challenge, as it might be overwhelming for biomedical students and preceptors | The curriculum should be reviewed thoroughly to find potential areas to incorporate AI into existing biomedical coursework. This can be achieved by adding foundational elective AI courses for students, which might require interdisciplinary faculty review |
| Variable student backgrounds | Biomedical students have different levels of prior knowledge of AI, which makes it difficult to offer AI coursework that matches each student's existing knowledge | Introductory AI modules should be provided in the coursework, but for “deep divers” with more extensive knowledge or interest in AI, more extensive AI coursework and research opportunities could be provided |
| Practical training in AI | Hands-on training for students on AI requires well-equipped labs with specialized equipment and software | AI training requires a new laboratory infrastructure with the latest software and tools for training. This will provide students with hands-on training on algorithms, data analysis, image processing, etc. |
| Limited resources | Limited financial resources make it difficult to train students in the latest AI technologies | Allocated funding and budgeting will support the planning of new infrastructure and laboratories. In addition, partnerships with different institutions will minimize the cost of AI incorporation |
| Ethical considerations | Ethical issues such as data mining, data privacy, and the responsible application of AI are a challenge | Case studies and real-world examples related to ethics will encourage critical thinking among students in relation to AI |
| Assessment methods | Effective and standardized methods for assessing students’ knowledge of AI concepts are lacking | Designing exams, projects, presentations, hands-on assignments, and group projects related to the real-world biomedical application of AI will develop understanding and support student assessment |
| Interprofessional education (IPE) | Interdisciplinary collaboration between computer sciences, engineering departments, and biomedical sciences is challenging, considering the different objectives and priorities of coursework | IPE can be encouraged by structuring interdisciplinary teams with faculty from both computer science and biomedical backgrounds. In addition, faculty should participate in cross-disciplinary seminars to understand and incorporate AI more effectively in coursework. Interdisciplinary student mentors can also promote IPE by assigning joint AI projects to students |


Share and Cite

Sharma, A.; Al-Haidose, A.; Al-Asmakh, M.; Abdallah, A.M. Integrating Artificial Intelligence into Biomedical Science Curricula: Advancing Healthcare Education. Clin. Pract. 2024 , 14 , 1391-1403. https://doi.org/10.3390/clinpract14040112


How AI — and ASU — will advance the health care sector

As technology improves, it has the potential to accelerate diagnoses and enable earlier and possibly life-saving treatment.

AI-generated images by Alex Davis/ASU

Editor's note: This feature article is part of our “AI is everywhere ... now what?” special project exploring the potential (and potential pitfalls) of artificial intelligence in our lives. Explore more topics and takes on the project page.

A patient walks into a hospital complaining of stomach pain.

After a scan is performed, the doctor confirms the diagnosis of appendicitis, surgery is scheduled and the patient leaves the hospital the following day, his appendix out and his pain gone.

It’s a situation that happens every day, in hospitals across the world. But what if, using an artificial intelligence learning model, that scan — along with past medical history, lifestyle choices and other relevant data — also showed the patient is at risk for a heart attack or stroke?

How many lives might be saved?

“AI can see things that humans cannot,” said Dr. Bhavik Patel, the chief AI officer at Mayo Clinic in Arizona. “AI can take that scan and tell us what your heart risk and stroke factors are even though we didn’t image the heart or brain. AI can help us impact things to where we’re making a difference in people’s lives.”

It would be inaccurate to call AI the next frontier in health care. AI has been part of medical care for more than 20 years, used to analyze medical imaging data such as X-rays or MRIs, transcribe medical documents and streamline administrative tasks.

But as AI’s technology improves, it has the potential to process information faster, thus accelerating diagnoses and enabling earlier and possibly life-saving treatment for patients.

- Algorithms that spot malignant tumors.

- A smartphone app that can alert a caregiver before someone falls.

- Learning models that recognize changes in speech patterns, which could indicate neurological conditions.

The possibilities, medical professionals say, are endless.

“I think AI represents the fourth industrial revolution, and health care is just one of the many verticals it’s revolutionizing,” Patel said. “You shouldn’t say health care anymore without saying AI. It should be an integral part of it.”

Sherine Gabriel, executive vice president of ASU Health, stressed that AI will not replace health care professionals or dramatically alter the doctor-patient relationship.

Instead, Gabriel said, AI is a tool that will ultimately result in better patient care.

“It’s not going to make the diagnosis for you, but it’s going to make the diagnosis easier, simpler and way quicker,” Gabriel said. “It’s going to identify diagnostic targets more quickly than we have been able to in the past. The thinking isn’t really that different. It’s just putting everything on overdrive.”

One example:

Bradley Greger, an associate professor in ASU’s School of Biological and Health Systems Engineering, part of the Ira A. Fulton Schools of Engineering, is using AI and machine learning to read and analyze signals from the brain.

The technology could have multiple applications: enabling paralyzed people to control a robotic limb; helping blind people to see with the aid of a camera connected to the visual processing areas of the brain; and aiding patients with seizure disorders.

“There’s unhealthy electrical activity in the brain that causes seizures. It’s very complicated datasets,” Greger said. “We can use AI to look for patterns, and then we can tell people when they’re going to have a seizure or they need to have more medication or something like that.

“AI is really good at searching through very complex datasets and looking for patterns that correlate or give you some knowledge about what’s happening with the pathology itself. A human can do it, but it just takes a human a long time.”

AI can also help with administrative tasks, thus allowing doctors to have more face time with their patients.

DeepScribe, an AI-powered medical scribe, uses machine learning and language processing to extract medical information from a phone conversation between a provider and patient and almost immediately produce a finished medical note that goes into a patient’s record. Typically, experts say, doctors spend more than three hours a day documenting conversations with their patients.

“AI is a really great tool for doctors to have at their disposal,” Greger said.

AI will be a central tenet of ASU Health, which includes a new medical school called the School of Medicine and Advanced Medical Engineering, the School of Technology for Public Health, the Health Observatory at ASU and the Medical Master’s Institute — as well as the existing College of Health Solutions and Edson College of Nursing and Health Innovation.

“AI will be used in almost every conceivable way imaginable,” Gabriel said. “It’s going to be interwoven into everything we teach. We want to be certain that the next generation of providers have all of those skills at hand in order to optimally improve health care.”

Gabriel said ASU Health will approach AI with a humanistic perspective, thus the creation of the AI + Center of Patient Stories. ASU Health will use reporters and students in the Walter Cronkite School of Journalism and Mass Communication to engage with patients and write their stories.

The center will then use AI applications like virtual reality, augmented reality or real-time mixed reality to digitally enhance the stories.

“We’re trying to create not just a video for them to watch, but an immersive educational experience for students to help build empathy, help build understanding and help them build the connection I think they need to really be able to care for those patients and populations,” Gabriel said. “We really want to use technology to enhance the humanistic aspects of health care.”

ASU Health also will create an AI + Medical Suite of the Future. Gabriel described it as a patient care setting where AI will be used to create information that is packaged in a way that patients or their loved ones can access it and “understand what’s going on in a much deeper level.” The suite, Gabriel said, could include an “AI coach” that can answer questions.

“There are lots and lots of studies that show a patient will have a conversation in a doctor’s office, but even if that conversation is taped, the patient will only retain a small part of it, either because the doctor didn’t explain it well or it’s an emotionally charged situation,” Gabriel said. “Or they’ll go home, and a loved one will say, ‘So, what did the doctor say?’

“That happens every day of the week. But these (AI) tools give us the opportunity to change that structure. It’s about bringing all of the curated information together from that patient encounter in a way that optimizes their health outcome.”

Across ASU, faculty members are working with AI to improve patient care.

Thurmon Lockhart, a professor in the School of Biological and Health Systems Engineering, has developed a wearable device that goes across a patient’s sternum and measures body posture as well as arm and leg movements in real time.

“Traditional fall-risk assessments for seniors don’t always target specific types of risk, like muscle weakness or gait stability,” Lockhart said in a previously published ASU Thrive story.

When the risk of falling is deemed high, a smartphone app called the Lockhart Monitor can alert the user or caregiver.

Visar Berisha, the associate dean of research and commercialization in the Ira A. Fulton Schools of Engineering, is working with Julie Liss, an associate dean and professor in the College of Health Solutions, to develop AI models that analyze a person’s words and speech patterns.

Those patterns, Berisha said, can help determine whether a patient may suffer from neurological conditions like Alzheimer’s, Parkinson’s or amyotrophic lateral sclerosis, also known as Lou Gehrig's disease.

“A lot of that analysis has been done manually in the sense that patients would come to a lab, record samples, and then clinicians would listen for different types of changes in the speech signal that were indicative of the underlying condition,” Berisha said. “You needed to be an expert, have lots of training and a lot of time.”

Berisha said he and Liss have been working for more than a decade on an AI model that would automate the process by having patients download a mobile app on their personal devices and then provide speech samples for analysis.

“It scales it in a way that wasn’t possible before,” he said.

In addition, Berisha said, AI might be able to notice subtle changes in a person’s speech pattern that a clinician might not spot.

Patel said the AI “revolution” in health care isn’t something that will happen in five, 10 or even 20 years.

“Just because of the way the technology has improved, I think we’re very near to getting those exponential benefits,” he said.

Greger predicted AI will be omnipresent in the medical field.

“It’s going to be everywhere,” he said. “It’s just going to be this thing around that everybody uses. And it will have very specific applications. The radiologists will use it for image processing. The neurologists will use it to process the signals that come out of the brain and help them identify diseases. It’ll be a tool that’s very widely used. Just like the computer or car is today.”

Not all health care experts are as certain of AI’s immediate impact, though.

“I don’t know that the rate of change is going to be very fast,” Berisha said. “There are some structural complexities in the American health care system that make it really difficult to introduce new tools in everyday care.”

A 2019 paper available through the National Library of Medicine detailed those difficulties. The paper noted that health care decisions have been made almost exclusively by humans, and the use of smart machines could raise issues of accountability, permission, transparency and privacy.

The paper, titled “The Potential for Artificial Intelligence in Healthcare,” also questioned whether health care providers will be able to adequately explain to a patient how deep learning algorithms led to, say, a cancer diagnosis. In turn, that could impact the doctor-patient relationship.

There’s also a question of accuracy within AI diagnoses, according to the paper. Machine learning systems could be subject to algorithmic bias and predict a greater likelihood of disease on the basis of gender or race when those aren’t causal factors.

Berisha said health care professionals may be reluctant to turn to AI for simpler reasons: time and money. He said overburdened care providers who only have “seven minutes to spend” with a patient may decide they don’t have the time to evaluate information they’re not certain will help with diagnosis or treatment.

In addition, he said, the cost of AI, plus the hundreds of applications that will be available, may dissuade providers.

“I agree it’s going to be transformational,” he said. “I just think it’ll take a while.”

AI is everywhere ... now what?

Artificial intelligence isn't just handy for creating images like the above — it has implications in an increasingly broad range of fields, from health to education to saving the planet.

Explore the ways in which ASU faculty are thinking about and using AI in their work:

- Enhancing education: ASU experts explain how artificial intelligence can help teachers, but training and access is key.

- A more sustainable future: Artificial intelligence is helping researchers find solutions to urgent worldwide issues.

- Advancing health care: AI has the potential to accelerate diagnoses and enable earlier and possibly life-saving treatment.

- The ethical costs: Issues around privacy, bias, surveillance and even extinction are raised with advancements in AI.

- Expert Q&As: Read smart minds' thoughts on everything from food security to the power grid to the legal system on our special project page .


U.S. Food and Drug Administration


Artificial Intelligence and Machine Learning in Software as a Medical Device

Update: March 15, 2024

Artificial Intelligence and Medical Products: How CBER, CDER, CDRH, and OCP are Working Together

The U.S. Food and Drug Administration (FDA) issued "Artificial Intelligence and Medical Products: How CBER, CDER, CDRH, and OCP are Working Together," which outlines the agency's commitment and cross-center collaboration to protect public health while fostering responsible and ethical medical product innovation through artificial intelligence.


Artificial intelligence (AI) and machine learning (ML) technologies have the potential to transform health care by deriving new and important insights from the vast amount of data generated during the delivery of health care every day. Medical device manufacturers are using these technologies to innovate their products to better assist health care providers and improve patient care. The complex and dynamic processes involved in the development, deployment, use, and maintenance of AI technologies benefit from careful management throughout the medical product life cycle.

On this page:

- What is artificial intelligence and machine learning?

- How are artificial intelligence and machine learning (AI/ML) transforming medical devices?

- How is the FDA considering regulation of artificial intelligence and machine learning medical devices?

- Additional resources

Artificial Intelligence is a machine-based system that can, for a given set of human-defined objectives, make predictions, recommendations, or decisions influencing real or virtual environments. Artificial intelligence systems use machine- and human-based inputs to perceive real and virtual environments; abstract such perceptions into models through analysis in an automated manner; and use model inference to formulate options for information or action.

Machine Learning is a set of techniques that can be used to train AI algorithms to improve performance at a task based on data.

Some real-world examples of artificial intelligence and machine learning technologies include:

- An imaging system that uses algorithms to give diagnostic information for skin cancer in patients.

- A smart sensor device that estimates the probability of a heart attack.

Additional information can be found at Safe, Secure, and Trustworthy Development and Use of Artificial Intelligence.

AI/ML technologies have the potential to transform health care by deriving new and important insights from the vast amount of data generated during the delivery of health care every day. Medical device manufacturers are using these technologies to innovate their products to better assist health care providers and improve patient care. One of the greatest benefits of AI/ML in software resides in its ability to learn from real-world use and experience, and its capability to improve its performance.

The FDA reviews medical devices through an appropriate premarket pathway, such as premarket clearance (510(k)), De Novo classification, or premarket approval. The FDA may also review and clear modifications to medical devices, including software as a medical device, depending on the significance of the modification or the risk it poses to patients. See the current FDA guidance on the risk-based approach for 510(k) software modifications.

The FDA's traditional paradigm of medical device regulation was not designed for adaptive artificial intelligence and machine learning technologies. Many changes to artificial intelligence and machine learning-driven devices may need a premarket review.

On April 2, 2019, the FDA published a discussion paper, "Proposed Regulatory Framework for Modifications to Artificial Intelligence/Machine Learning (AI/ML)-Based Software as a Medical Device (SaMD) - Discussion Paper and Request for Feedback," that describes a potential approach to premarket review for artificial intelligence and machine learning-driven software modifications.

In January 2021, the FDA published the "Artificial Intelligence and Machine Learning Software as a Medical Device Action Plan," or "AI/ML SaMD Action Plan." Consistent with the action plan, the FDA later issued the following documents:

- October 2021 - Good Machine Learning Practice for Medical Device Development: Guiding Principles

- April 2023 - Draft Guidance: Marketing Submission Recommendations for a Predetermined Change Control Plan for Artificial Intelligence/Machine Learning (AI/ML)-Enabled Device Software Functions

- October 2023 - Predetermined Change Control Plans for Machine Learning-Enabled Medical Devices: Guiding Principles

- June 2024 - Transparency for Machine Learning-Enabled Medical Devices: Guiding Principles

On March 15, 2024, the FDA published "Artificial Intelligence and Medical Products: How CBER, CDER, CDRH, and OCP are Working Together," which represents the FDA's coordinated approach to AI. This paper is intended to complement the "AI/ML SaMD Action Plan" and represents a commitment among the FDA's Center for Biologics Evaluation and Research (CBER), the Center for Drug Evaluation and Research (CDER), the Center for Devices and Radiological Health (CDRH), and the Office of Combination Products (OCP) to drive alignment and share learnings applicable to AI in medical products more broadly.

If you have questions about artificial intelligence, machine learning, or other digital health topics, ask a question about digital health regulatory policies.

- Artificial Intelligence and Machine Learning (AI/ML)-Enabled Medical Devices

- Artificial Intelligence Program: Research on AI/ML-Based Medical Devices

- Digital Health Research and Partnerships

- Artificial Intelligence and Medical Products


- Open access

- Published: 14 June 2023

Bias in AI-based models for medical applications: challenges and mitigation strategies

- Mirja Mittermaier (ORCID: orcid.org/0000-0003-0678-6676) 1, 2

- Marium M. Raza 3

- Joseph C. Kvedar (ORCID: orcid.org/0000-0002-7517-2291) 3

npj Digital Medicine volume 6, Article number: 113 (2023)


- Computational models

- Health policy

Artificial intelligence systems are increasingly being applied to healthcare. In surgery, AI applications hold promise as tools to predict surgical outcomes, assess technical skills, or guide surgeons intraoperatively via computer vision. On the other hand, AI systems can also suffer from bias, compounding existing inequities in socioeconomic status, race, ethnicity, religion, gender, disability, or sexual orientation. Bias particularly impacts disadvantaged populations, which can be subject to algorithmic predictions that are less accurate or underestimate the need for care. Thus, strategies for detecting and mitigating bias are pivotal for creating AI technology that is generalizable and fair. Here, we discuss a recent study that developed a new strategy to mitigate bias in surgical AI systems.

Bias in medical AI algorithms

Artificial intelligence (AI) technology is increasingly applied to healthcare, from AI-augmented clinical research to algorithms for image analysis or disease prediction. Specifically, within the field of surgery, AI applications hold promise as tools to predict surgical outcomes [1], aid surgeons via computer vision for intraoperative surgical navigation [2], and even as algorithms to assess technical skills and surgical performance [1, 3, 4, 5].

Kiyasseh et al. [4] highlight this potential application in their work deploying surgical AI systems (SAIS) on videos of robotic surgeries from three hospitals. They used SAIS to assess the skill level of surgeons completing multiple different surgical activities, including needle handling and needle driving. In applying this AI model, Kiyasseh et al. [4] found that it could reliably assess surgical performance but exhibited bias. The SAIS model showed an underskilling or overskilling bias at different rates across surgeon sub-cohorts. Underskilling was the AI model downgrading surgical performance erroneously, predicting a particular skill to be of lower quality than it actually was. Overskilling was the reverse: the AI model upgraded surgical performance erroneously, predicting a specific skill to be of higher quality than it was. Underskilling and overskilling were measured based on the negative and positive predictive values of the AI-based predictions, respectively.
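To make the two measures concrete, the following is a minimal sketch of how positive and negative predictive values could be computed per surgeon sub-cohort. It is not the code used by Kiyasseh et al.; the function name `predictive_values_by_cohort`, the record layout, and the toy data are assumptions made for illustration. Labels are assumed to be binary, with 1 meaning high skill and 0 meaning low skill, so a low negative predictive value in a cohort would point to underskilling and a low positive predictive value to overskilling.

```python
from collections import defaultdict


def predictive_values_by_cohort(records):
    """Return PPV and NPV for each cohort.

    records: iterable of (cohort, y_true, y_pred) tuples, where labels are
    1 (high skill) or 0 (low skill). This layout is a hypothetical choice.
    """
    counts = defaultdict(lambda: {"tp": 0, "fp": 0, "tn": 0, "fn": 0})
    for cohort, y_true, y_pred in records:
        c = counts[cohort]
        if y_pred == 1:
            c["tp" if y_true == 1 else "fp"] += 1
        else:
            c["tn" if y_true == 0 else "fn"] += 1

    results = {}
    for cohort, c in counts.items():
        predicted_pos = c["tp"] + c["fp"]
        predicted_neg = c["tn"] + c["fn"]
        results[cohort] = {
            # PPV: share of "high skill" predictions that were correct.
            "ppv": c["tp"] / predicted_pos if predicted_pos else None,
            # NPV: share of "low skill" predictions that were correct.
            "npv": c["tn"] / predicted_neg if predicted_neg else None,
        }
    return results


# Toy data (invented): two cohorts with four assessments each.
example = [
    ("A", 1, 1), ("A", 1, 1), ("A", 0, 1), ("A", 0, 0),
    ("B", 1, 1), ("B", 1, 0), ("B", 1, 0), ("B", 0, 0),
]
for cohort, values in predictive_values_by_cohort(example).items():
    print(cohort, values)
```

Comparing the per-cohort values side by side is what exposes a bias of the kind described above: in the toy data, cohort B has a much lower NPV than cohort A, meaning its "low skill" predictions are wrong more often.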

Strategies to mitigate bias

The issue of bias being exhibited, perpetuated, or even amplified by AI algorithms is an increasing concern within healthcare. Bias is usually defined as a difference in performance between subgroups for a predictive task [6, 7]. For example, an AI algorithm used for predicting future risk of breast cancer may suffer from a performance gap wherein Black patients are more likely to be incorrectly assigned as "low risk." Further, an algorithm trained on hospital data from German patients might not perform well in the USA, as patient populations, treatment strategies, or medications might differ. Similar cases have already been seen in healthcare systems [8]. There could be many different reasons for this performance gap. Bias can be generated across AI model development steps, including data collection/preparation, model development, model evaluation, and deployment in clinical settings [9]. In the breast cancer example, the algorithm may have been trained on data predominantly from white patients, or health records from Black patients may be less accessible. Additionally, there are likely underlying social inequalities in healthcare access and expenditures that impact how a model might be trained to predict risk [6, 10]. Regardless of the cause, the impact of an algorithm disproportionately assigning false negatives would include fewer follow-up scans and potentially more undiagnosed or untreated cancer cases, worsening health inequity for an already disadvantaged population. Thus, strategies to detect and mitigate bias will be pivotal to improving healthcare outcomes. Bias mitigation strategies may involve interventions such as pre-processing data through sampling before a model is built, in-processing by implementing mathematical approaches to incentivize a model to learn balanced predictions, and post-processing [11]. Further, as experts can be aware of biases specific to datasets, "keeping the human in the loop" can be another important strategy to mitigate bias.
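As an illustration of the definition above, the sketch below measures a subgroup performance gap as the difference in false-negative rates, and then shows one simple form of pre-processing through sampling: oversampling smaller groups until every group is equally represented. Both helpers (`false_negative_rate_by_group`, `oversample_to_balance`), the record layout, and the toy data are hypothetical and are not taken from the cited studies; random oversampling is only one of many possible sampling schemes.

```python
import random
from collections import Counter, defaultdict


def false_negative_rate_by_group(records):
    """records: iterable of (group, y_true, y_pred); 1 = high risk, 0 = low risk."""
    positives = Counter()
    false_negatives = Counter()
    for group, y_true, y_pred in records:
        if y_true == 1:
            positives[group] += 1
            if y_pred == 0:
                false_negatives[group] += 1
    # Groups with no true positives are omitted to avoid dividing by zero.
    return {g: false_negatives[g] / positives[g] for g in positives}


def oversample_to_balance(rows, group_of, seed=0):
    """Duplicate randomly chosen rows of smaller groups until all groups match the largest."""
    rng = random.Random(seed)
    by_group = defaultdict(list)
    for row in rows:
        by_group[group_of(row)].append(row)
    if not by_group:
        return []
    target = max(len(members) for members in by_group.values())
    balanced = []
    for members in by_group.values():
        balanced.extend(members)
        balanced.extend(rng.choices(members, k=target - len(members)))
    return balanced


# Toy predictions (invented): group Y receives more incorrect "low risk" labels.
predictions = [
    ("X", 1, 1), ("X", 1, 1), ("X", 1, 0), ("X", 0, 0),
    ("Y", 1, 0), ("Y", 1, 0), ("Y", 1, 1), ("Y", 0, 1),
]
rates = false_negative_rate_by_group(predictions)
print("false-negative rates:", rates)
print("gap:", max(rates.values()) - min(rates.values()))

# Toy training rows (invented): group Y is underrepresented before balancing.
training_rows = [("X", i) for i in range(6)] + [("Y", i) for i in range(2)]
balanced = oversample_to_balance(training_rows, group_of=lambda row: row[0])
print(Counter(row[0] for row in balanced))
```

Oversampling does not add new information about the underrepresented group, so it would normally be paired with re-measuring the gap on held-out data after retraining.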