New method developed to detect fake vaccines in supply chains

Research published this week and led by University of Oxford researchers describes a first-of-its-kind method capable of distinguishing between authentic and falsified vaccines by applying machine learning to mass spectral data. The method proved effective in differentiating between a range of authentic and ‘faked’ vaccines previously found to have entered supply chains.

Co-author Professor Nicole Zitzmann (Department of Biochemistry, University of Oxford) said: ‘This latest research will bring the world community one step closer to being able to tell apart falsified, ineffective vaccines from the real thing, making us all safer. It has been a tremendous collaborative effort, with everyone having this same important goal in mind.’

The results of the study provide a proof-of-concept method that could be scaled to address the urgent need for more effective global vaccine supply chain screening. A key benefit is that it uses clinical mass spectrometers already distributed globally for medical diagnostics.

The global population is increasingly reliant on vaccines to maintain population health, with billions of doses used annually in immunisation programs worldwide. The vast majority of vaccines are of excellent quality. However, a rise in substandard and falsified vaccines threatens global public health. Besides failing to protect against the disease for which they were intended, these can have serious health consequences, including death, and reduce confidence in vaccines. Unfortunately, there is currently no global infrastructure in place to monitor supply chains using screening methods developed to identify ineffective vaccines.

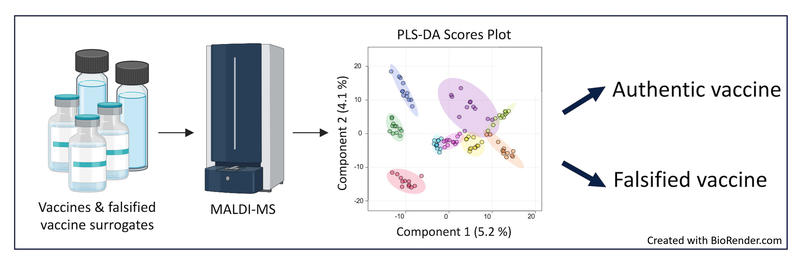

In this new study, researchers developed and validated a method that can distinguish authentic from falsified vaccines using instruments developed for identifying bacteria in hospital microbiology laboratories. The method is based on matrix-assisted laser desorption/ionisation-mass spectrometry (MALDI-MS), a technique that identifies the components of a sample by giving the constituent molecules a charge and then separating them according to their mass-to-charge ratio. The MALDI-MS analysis is then combined with open-source machine learning. This provides a reliable multi-component model which can differentiate authentic and falsified vaccines, and is not reliant on a single marker or chemical constituent.
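The release does not name the machine-learning model used. As a rough illustration of what a multi-component model means in practice, the sketch below bins a MALDI-MS peak list into fixed m/z windows, averages the binned spectra of authentic reference products into profiles, and scores an unknown sample by cosine similarity against every profile, so the decision draws on the whole spectrum rather than on one marker. Nearest-centroid matching is a deliberately simple stand-in, not the study's method; the peak-list format, bin settings, similarity threshold, function names and all spectra are hypothetical.

```python
import math
from typing import Dict, List, Tuple

# Hypothetical peak-list format: (m/z, intensity) pairs exported from a MALDI-MS run.
Peak = Tuple[float, float]


def bin_spectrum(peaks: List[Peak], mz_min: float = 1000.0,
                 mz_max: float = 20000.0, bin_width: float = 100.0) -> List[float]:
    """Sum intensities into fixed-width m/z bins and scale to unit total intensity.

    The m/z window and bin width are illustrative assumptions, not study settings.
    """
    n_bins = int(round((mz_max - mz_min) / bin_width))
    vec = [0.0] * n_bins
    for mz, intensity in peaks:
        if mz_min <= mz < mz_max:
            # Clamp guards against floating-point rounding at the upper edge.
            idx = min(int((mz - mz_min) // bin_width), n_bins - 1)
            vec[idx] += intensity
    total = sum(vec)
    return [v / total for v in vec] if total > 0 else vec


def cosine(a: List[float], b: List[float]) -> float:
    """Cosine similarity of two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a > 0 and norm_b > 0 else 0.0


def train_centroids(labelled: Dict[str, List[List[float]]]) -> Dict[str, List[float]]:
    """Average the binned spectra of each authentic reference product into one profile."""
    return {label: [sum(col) / len(vecs) for col in zip(*vecs)]
            for label, vecs in labelled.items()}


def classify(vec: List[float], centroids: Dict[str, List[float]],
             min_similarity: float = 0.9) -> Tuple[str, float]:
    """Return the closest reference product, or 'unrecognised' if nothing is close.

    Every bin contributes to the score, so the decision rests on the whole
    spectral profile rather than on a single marker peak.
    """
    label, sim = max(((lbl, cosine(vec, c)) for lbl, c in centroids.items()),
                     key=lambda pair: pair[1])
    return (label, sim) if sim >= min_similarity else ("unrecognised", sim)


if __name__ == "__main__":
    # Made-up spectra for two hypothetical authentic products.
    refs = {
        "vaccine_A": [bin_spectrum([(5000.0, 10.0), (8000.0, 5.0), (12000.0, 2.0)]),
                      bin_spectrum([(5010.0, 9.0), (8020.0, 6.0), (12050.0, 2.0)])],
        "vaccine_B": [bin_spectrum([(3000.0, 8.0), (6500.0, 8.0)]),
                      bin_spectrum([(3020.0, 7.0), (6550.0, 9.0)])],
    }
    centroids = train_centroids(refs)
    suspect = bin_spectrum([(5005.0, 11.0), (8010.0, 5.0), (12020.0, 3.0)])
    # Hypothetical stand-in for a saline-like falsified sample.
    saline_like = bin_spectrum([(1500.0, 4.0)])
    print(classify(suspect, centroids)[0])      # vaccine_A
    print(classify(saline_like, centroids)[0])  # unrecognised
```

A sample that matches no authentic profile closely enough is reported as unrecognised rather than forced into the nearest class, which suits screening, where an unfamiliar spectrum is itself a warning sign. A real pipeline would likely also need baseline correction, peak alignment and validation on many authentic and falsified samples.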

Co-author Professor Paul Newton (Centre for Tropical Medicine and Global Health, University of Oxford) said: ‘This innovative research provides compelling evidence that MALDI mass spectrometry techniques could be used in accessible systems for screening for vaccine falsification globally, especially in centres with hospital microbiology laboratories, enhancing public health and confidence in vaccines.’

The method successfully distinguished between a range of genuine vaccines – including for influenza (flu), hepatitis B virus, and meningococcal disease – and solutions commonly used in falsified vaccines, such as sodium chloride.

Professor James McCullagh, study co-leader and Professor of Biological Chemistry in the Department of Chemistry, University of Oxford said: ‘We are thrilled to see the method’s effectiveness and its potential for deployment into real-world vaccine authenticity screening. This is an important milestone for the Vaccine Identity Evaluation (VIE) consortium which focusses on the development and evaluation of innovative devices for detecting falsified and substandard vaccines, supported by multiple research partners including the World Health Organization (WHO), medicine regulatory authorities and vaccine manufacturers.’

The study ‘Using matrix assisted laser desorption ionisation mass spectrometry combined with machine learning for vaccine authenticity screening’ has been published in npj Vaccines.

This research was funded by two anonymous philanthropic families, the Oak Foundation, the Wellcome Trust and the World Health Organization (WHO).

The study was led by a team at the Mass Spectrometry Research Facility in the Department of Chemistry and the Department of Biochemistry, University of Oxford and was part of a research consortium involving teams from the Rutherford Appleton Laboratory of STFC at Harwell and the Departments of Chemistry, Biochemistry and Nuffield Department of Medicine Centre for Global Health Research at the University of Oxford.


Scientists develop new method to detect fake vaccines


- A team from the University of Oxford have developed a first-of-its-kind mass spectrometry method for vaccine authenticity screening using machine learning.

- The method repurposes clinical mass spectrometers already present in hospitals worldwide, making the approach feasible for global supply chain monitoring.

- The discovery offers an effective solution to the rise in substandard and counterfeit vaccines threatening public health.

Research published today in the Nature portfolio journal npj Vaccines describes a new method capable of distinguishing between authentic and falsified vaccines using machine learning analysis of mass spectral data. The method proved effective in differentiating between a range of authentic and ‘faked’ vaccines previously found to have entered supply chains.

A key benefit of the novel method is that it uses clinical mass spectrometers already distributed globally for medical diagnostics, giving it the potential to address the urgent need for more effective global vaccine supply chain screening.

Professor James McCullagh, study co-leader and Professor of Biological Chemistry in the Department of Chemistry, said:

This method is the culmination of a number of years of collaborative research that has brought together scientists from multiple departments and divisions across the university with outside partners including Prof. Pavel Matousek at the Rutherford Appleton Laboratory at Harwell. Rebecca Clarke (former Part II student) and John Walsby-Tickle both played key roles in the method’s development in the Department of Chemistry.

"We are thrilled to see the method’s effectiveness and its potential for deployment into real-world vaccine authenticity screening. This is an important milestone for the Vaccine Identity Evaluation (VIE) consortium which focusses on the development and evaluation of innovative devices for detecting falsified and substandard vaccines, supported by multiple research partners including the World Health Organization (WHO), medicine regulatory authorities and vaccine manufacturers."


In this new study, researchers developed and validated a method that is able to distinguish authentic and falsified vaccines using instruments developed for identifying bacteria in hospital microbiology laboratories. The method is based on matrix-assisted laser desorption/ionisation-mass spectrometry (MALDI-MS), a technique used to identify the components of a sample by giving the constituent molecules a charge and then separating them. The MALDI-MS analysis is then combined with open-source machine learning. This provides a reliable multi-component model which can differentiate authentic and falsified vaccines, and is not reliant on a single marker or chemical constituent.

The method successfully distinguished between a range of genuine vaccines – including for influenza (flu), hepatitis B virus, and meningococcal disease – and solutions commonly used in falsified vaccines, such as sodium chloride. The results provide a proof-of-concept method that could be scaled to address the urgent need for global vaccine supply chain screening.

Co-author Professor Nicole Zitzmann (Professor of Virology in the Department of Biochemistry) said:

This latest research will bring the world community one step closer to being able to tell apart falsified, ineffective vaccines from the real thing, making us all safer. It has been a tremendous collaborative effort, with everyone having this same important goal in mind. Bevin Gangadharan, Tehmina Bharucha, Laura Gomez and Yohan Arman from the Department of Biochemistry all played key roles in co-developing the method.

Co-author Professor Paul Newton (Professor of Tropical Medicine at the Centre for Tropical Medicine and Global Health) said:

This innovative research provides compelling evidence that MALDI mass spectrometry techniques could be used in accessible systems for screening for vaccine falsification globally, especially in centres with hospital microbiology laboratories, enhancing public health and confidence in vaccines.

Read more in npj Vaccines: https://www.nature.com/articles/s41541-024-00946-5

Inset and banner images: John Walsby-Tickle (Mass Spectrometry Services Manager, Department of Chemistry Mass Spectrometry Research Facility) and Isabelle Legge (Research Assistant in the McCullagh Group, Department of Chemistry) using the MALDI-MS system for vaccine authenticity testing.


Science News

How to detect, resist and counter the flood of fake news.

Although most people are concerned about misinformation, few know how to spot a deceitful post

As a wave of misinformation threatens to drown us, researchers are coming up with ways for us to get our footing.

Brian Stauffer


By Alexandra Witze

May 6, 2021 at 6:00 am

From lies about election fraud to QAnon conspiracy theories and anti-vaccine falsehoods, misinformation is racing through our democracy. And it is dangerous.

Awash in bad information, people have swallowed hydroxychloroquine hoping the drug will protect them against COVID-19, even with no evidence that it helps (SN Online: 8/2/20). Others refuse to wear masks, contrary to the best public health advice available. In January, protestors disrupted a mass vaccination site in Los Angeles, blocking life-saving shots for hundreds of people. “COVID has opened everyone’s eyes to the dangers of health misinformation,” says cognitive scientist Briony Swire-Thompson of Northeastern University in Boston.

The pandemic has made clear that bad information can kill. And scientists are struggling to stem the tide of misinformation that threatens to drown society. The sheer volume of fake news, flooding across social media with little fact-checking to dam it, is taking an enormous toll on trust in basic institutions. In a December poll of 1,115 U.S. adults, by NPR and the research firm Ipsos, 83 percent said they were concerned about the spread of false information. Yet fewer than half were able to identify as false a QAnon conspiracy theory about pedophilic Satan worshippers trying to control politics and the media.

Scientists have been learning more about why and how people fall for bad information — and what we can do about it. Certain characteristics of social media posts help misinformation spread, new findings show. Other research suggests bad claims can be counteracted by giving accurate information to consumers at just the right time, or by subtly but effectively nudging people to pay attention to the accuracy of what they’re looking at. Such techniques involve small behavior changes that could add up to a significant bulwark against the onslaught of fake news.

Misinformation is tough to fight, in part because it spreads for all sorts of reasons. Sometimes it’s bad actors churning out fake-news content in a quest for internet clicks and advertising revenue, as with “troll farms” in Macedonia that generated hoax political stories during the 2016 U.S. presidential election. Other times, the recipients of misinformation are driving its spread.

Some people unwittingly share misinformation on social media and elsewhere simply because they find it surprising or interesting. Another factor is the format in which the misinformation is presented: text, audio or video. Of these, video tends to be perceived as the most credible, according to research by S. Shyam Sundar, an expert on the psychology of messaging at Penn State. He and colleagues decided to study this after a series of murders in India, beginning in 2017, that followed the circulation on WhatsApp of a video purported to show a child abduction. (It was, in reality, a distorted clip of a public awareness campaign video from Pakistan.)

Sundar recently showed 180 participants in India audio, text and video versions of three fake-news stories as WhatsApp messages, with research funding from WhatsApp. The video stories were assessed as the most credible and most likely to be shared by respondents with lower levels of knowledge on the topic of the story. “Seeing is believing,” Sundar says.

Video sells

WhatsApp users looked at three versions of a story that falsely claimed that rice was being made out of plastic: text, audio, or a video showing a man feeding plastic sheets into a machine. Participants tended to rate the video version as more credible than the audio or text versions. The effect diminished for users who were highly involved with the topic of the false story, suggesting that video is a particularly compelling medium for those who may not be knowledgeable on the topic at hand. (Chart: Perceived credibility of a message based on format and issue involvement.)

The findings, in press at the Journal of Computer-Mediated Communication, suggest several ways to fight fake news, he says. For instance, social media companies could prioritize user complaints about misinformation that includes video over complaints about text-only posts. And media-literacy efforts might focus on educating people that videos can be highly deceptive. “People should know they are more gullible to misinformation when they see something in video form,” Sundar says. That’s especially important with the rise of deepfake technologies that feature false but visually convincing videos (SN: 9/15/18, p. 12).

One of the most insidious problems with fake news is how easily it lodges itself in our brains and how hard it is to dislodge once it’s there. We’re constantly deluged with information, and our minds use cognitive shortcuts to figure out what to retain and what to let go, says Sara Yeo, a science-communication expert at the University of Utah in Salt Lake City. “Sometimes that information is aligned with the values that we hold, which makes us more likely to accept it,” she says. That means people continually accept information that aligns with what they already believe, further insulating them in self-reinforcing bubbles.


Compounding the problem is that people can process the facts of a message properly while misunderstanding its gist because of the influence of their emotions and values, psychologist Valerie Reyna of Cornell University wrote in 2020 in Proceedings of the National Academy of Sciences.

Thanks to new insights like these, psychologists and cognitive scientists are developing tools that people can use to battle misinformation before it arrives, or that prompt them to think more deeply about the information they are consuming.

One such approach is to “prebunk” beforehand rather than debunk after the fact. In 2017, Sander van der Linden, a social psychologist at the University of Cambridge, and colleagues found that presenting information about a petition that denied the reality of climate science following true information about climate change canceled any benefit of receiving the true information. Simply mentioning the misinformation undermined people’s understanding of what was true.

That got van der Linden thinking: Would giving people other relevant information before giving them the misinformation be helpful? In the climate change example, this meant telling people ahead of time that “Charles Darwin” and “members of the Spice Girls” were among the false signatories to the petition. This advance knowledge helped people resist the bad information they were then exposed to and retain the message of the scientific consensus on climate change.

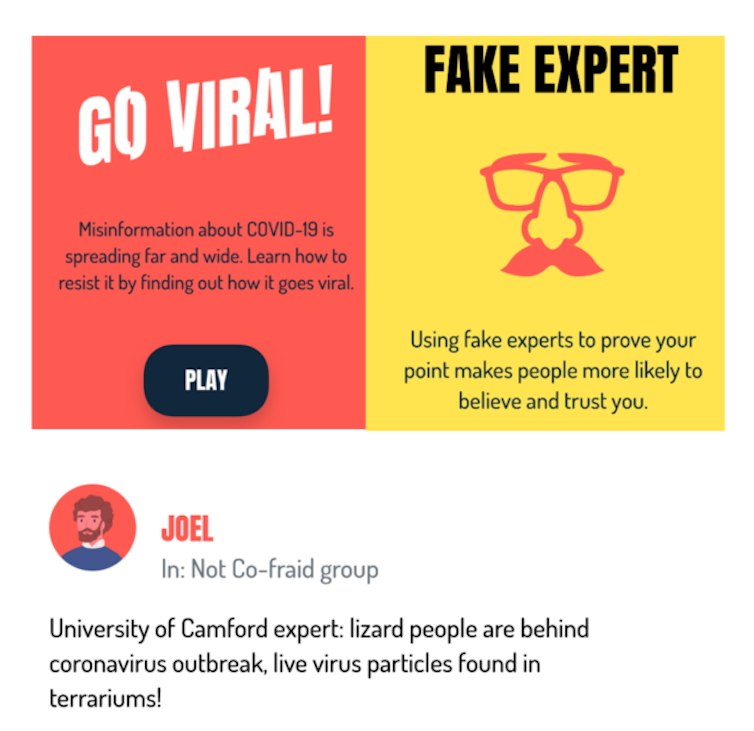

Here’s a very 2021 metaphor: Think of misinformation as a virus, and prebunking as a weakened dose of that virus. Prebunking becomes a vaccine that allows people to build up antibodies to bad information. To broaden this beyond climate change, and to give people tools to recognize and battle misinformation more broadly, van der Linden and colleagues came up with a game, Bad News, to test the effectiveness of prebunking. The results were so promising that the team developed a COVID-19 version of the game, called GO VIRAL! Early results suggest that playing it helps people better recognize pandemic-related misinformation.

Take a breath

Sometimes it doesn’t take very much of an intervention to make a difference. Sometimes it’s just a matter of getting people to stop and think for a moment about what they’re doing, says Gordon Pennycook, a social psychologist at the University of Regina in Canada.

In one 2019 study, Pennycook and David Rand, a cognitive scientist now at MIT, tested real news headlines and partisan fake headlines, such as “Pennsylvania federal court grants legal authority to REMOVE TRUMP after Russian meddling,” with nearly 3,500 participants. The researchers also tested participants’ analytical reasoning skills. People who scored higher on the analytical tests were less likely to identify fake news headlines as accurate, no matter their political affiliation. In other words, lazy thinking rather than political bias may drive people’s susceptibility to fake news, Pennycook and Rand reported in Cognition .

When it comes to COVID-19, however, political polarization does spill over into people’s behavior. In a working paper first posted online April 14, 2020, at PsyArXiv.org, Pennycook and colleagues describe findings that political polarization, especially in the United States with its contrasting media ecosystems, can overwhelm people’s reasoning skills when it comes to taking protective actions, such as wearing masks.

Inattention plays a major role in the spread of misinformation, Pennycook argues. Fortunately, that suggests some simple ways to intervene, to “nudge” the concept of accuracy into people’s minds, helping them resist misinformation. “It’s basically critical thinking training, but in a very light form,” he says. “We have to stop shutting off our brains so much.”

Push in the right direction

Nudging Twitter users to think about the accuracy of a nonpolitical headline resulted in users temporarily sharing more information from more trustworthy media outlets (blue dots toward the right) and less from less trustworthy outlets (blue dots toward the left). Dot size is proportional to the number of tweets that link to that website prior to the accuracy nudge. (Chart: Effect of an accuracy nudge on news sharing.)

With nearly 5,400 people who previously tweeted links to articles from two sites known for posting misinformation — Breitbart and InfoWars — Pennycook, Rand and colleagues used innocuous-sounding Twitter accounts to send direct messages with a seemingly random question about the accuracy of a nonpolitical news headline. Then the scientists tracked how often the people shared links from sites of high-quality information versus those known for low-quality information, as rated by professional fact-checkers, for the next 24 hours.

On average, people shared higher-quality information after the intervention than before. It’s a simple nudge with simple results, Pennycook acknowledges, but the work, reported online March 17 in Nature, suggests that very basic reminders about accuracy can have a subtle but noticeable effect.

For debunking, timing can be everything. Tagging headlines as “true” or “false” after presenting them helped people remember whether the information was accurate a week later, compared with tagging before or at the moment the information was presented, Nadia Brashier, a cognitive psychologist at Harvard University, reported with Pennycook, Rand and political scientist Adam Berinsky of MIT in February in Proceedings of the National Academy of Sciences.

How to debunk

Debunking bad information is challenging, especially if you’re fighting with a cranky family member on Facebook. Here are some tips from misinformation researchers:

- Arm yourself with media-literacy skills, at sites such as the News Literacy Project (newslit.org), to better understand how to spot hoax videos and stories.

- Don’t stigmatize people for holding inaccurate beliefs. Show empathy and respect, or you’re more likely to alienate your audience than successfully share accurate information.

- Translate complicated but true ideas into simple messages that are easy to grasp. Videos, graphics and other visual aids can help.

- When possible, once you provide a factual alternative to the misinformation, explain the underlying fallacies (such as cherry-picking information, a common tactic of climate change deniers).

- Mobilize as soon as possible when you see misinformation being shared on social media. If you see something, say something.

Prebunking still has value, they note. But providing a quick and simple fact-check after someone reads a headline can be helpful, particularly on social media platforms where people often mindlessly scroll through posts.

Social media companies have taken some steps to fight misinformation spread on their platforms, with mixed results. Twitter’s crowdsourced fact-checking program, Birdwatch, launched as a beta test in January, has already run into trouble with the poor quality of user-flagging. And Facebook has struggled to effectively combat misinformation about COVID-19 vaccines on its platform.

Misinformation researchers have recently called for social media companies to share more of their data so that scientists can better track the spread of online misinformation. Such research can be done without violating users’ privacy, for instance by aggregating information or asking users to actively consent to research studies.

Much of the work to date on misinformation’s spread has used public data from Twitter because it is easily searchable, but platforms such as Facebook have many more users and much more data. Some social media companies do collaborate with outside researchers to study the dynamics of fake news, but much more remains to be done to inoculate the public against false information.

“Ultimately,” van der Linden says, “we’re trying to answer the question: What percentage of the population needs to be vaccinated in order to have herd immunity against misinformation?”


Reexamining Misinformation: How Unflagged, Factual Content Drives Vaccine Hesitancy

Research from the Computational Social Science Lab finds that factual, vaccine-skeptical content on Facebook has a greater overall effect than “fake news,” discouraging millions from the COVID-19 shot.

By Ian Scheffler, Penn Engineering

What threatens public health more, a deliberately false Facebook post about tracking microchips in the COVID-19 vaccine that is flagged as misinformation, or an unflagged, factual article about the rare case of a young, healthy person who died after receiving the vaccine?

According to Duncan J. Watts, Stevens University Professor in Computer and Information Science at Penn Engineering and Director of the Computational Social Science (CSS) Lab , along with David G. Rand, Erwin H. Schell Professor at MIT Sloan School of Management, and Jennifer Allen, 2024 MIT Sloan School of Management Ph.D. graduate and incoming CSS postdoctoral fellow, the latter is much more damaging. “The misinformation flagged by fact-checkers was 46 times less impactful than the unflagged content that nonetheless encouraged vaccine skepticism,” they conclude in a new paper in Science.

Historically, research on “fake news” has focused almost exclusively on deliberately false or misleading content, on the theory that such content is much more likely to shape human behavior. But, as Allen points out, “When you actually look at the stories people encounter in their day-to-day information diets, fake news is a minuscule percentage. What people are seeing is either no news at all or mainstream media.”

“Since the 2016 U.S. presidential election, many thousands of papers have been published about the dangers of false information propagating on social media,” says Watts. “But what this literature has almost universally overlooked is the related danger of information that is merely biased. That’s what we look at here in the context of COVID vaccines.”

In the study, Watts, one of the paper’s senior authors, and Allen, the paper’s first author, used thousands of survey results and AI to estimate the impact of more than 13,000 individual Facebook posts. “Our methodology allows us to estimate the effect of each piece of content on Facebook,” says Allen. “What makes our paper really unique is that it allows us to break open Facebook and actually understand what types of content are driving misinformed-ness.”

One of the paper’s key findings is that “fake news,” or articles flagged as misinformation by professional fact-checkers, has a much smaller overall effect on vaccine hesitancy than unflagged stories that the researchers describe as “vaccine-skeptical,” many of which focus on statistical anomalies that suggest that COVID-19 vaccines are dangerous.

“Obviously, people are misinformed,” says Allen, pointing to the low vaccination rates among U.S. adults, in particular for the COVID-19 booster vaccine, “but it doesn’t seem like fake news is doing it.” One of the most viewed URLs on Facebook during the time period covered by the study, at the height of the pandemic, for instance, was a true story in a reputable newspaper about a doctor who happened to die shortly after receiving the COVID-19 vaccine.

That story racked up tens of millions of views on the platform, multiples of the combined number of views of all COVID-19-related URLs that Facebook flagged as misinformation during the time period covered by the study. “Vaccine-skeptical content that’s not being flagged by Facebook is potentially lowering users’ intentions to get vaccinated by 2.3 percentage points,” Allen says. “A back-of-the-envelope estimate suggests that translates to approximately 3 million people who might have gotten vaccinated had they not seen this content.”

Despite the fact that, in the survey results, fake news identified by fact-checkers proved more persuasive on an individual basis, so many more users were exposed to the factual, vaccine-skeptical articles with clickbait-style headlines that the overall impact of the latter outstripped that of the former.

“Even though misinformation, when people see it, can be more persuasive than factual content in the context of vaccine hesitancy,” says Allen, “it is seen so little that these accurate, ‘vaccine-skeptical’ stories dwarf the impact of outright false claims.”
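The tradeoff Allen describes can be written as a simple product. As a simplified framing (an assumption for illustration, not the paper's full estimation model), treat the total effect $I$ of a category of content as its average per-view persuasive effect $\Delta$ multiplied by the number of views $V$:

$$
I \approx \Delta \times V,
\qquad
\frac{I_{\text{unflagged}}}{I_{\text{flagged}}}
\approx
\frac{\Delta_{\text{unflagged}}}{\Delta_{\text{flagged}}}
\times
\frac{V_{\text{unflagged}}}{V_{\text{flagged}}}
$$

Even if flagged misinformation is more persuasive per view (the first ratio is below 1), the overall ratio exceeds 1 whenever $V_{\text{unflagged}}/V_{\text{flagged}} > \Delta_{\text{flagged}}/\Delta_{\text{unflagged}}$, that is, whenever the exposure gap outweighs the persuasiveness gap.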

As the researchers point out, being able to quantify the impact of misleading but factual stories points to a fundamental tension between free expression and combating misinformation, as Facebook would be unlikely to shut down mainstream publications. “Deciding how to weigh these competing values is an extremely challenging normative question with no straightforward solution,” the authors write in the paper.

Allen points to content moderation that involves the user community as one possible means to address this challenge. “Crowdsourcing fact-checking and moderation works surprisingly well,” she says. “That’s a potential, more democratic solution.”

With the 2024 U.S. presidential election on the horizon, Allen emphasizes the need for Americans to seriously consider these tradeoffs. “The most popular story on Facebook in the lead-up to the 2020 election was about military ballots found in the trash that were mostly votes for Donald Trump,” she notes. “That was a real story, but the headline did not mention that there were nine votes total, seven of them for Trump.”

This study was conducted at the University of Pennsylvania’s School of Engineering and Applied Science, the Annenberg School for Communication and the Wharton School, along with the Massachusetts Institute of Technology Sloan School of Management, and was supported by funding from Alain Rossmann.

This article originally appeared on the Penn Engineering Blog.


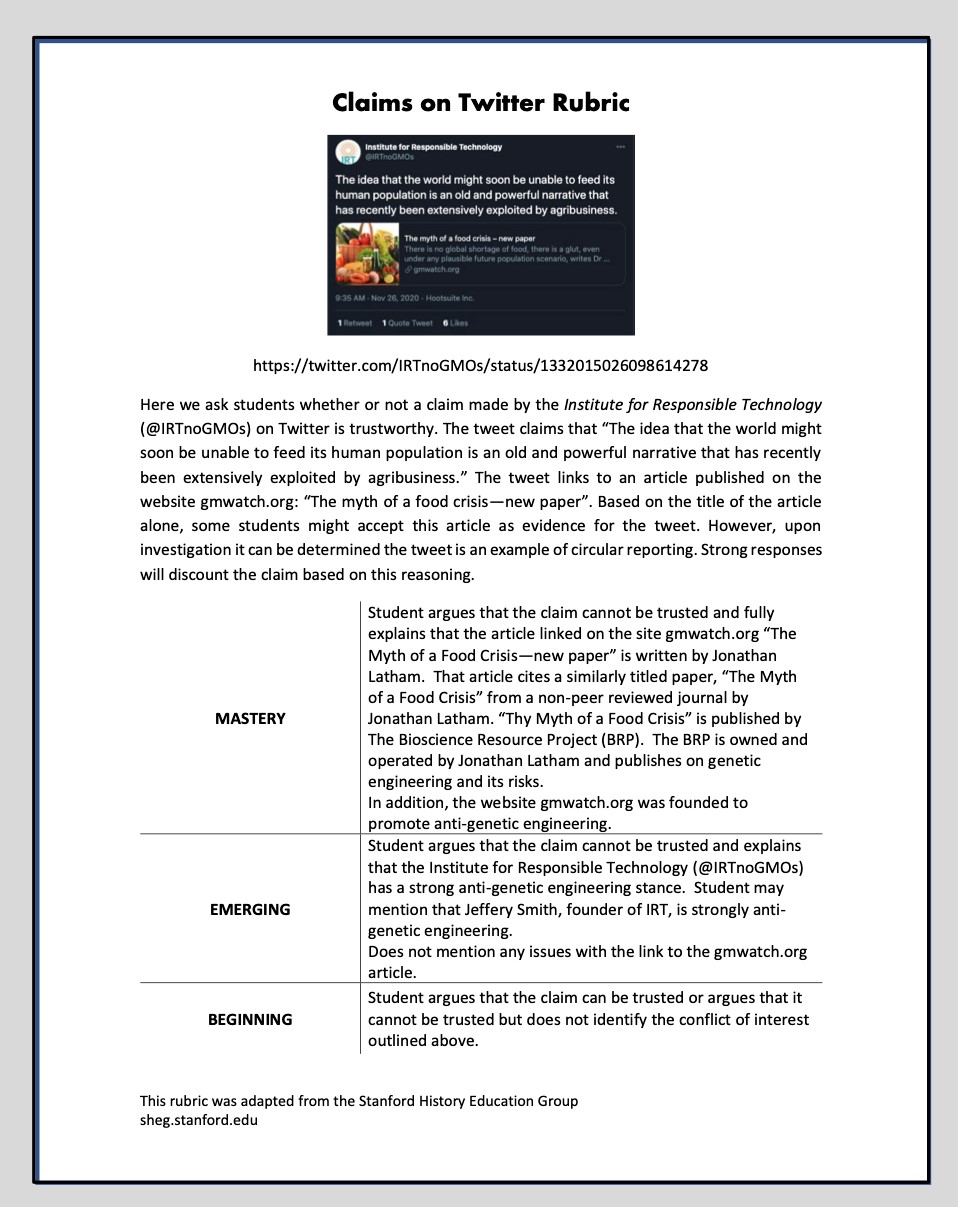

Fact-Checking in an Era of Fake News

A Template for a Lesson on Lateral Reading of Social Media Posts

Connected Science Learning May-June 2021 (Volume 3, Issue 3)

By Troy E. Hall, Jay Well, and Elizabeth Emery

As all science teachers know, the rate of scientific advancement is accelerating, far outpacing the ability of teachers or students to master it. Nevertheless, scientific understanding is crucial to address contemporary social and environmental challenges, from climate change to food supply to vaccines. Citizens must be able to interpret scientific claims presented in the media and online to make informed personal and political decisions. Informed decision-making requires scientific literacy, the ability to distinguish fact from fiction, and a willingness to engage in open-minded, productive discussions around contentious issues. Scientific literacy does not come naturally for most people; these skills need to be taught, practiced, and honed (Hodgin and Kahne 2018). Such scientific literacy skills are recognized specifically in the science and engineering practice of Obtaining, Evaluating, and Communicating Information described in the Next Generation Science Standards (NGSS; NGSS Lead States 2013). However, these skills can be difficult to integrate into lessons because, although these practices have been identified as important, it is not well understood how to teach them in the digital age.

This article describes a biology lesson we developed that incorporates a relatively new approach to teaching middle and high school students how to fact-check online information. This lesson emerged out of a partnership between school science teachers, an academic unit at Oregon State University (OSU), and OSU’s Science and Math Investigative Learning Experiences (SMILE) program. SMILE is a longstanding precollege program that increases underrepresented students’ access to and success in STEM (science, technology, engineering, and math) education and careers. For more than 30 years, the program has provided a range of educational activities, predominantly in rural areas, to help broaden underrepresented student groups’ participation in STEM and provide professional development resources to support teachers in meeting their students’ needs. Our lesson focuses on social media posts about genetic engineering (GE) of plants, but this promising approach to digital literacy can be adopted for other scientific topics and internet information sources.

Challenges in Curating Online Information Sources

Internet sites have expanded as sources of all types of information, including scientific research. Today, digital sources outcompete traditional news and information sources among the general public, including U.S. adolescents, who rely heavily on the internet for information (McGrew et al. 2019) and school assignments (Hinostroza et al. 2018). However, many internet sites have no gatekeepers to monitor the integrity of their content, and sophisticated producers can mask false or misleading claims as factual analysis (Wineburg and McGrew 2019). Many studies have shown that students lack the ability to adequately search for and assess online information (Hinostroza et al. 2018), which can leave them unprepared to think critically about issues raised in their classes and in society.

In the case of controversial socio-scientific issues like GE, social media serves as an outlet for both ardent proponents and objectors, and it can be difficult for even trained fact-checkers to locate and evaluate the quality of sources. In such cases, students can encounter biased or partial information, depending on how they search online. It is well-known that people gravitate toward information that resonates with their preexisting attitudes, a phenomenon known as confirmation bias (Sinatra and Lombardi 2020). Indeed, some research has shown that students judge claims that align with their prior views to be true, regardless of the actual validity of the claims (Kahne and Bowyer 2017). Adolescents trust favored search engines, often clicking on the first links that appear, unaware that such sites may contain sponsored content and unable to separate such content from objective facts (Breakstone et al. 2018; Hargittai et al. 2010; Walsh-Moorman, Pytash, and Ausperk 2020). Unless prompted to be more critical, students may rely on intuitive assessments of online information to judge content validity or the credibility of the source (Tandoc et al. 2018). Unfortunately, students may be unwilling to make an effort to critically evaluate content if they judge the task as having low stakes or being about something that does not personally interest them (Hinostroza et al. 2018).

Although partisan internet sites often portray an issue or technology like GE in black-or-white terms, it is rare for any significant issue of public concern to be such a simple matter. For example, GE has been used to create vaccines and insulin, which few would argue are bad. On the other hand, using GE technology to improve agricultural crops involves many complex environmental and ethical considerations; for example, GE can be used in applications to increase the global food supply but may promote herbicide resistance in weeds or allow modified genes to flow to non-GE crops. Given myriad controversial considerations, it is important to assess GE agricultural products individually and resist the tendency to engage students in lessons tasking them to evaluate GE agriculture as a single, monolithic entity.

Teaching Students to Become Fact-Checkers

In the face of such multifaceted issues, educators strive to shape students to become citizens and consumers who can carefully consider different dimensions of an issue, locate scientifically credible information, and critically evaluate both sources and content (Hodgin and Kahne 2018). Science teachers must spend adequate time cultivating these lifelong learning skills that today’s students will need in their adult lives. This is as much about learning how to learn (i.e., obtain, evaluate, and communicate information) as it is about mastering scientific content.

Considerable scientific study has identified the types of cues that signal the quality of an information source, such as the author’s credentials (Stadtler et al. 2016). However, the source is only one cue to the quality of information provided. In addition, one should evaluate the recency of information, look at the URL, evaluate the language used, and investigate the sponsorship of the site (Breakstone et al. 2018). Such items are often included in “checklist” approaches used to teach students how to evaluate online information. However, scholars have questioned the efficacy of checklists because internet sites promoting misinformation are becoming so prevalent and convincing that they pass these checklist tests (Fielding 2019; Sinatra and Lombardi 2020). Moreover, checklists do not teach students broader digital literacy skills, such as how different search terms generate different results. For example, “genetically modified organism” is a common search term that links to polarized information. However, “genetically engineered agriculture,” a related but less-common search term, links to less polarized information. This type of nuance is problematic for students who may rely on common search terms. Teachers need new strategies to address NGSS practices and give students a strong ability to evaluate the information they find when searching.

One promising alternative to assessing digital credibility with checklists is “lateral reading” (Wineburg and McGrew 2019; Walsh-Moorman et al. 2020). This technique, pioneered by the Stanford History Education Group (SHEG), is modeled after professional fact-checkers’ source evaluation strategies. Lateral reading involves validating unfamiliar sites by looking outside the site itself—using the power of web searches to cross-reference information in the unfamiliar site until its trustworthiness and credibility can be established. SHEG’s materials and evaluation have been developed for university students with a focus on social issues, as opposed to science, so there is an opportunity to refine them for different audiences and materials. Recently, Walsh-Moorman et al. (2020) called for more exploration of how educators could use lateral reading in middle schools, especially in ways that might be efficiently embedded in the curriculum. Our lesson adapts the process of lateral reading for science education with a K–12 audience.

In the following section, we describe this lesson, which was developed as one part of a larger curriculum for middle and high school science students on the science and social issues associated with GE agricultural applications. Our overall goal was to develop educational materials that encourage open-minded thinking about the breadth of social issues surrounding specific GE agricultural products, rather than understanding the basic science of GE or debating whether GE as a whole is good or bad. This particular lesson focuses on fact-checking information presented in social media using lateral reading.

The Fact-Checking Lesson

Lesson development

Knowing that GE is controversial, with multiple social, environmental, and economic dimensions, we invited middle and high school SMILE club teachers to participate in focus groups and surveys intended to understand their interest in and knowledge of teaching about GE, their self-assessed capacity and comfort to deliver this type of material, their students’ interests and prior attitudes about the topic, and the need for our material to connect to NGSS. Thirty-nine teachers from 26 schools completed surveys, and 16 high school teachers participated in four focus groups lasting approximately one hour each.

To our surprise, these assessments showed that teachers did not consider the controversial nature of GE to be a barrier to teaching this type of material. Additionally, their interest in teaching about the environmental, food, and economic aspects of GE was relatively high. However, based on their self-reports and a quizlike assessment, their knowledge about these associated aspects was moderate to low, suggesting that they would benefit from a tool to help students assess validity of information they find when studying these topics.

In addition to teacher surveys and focus groups, we examined the GE curriculum teachers were referencing and using at the time. Teachers were generally teaching about GE as an applied lesson connected to an introductory genetics unit. Commonly, GE curriculum involved a class debate where students argued for or against GE technology over one or two class periods. As preparation, students developed lists of pros and cons from digital media sources. Because students are generally not skilled at fact-checking, this often resulted in their lists containing misinformation that might not be validated by teachers until the actual debate, if at all. This had the effect of confirming misinformation in students’ minds.

We identified two main concerns about these existing lessons. First, they assume GE can productively be discussed as good or bad. However, some of the many agricultural applications have been shown to have few adverse impacts, while others have more significant ones. Students need to understand the differences among specific GE agricultural products, including their various environmental, societal, scientific, and economic considerations, to be able to have a productive debate about whether a specific GE product should be used. Students should not be encouraged to think about all GE products as involving the same considerations.

Second, many existing lessons are outdated and do not reflect current understandings of the nuances of GE agriculture. Links embedded in curricular materials quickly become outdated, as advances in GE are so rapid. Therefore, rather than using static materials that contain old links, students need to be able to identify contemporary information resources regarding specific GE agricultural products. In addition to building digital searching skills, this will enable them to develop meaningful lists of the pros and cons associated with a specific GE product.

Thus, through our review and consultation with teachers, it became clear that students need the skills to curate information about GE agriculture and teachers need tools to formatively assess their students’ information-gathering skills. This led us to build upon SHEG’s work on lateral reading in our lesson.

Traditionally, fact-checking lessons involved what is called “vertical” reading, in which students systematically explore and critique elements within a source. Many students are familiar with and have used checklists based on vertical reading to determine whether a piece of information is credible, such as assessing the source’s authority, purpose, accuracy, currency, and relevance. However, in the digital age, verifying a source through vertical reading can be quite difficult, as some internet sites are deliberately designed to be misleading. Additionally, now that most students can access information via the internet, they should not be restricted to vertical reading. Instead, current recommendations suggest teaching students to read laterally, that is, to examine other sources and triangulate findings. SHEG’s approach to this involves validating a target source using six steps: investigate the source’s author, perform keyword searches, verify information and quotations, research citations, look up organizations cited, and analyze sponsorship or ads (Walsh-Moorman et al. 2020).

Lesson overview

Our lesson promoted the basics of lateral reading in the context of topic-specific social media posts about GE agriculture. We drew from tweets, YouTube videos, and Facebook posts, because historical posts and pages are publicly available, easily accessible, and contain the types of elements students should learn to assess, such as the domain, the numbers of likes or retweets, and links to primary sources. The lesson has been designed to be completed in a single 60-minute class period but provides students lateral-reading skills they can build on in future units and lessons, regardless of the content area.

The lesson (see Supplemental Resources) begins with a classroom discussion about the term “fake news,” including where it comes from, its purpose, and its role in society. To aid this discussion, we provide resources for teachers to highlight how the process of developing news has changed over time, how advances in technology have made it easier for anyone to develop “news” or “user-created content,” and how fake news can quickly proliferate through social media networks.

This productive discussion about “fake news” encourages students to think about the importance of validating information and reflect on how they currently do this. At this point in the lesson, teachers introduce lateral reading as a strategy to validate sources of information. This introduction focuses on three key components: (1) the source, (2) the evidence, and (3) whether other reputable sources agree with the claims under scrutiny. Additionally, teachers highlight the importance of searching to confirm the information outside of the original source.

After introducing the basics of lateral reading, teachers use case studies we developed about specific GE agricultural products (Figure 1). In small groups, students review a case study, discuss the three lateral reading components, and determine whether the post is credible. If the students have access to the internet, they are encouraged to base their reasoning on information they found regarding each of the components, such as information about the author of the material. If students do not have access to an internet-connected device, they can describe the types of searches they would perform. Reconvening as a large group, teachers capture the students’ reasoning and categorize it into the key components of lateral reading. By doing this as a class, students can see the variety of ways the example source could be validated using the lateral reading strategy.

While lateral reading is often more accurate than vertical reading, it is less straightforward and often more cumbersome, requiring students to engage in complex reasoning and nuanced appraisals. Teachers and students need a way to assess their understanding and application of the skill of lateral reading as it develops. To assist in this, each case study has an associated rubric to evaluate competency as beginning, emerging, or mastery (Figure 2). For each skill level, an example of source validation reasoning is provided as a quick reference for teachers and students to assess and improve their lateral reading skills. These rubrics enable students to get quick feedback and provide opportunities to practice skills in an authentic way before searching for information on their own.

Once students have been introduced to lateral reading and the rubrics as a class, they work independently in small groups to complete similar evaluations of new topic-specific case studies from a variety of social media sources. Students assess each source’s validity and provide a short written analysis. Afterward, groups pair up and share their reasoning for each case study. At this time, teachers provide students the rubrics specific to these cases, so students can determine how they can improve their lateral-reading skills.

To debrief the lesson, groups are provided a list of discussion questions that focus on how they consume and produce information, their responsibilities in checking the validity of the information they use and share, and when they would use lateral reading. During this debrief, students reflect on their personal goals for validating sources and their skills in doing so.

Assessment of the lesson

Using SMILE’s statewide network of teachers, we provided professional development about this lesson to 17 middle school and 11 high school teachers, who then piloted it in their afterschool STEM clubs. This provided teachers a low-risk environment to experiment with the lesson and provide authentic feedback via teacher logs, ongoing professional development sessions, and personal conversations.

In a follow-up teacher workshop five months later, teachers reported that the lesson was well-received by their students. When paired with additional lessons about GE agriculture that required students to collect online information, teachers said that the lateral reading exercises led to more productive classroom discussions. This lesson was the most highly rated among the seven we provided in the GE agriculture unit; teachers reported that they planned to use it with other discussion-based science lessons in their classrooms. Further, teachers described how the lesson highlighted their students’ struggle to assess the accuracy of online information.

Despite the overall positive reaction to the lesson, some aspects of lateral reading were difficult for both students and teachers. Many of the teachers reported that they normally use vertical reading checklists to teach digital literacy skills—the same checklists that SHEG reports as problematic. Teachers were apprehensive about shifting away from them because the vertical reading checklists provide a concrete, straightforward approach to analyze a source. They tend to be easier for novice students to follow than the less-defined, more-nuanced skill of lateral reading. Teachers reported that lateral reading required a higher degree of critical reasoning and, if not scaffolded properly, some students became confused, frustrated, and gave up on the process.

The most success was obtained with tweets that were clearly true or false. In this context, students were able to demonstrate the validity of the source and provide reasoning to support their determination using one or two quick internet searches. However, it was more difficult for students to assess the validity of other social media posts with more nuanced misinformation that was harder to check. Additionally, reading laterally sometimes took students to scientific journal articles or other dense sources that provided contradictory claims. To validate the source and provide accurate reasoning, students needed considerably more time for reading or searching for other references. In these cases, students were less successful and often gave up on the process. Thus, it was clear from the feedback that this lesson is not a panacea and students need to practice the skills of lateral reading to be able to use them effectively and efficiently.

Fake news and scientific misinformation are rampant on the internet. Science education must therefore expand from teaching primarily about scientific content to teaching how to obtain, evaluate, and use scientific findings. Existing approaches—such as vertical reading or lessons based around materials curated at a single point in time—are outdated and inadequate. Students do most of their information gathering and communicating in online environments using a wide variety of sources. The lateral reading approach we described builds on recent recommendations and may be more suitable for addressing NGSS recommendations in the dynamic digital landscape.

Using this lesson framework allows teachers to build fact-checking skills among their students that can be used in future exercises, and it provides teachers with a way to formatively assess students’ skills. This assessment can be valuable when conducted before students use information from the internet in a debate exercise, and it allows for a more productive, accurate debate.

Our partnership with teachers was critical to the development and refinement of the lesson. It also revealed some unforeseen challenges. First, some of our assumptions were incorrect, such as our assumption that teachers would be reluctant to address a socially controversial topic. Other assumptions were more accurate; for example, teachers felt unprepared as content experts in this domain. The experienced STEM teachers provided feedback that was used to refine lessons over time.

While we see great benefit in teaching lateral reading, our experience suggests that it should be carefully planned, as it requires skills that many students have not yet developed. Thus, it is important to scaffold instruction to meet students’ needs. The initial lateral reading sources and assessments need to be content-specific, straightforward, and clear to help students understand the process and begin to build their skills. However, most socio-scientific issues are not straightforward, and lateral reading of online posts can prove challenging. This reveals a tension between the need to simplify a skill to teach to novices and the need to develop higher level, critical-thinking skills. Similar to how the NGSS recommends developing science and engineering practices among students over time, lateral reading is a skill that needs to be continually practiced for students to gain mastery.

To scaffold our lesson for science students, we recommend taking a long-term approach to skill progression over time. We suggest that teachers build students’ critical-thinking skills over an entire year through multiple applied lessons using lateral reading in which students curate information from a variety of internet sources. Initially, checklists could be used to introduce students to analyzing online sources. These early examples should be selected to illustrate checklists’ limitations and transition to using the lateral reading technique. We also suggest that teachers carefully select initial “case studies” that can be readily evaluated through lateral reading to help students self-assess their skill development. As students build this skill over the course of a school year, they can begin independently using lateral reading to critically evaluate online sources that they find on their own.

The lesson we developed can easily be applied to online information sources about many topics. Often, platforms such as Facebook, YouTube, or TikTok point to websites where additional information about the source or topic can be accessed. The goal of our lesson is for students to determine whether these are quality sources. Only after making that determination should they consume any of the information provided.

Research has shown that media literacy education can be effective (Hodgin and Kahne 2018), specifically in improving students’ abilities to judge the accuracy of online posts (Kahne and Bowyer 2017). In this article we have described the development of a lesson and approach based in contemporary recommendations for increasing media literacy among youth by teaching the critical-thinking skills of lateral reading.

Acknowledgments

We thank the National Science Foundation Plant Genome Research Program (IOS # 1546900) for support of this project. Any opinions, findings, and conclusions or recommendations expressed in this material are those of the authors and do not necessarily reflect the views of the National Science Foundation.

Troy E. Hall is Professor and Head of Oregon State University’s Forest Ecosystems and Society Department, Corvallis, Oregon. Jay Well is Associate Director of Precollege Programs and Science and Math Investigative Learning Experiences (SMILE) at Oregon State University, Corvallis, Oregon. Elizabeth Emery is Environmental Campaign Manager at the Association of Northwest Steelheaders, Portland, Oregon.

Citation: Hall, T.E., J. Well, and E. Emery. 2021. Fact-checking in an era of fake news: A template for a lesson on lateral reading of social media posts. Connected Science Learning 3 (3). https://www.nsta.org/connected-science-learning/connected-science-learning-may-june-2021/fact-checking-era-fake-news

Breakstone, J., S. McGrew, M. Smith, T. Ortega, and S. Wineburg. 2018. Teaching students to navigate the online landscape. Social Education 82 (4): 219–221.

Fielding, J.A. 2019. Rethinking CRAAP: Getting students thinking like fact-checkers in evaluating web sources. College and Research Libraries News 80 (11): 620–622.

Hargittai, E., L. Fullerton, E. Menchen-Trevino, and K.Y. Thomas. 2010. Trust online: Young adults' evaluation of web content. International Journal of Communication 4: 27.

Hinostroza, J.E., A. Ibieta, C. Labbé, and M.T. Soto. 2018. Browsing the internet to solve information problems: A study of students’ search actions and behaviours using a ‘think aloud’ protocol. Education and Information Technologies 23 (5): 1933–1953.

Hodgin, E., and J. Kahne. 2018. Misinformation in the information age: What teachers can do to support students. Social Education 82 (4): 208–212.

Kahne, J., and B. Bowyer. 2017. Educating for democracy in a partisan age: Confronting the challenges of motivated reasoning and misinformation. American Educational Research Journal 54: 3–34.

McGrew, S., J. Breakstone, T. Ortega, M. Smith, and S. Wineburg. 2018. Can students evaluate online sources? Learning from assessments of civic online reasoning. Theory and Research in Social Education 46 (2): 165–193.

McGrew, S., M. Smith, J. Breakstone, T. Ortega, and S. Wineburg. 2019. Improving university students’ web savvy: An intervention study. British Journal of Educational Psychology 89 (3): 485–500.

NGSS Lead States. 2013. Next Generation Science Standards: For States, By States. Washington, DC: The National Academies Press. Retrieved from http://www.nextgenscience.org/

Sinatra, G.M., and D. Lombardi. 2020. Evaluating sources of scientific evidence and claims in the post-truth era may require reappraising plausibility judgments. Educational Psychologist (online) 1–12.

Stadtler, M., L. Scharrer, M. Macedo-Rouet, J.F. Rouet, and R. Bromme. 2016. Improving vocational students’ consideration of source information when deciding about science controversies. Reading and Writing 29 (4): 705–729.

Tandoc Jr., E.C., R. Ling, O. Westlund, A. Duffy, D. Goh, and L. Zheng Wei. 2018. Audiences’ acts of authentication in the age of fake news: A conceptual framework. New Media and Society 20 (8): 2745–2763.

Walsh-Moorman, E.A., K.E. Pytash, and M. Ausperk. 2020. Naming the moves: Using lateral reading to support students’ evaluation of digital sources. Middle School Journal 51 (5): 29–34.

Wineburg, S., and S. McGrew. 2019. Lateral reading and the nature of expertise: Reading less and learning more when evaluating digital information. Teachers College Record 121 (11): 1–39.

Defusing Fake News: New Stevens Research Points the Way

To address the alarming rise in misinformation, new strategies and AI for truthfulness emerge from Stevens research

Social media is adrift in a daily sea of misinformation about health, elections, politics and war

Russian-government media and social media outlets have long saturated the airwaves with false claims, most recently about Ukrainian terrorism, ethnic cleansing and military aggression. U.S. infectious disease institute director Anthony Fauci laments the spread of COVID-19 vaccine misinformation with "no basis," while musicians including Neil Young recently removed entire song catalogues from the streaming service Spotify when the platform would not remove a podcast spreading health misinformation.

But new research from Stevens Institute of Technology faculty, students and alumni — working with MIT, Penn and others to study Congress, analyze social media and develop fake news-spotting artificial intelligence — is giving new hope in the fight for facts.

Their work is pointing the way to novel technologies and strategies that can successfully defuse false information.

Repeating false claims can help disprove them

Smarter strategies for confronting false claims can make a real difference. That's the conclusion of a research team of communications, marketing and data science experts, writing in the Harvard Kennedy School Misinformation Review.

Stevens business assistant professor Jingyi Sun and colleagues at institutions including the University of Pennsylvania, University of Southern California, Michigan State University and the University of Florida recently analyzed thousands of Facebook posts published between March 2020 and March 2021 by nearly 2,000 public accounts focused specifically on COVID vaccine information.

Roughly half of the posts studied included false information about COVID vaccines, while the other half were chiefly efforts to fact-check, dispute or debunk false vaccine claims. The posts received millions of total engagements in the Facebook community.

There was a significant quantity of false vaccine information shared, discussed and debated, the team found — and the groups publishing the most misinformation held several commonalities, including being very well organized.

"The accounts with the largest number of connections, and that were connected with the most diverse contacts, were fake news accounts, Trump-supporting groups, and anti-vaccine groups," wrote the authors.

The team then examined the specific discussions, threads, interactions and reactions to identify strategies that seemed to make a difference in viewers' perceptions of and engagement with health misinformation.

Interestingly, when fact-checkers weighed in on discussions to dispute or debate false vaccine information, repeating that false information while disputing it appeared to engage readers more effectively.

That stands in contrast to conventional wisdom that false claims should not be repeated when debunking them.

"Fact checkers’ posts that repeated the misinformation were significantly more likely to receive comments than the posts about misinformation," wrote the study authors. "This finding offers some evidence that fact-checking can be more effective in triggering engagement when it includes the original misinformation."

The absence of any reference to an actual false claim being discussed, on the other hand, produced negative emotions in audiences reacting to fact-checking posts.

"Fact-checking without repeating the original misinformation are most likely to trigger sad reactions," the authors wrote.

Fact-checks that repeat the false claim are therefore probably the more effective messaging strategy, the group concludes.

"The benefits [of repeating a false claim while disputing it] may outweigh the costs," they wrote.

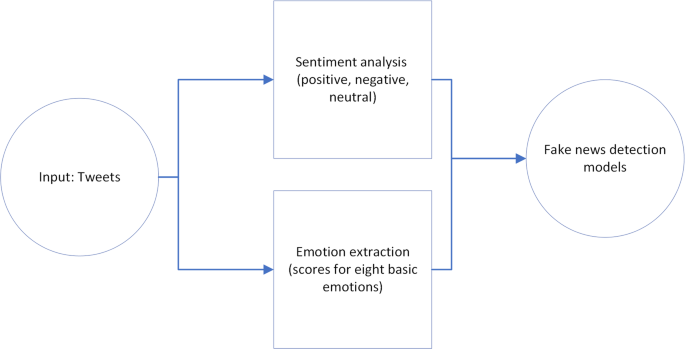

Leveraging AI to spot false vaccine information

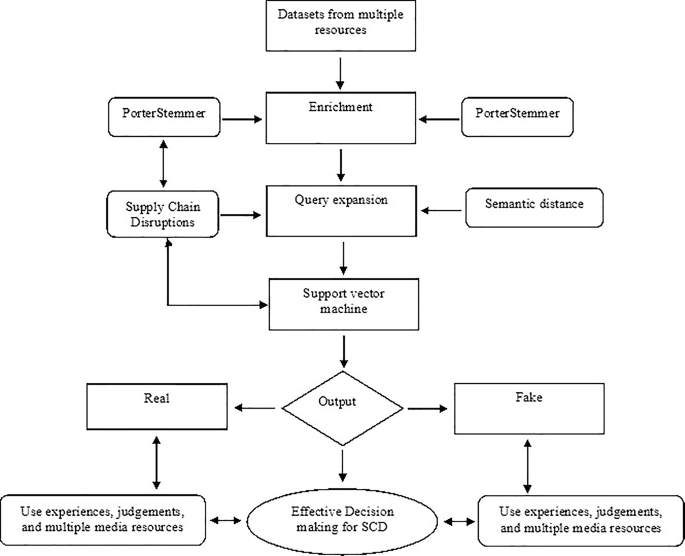

Another Stevens team is hard at work designing an experimental artificial intelligence-powered application that appears to detect false COVID-19 information dispersed via social media with a very high degree of accuracy.

In early tests, the system has been nearly 90% successful at separating COVID-19 vaccine fact from fiction on social media.

"We urgently need new tools to help people find information they can trust," explains electrical and computer engineering professor K.P. "Suba" Subbalakshmi, an AI expert in the Stevens Institute for Artificial Intelligence (SIAI).

To create one such experimental tool, Subbalakshmi and graduate students Mingxuan Chen and Xingqiao Chu first analyzed more than 2,500 public news stories about COVID-19 vaccines published over a period of 15 months during the initial stages of the pandemic, scoring each for credibility and truthfulness.

The team cross-indexed and analyzed nearly 25,000 social media posts discussing those same news stories, developing a so-called "stance detection" algorithm to quickly determine whether each post supported or refuted news already known to be either truthful or deceptive.
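The article does not say how the team combined each post's stance with the known truthfulness of the story it discusses. As a rough illustration only, the Python sketch below assumes a simple rule. A post that supports a truthful story, or refutes a deceptive one, is treated as "real". The reverse is treated as "fake", and a neutral post gets no label. The `Stance` enum and the `weak_label` function are hypothetical names, and this rule is not the Stevens team's trained model.

```python
from enum import Enum
from typing import Optional


class Stance(Enum):
    """Hypothetical stance categories a post can take toward a news story."""
    SUPPORT = "support"
    REFUTE = "refute"
    NEUTRAL = "neutral"


def weak_label(article_is_truthful: bool, stance: Stance) -> Optional[str]:
    """Illustrative rule (an assumption, not the published method): infer whether
    a post spreads real or fake information from its stance toward an article
    whose truthfulness is already known."""
    if stance is Stance.NEUTRAL:
        return None  # no clear position, so no label can be inferred
    agrees = stance is Stance.SUPPORT
    # Agreeing with a truthful story, or disagreeing with a deceptive one, counts as "real".
    return "real" if agrees == article_is_truthful else "fake"


print(weak_label(article_is_truthful=False, stance=Stance.REFUTE))
```

Under this assumption, a post debunking a deceptive story is labelled "real" even though it mentions the false claim. That is one reason stance can add information beyond the article's own label.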

"Using stance detection gives us a much richer perspective, and helps us detect fake news much more effectively," says Subbalakshmi.

Once the AI engine is trained, it can judge whether a previously unseen tweet referencing a news article is fake or real.

"It's possible to take any written sentence and turn it into a data point that represents the author's use of language," explains Subbalakshmi. "Our algorithm examines those data points to decide if an article is more or less likely to be fake news."

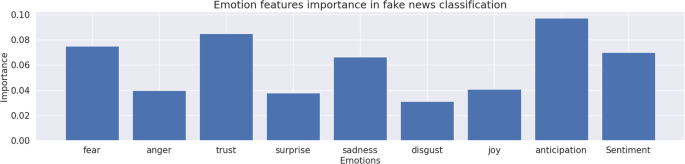

Bombastic, extreme or emotional language often correlated with false claims, the team found. But the AI also discovered that time of publication, article length, or the number of authors of a given article can be used to help determine truthfulness.
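To make "turning a sentence into a data point" more concrete, here is a minimal Python sketch. It maps an article to a fixed-length numeric vector that mixes simple language cues (emotional words, all-caps words, exclamation marks) with the metadata the team mentions (length, number of authors, time of publication). The `Article` class, the `to_data_point` function and the tiny `EMOTIONAL_WORDS` list are assumptions for illustration. The Stevens system learns its own representation, and these are not its actual features.

```python
import re
from dataclasses import dataclass
from datetime import datetime
from typing import List

# Hypothetical "bombastic" vocabulary; a real system would learn such cues from labelled data.
EMOTIONAL_WORDS = {"shocking", "terrifying", "outrageous", "deadly", "miracle", "destroy"}


@dataclass
class Article:
    text: str
    n_authors: int
    published: datetime


def to_data_point(article: Article) -> List[float]:
    """Turn one article into a fixed-length list of numbers (a 'data point')."""
    words = re.findall(r"[A-Za-z']+", article.text)
    n_words = len(words)
    denom = max(n_words, 1)  # avoid division by zero on empty text
    emotional = sum(1 for w in words if w.lower() in EMOTIONAL_WORDS)
    shouted = sum(1 for w in words if len(w) > 1 and w.isupper())
    return [
        emotional / denom,                # share of emotional words
        shouted / denom,                  # share of ALL-CAPS words
        article.text.count("!") / denom,  # exclamation marks per word
        float(n_words),                   # article length in words
        float(article.n_authors),         # number of credited authors
        float(article.published.hour),    # hour of publication, 0-23
    ]


example = Article("SHOCKING news! This miracle cure will destroy the virus!", 1,
                  datetime(2021, 3, 1, 23, 15))
print(to_data_point(example))
```

A classifier trained on many such vectors, each paired with a known real or fake label, would then be the part that judges unseen items.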

The team will continue refining its algorithms, says Subbalakshmi, and plans to integrate video and image analysis in an effort to increase accuracy further.

"Each time we take a step forward, bad actors are able to learn from our methods and build something even more sophisticated," she cautions. "It’s a constant battle."

Slowing the spread of fake news

Stevens alumnus Mohsen Mosleh Ph.D. '17 has also investigated the question of how to combat misinformation shared via social media.

Mosleh, a researcher at MIT's Sloan School of Management and business professor at the University of Exeter Business School, recently co-authored an intriguing study in the prestigious journal Nature that adds weight to the idea that prompting people to think about accuracy can deter them from sharing likely falsehoods.

"False COVID vaccine information on social media can affect vaccine confidence and be a threat to many people's lives," notes Mosleh. "Social media platforms should work with researchers to help immunize against such dangerous content."

With colleagues at MIT and the University of Regina, Mosleh conducted a large field experiment with approximately 5,000 Twitter users who had previously shared low-quality content, in particular "fake news" and other content from lower-quality, hyper-partisan websites.

The team sent the Twitter users direct messages asking them to rate the accuracy of a single non-political headline, in order to remind them of the concept of accuracy. The researchers then collected the timelines of those users both before and after receiving the single accuracy-nudge message.

The researchers found that, even though very few users replied to the message, simply reminding them of the concept of accuracy seemed to make them more discerning in subsequent sharing decisions. The users shared proportionally fewer links from lower-quality, hyper-partisan news websites, and proportionally more links to higher-quality, mainstream news websites (as rated by professional fact-checkers).

"These studies suggest that when deciding what to share on social media, people are often distracted from considering the accuracy of the content," concluded the team in Nature. "Therefore, shifting attention to the concept of accuracy can cause people to improve the quality of the news that they share."

'Follow-the-leader politics' also shape misinformation flow

As the COVID-19 pandemic has transformed American life, it has also revealed how ideological divides and partisan politics can influence public information and misinformation beyond social media — even in official government communications.

That's the conclusion of Stevens political science professor Lindsey Cormack and recent graduate Kirsten Meidlinger M.S. '21, who conducted a data analysis of more than 10,000 congressional email communications to constituents between January and July 2020 — nearly 80% of which mentioned the pandemic in some fashion.

Cormack and Meidlinger first constructed a dataset tabulating total COVID-19 deaths by congressional district over the same period. Democrats and Republicans, they found, sent roughly the same number of COVID communications, and partisanship did not initially appear to affect how often members communicated.

Rather, members adhered to historical tendencies.

"More communicative members seemed to be more so in the face of crisis, as well," explains Cormack. "We found that legislators from both parties were quick to talk about COVID-19 with constituents, and that on-the-ground realities, not partisanship, drove much of the variation in communication volume."

However, partisanship did influence certain COVID-19 communications, and for an apparent reason.

The researchers discovered Republicans were much more likely to use derogatory ethnic terminology to refer to COVID-19 in official communications and also more likely to promote the use of an unproven and potentially harmful medication, hydroxychloroquine, following the lead of then-President Donald Trump in each case.

"This was evidence," says Cormack, "of what we call 'follow-the-leader-politics.' In the case of hydroxychloroquine, this was in spite of the fact that the FDA, NIH and WHO all did not find any evidence of its efficacy — and even found that detrimental effects outweighed its utility.

"When legislators are following a leader who is promoting something that can potentially kill people, that is a problem."

The research was reported in the journal Congress & the Presidency in September 2021.

Tone as important as truth to counter vaccine fake news

False assertions about Covid-19 vaccines have had a deadly impact – they are one reason why some people delayed being inoculated until it was too late. Some still refuse to be vaccinated.

More than two years after the start of the pandemic, false rumours continue to circulate that the vaccines do not work, cause illness and death, have not been properly tested and even contain microchips or toxic metals.

Now a study raises hopes of deflecting such falsehoods in future by changing the tone of official health messaging and building people’s trust.

In many countries, public confidence in government, media, the pharmaceutical industry and health experts was already on the wane before the pandemic. And in some cases, it deteriorated further during the rollout of Covid vaccines.

This was partly because some national campaigns said the jabs would protect people from falling ill.

Friends over facts

‘There was a lot of overpromising around the vaccine without really knowing what would happen,’ said Prof Dimitra Dimitrakopoulou, research scientist and Marie Curie Global Fellow at the Massachusetts Institute of Technology and the University of Zurich.


‘Then people started getting sick, even though they were vaccinated. That created a lack of trust in the government issuing these policies, and in the scientific community.’

Prof Dimitrakopoulou studied public perceptions of Covid vaccines and obstacles to acceptance of reliable information as part of a project called FAKEOLOGY.

She found that, when people lose faith in institutional sources, they end up relying only on themselves, close friends and family.

‘They trust their instincts, they trust what resonates with them,’ Prof Dimitrakopoulou said. That means they will search the internet, social media and other sources until they find information that reinforces the beliefs they already hold.

‘We have lived with fake news and misinformation long enough to understand that it cannot be debunked with facts,’ she said. ‘People just raise these emotional blocks.’

For example, a story about a mother whose child fell sick after getting a Covid vaccination would likely be more influential than a message containing scientific facts.

Building trust

Prof Dimitrakopoulou surveyed 3,200 parents of children under 11 years old in the United States, and conducted focus groups with 54 of them, to discuss their views about Covid vaccines for kids.

Many parents felt confused by conflicting information about the shots and had a lot of questions about their effectiveness.

She gave the parents a selection of messages to assess. They were put off by the ones that were largely factual, rigid and prescriptive – the tone of many public health campaigns.

They were more persuaded by messages that addressed their concerns about the vaccines with empathy and compassion while acknowledging that they face a difficult decision.

‘We need to be ready to answer any questions they may have and be ready to have a conversation, without expecting the conversation to end with someone getting vaccinated,’ said Prof Dimitrakopoulou.

Those exchanges will ultimately help bolster public faith in health bodies and government institutions. ‘Covid is a great opportunity for us to start building this trust,’ she said.

Building these bridges is a lengthy process, but it could inform people’s perceptions for the rest of their lives, she said.

Fake news filter

It is also important for journalists, researchers and the general public to be able to spot and filter out fake news.

Researchers on a project called SocialTruth have developed a tool to flag fake news content on the internet and social media. The software, called a Digital Companion, can check the reliability of a piece of information. It analyses the text, images, source and author and, within two minutes, produces a credibility score – a rating of between one and five stars.
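The article does not say how the Digital Companion turns its analysis into stars. Purely as a hypothetical sketch, the Python below combines four per-signal reliability scores (text, images, source, author), each assumed to lie between 0 and 1, into a weighted average. It then rounds that average onto a one-to-five star scale. The weights, the function name `credibility_stars` and the 0-to-1 scoring scale are invented for illustration and are not SocialTruth's method.

```python
from typing import Dict

# Hypothetical weights for the four signals named in the article (not SocialTruth's values).
WEIGHTS: Dict[str, float] = {"text": 0.4, "images": 0.2, "source": 0.25, "author": 0.15}


def credibility_stars(signal_scores: Dict[str, float]) -> int:
    """Combine per-signal reliability scores in [0, 1] into a 1-5 star rating."""
    if not signal_scores:
        raise ValueError("at least one signal score is required")
    # Only the signals actually present are weighted, so a post without images can still be rated.
    total_weight = sum(WEIGHTS[name] for name in signal_scores)
    combined = sum(
        WEIGHTS[name] * min(max(score, 0.0), 1.0)  # clamp each score into [0, 1]
        for name, score in signal_scores.items()
    ) / total_weight
    # Round half up onto the star scale: 0.0 -> 1 star, 1.0 -> 5 stars.
    return 1 + int(combined * 4 + 0.5)


print(credibility_stars({"text": 0.9, "images": 0.7, "source": 0.8, "author": 0.6}))
```

Renormalising over whichever signals are present is one design choice among several. An alternative would be to treat a missing signal as neutral evidence.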