Learning Informatica PowerCenter 9.x by Rahul Malewar


The assignment task

The assignment task is used to assign a value to user-defined variables in Workflow Manager.

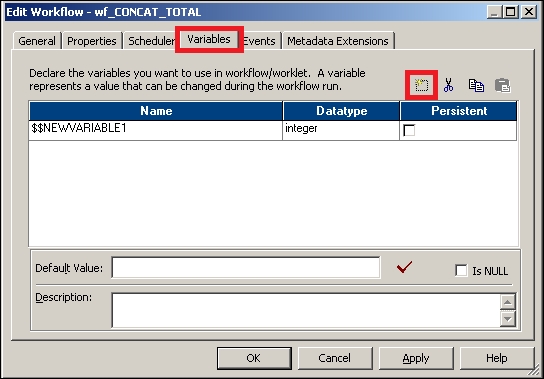

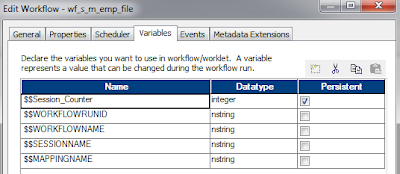

Before you can define a variable in the assignment task, you need to add the variable in Workflow Manager. To add a variable to the Workflow, perform the following steps:

A new variable is created, as shown in the preceding screenshot. The new variable that is created is $$NEWVARIABLE1 . You can ...


- Data Engineering Integration: Beginner

Data Engineering Integration (Big Data Management) delivers high-throughput data ingestion and data integration processing so business analysts can get the data they need quickly. Hundreds of prebuilt, high-performance connectors, data integration transformations, and parsers enable virtually any type of data to be quickly ingested and processed on big data infrastructures, such as Apache Hadoop, NoSQL, and MPP appliances. Beyond prebuilt components and automation, Informatica provides dynamic mappings, dynamic schema support, and parameterization for programmatic and templatized automation of data integration processes.

The Beginner level of the learning path helps you understand DEI fundamentals. It consists of videos, webinars, and other documents covering an introduction to DEI, DEI architecture, Blaze architecture, use cases on Azure, Amazon cloud, and other ecosystems, integration with AWS, and more.

After you have successfully completed all three levels of DEI product learning, you will earn an Informatica Badge for Data Engineering Integration (Big Data Management). So start your product learning right away and earn your badge!

This module covered an introduction to DEI, which consisted of a short overview of Big Data Management, getting started with DEI, and the various functionalities of Big Data.

This module also discussed how to redeploy PowerCenter applications and mappings into the Big Data world; how to set up and configure the Spark Engine; Blaze configuration, log collection, Blaze log locations, and common issues and how to troubleshoot them; advanced Spark functionality; and the solution overview, themes, drivers, and new use cases for BDS 10.2.2, including stream processing and analytics.

You also explored how to handle DEI on Microsoft Azure, Amazon Cloud, Cloudera Altus, and MapR. In addition, you got an overview of Operational Insights and goals, workspaces, Informatica Azure Databricks support, how to create a ClusterConfigObject (CCO), cluster provisioning configuration, the Databricks connection, and DEI's capabilities to integrate with the AWS ecosystem.

Now move on to the next "Intermediate level" for your DEI onboarding and get to know more about the product.

This video provides a brief introduction and overview of the Informatica Data Engineering Integration (Big Data Management).

Watch the video to learn more about Hadoop and next-generation data integration on the Hadoop platform and its features.

Additional Resources :

Big Data: What you need to know

The Big Data Workbook

Informatica DEI Data Sheet

If you are a beginner in DEI products, go through the following primer to get brief information on each product and the services provided.

You could also find information on the tools, documentation, and resources associated with every product. This primer includes information on the tools, services, and other resources for the following:

- Data Engineering Integration (Big Data Management)

- Data Engineering Quality (Big Data Quality)

- Enterprise Data Lake

- Data Engineering Integration Streaming

- Enterprise Data Catalog

A cluster configuration is an object in the domain that contains configuration information about the Hadoop cluster. The cluster configuration enables the Data Integration Service to push mapping logic to the Hadoop environment. Creating a CCO helps a beginner in DEI products create Hive, HDFS, Hadoop, and HBase connections.

Metadata Access Service is a requirement in the Data Engineering Integration context to allow Developer Client Tool (DxT) installation/configuration to be simplified so that the end-user doesn't need to install/configure various adapter-related binaries with every DxT installation. This video provides an introduction to Metadata Access Service (MAS) and also explains the adapters enabled through MAS.

Here’s a video to learn about setting up and configuring the Spark Engine. It also contains a demo to help you with this process.

This video provides an overview of Spark Engine and Spark Configuration Properties. The video also explains how to debug when mapping is executed in Spark Engine.

This video provides an overview of Sqoop. It also discusses the prerequisites and how to configure a Sqoop-enabled JDBC connection.

This video explains how to configure Sqoop for Microsoft SQL Server Database.

This video discusses the capabilities of Avro and Parquet. It also describes the benefits of using Parquet and explains how to transform from Avro to Parquet.

Informatica Data Engineering Streaming (Big Data Streaming) provides real-time stream processing of unbounded data. Go through this video to get an introduction to the functionalities of Data Engineering Integration, the Stream Data Management Reference Architecture, and the use cases that Data Engineering can help you with.

This video gives an overview of Big Data Streaming (BDS) and its key concepts. It also describes Kafka Architecture and license options for Informatica BDS.

This video explores Streaming mapping and the sources and targets that are supported in Streaming Mapping. It also explains how to create a BDS mapping in Informatica Developer with Kafka source.

This video provides information on Confluent Kafka in Data Engineering Integration v10.4. It also explains how to create a Confluent Kafka connection using infacmd.

This video discusses how to enable Column Projection for Kafka Topic using Informatica Big Data Streaming.

This video explains how to create an Amazon Kinesis connection in the Informatica Developer tool, in the admin console, and from the command line.

This video explains how to create a JMS connection in the Informatica Developer tool, in the admin console, or with the infacmd command in order to run a Big Data Streaming mapping.

This video discusses how to create a JMS connection in the Informatica Developer tool to import the data object.

This video demonstrates the ease with which you could use the existing PowerCenter applications and mappings and redeploy them into the Big Data world.

The video discusses what the PowerCenter Reuse report identifies and contains a quick demo that explains how to reuse existing PowerCenter applications for big data workloads, generate a report to assess the effort in the journey to big data world and use the import utility to seamlessly import the PowerCenter mappings.

Learn more about Blaze - one of the execution engines that Informatica uses. The video gives you an overview of the following features in Blaze:

- Architecture

- Blaze configuration

- How to collect logs

- Where the Blaze logs are located

- Common issues and how to troubleshoot them

- Tips and tricks while you are using Blaze

The video explains the setting up and configuration process of the Spark Engine on Informatica Data Engineering Integration. The video also has a demonstration that guides you through the entire set-up process and important information useful while using the Spark Engine in real-time scenarios.

Learn how DEI integrates seamlessly with Cloudera Altus. The video gives an overview of Cloudera Altus, a typical scenario in Informatica's customer base, followed by a demo.

This video explains what Azure Databricks is and how it is integrated with Informatica Data Engineering Integration.

The video will also take you through workspaces, Informatica Azure Databricks Support, how to create ClusterConfigObject (CCO), Cluster provisioning Configuration, Databricks Connection followed by a demo.

Learn how to implement end-to-end big data solutions in the Amazon Ecosystem using Data Engineering Integration (Big Data Management) 10.2.1.

The video explains the DEI 10.2.1 capabilities to integrate with the AWS ecosystem.

Go through this video to learn how to integrate Informatica BDM with Azure Databricks Delta.

Watch this video to learn how DEI helps resolve the "Data Lake on Cloud" use case in the Azure ecosystem followed by a demo.

If you are moving your on-prem data to Cloud and processing from on-prem to Azure, this video would be helpful in understanding how DEI can enable you in your journey to Cloud. The video also discusses different features and functionalities in the Azure ecosystem and a use case.


Watch another video to learn how DEI helps resolve the “Data Lake on cloud” use case in the Amazon ecosystem followed by a demo.

If you are moving away from the on-premise data warehouse and moving towards cloud and data lake scenarios, this video would help you in understanding how DEI can help you in this journey to Cloud. This video also explains the additional Informatica features on Amazon, and a data lake use case supported by a demo.

The video takes you through DEI 10.2.2 and elaborates on its strategic themes, one being an enterprise-class product in the big data ecosystems, offering advanced Spark functionality, and being available across clouds and connectivity.

The video takes you through Enterprise Data Streaming (EDS) Management Solution Overview, themes, drivers, and new use cases for EDS 10.2.2, Stream processing and analytics, Streaming Data Integration, Spark structured Streaming support, and how that can help you, CLAIRE Integration and much more.

Operational Insights is a Cloud-based Application that provides visibility into the performance and operational efficiency of Informatica assets across the enterprise. This webinar will introduce a new product offering - Operational Insights for Informatica Data Engineering Integration (Big Data Management). Now you can better understand big data cluster resource utilization of Informatica jobs, analyze mapping/workflow executions, manage capacity, and troubleshoot issues in minutes. Includes product demos

Webinar: Introduction to Operational Insights for Informatica Data Engineering Integration (Big Data Management)

Watch this video to learn how DEI integrates with one of the Hadoop vendors - the MapR ecosystem.

This video also explains how to solve the “data warehouse offloading” use case with the help of a demo.

Webinar: Informatica Big Data Management and Serverless

Big Data Characteristics: How They Improve Business Operations

Turning a Data Lake into a Data Marketplace

How to Run a Big Data POC in Six Weeks

Product Feature

Informatica 10.5.x CICD Features


Workflow in Informatica: Create, Task, Parameter, Reusable, Manager

What is Workflow?

A workflow is a group of instructions or commands given to the Integration Service in Informatica. The Integration Service is an entity that reads workflow information from the repository, fetches data from sources, and, after performing transformations, loads it into the target.

Workflow – It defines how to run tasks such as session tasks, command tasks, email tasks, etc.

To create a workflow

- You first need to create tasks

- And then add those tasks to the workflow.

A workflow is like an empty container that can hold the objects you want to execute. You add to the workflow the tasks that you want to execute. In this tutorial, we are going to do the following things in a workflow.

Workflow execution can be done in two ways:

- Sequence: Tasks execute in the order in which they are defined.

- Event based: Tasks are executed based on event conditions.

How to open Workflow Manager

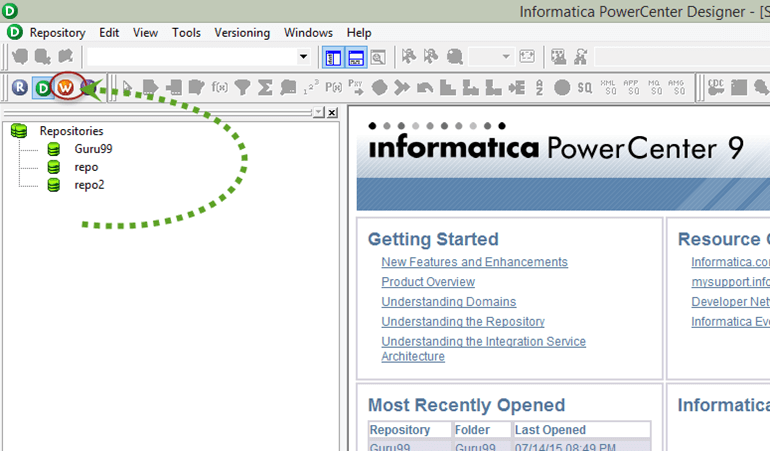

Step 1) In the Informatica Designer, click on the Workflow Manager icon.

Step 2) This will open the Workflow Manager window. Then, in Workflow Manager:

- We are going to connect to the repository “guru99”, so double click on the folder to connect.

- Enter the user name and password, then select the “Connect” button.

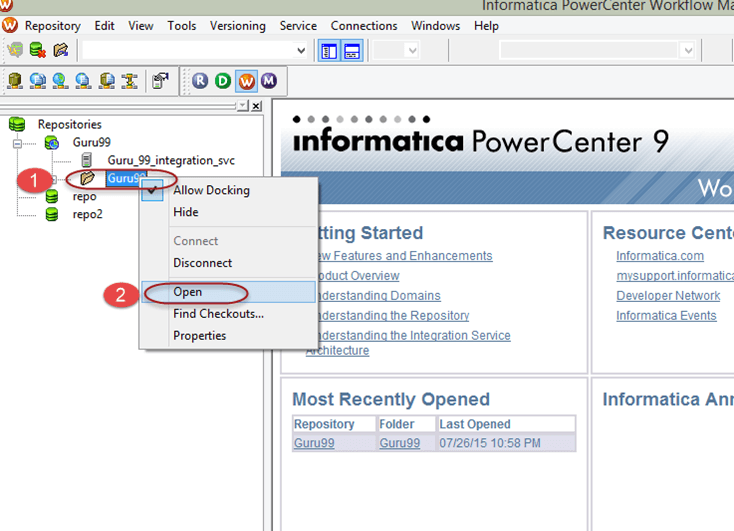

Step 3) In Workflow Manager:

- Right click on the folder

- In the pop-up menu, select the Open option

This will open up the workspace of Workflow Manager.

How to Create Connections for Workflow Manager

To execute any task in Workflow Manager, you need to create connections. Using these connections, the Integration Service connects to different objects.

For example, if your mapping has a source table in an Oracle database, you will need an Oracle connection so that the Integration Service can connect to the Oracle database to fetch the source data.

The following types of connections can be created in Workflow Manager:

- Relational Connection

- FTP Connection

- Application

The type of connection you create depends on the type of source and target systems you want to connect to. Most often, you will be using relational connections.

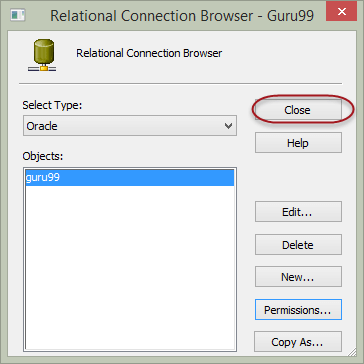

To Create a Relational Connection

Step 1) In Workflow Manager

- Click on the Connection menu

- Select Relational Option

Step 2) In the pop up window

- Select Oracle in type

- Click on the new button

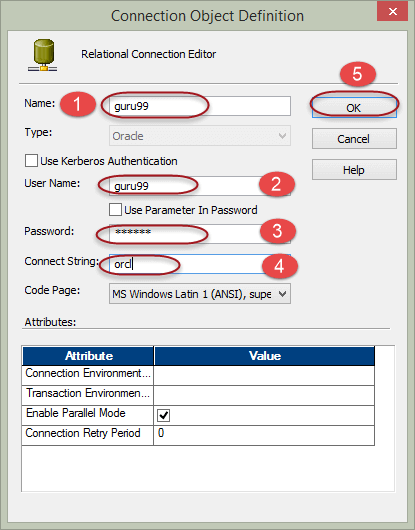

Step 3) In the new window of connection object definition

- Enter Connection Name (New Name-guru99)

- Enter username

- Enter password

- Enter connection string

- Leave other settings as default and Select OK button

Step 4) You will return to the previous window. Click on the Close button.

Now the relational connection is set up in Workflow Manager.

Components of Workflow manager

There are three component tools in Workflow Manager that help in creating various objects. These tools are:

- Task Developer

- Worklet Designer

- Workflow Designer

Task Developer – Task Developer is a tool with which you can create reusable objects. Reusable objects in Workflow Manager are objects that can be reused in multiple workflows. For example, if you have created a command task in Task Developer, you can reuse this task in any number of workflows.

The role of Workflow Designer is to execute the tasks that are added to it. You can add any number of tasks to a workflow.

You can create three types of reusable tasks in Task Developer:

- Command task

- Session task

- Email task

Command task – A command task is used to execute Windows or UNIX commands during the execution of the workflow. With this task you can execute commands to create files or folders, delete files or folders, transfer files over FTP, and so on.

Session Task – A session task in Informatica is required to run a mapping.

- Without a session task, you cannot execute or run a mapping

- A session task can execute only a single mapping. So, there is a one to one relationship between a mapping and a session

- A session task is the object through which Informatica knows how, where, and when to execute a mapping

- Sessions cannot be executed independently; a session must be added to a workflow

- In the session object, you can configure cache properties as well as advanced performance optimization settings

Email task – With the help of email task you can send email to defined recipients when the Integration Service runs a workflow. For example, if you want to monitor how long a session takes to complete, you can configure the session to send an email containing the details of session start and end time. Or, if you want the Integration Service to notify you when a workflow completes/fails, you can configure the email task for the same.

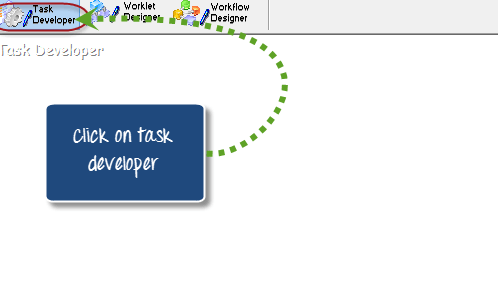

How to create command task

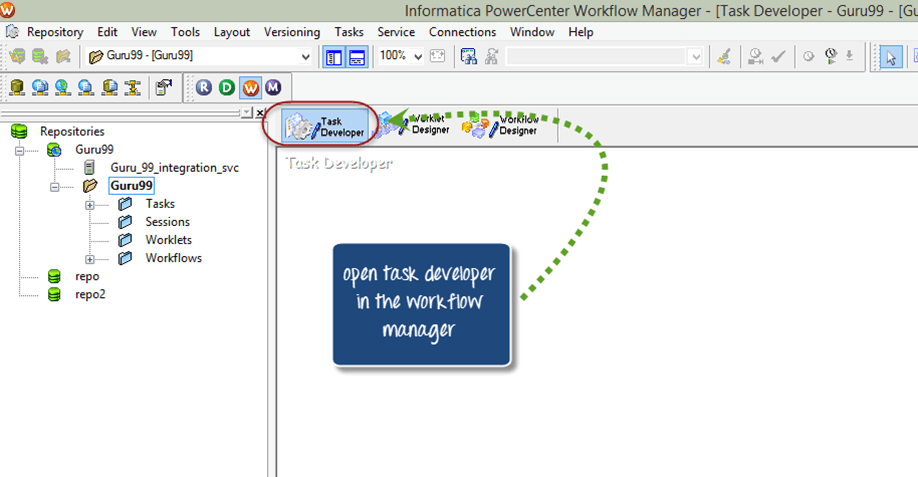

Step 1) To create a command task we are going to use Task Developer. In Workflow Manager, open the task developer by clicking on tab “task developer” from the menu.

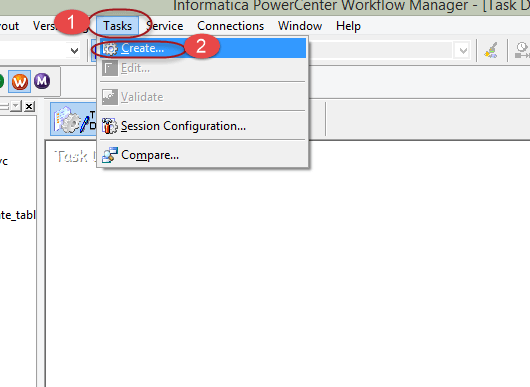

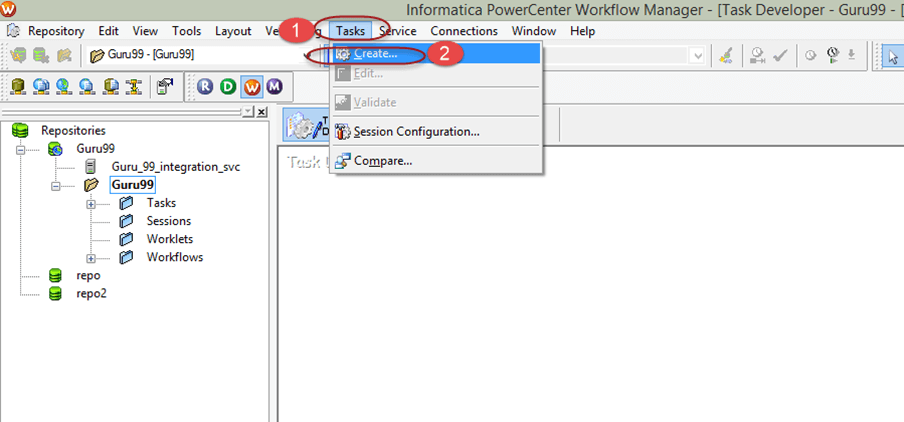

Step 2) Once task developer is opened up, follow these steps

- Select Tasks menu

- Select Create option

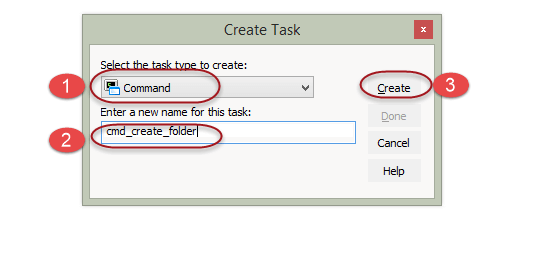

Step 3) In the create task window

- Select command as type of task to create

- Enter task name

- Select create button

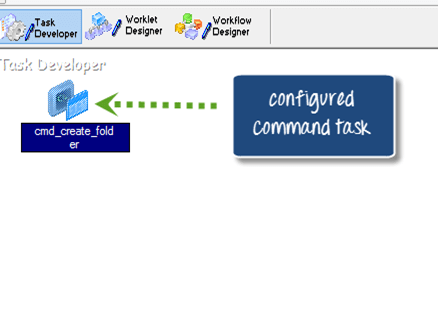

This will create the command task. Next, you have to configure the task to add a command to it, which we will see in the next step.

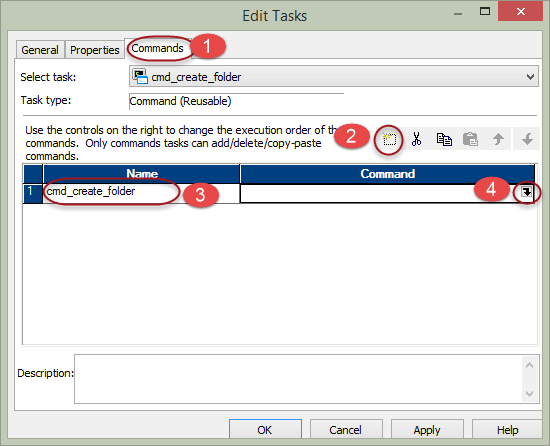

Step 4) To configure the task, double click on the command task icon and it will open an “edit task window”. On the new edit task window

- Select the commands menu

- Click on the add new command icon

- Enter command name

- Click on the command icon to add command text

This will open a command editor box.

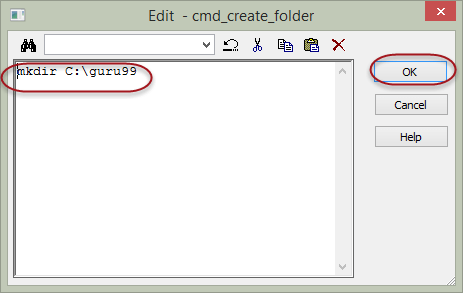

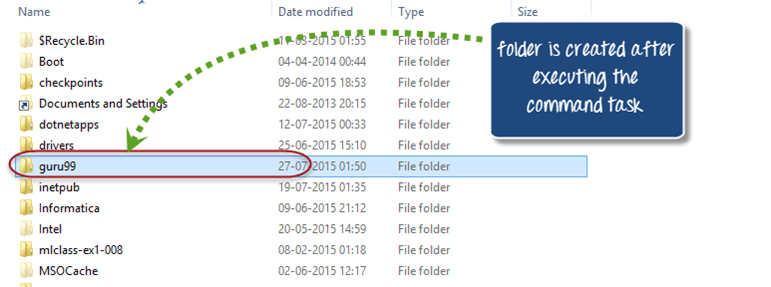

Step 5) On the command editor box, enter the command “mkdir C:\guru99” (this is the windows command to create a folder named “guru99”) and select OK.

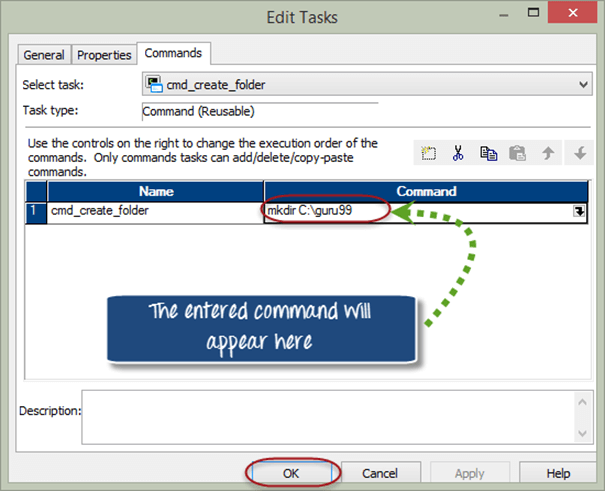

Afther this step you will return to the edit tasks window and you will be able to see the command you added in to the command text box.

Step 6) Click OK on the edit task window,

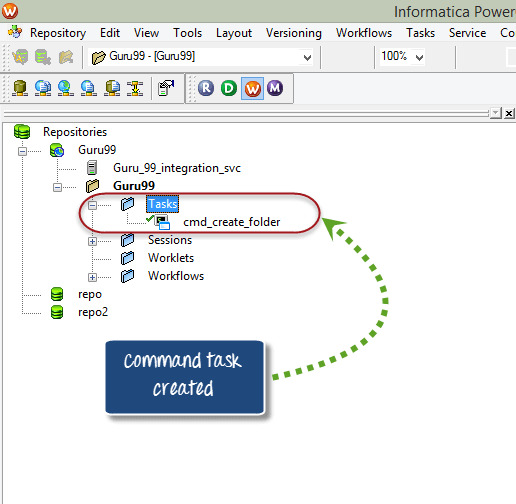

The command task will be created in the task developer under “Guru99” repository.

Note use ctrl+s shortcut to save the changes in repository

How to create workflow to execute command task

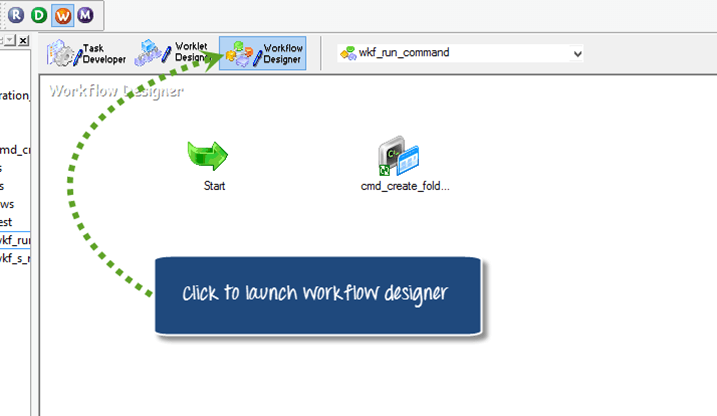

To execute the command task, you have to switch to the Workflow Designer. A workflow is a parent or container object to which you can add multiple tasks; when the workflow is executed, all the added tasks execute. To create a workflow:

Step 1) Open the workflow designer by clicking on workflow designer menu

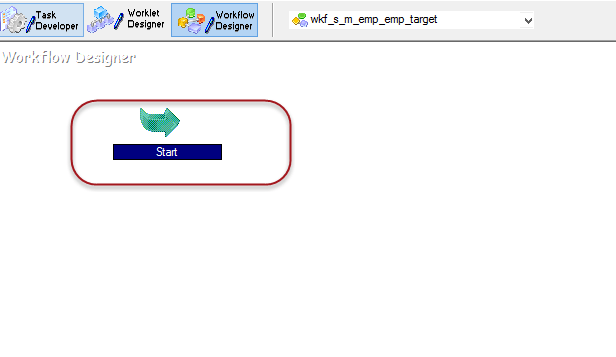

Step 2) In workflow designer

- Select workflows menu

- Select create option

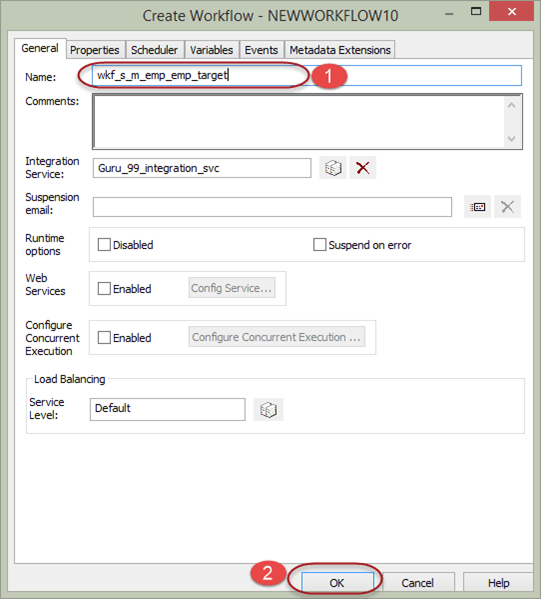

Step 3) In create workflow window

- Enter workflow name

- Select OK Button ( leave other options as default)

This will create the workflow.

Naming Convention – Workflow names are prefixed with ‘wkf_’. If you have a session named ‘s_m_employee_detail’, then the workflow for it can be named ‘wkf_s_m_employee_detail’.
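The naming convention above is simple enough to express as a small helper. The sketch below is illustrative Python and not part of Informatica; the function name `workflow_name_for` is hypothetical.

```python
def workflow_name_for(session_name: str) -> str:
    """Return the conventional workflow name for a session name."""
    prefix = "wkf_"
    # Avoid double-prefixing if the name already follows the convention.
    if session_name.startswith(prefix):
        return session_name
    return prefix + session_name


print(workflow_name_for("s_m_employee_detail"))  # wkf_s_m_employee_detail
```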

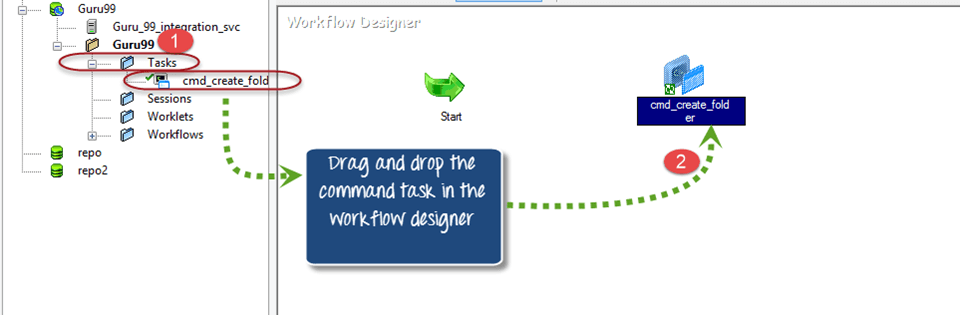

When you create a workflow, it does not contain any tasks. So, to execute a task in a workflow, you have to add the task to it.

Step 4) To add the command task that we created in Task Developer to the Workflow Designer:

- In the navigator tree, expand the tasks folder

- Drag and drop the command task to workflow designer

Step 5) Select the “link task option” from the toolbox from the top menu. (The link task option links various tasks in a workflow to the start task, so that the order of execution of tasks can be defined).

Step 6) Once you select the link task icon, it will allow you to drag the link between start task and command task. Now select the start task and drag a link to the command task.

Now you are ready with the workflow having a command task to be executed.

How to execute workflow

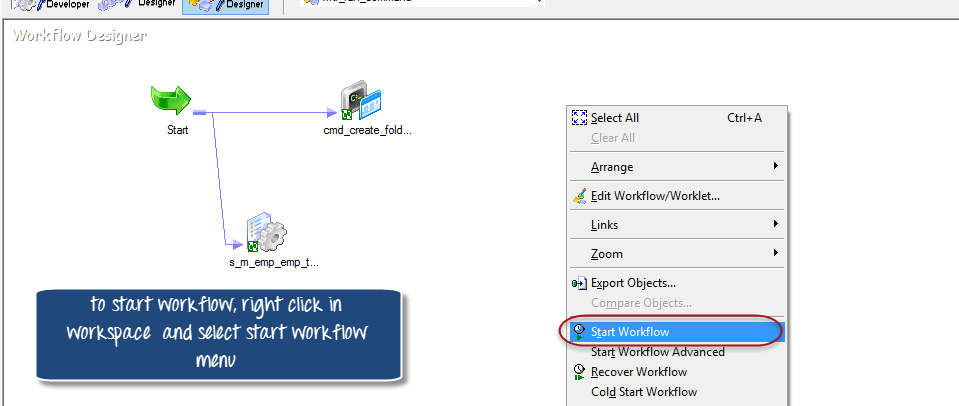

Step 1) To execute the workflow

- Select workflows option from the menu

- Select start workflow option

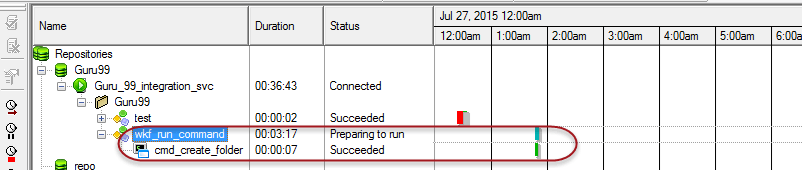

This will open the Workflow Monitor window and execute the workflow.

Once the workflow is executed, it will run the command task to create a folder (the guru99 folder) in the defined directory.
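To make the effect of this command task concrete, here is a rough Python analogue of what the `mkdir` command does. It is only a sketch and does not reflect how the Integration Service runs commands. It uses a temporary directory in place of the C: drive so that it can run anywhere, and the helper name `create_folder` and its status strings are hypothetical simplifications.

```python
import tempfile
from pathlib import Path


def create_folder(parent: Path, name: str) -> str:
    """Create parent/name and report a task-style status string."""
    target = parent / name
    try:
        # Like a plain mkdir, this fails if the folder already exists.
        target.mkdir()
    except FileExistsError:
        return "FAILED"
    return "SUCCEEDED"


with tempfile.TemporaryDirectory() as tmp:
    root = Path(tmp)  # stands in for the C: drive used in the tutorial
    first_run = create_folder(root, "guru99")
    second_run = create_folder(root, "guru99")
    print(first_run, second_run)  # SUCCEEDED FAILED
```

Running the same command twice shows why a command task can behave differently on a rerun: the second attempt finds the folder already in place.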

Session Task

A session task in Informatica is required to run a mapping.

Without a session task, you cannot execute a mapping, and a session task can execute only a single mapping. So there is a one-to-one relationship between a mapping and a session. A session task is the object through which Informatica knows how, where, and when to execute a mapping. Sessions cannot be executed independently; a session must be added to a workflow. In the session object, you can configure cache properties as well as advanced performance optimization settings.

How to create a session task

In this exercise you will create a session task for the mapping “m_emp_emp_target” which you created in the previous article.

Step 1) Open Workflow manager and open task developer

Step 2) Now once the task developer opens, in the workflow manager go to main menu

- Click on task menu

This will open a new window “Create Task”

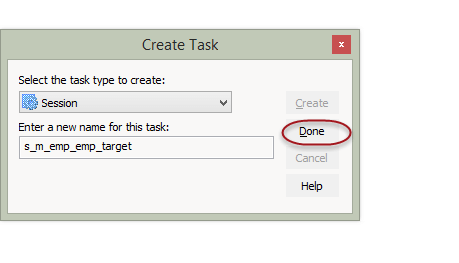

- Select session task as type of task.

- Enter name of task.

- Click create button

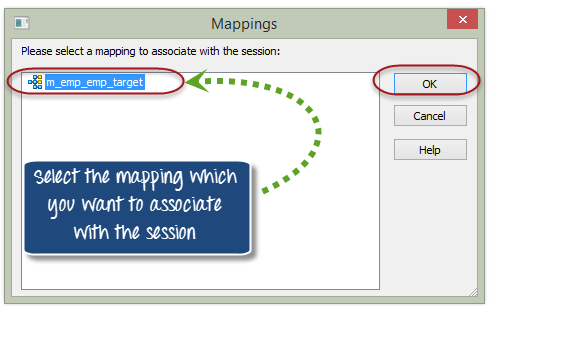

Step 4) A window for selecting the mapping will appear. Select the mapping that you want to associate with this session; for this example, select the “m_emp_emp_target” mapping and click the OK button.

Step 5) After that, click on “Done” button

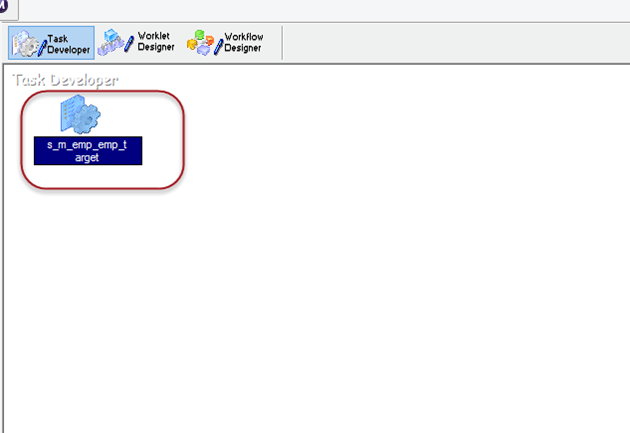

Session object will appear in the task developer

Step 6) In this step you will create a workflow for the session task. Click on the workflow designer icon.

Step 7) In the workflow designer tool

- Click on workflow menu

Step 8) In the create workflow window

- Select OK. ( leave other properties as default, no need to change any properties)

In Workflow Manager a start task will appear. It is the starting point of execution of the workflow.

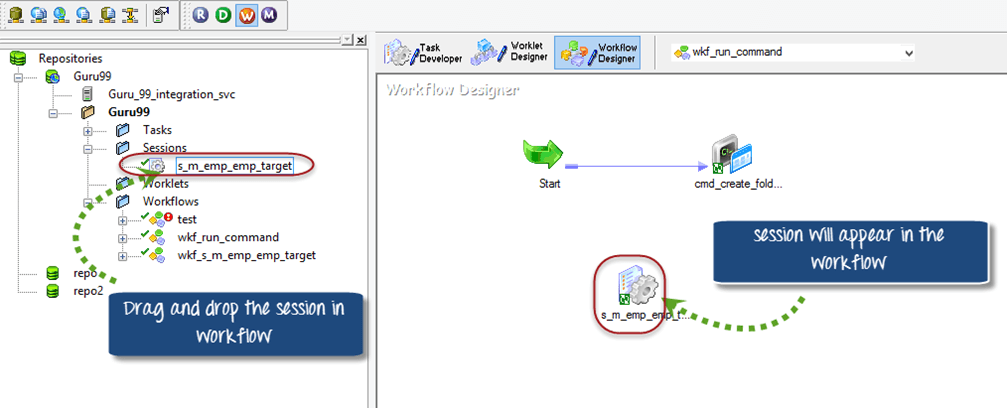

Step 9) In workflow manager

- Expand the sessions folder under navigation tree.

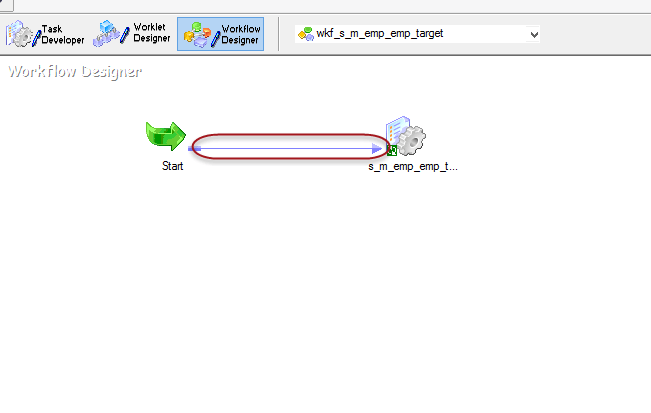

- Drag and drop the session you created in the workflow manager workspace.

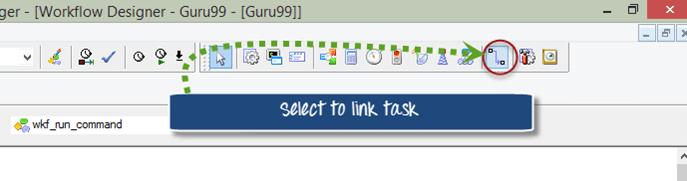

Step 10) Click on the link task option in the tool box.

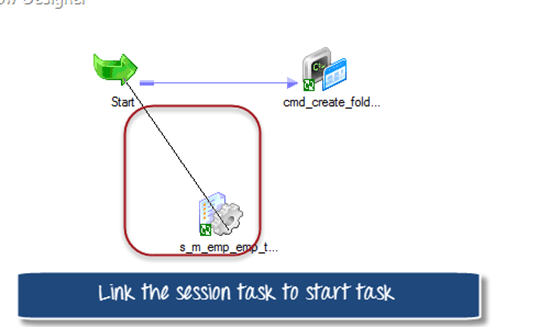

Step 11) Link the start task and session task using the link.

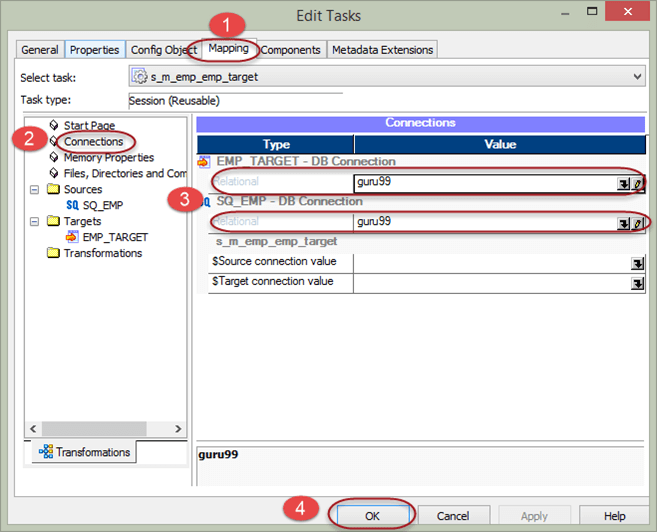

Step 12) Double click on the session object in Workflow Manager. It will open a task window where you can modify the task properties.

Step 13) In the edit task window

- Select mapping tab

- Select connection property

- Assign to the source and target the connection that we created in the earlier steps.

- Select OK Button

Now your configuration of workflow is complete, and you can execute the workflow.

How to add multiple tasks to a start task

The start task is the starting point for the execution of a workflow. There are two ways of linking multiple tasks to a start task: in parallel and in serial.

In parallel linking the tasks are linked directly to the start task and all tasks start executing in parallel at same time.

How to add tasks in parallel

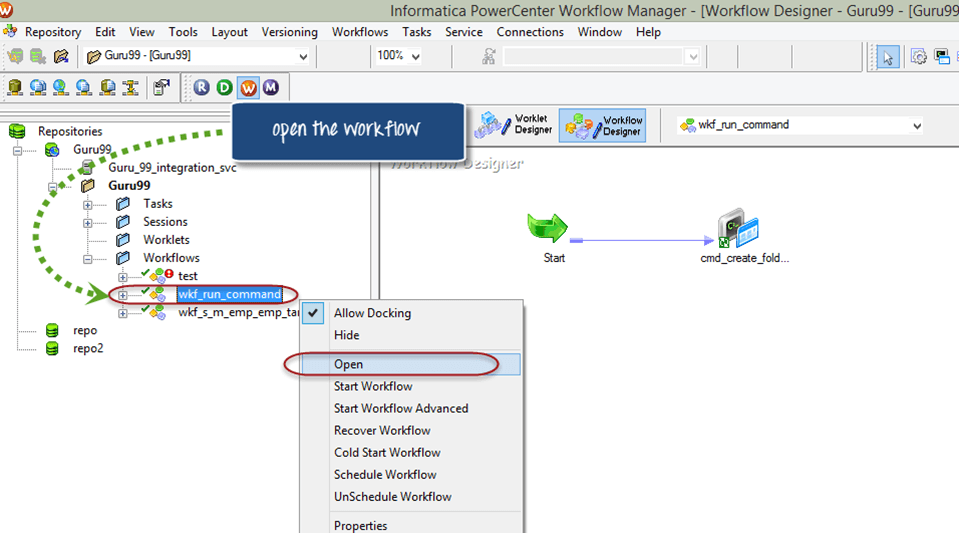

Step 1) In the workflow manager, open the workflow “wkf_run_command”

Step 2) In the workflow, add the session task “s_m_emp_emp_target” (by selecting the session and then dragging and dropping it).

Step 3) Select the link task option from the toolbox.

Step 4) Link the session task to the start task (by clicking on the start task, holding the click, and connecting to the session task).

After linking the session task, the workflow will look like this.

Step 5) Start the workflow and monitor in the workflow monitor.

How to add tasks in serial mode

But before we add tasks in serial mode, we have to delete the task that we added to demonstrate parallel execution of task. For that

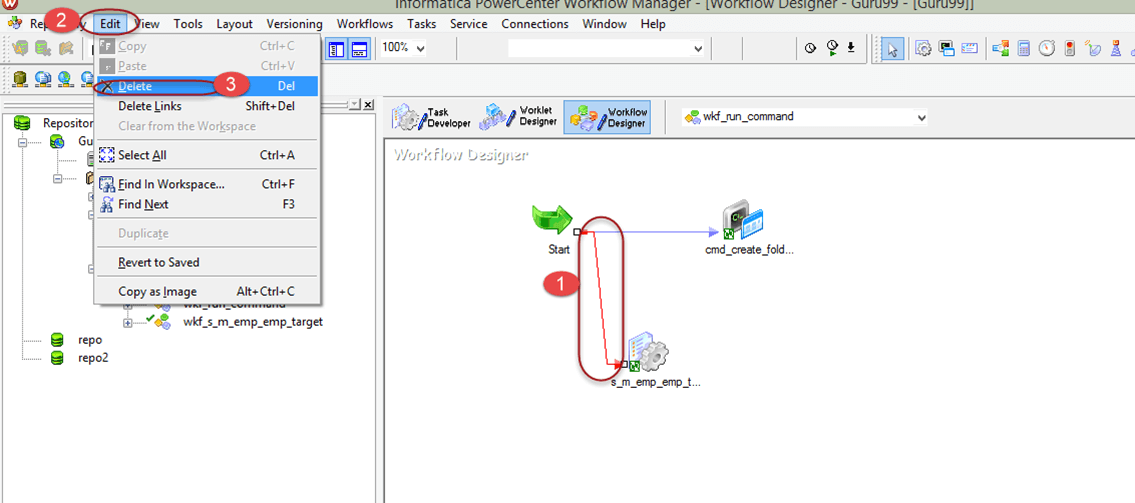

Step 1) Open the workflow “w.kf_run_command”

- Select the link to the session task.

- Select edit option in the menu

- Select delete option

Step 2) Confirmation dialogue box will appear in a window, select yes option

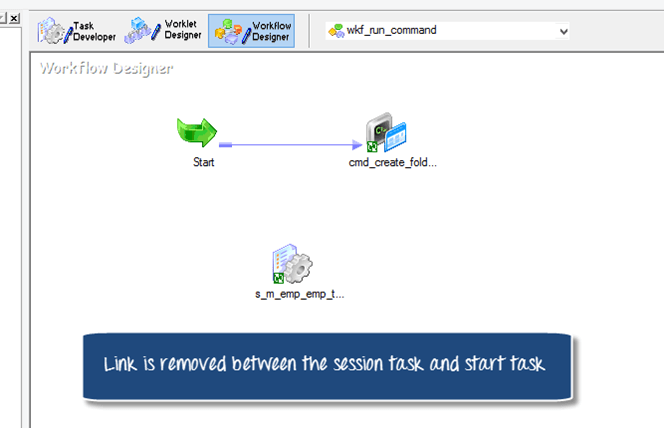

The link between the start task and session task will be removed.

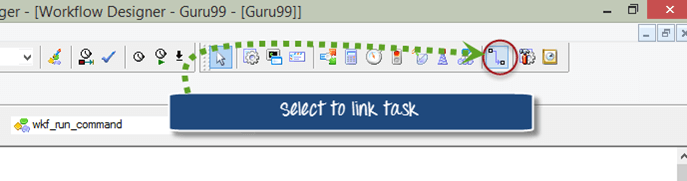

Step 3) Now again go to top menu and select the link task option from the toolbox

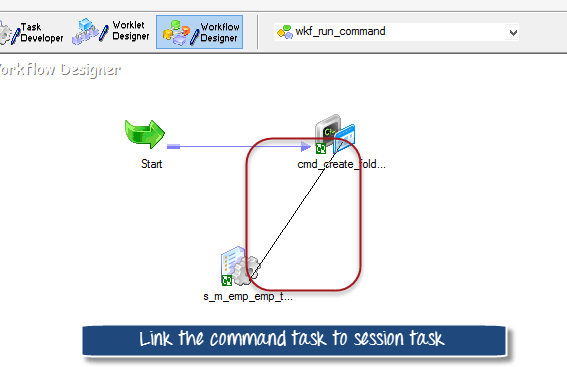

Step 4) link the session task to the command task

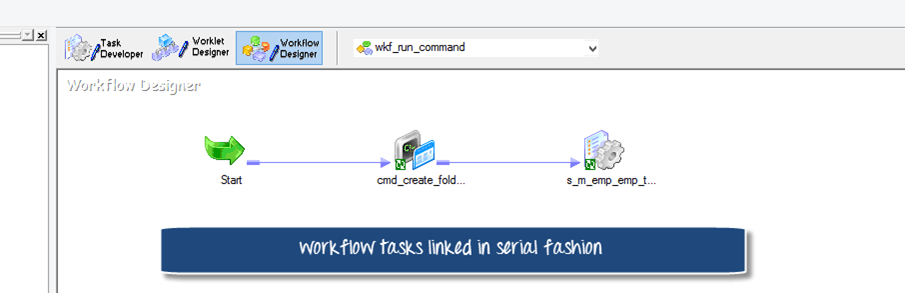

After linking the workflow will look like this

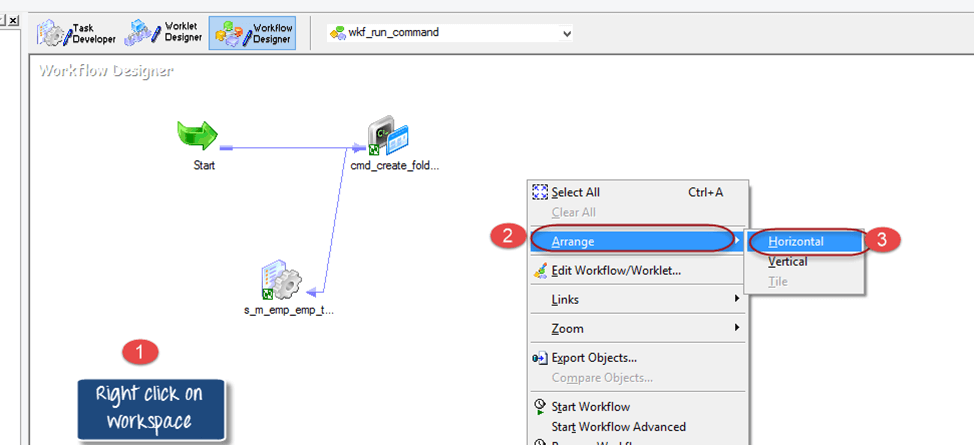

Step 5) To make the visual appearance of the workflow clearer:

- Right click on the workspace of the workflow

- Select the Arrange menu

- Select the Horizontal option

If you start the workflow, the command task will execute first and, after its execution, the session task will start.
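The difference between parallel and serial linking can be sketched as a small dependency graph. The Python below is only a model of the idea, not Informatica's scheduler. The function `execution_waves` is hypothetical, and it needs Python 3.9 or later for `graphlib`. Each link is a `(from_task, to_task)` pair, and tasks that land in the same wave have no link between them, so they could start together.

```python
from graphlib import TopologicalSorter


def execution_waves(links):
    """Group tasks into waves; each wave depends only on earlier waves."""
    predecessors = {}
    for source, target in links:
        predecessors.setdefault(source, set())
        predecessors.setdefault(target, set()).add(source)

    sorter = TopologicalSorter(predecessors)
    sorter.prepare()
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # sorted for stable output
        waves.append(ready)
        sorter.done(*ready)
    return waves


# Parallel: both tasks are linked directly to the start task.
parallel = execution_waves([
    ("Start", "cmd_create_folder"),
    ("Start", "s_m_emp_emp_target"),
])

# Serial: the session task is linked to the command task instead.
serial = execution_waves([
    ("Start", "cmd_create_folder"),
    ("cmd_create_folder", "s_m_emp_emp_target"),
])

print(parallel)
print(serial)
```

In the parallel case both tasks share one wave after the start task; in the serial case each task gets its own wave, which matches the order described above.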

Workflow Variable

Workflow variables allow different tasks in a workflow to exchange information with each other and also allow tasks to access certain properties of other tasks in the workflow. For example, to get the current date you can use the built-in variable “sysdate”.

The most common scenario is when you have multiple tasks in a workflow and one task accesses a variable of another task. For example, suppose you have two tasks in a workflow and the requirement is to execute the second task only when the first task has executed successfully. You can implement such a scenario using a predefined variable in the workflow.

Implementing the scenario

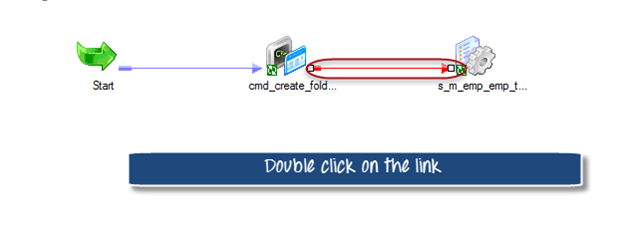

We had a workflow “wkf_run_command” having tasks added in serial mode. Now we will add a condition to the link between session task and command task, so that, only after the success of command task the session task will be executed.

Step 1) Open the workflow “wkf_run_command”

Step 2) Double click on the link between session and command task

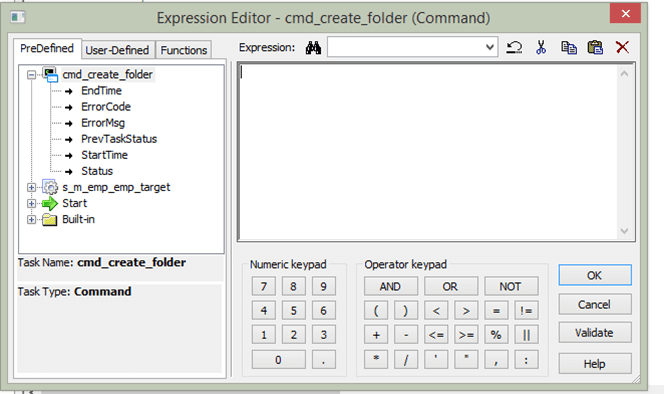

An Expression window will appear

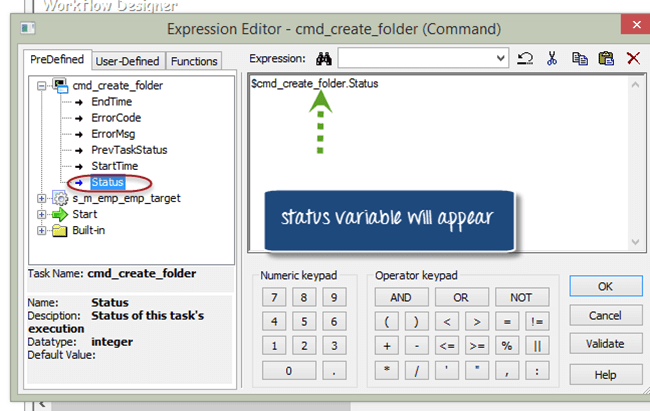

Step 3) Double click the status variable under “cmd_create_folder” menu. A variable “$cmd_create_folder.status” will appear in the editor window on right side.

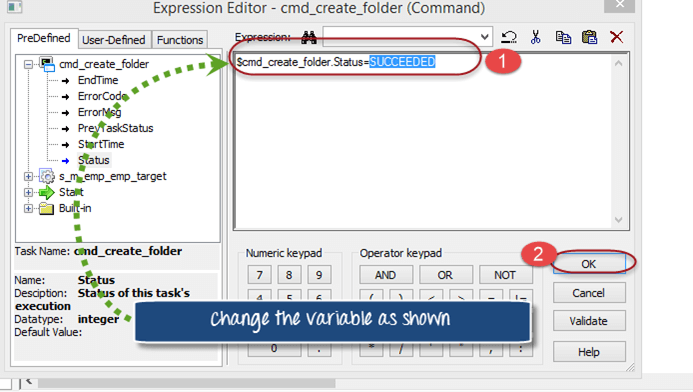

Step 4) Now we will set a condition on the variable “$cmd_create_folder.status” so that it requires the succeeded status. This means that the next session task is executed only when the previous task has executed successfully.

- Change the expression to “$cmd_create_folder.status=SUCCEEDED”.

- Click the OK button

The workflow will look like this

When you execute this workflow, the command task executes first, and the session task is executed only if the command task succeeds.
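The link condition can be pictured as a predicate that is checked before the next task starts. The sketch below models that in Python. It is not Informatica's expression engine, and `run_serial`, `only_on_success`, and the task callables are hypothetical stand-ins.

```python
def run_serial(tasks, link_condition):
    """Run tasks in order; stop when the link condition rejects a status.

    tasks: list of (name, callable) where callable returns True on success.
    link_condition: function(status) -> bool, checked on each outgoing link.
    """
    statuses = {}
    for name, action in tasks:
        statuses[name] = "SUCCEEDED" if action() else "FAILED"
        if not link_condition(statuses[name]):
            break  # downstream tasks are never started
    return statuses


def only_on_success(status):
    # Mirrors the condition $cmd_create_folder.status=SUCCEEDED
    return status == "SUCCEEDED"


ok_run = run_serial(
    [("cmd_create_folder", lambda: True), ("s_m_emp_emp_target", lambda: True)],
    only_on_success,
)
failed_run = run_serial(
    [("cmd_create_folder", lambda: False), ("s_m_emp_emp_target", lambda: True)],
    only_on_success,
)
print(ok_run)
print(failed_run)
```

In the second run the session task never appears in the result, because the condition on the link blocked it once the command task failed.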

Workflow Parameter

Workflow parameters are values that remain constant throughout the run. Once a value is assigned, it remains the same. Parameters can be used in workflow properties, and their values can be defined in parameter files. For example, instead of using a hard-coded connection value, you can use a parameter/variable in the connection name and define its value in the parameter file.

Parameter files are the files in which we define the values of mapping/workflow variables or parameters. In this tutorial these files are given the extension “.par”. As a general standard, a parameter file is created for a workflow.

Advantages of Parameter file

- Helps in migration of code from one environment to other

- Allows easy debugging and testing

- Values can be modified with ease without change in code

Structure of parameter file

The structure of a parameter file is:

- [folder_name.WF:Workflow_name]

- $Parameter_name=Parameter_value

Folder_name is the name of the repository folder, and Workflow_name is the name of the workflow for which you are creating the parameter file.
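To make the layout concrete, the following Python sketch parses text in the format described above into a dictionary keyed by section heading. It handles only the two line types shown here (a bracketed heading and `name=value` pairs). The function name `parse_parameter_file` and the sample folder name `MyFolder` are hypothetical, and the sample values are illustrative.

```python
def parse_parameter_file(text):
    """Parse '[heading]' sections and 'name=value' lines into nested dicts."""
    sections = {}
    current = None
    for raw_line in text.splitlines():
        line = raw_line.strip()
        if not line:
            continue  # skip blank lines
        if line.startswith("[") and line.endswith("]"):
            current = line[1:-1]
            sections[current] = {}
        elif "=" in line and current is not None:
            # Split on the first '=' only, so values may contain '='.
            name, value = line.split("=", 1)
            sections[current][name.strip()] = value.strip()
    return sections


sample = """
[MyFolder.WF:wkf_run_command]
$DBConnection_SRC=guru99
"""

params = parse_parameter_file(sample)
print(params)
```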

We will be creating a parameter file for the database connection “guru99” that we assigned to the sources and targets in our earlier session.

How to create parameter file

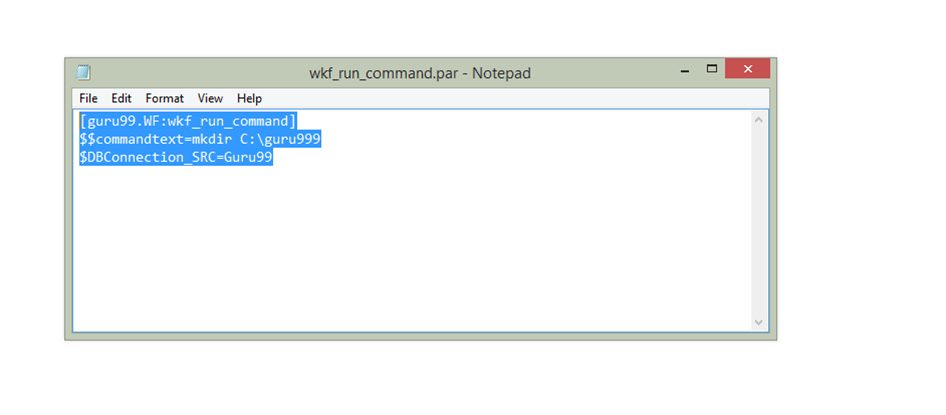

Step 1) Create a new empty file (notepad file)

Step 2) In the file enter text as shown in figure

Step 3) Save the file under a folder guru99 at the location “C:\guru99” as “wkf_run_command.par”

In the file we have created a parameter “$DBConnection_SRC”. We will assign it to a connection in our workflow.

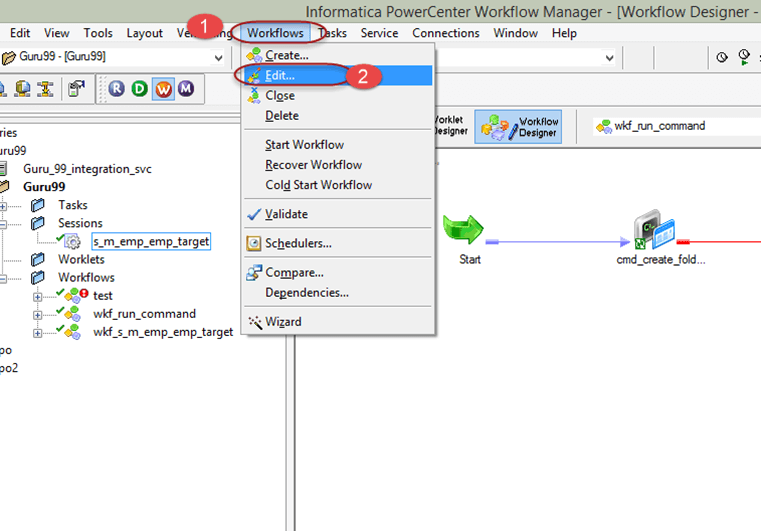

Step 4) Open the workflow “wkf_run_command”

- Select edit option

Step 5) This will open up edit workflow window, in this window

- Go to properties tab menu

- Enter the parameter file name as “c:\guru99\wkf_run_command.par”

Now we are done with defining the parameter file content and pointing the workflow to it.

The next step is to use the parameter in the session.

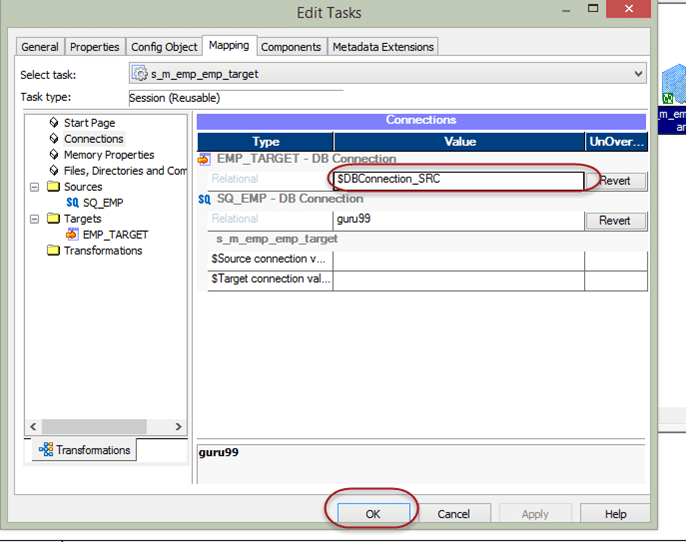

Step 6) In workflow double click on the session “s_m_emp_emp_target”, then

- Select mappings tab menu

- Select connection property in the left panel

- Click on the target connection, which is hardcoded now as “guru99”

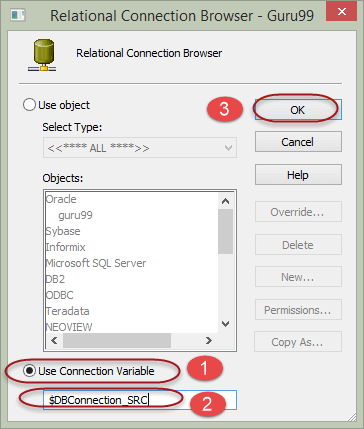

Step 7) A connection browser window will appear, in that window

- Select the option to use connection variable

- Enter connection variable name as “$DBConnection_SRC”

- Select Ok Button

Step 8) In the edit task window, the connection variable will appear for the target. Select the OK button in the edit task window.

Now we are done with creating a parameter for a connection and assigning its value in the parameter file.

When we execute the workflow, it picks up the parameter file, looks up the values of its parameters/variables in that file, and uses those values.
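The lookup described above can be sketched in Python as follows. This is a model of the idea rather than the Integration Service's actual logic. `resolve_connection`, the two parameter dictionaries, and the `guru99_prod` connection name are hypothetical.

```python
def resolve_connection(configured_value, parameters):
    """Return the connection name a session would use.

    A value starting with '$' is treated as a connection variable and
    looked up in the parameters; anything else is a hardcoded name.
    """
    if configured_value.startswith("$"):
        if configured_value not in parameters:
            raise KeyError(f"{configured_value} is not defined in the parameter file")
        return parameters[configured_value]
    return configured_value


# Values as they might be read from two environment-specific parameter files.
dev_parameters = {"$DBConnection_SRC": "guru99"}
prod_parameters = {"$DBConnection_SRC": "guru99_prod"}

# The session configuration is identical in both environments.
session_connection = "$DBConnection_SRC"

print(resolve_connection(session_connection, dev_parameters))   # guru99
print(resolve_connection(session_connection, prod_parameters))  # guru99_prod
print(resolve_connection("guru99", prod_parameters))            # guru99
```

The session definition stays the same in both cases, and only the parameter file changes. This is the migration advantage listed earlier.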




Assignment Task


Friday, June 21, 2013

8 comments:

Hi Admin, May I know how are you increasing value by one for $$Session_Counter after each successful run?

Hi Vaibhav, please see the 9th point in the post above: "9. Enter the value or expression you want to assign." If you still have doubts, please let me know.

Hi, nice solution, but I have multiple sessions in my workflow. Is there any technique other than using an Assignment task at the session level?

Hi goutham, I don't know how to assign the values of workflow variables to a target.

How can we handle the case where the source file name arrives with a date and time appended, but we do not want that date and time in the target document name?

Can we assign the value of the variable in the parameter file?

If you need to, yes, it can be assigned from a parameter file, but it depends on your requirement.



Data Director Implementation Guide

- Updated : December 2019

- Multidomain MDM 10.3 HotFix 2


Task Assignment Configuration


You can add an Assignment task to the workflow to assign another value to the variable. The Data Integration Service uses the assigned value for the variable during the remainder of the workflow. For example, you create a counter variable and set the initial value to 0. In the Assignment task, you increment the variable by setting the variable ...
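The counter example can be modeled in a few lines. This is a sketch of the idea only and not Informatica syntax. The function `run_assignment_task`, the variable name `Counter`, and the increment expression are assumptions, since the source text is truncated before it gives the exact expression.

```python
def run_assignment_task(variables, assignments):
    """Apply each (variable name, expression) pair in order.

    Each expression is a function of the current variables, standing in
    for the expression entered on the Assignment task.
    """
    for name, expression in assignments:
        variables[name] = expression(variables)
    return variables


# Hypothetical counter variable with the initial value 0 from the example.
workflow_variables = {"Counter": 0}
increment = [("Counter", lambda v: v["Counter"] + 1)]

# Each pass through the task reassigns the variable; later tasks see the new value.
run_assignment_task(workflow_variables, increment)
run_assignment_task(workflow_variables, increment)
print(workflow_variables["Counter"])
```

The point of the model is that the assigned value replaces the initial value for every task that runs afterward in the same workflow.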

Click Create. Then click Done. The Workflow Designer creates and adds the Assignment task to the workflow. Double-click the Assignment task to open the Edit Task dialog box. On the Expressions tab, click Add to add an assignment. Click the Open button in the User Defined Variables field. Select the variable for which you want to assign a value.

An Assignment task assigns a value to a user-defined workflow variable. Add an Assignment task before the Mapping task in the workflow. Use an expression in the Assignment task to assign the value of the department name parameter and the current date to the workflow variable. Right-click the workflow editor and select Add Workflow Object.

As a workflow progresses, the Data Integration Service can calculate and change the initial variable value according to how you configure the workflow. You can assign a value to a user-defined variable using an Assignment task. Or, you can assign a value to a user-defined variable using task output.

In the workflow, create a non-reusable task (s_m_dummy) pointing to the mapping. In the session, click components > post session on success variable assignment > Assign mapping variable to workflow variable for both parameters. In the same workflow create a command task and link it from session s_m_dummy. In the command task edit the command as ...

For example, each task includes an Is Successful output value that indicates whether the task ran successfully. The workflow cannot directly access this task output data. To use the data in the remainder of the workflow, you create a boolean workflow variable named TaskSuccessful and assign the Is Successful output to the variable.

Before you can define a variable in the assignment task, you need to add the variable in Workflow Manager. To add a variable to the Workflow, perform the following steps: In Workflow Manager, open the workflow for which you wish to define user-defined variables. Navigate to Workflow | Edit | Variables. Click on the Add a new variable option to ...

Step 4. Create the Principal Name and Keytab Files in the Active Directory Server. Step 5. Specify the Kerberos Authentication Properties for the Data Integration Service. Step 6. Configure the Execution Options for the Data Integration Service. Configuring Big Data Management to Access an SSL-Enabled Cluster.

The Beginner Level of the learning path will enable you to understand DEI fundamentals. It consists of videos, webinars, and other documents on introduction to DEI, DEI architecture, Blaze architecture, use cases on Azure, Amazon cloud and many other ecosystems, integration with AWS and many more. After you have successfully completed finish all ...

Easy option 1: This is a simple copy and paste. Copy the contents of the file custom_task_assignment.sql, found in C:\InfaMDM95-JB\hub\resourcekit\samples\BDD\task-assignment\custom_task_assignment.sql, into the CMX_UE.ASSIGN_TASKS packaged procedure definition. Modify the code you copied into CMX_UE.

Introduction. Informatica® Big Data Management™ allows users to build big data pipelines that can be seamlessly ported to any big data ecosystem such as Amazon AWS, Azure HDInsight and so on. A pipeline built in Big Data Management (BDM) is known as a mapping and typically defines a data flow from one or more sources to one or more targets with optional transformations in between.

Step 1) In the Informatica Designer, click the Workflow Manager icon. Step 2) This opens the Workflow Manager window. In Workflow Manager, we are going to connect to the repository "guru99", so double-click the folder to connect. Enter the user name and password, then select the "Connect" button.


Tasks Overview. The Workflow Manager contains many types of tasks to help you build workflows and worklets. You can create reusable tasks in the Task Developer. Or, create and add tasks in the Workflow or Worklet Designer as you develop the workflow. The following table summarizes workflow tasks available in Workflow Manager: Task Name. Tool.

Use a Command Task step to run shell scripts or batch commands from multiple script files on the Secure Agent machine. For example, you can use a command task to move a file, copy a file, zip or unzip a file, or run clean scripts or SQL scripts as part of a taskflow. You can use the Command Task outputs to orchestrate subsequent tasks in the ...

We will use Decision Transformation to achieve this. We define a strategy and concatenate the results of the previous if statement to the current one and obtain the entire result. The mapping looks like the following: The strategy definition is: The output port product is reused in the next if statement. That is, the result of next if statement ...

To create an Informatica Command Task, First, go to the Task Developer Tab. Next, navigate to Tasks Menu and select the Create option. Once you select the Create option, a new window called Create Task will open. First, select the Command Task, provide a unique name for this Task and click on the Create button.

The Integration Service evaluates the condition in the Decision task and sets the predefined condition variable to True (1) or False (0). We can specify one decision condition per Decision task. Depending on the workflow, we might use link conditions instead of a Decision task.


Introduction to Informatica Big Data Management. Data Engineering Integration 10.2.2.



HOW TO: Remove Task Buttons using ActiveVOS Designer in MDM FAQ: Is it possible to auto-assign an ActiveVOS task to user in E360 application? HOW TO: Import the OOTB MDM BE ActiveVOS workflow in ActiveVOS Designer for referring and doing any custom changes

For information about these tasks, see the Informatica Application Service Guide. Creating an Application. When you create an application, you select the objects to include in the application. Create an application when you want to deploy one or more objects so end users can access the data through third-party tools. 1.

tasks can be assigned to users with either a Data Steward role or a Customer-NY role. Tasks of type UpdateRejectedRecord can be assigned only to one user (user1). task, with the name of one of the task types defined in the IDD configuration. Additionally, it must contain either one or more child element's security Role, or user attribute with ...