About Project Euler

What is Project Euler?

Project Euler is a series of challenging mathematical/computer programming problems that will require more than just mathematical insights to solve. Although mathematics will help you arrive at elegant and efficient methods, the use of a computer and programming skills will be required to solve most problems. The motivation for starting Project Euler, and its continuation, is to provide a platform for the inquiring mind to delve into unfamiliar areas and learn new concepts in a fun and recreational context.

Who are the problems aimed at?

The intended audience includes students for whom the basic curriculum is not feeding their hunger to learn, adults whose background was not primarily mathematics but who have an interest in things mathematical, and professionals who want to keep their problem solving and mathematics on the cutting edge.

Currently we have 1306553 registered members who have solved at least one problem, representing 220 locations throughout the world, and collectively using 113 different programming languages to solve the problems.

Can anyone solve the problems?

The problems range in difficulty, and for many the experience is inductive chain learning. That is, solving one problem will expose you to a new concept that allows you to undertake a previously inaccessible problem. So the determined participant will slowly but surely work their way through every problem.

In order to track your progress it is necessary to set up an account and have cookies enabled.

If you already have an account, then Sign In. Otherwise, please Register – it's completely free!

However, as the problems are challenging, you may wish to view the Problems before registering.

"Project Euler exists to encourage, challenge, and develop the skills and enjoyment of anyone with an interest in the fascinating world of mathematics."




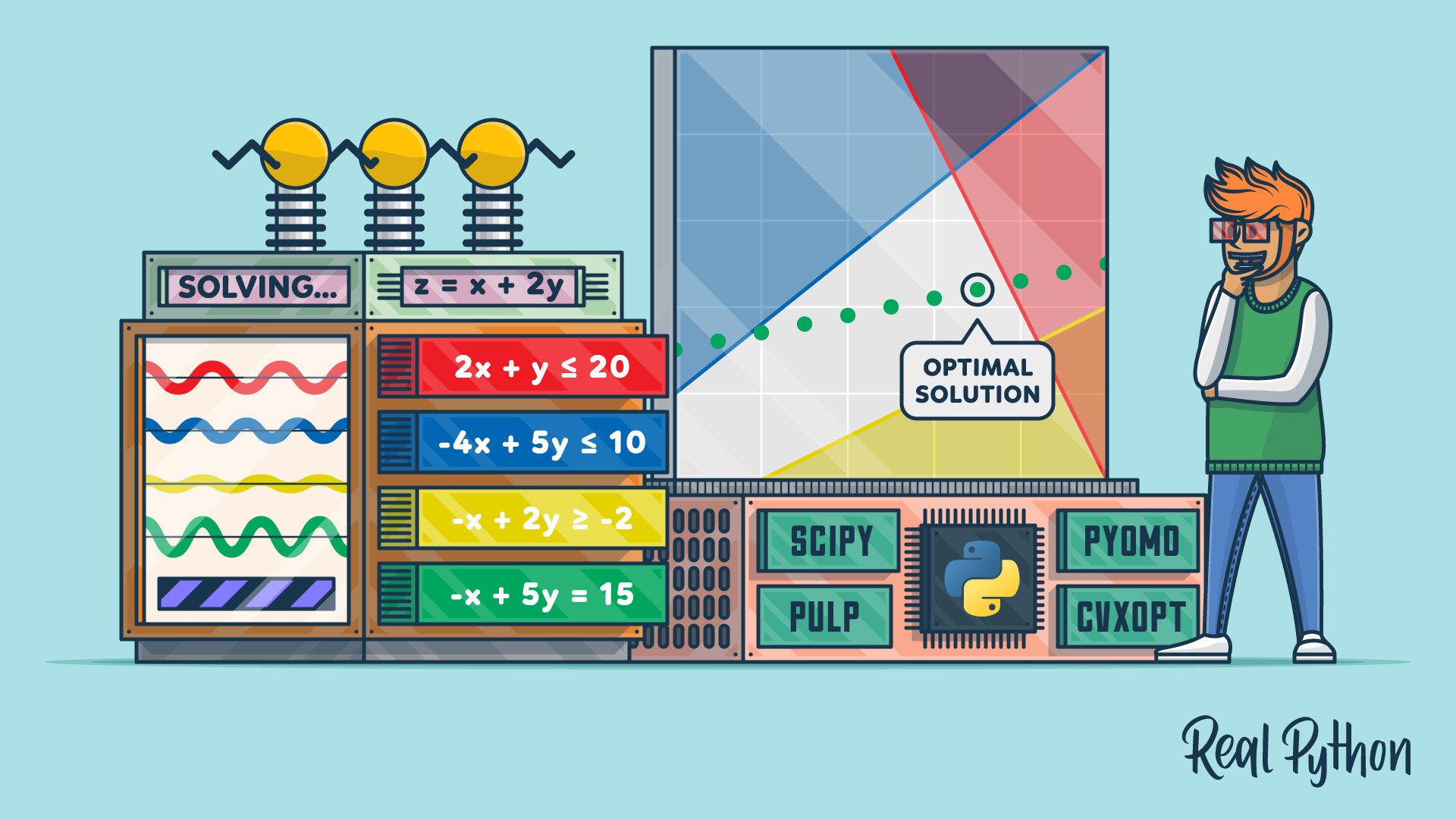

Hands-On Linear Programming: Optimization With Python

Table of Contents

- What Is Linear Programming?

- What Is Mixed-Integer Linear Programming?

- Why Is Linear Programming Important?

- Linear Programming With Python

- Small Linear Programming Problem

- Infeasible Linear Programming Problem

- Unbounded Linear Programming Problem

- Resource Allocation Problem

- Installing SciPy and PuLP

- Using SciPy

- Linear Programming Resources

- Linear Programming Solvers

Linear programming is a set of techniques used in mathematical programming, sometimes called mathematical optimization, to solve systems of linear equations and inequalities while maximizing or minimizing some linear function. It’s important in fields like scientific computing, economics, technical sciences, manufacturing, transportation, military, management, energy, and so on.

The Python ecosystem offers several comprehensive and powerful tools for linear programming. You can choose between simple and complex tools as well as between free and commercial ones. It all depends on your needs.

In this tutorial, you’ll learn:

- What linear programming is and why it’s important

- Which Python tools are suitable for linear programming

- How to build a linear programming model in Python

- How to solve a linear programming problem with Python

You’ll first learn about the fundamentals of linear programming. Then you’ll explore how to implement linear programming techniques in Python. Finally, you’ll look at resources and libraries to help further your linear programming journey.


Linear Programming Explanation

In this section, you’ll learn the basics of linear programming and a related discipline, mixed-integer linear programming. In the next section, you’ll see some practical linear programming examples. Later, you’ll solve linear programming and mixed-integer linear programming problems with Python.

Imagine that you have a system of linear equations and inequalities. Such systems often have many possible solutions. Linear programming is a set of mathematical and computational tools that allows you to find a particular solution to this system that corresponds to the maximum or minimum of some other linear function.

Mixed-integer linear programming is an extension of linear programming. It handles problems in which at least one variable takes a discrete integer rather than a continuous value. Although mixed-integer problems look similar to continuous variable problems at first sight, they offer significant advantages in terms of flexibility and precision.

Integer variables are important for properly representing quantities naturally expressed with integers, like the number of airplanes produced or the number of customers served.

A particularly important kind of integer variable is the binary variable. It can take only the values zero or one and is useful in making yes-or-no decisions, such as whether a plant should be built or if a machine should be turned on or off. You can also use them to mimic logical constraints.

Linear programming is a fundamental optimization technique that’s been used for decades in science- and math-intensive fields. It’s precise, relatively fast, and suitable for a range of practical applications.

Mixed-integer linear programming allows you to overcome many of the limitations of linear programming. You can approximate non-linear functions with piecewise linear functions, use semi-continuous variables, model logical constraints, and more. It’s a computationally intensive tool, but the advances in computer hardware and software make it more applicable every day.

Often, when people try to formulate and solve an optimization problem, the first question is whether they can apply linear programming or mixed-integer linear programming.

Some use cases of linear programming and mixed-integer linear programming are illustrated in the following articles:

- Gurobi Optimization Case Studies

- Five Areas of Application for Linear Programming Techniques

The importance of linear programming, and especially mixed-integer linear programming, has increased over time as computers have gotten more capable, algorithms have improved, and more user-friendly software solutions have become available.

The basic method for solving linear programming problems is called the simplex method, which has several variants. Another popular approach is the interior-point method.

Mixed-integer linear programming problems are solved with more complex and computationally intensive methods like the branch-and-bound method, which uses linear programming under the hood. Some variants of this method are the branch-and-cut method, which involves the use of cutting planes, and the branch-and-price method.

There are several suitable and well-known Python tools for linear programming and mixed-integer linear programming. Some of them are open source, while others are proprietary. Whether you need a free or paid tool depends on the size and complexity of your problem as well as on the need for speed and flexibility.

It’s worth mentioning that almost all widely used linear programming and mixed-integer linear programming libraries are written in Fortran, C, or C++. This is because linear programming requires computationally intensive work with (often large) matrices. Such libraries are called solvers. The Python tools are just wrappers around the solvers.

Python is suitable for building wrappers around native libraries because it works well with C/C++. You’re not going to need any C/C++ (or Fortran) for this tutorial, but if you want to learn more about this cool feature, then check out the following resources:

- Building a Python C Extension Module

- CPython Internals

- Extending Python with C or C++

Basically, when you define and solve a model, you use Python functions or methods to call a low-level library that does the actual optimization job and returns the solution to your Python object.

Several free Python libraries are specialized to interact with linear or mixed-integer linear programming solvers:

- SciPy Optimization and Root Finding

- PuLP

- Pyomo

- CVXOPT

In this tutorial, you’ll use SciPy and PuLP to define and solve linear programming problems.

Linear Programming Examples

In this section, you’ll see two examples of linear programming problems:

- A small problem that illustrates what linear programming is

- A practical problem related to resource allocation that illustrates linear programming concepts in a real-world scenario

You’ll use Python to solve these two problems in the next section.

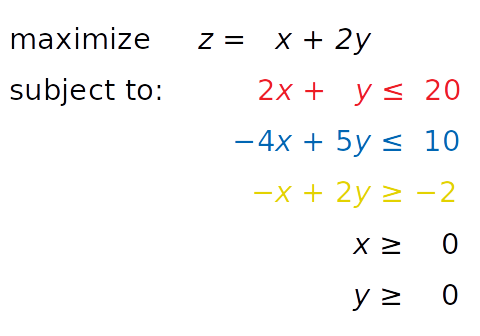

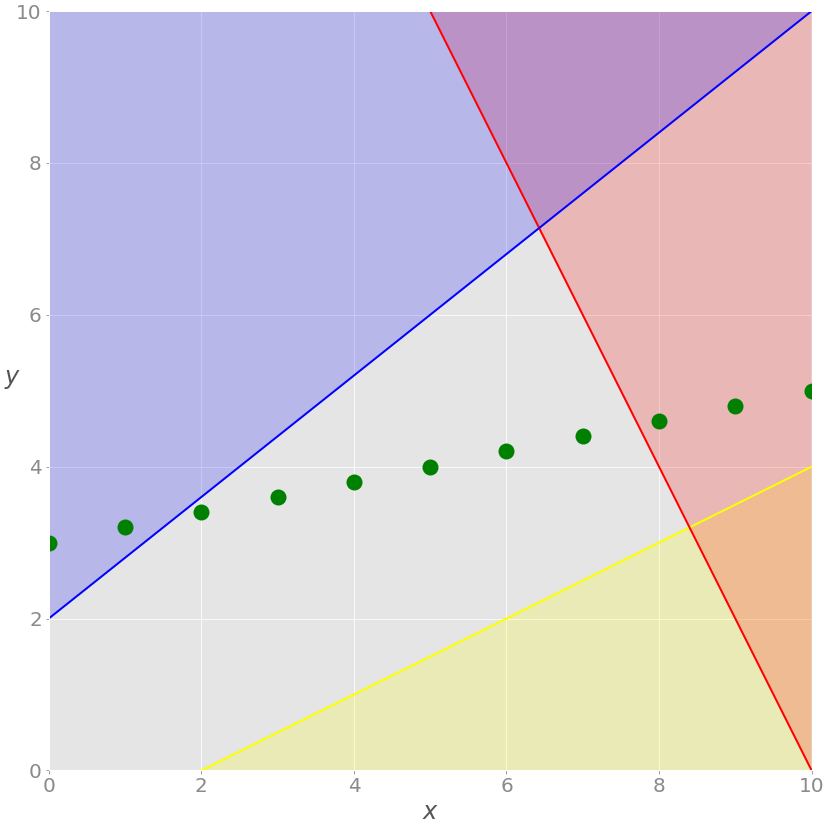

Consider the following linear programming problem:

You need to find x and y such that the red, blue, and yellow inequalities, as well as the inequalities x ≥ 0 and y ≥ 0, are satisfied. At the same time, your solution must correspond to the largest possible value of z.

The independent variables you need to find (in this case x and y) are called the decision variables. The function of the decision variables to be maximized or minimized (in this case z) is called the objective function, the cost function, or just the goal. The inequalities you need to satisfy are called the inequality constraints. You can also have equations among the constraints called equality constraints.
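Pieced together from the equations quoted throughout this tutorial (the objective z = x + 2y and the red, blue, and yellow lines), the problem can be written as follows. The color labels are annotations only:

```latex
\begin{aligned}
\text{maximize}\quad & z = x + 2y \\
\text{subject to}\quad & 2x + y \le 20 && \text{(red)} \\
& -4x + 5y \le 10 && \text{(blue)} \\
& -x + 2y \ge -2 && \text{(yellow)} \\
& x \ge 0,\ y \ge 0
\end{aligned}
```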

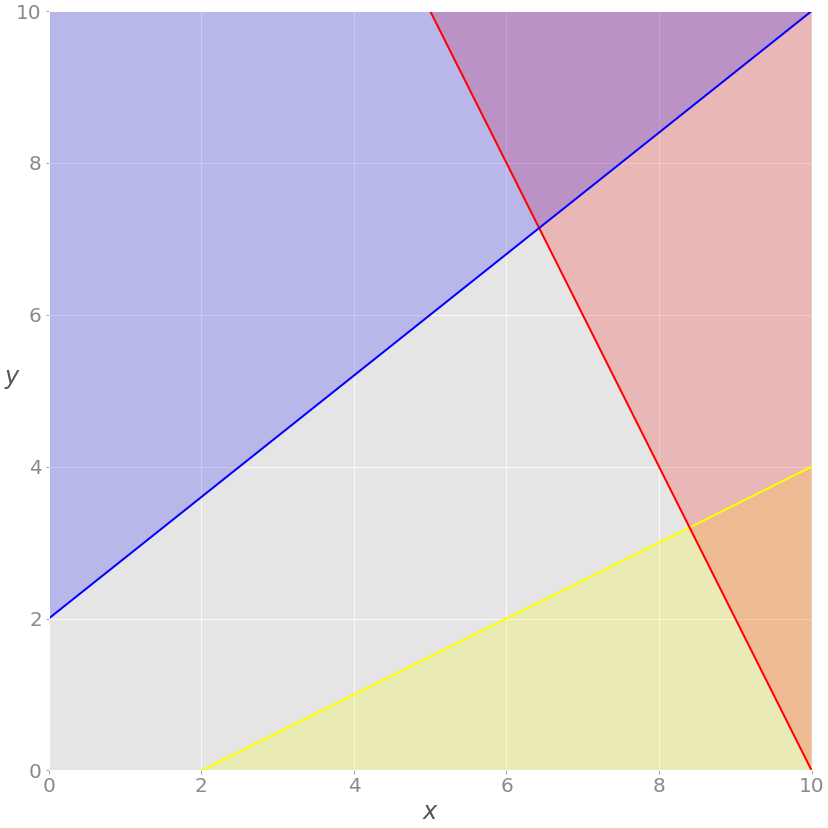

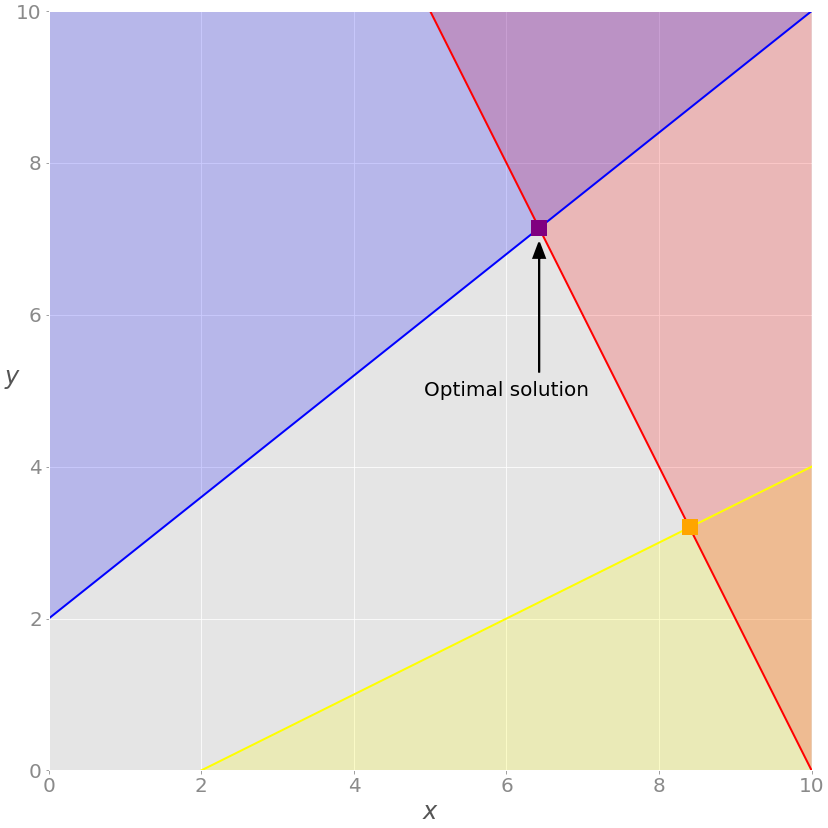

This is how you can visualize the problem:

The red line represents the function 2x + y = 20, and the red area above it shows where the red inequality is not satisfied. Similarly, the blue line is the function −4x + 5y = 10, and the blue area is forbidden because it violates the blue inequality. The yellow line is −x + 2y = −2, and the yellow area below it is where the yellow inequality isn’t valid.

If you disregard the red, blue, and yellow areas, only the gray area remains. Each point of the gray area satisfies all constraints and is a potential solution to the problem. This area is called the feasible region, and its points are feasible solutions. In this case, there’s an infinite number of feasible solutions.

You want to maximize z. The feasible solution that corresponds to maximal z is the optimal solution. If you were trying to minimize the objective function instead, then the optimal solution would correspond to its feasible minimum.

Note that z is linear. You can imagine it as a plane in three-dimensional space. This is why the optimal solution must be on a vertex, or corner, of the feasible region. In this case, the optimal solution is the point where the red and blue lines intersect, as you’ll see later.

Sometimes a whole edge of the feasible region, or even the entire region, can correspond to the same value of z. In that case, you have many optimal solutions.
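The vertex property can be checked by brute force on this small problem. The sketch below is not how real solvers work: it simply intersects every pair of constraint lines, keeps the intersection points that satisfy all constraints, and evaluates z at each of them. The helper names (`intersection`, `is_feasible`, `objective`) are made up for this illustration, and the coefficients come from the inequalities quoted in this tutorial.

```python
from itertools import combinations

# Each constraint is stored as (a, b, c), meaning a*x + b*y <= c.
constraints = [
    (2, 1, 20),    # red:    2x + y <= 20
    (-4, 5, 10),   # blue:  -4x + 5y <= 10
    (1, -2, 2),    # yellow: -x + 2y >= -2, multiplied by -1
    (-1, 0, 0),    # x >= 0
    (0, -1, 0),    # y >= 0
]


def intersection(c1, c2):
    """Return the point where two constraint lines cross, or None if parallel."""
    a1, b1, r1 = c1
    a2, b2, r2 = c2
    det = a1 * b2 - a2 * b1
    if det == 0:
        return None
    # Cramer's rule for the 2x2 system.
    return ((r1 * b2 - r2 * b1) / det, (a1 * r2 - a2 * r1) / det)


def is_feasible(point, tol=1e-9):
    """Check a point against every constraint, with a small tolerance."""
    x, y = point
    return all(a * x + b * y <= c + tol for a, b, c in constraints)


def objective(point):
    x, y = point
    return x + 2 * y


vertices = []
for c1, c2 in combinations(constraints, 2):
    point = intersection(c1, c2)
    if point is not None and is_feasible(point):
        vertices.append(point)

best = max(vertices, key=objective)
print(best, objective(best))
```

The best vertex is the intersection of the red and blue lines, (45/7, 50/7), which is roughly (6.43, 7.14) with z ≈ 20.71.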

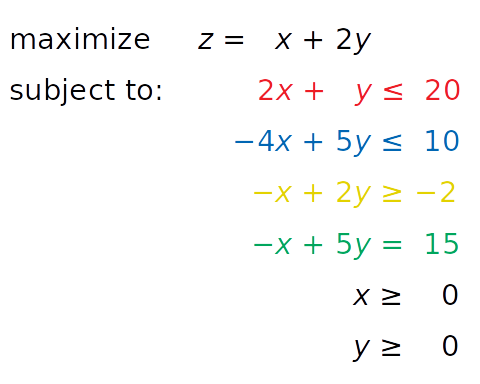

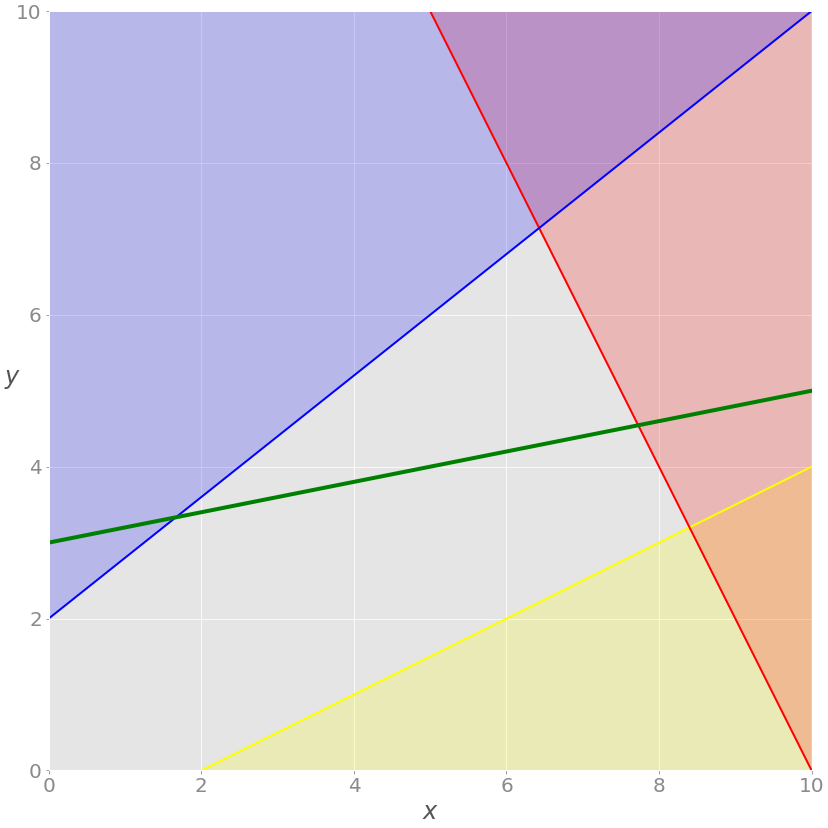

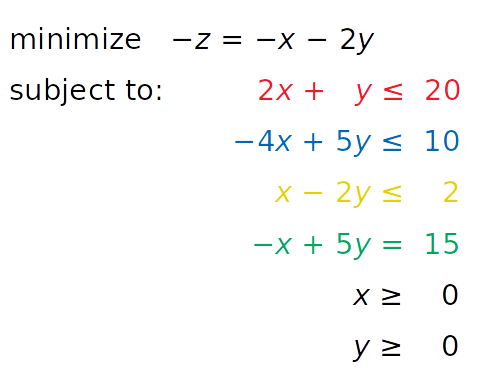

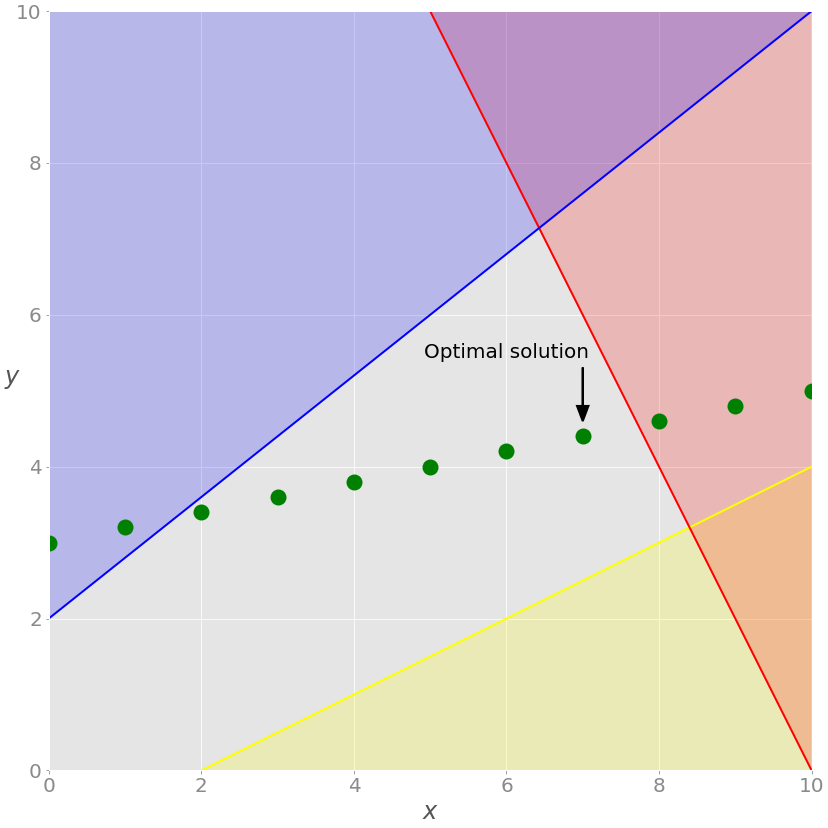

You’re now ready to expand the problem with the additional equality constraint shown in green:

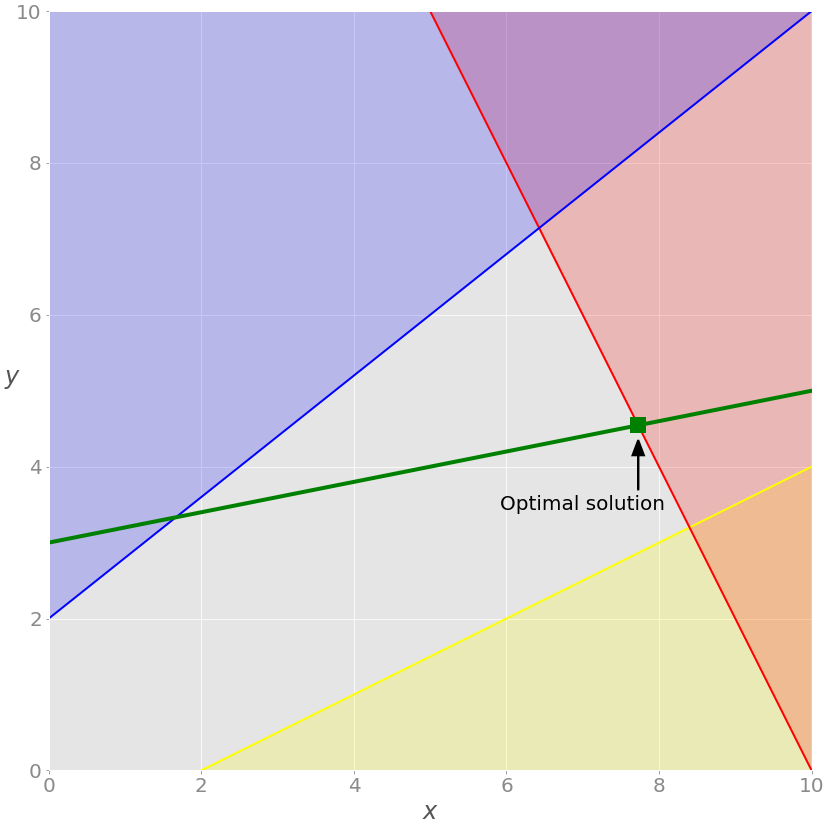

The equation −x + 5y = 15, written in green, is new. It’s an equality constraint. You can visualize it by adding a corresponding green line to the previous image:

The solution now must satisfy the green equality, so the feasible region isn’t the entire gray area anymore. It’s the part of the green line passing through the gray area from the intersection point with the blue line to the intersection point with the red line. The latter point is the solution.

If you insert the demand that all values of x must be integers, then you’ll get a mixed-integer linear programming problem, and the set of feasible solutions will change once again:

You no longer have the green line, only the points along the line where the value of x is an integer. The feasible solutions are the green points on the gray background, and the optimal one in this case is nearest to the red line.

These three examples illustrate feasible linear programming problems because they have bounded feasible regions and finite solutions.

A linear programming problem is infeasible if it doesn’t have a solution. This usually happens when no solution can satisfy all constraints at once.

For example, consider what would happen if you added the constraint x + y ≤ −1. Then at least one of the decision variables (x or y) would have to be negative. This is in conflict with the given constraints x ≥ 0 and y ≥ 0. Such a system doesn’t have a feasible solution, so it’s called infeasible.

Another example would be adding a second equality constraint parallel to the green line. These two lines wouldn’t have a point in common, so there wouldn’t be a solution that satisfies both constraints.

A linear programming problem is unbounded if its feasible region isn’t bounded and the solution is not finite. This means that at least one of your variables isn’t constrained and can grow toward positive or negative infinity, making the objective infinite as well.

For example, say you take the initial problem above and drop the red and yellow constraints. Dropping constraints out of a problem is called relaxing the problem. In such a case, x and y wouldn’t be bounded on the positive side. You’d be able to increase them toward positive infinity, yielding an infinitely large z value.
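Both situations can be reproduced with SciPy’s `linprog()`, which this tutorial introduces in the implementation section. The following is a minimal sketch that assumes SciPy is installed and relies on `linprog()`’s default bounds of zero to positive infinity. The objective is negated because `linprog()` minimizes. The exact status code and message reported for each case may differ between SciPy versions, so the sketch only prints them.

```python
from scipy.optimize import linprog

# Minimize -z = -x - 2y, which is the same as maximizing z = x + 2y.
obj = [-1, -2]

# Infeasible variant: the red, blue, and yellow inequalities plus x + y <= -1.
infeasible = linprog(
    c=obj,
    A_ub=[[2, 1], [-4, 5], [1, -2], [1, 1]],
    b_ub=[20, 10, 2, -1],
)

# Unbounded variant: the red and yellow inequalities are dropped,
# so only the blue one remains.
unbounded = linprog(c=obj, A_ub=[[-4, 5]], b_ub=[10])

for label, result in [("infeasible", infeasible), ("unbounded", unbounded)]:
    print(label, result.success, result.status, result.message)
```

In both cases `.success` is `False`, so neither result holds a usable optimum.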

In the previous sections, you looked at an abstract linear programming problem that wasn’t tied to any real-world application. In this subsection, you’ll find a more concrete and practical optimization problem related to resource allocation in manufacturing.

Say that a factory produces four different products, and that the daily produced amount of the first product is x₁, the amount produced of the second product is x₂, and so on. The goal is to determine the profit-maximizing daily production amount for each product, bearing in mind the following conditions:

1. The profit per unit of product is $20, $12, $40, and $25 for the first, second, third, and fourth product, respectively.

2. Due to manpower constraints, the total number of units produced per day can’t exceed fifty.

3. For each unit of the first product, three units of the raw material A are consumed. Each unit of the second product requires two units of the raw material A and one unit of the raw material B. Each unit of the third product needs one unit of A and two units of B. Finally, each unit of the fourth product requires three units of B.

4. Due to the transportation and storage constraints, the factory can consume up to one hundred units of the raw material A and ninety units of B per day.

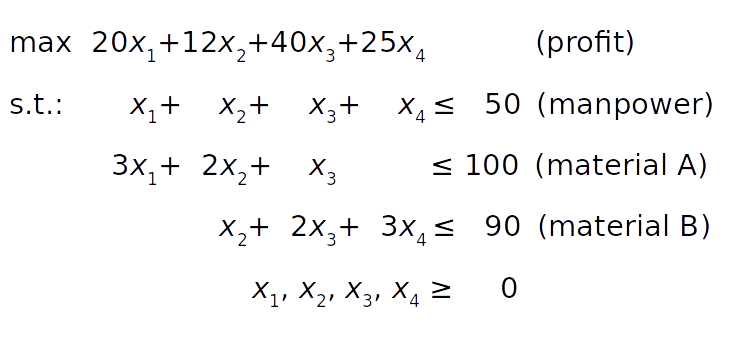

The mathematical model can be defined like this:

The objective function (profit) is defined in condition 1. The manpower constraint follows from condition 2. The constraints on the raw materials A and B can be derived from conditions 3 and 4 by summing the raw material requirements for each product.

Finally, the product amounts can’t be negative, so all decision variables must be greater than or equal to zero.
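Written out from conditions 1 to 4 and the non-negativity requirement, the model reads as follows. The labels in parentheses are annotations only:

```latex
\begin{aligned}
\text{maximize}\quad & 20x_1 + 12x_2 + 40x_3 + 25x_4 && \text{(profit)} \\
\text{subject to}\quad & x_1 + x_2 + x_3 + x_4 \le 50 && \text{(manpower)} \\
& 3x_1 + 2x_2 + x_3 \le 100 && \text{(material A)} \\
& x_2 + 2x_3 + 3x_4 \le 90 && \text{(material B)} \\
& x_1, x_2, x_3, x_4 \ge 0
\end{aligned}
```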

Unlike the previous example, you can’t conveniently visualize this one because it has four decision variables. However, the principles remain the same regardless of the dimensionality of the problem.

Linear Programming Python Implementation

In this tutorial, you’ll use two Python packages to solve the linear programming problem described above:

- SciPy is a general-purpose package for scientific computing with Python.

- PuLP is a Python linear programming API for defining problems and invoking external solvers.

SciPy is straightforward to set up. Once you install it, you’ll have everything you need to start. Its subpackage scipy.optimize can be used for both linear and nonlinear optimization.

PuLP allows you to choose solvers and formulate problems in a more natural way. The default solver used by PuLP is the COIN-OR Branch and Cut Solver (CBC). It’s connected to the COIN-OR Linear Programming Solver (CLP) for linear relaxations and the COIN-OR Cut Generator Library (CGL) for cut generation.

Another great open source solver is the GNU Linear Programming Kit (GLPK). Some well-known and very powerful commercial and proprietary solutions are Gurobi, CPLEX, and XPRESS.

Besides offering flexibility when defining problems and the ability to run various solvers, PuLP is less complicated to use than alternatives like Pyomo or CVXOPT, which require more time and effort to master.

To follow this tutorial, you’ll need to install SciPy and PuLP. The examples below use version 1.4.1 of SciPy and version 2.1 of PuLP.

You can install both using pip :

You might need to run pulptest or sudo pulptest to enable the default solvers for PuLP, especially if you’re using Linux or Mac:

Optionally, you can download, install, and use GLPK. It’s free and open source and works on Windows, MacOS, and Linux. You’ll see how to use GLPK (in addition to CBC) with PuLP later in this tutorial.

On Windows, you can download the archives and run the installation files.

On MacOS, you can use Homebrew :

On Debian and Ubuntu, use apt to install glpk and glpk-utils :

On Fedora, use dnf with glpk-utils :

You might also find conda useful for installing GLPK:

After completing the installation, you can check the version of GLPK:

See GLPK’s tutorials on installing with Windows executables and Linux packages for more information.

In this section, you’ll learn how to use the SciPy optimization and root-finding library for linear programming.

To define and solve optimization problems with SciPy, you need to import scipy.optimize.linprog():

Now that you have linprog() imported, you can start optimizing.

Let’s first solve the linear programming problem from above:

linprog() solves only minimization (not maximization) problems and doesn’t allow inequality constraints with the greater than or equal to sign (≥). To work around these issues, you need to modify your problem before starting optimization:

- Instead of maximizing z = x + 2y, you can minimize its negative (−z = −x − 2y).

- Instead of having the greater than or equal to sign, you can multiply the yellow inequality by −1 and get the opposite less than or equal to sign (≤).

After introducing these changes, you get a new system:

This system is equivalent to the original and will have the same solution. The only reason to apply these changes is to overcome the limitations of SciPy related to the problem formulation.
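Applying the two changes to the problem with the green equality constraint gives the system below. It is reconstructed from the equations quoted in this tutorial. Only the objective and the yellow row differ from the original formulation:

```latex
\begin{aligned}
\text{minimize}\quad & -z = -x - 2y \\
\text{subject to}\quad & 2x + y \le 20 && \text{(red)} \\
& -4x + 5y \le 10 && \text{(blue)} \\
& x - 2y \le 2 && \text{(yellow)} \\
& -x + 5y = 15 && \text{(green)} \\
& x \ge 0,\ y \ge 0
\end{aligned}
```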

The next step is to define the input values:

You put the values from the system above into the appropriate lists, tuples, or NumPy arrays:

- obj holds the coefficients from the objective function.

- lhs_ineq holds the left-side coefficients from the inequality (red, blue, and yellow) constraints.

- rhs_ineq holds the right-side coefficients from the inequality (red, blue, and yellow) constraints.

- lhs_eq holds the left-side coefficients from the equality (green) constraint.

- rhs_eq holds the right-side coefficients from the equality (green) constraint.

Note: Be careful with the order of rows and columns!

The order of the rows for the left and right sides of the constraints must be the same. Each row represents one constraint.

The order of the coefficients from the objective function and left sides of the constraints must match. Each column corresponds to a single decision variable.

The next step is to define the bounds for each variable in the same order as the coefficients. In this case, they’re both between zero and positive infinity:

This statement is redundant because linprog() takes these bounds (zero to positive infinity) by default.

Note: Instead of float("inf"), you can use math.inf, numpy.inf, or scipy.inf.

Finally, it’s time to optimize and solve your problem of interest. You can do that with linprog() :

The parameter c refers to the coefficients from the objective function. A_ub and b_ub are related to the coefficients from the left and right sides of the inequality constraints, respectively. Similarly, A_eq and b_eq refer to equality constraints. You can use bounds to provide the lower and upper bounds on the decision variables.
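The steps described in this section can be put together as in the following sketch. The variable names (`obj`, `lhs_ineq`, `rhs_ineq`, `lhs_eq`, `rhs_eq`) follow the descriptions above, `bnd` is an assumed name for the bounds, and the coefficients are taken from the transformed system. The `method` argument is left out so that SciPy picks its default, which depends on the installed version.

```python
from scipy.optimize import linprog

# Coefficients of the minimized objective -z = -x - 2y.
obj = [-1, -2]

# Left and right sides of the inequality constraints, one row per constraint.
lhs_ineq = [
    [2, 1],    # red:     2x +  y <= 20
    [-4, 5],   # blue:   -4x + 5y <= 10
    [1, -2],   # yellow:   x - 2y <=  2
]
rhs_ineq = [20, 10, 2]

# Left and right sides of the equality (green) constraint: -x + 5y = 15.
lhs_eq = [[-1, 5]]
rhs_eq = [15]

# Bounds for x and y, in the same order as the objective coefficients.
bnd = [(0, float("inf")), (0, float("inf"))]

opt = linprog(
    c=obj,
    A_ub=lhs_ineq,
    b_ub=rhs_ineq,
    A_eq=lhs_eq,
    b_eq=rhs_eq,
    bounds=bnd,
)

print(opt.success, opt.fun, opt.x)
```

The optimum lies where the green and red lines meet, at x = 85/11 ≈ 7.73 and y = 50/11 ≈ 4.55. The reported `opt.fun` is the minimized value −z ≈ −16.82, so the maximal z is about 16.82.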

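You can use the parameter method to define the linear programming method that you want to use. Newer SciPy releases default to the HiGHS solvers (method="highs") and have dropped the legacy methods, but in SciPy 1.4.1, the version used here, there are three options: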

- method="interior-point" selects the interior-point method. This option is set by default.

- method="revised simplex" selects the revised two-phase simplex method.

- method="simplex" selects the legacy two-phase simplex method.

linprog() returns a data structure with these attributes:

- .con is the equality constraints residuals.

- .fun is the objective function value at the optimum (if found).

- .message is the status of the solution.

- .nit is the number of iterations needed to finish the calculation.

- .slack is the values of the slack variables, or the differences between the values of the left and right sides of the constraints.

- .status is an integer between 0 and 4 that shows the status of the solution, such as 0 for when the optimal solution has been found.

- .success is a Boolean that shows whether the optimal solution has been found.

- .x is a NumPy array holding the optimal values of the decision variables.

You can access these values separately:

That’s how you get the results of optimization. You can also show them graphically:

As discussed earlier, the optimal solutions to linear programming problems lie at the vertices of the feasible regions. In this case, the feasible region is just the portion of the green line between the blue and red lines. The optimal solution is the green square that represents the point of intersection between the green and red lines.

If you want to exclude the equality (green) constraint, just drop the parameters A_eq and b_eq from the linprog() call:

The solution is different from the previous case. You can see it on the chart:

In this example, the optimal solution is the purple vertex of the feasible (gray) region where the red and blue constraints intersect. Other vertices, like the yellow one, have higher values of the minimized objective −z, which means lower values of z.
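Dropping the equality constraint can be sketched as follows. The names mirror the ones used in the parameter descriptions above, and the bounds are omitted because `linprog()` defaults to zero and positive infinity. This is an illustration under those assumptions rather than the exact code behind the chart.

```python
from scipy.optimize import linprog

# Minimize -z = -x - 2y subject to the red, blue, and yellow inequalities only.
obj = [-1, -2]
lhs_ineq = [[2, 1], [-4, 5], [1, -2]]
rhs_ineq = [20, 10, 2]

# A_eq and b_eq are simply left out.
opt = linprog(c=obj, A_ub=lhs_ineq, b_ub=rhs_ineq)

print(opt.fun)    # minimized value of -z
print(opt.x)      # optimal x and y
print(opt.slack)  # right side minus left side for each inequality
```

The optimum moves to the intersection of the red and blue lines, x = 45/7 ≈ 6.43 and y = 50/7 ≈ 7.14, with z ≈ 20.71. The first two slacks are zero because both constraints are tight there, while the yellow constraint has slack 69/7 ≈ 9.86.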

You can use SciPy to solve the resource allocation problem stated in the earlier section:

As in the previous example, you need to extract the necessary vectors and matrix from the problem above, pass them as the arguments to linprog(), and get the results:
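A minimal sketch of that call is shown below. The coefficients are read off the four conditions of the resource allocation problem, the profit is negated because `linprog()` minimizes, and the default non-negative bounds are used.

```python
from scipy.optimize import linprog

# Negated profit per unit for products 1 to 4.
obj = [-20, -12, -40, -25]

lhs_ineq = [
    [1, 1, 1, 1],  # manpower: total units per day
    [3, 2, 1, 0],  # raw material A consumed per unit
    [0, 1, 2, 3],  # raw material B consumed per unit
]
rhs_ineq = [50, 100, 90]

opt = linprog(c=obj, A_ub=lhs_ineq, b_ub=rhs_ineq)

print(-opt.fun)   # maximal profit
print(opt.x)      # daily amount of each product
print(opt.slack)  # unused manpower, material A, material B
```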

The result tells you that the maximal profit is 1900 and corresponds to x₁ = 5 and x₃ = 45. It’s not profitable to produce the second and fourth products under the given conditions. You can draw several interesting conclusions here:

- The third product brings the largest profit per unit, so the factory will produce it the most.

- The first slack is 0, which means that the values of the left and right sides of the manpower (first) constraint are the same. The factory produces 50 units per day, and that’s its full capacity.

- The second slack is 40 because the factory consumes 60 units of raw material A (15 units for the first product plus 45 for the third) out of a potential 100 units.

- The third slack is 0, which means that the factory consumes all 90 units of the raw material B. This entire amount is consumed for the third product. That’s why the factory can’t produce the second or fourth product at all and can’t produce more than 45 units of the third product. It lacks the raw material B.

- opt.status is 0 and opt.success is True, indicating that the optimization problem was successfully solved with the optimal feasible solution.

SciPy’s linear programming capabilities are useful mainly for smaller problems. For larger and more complex problems, you might find other libraries more suitable for the following reasons:

- SciPy can’t run various external solvers.

- As of version 1.4.1, SciPy can’t work with integer decision variables.

- SciPy doesn’t provide classes or functions that facilitate model building. You have to define arrays and matrices, which might be a tedious and error-prone task for large problems.

- SciPy doesn’t allow you to define maximization problems directly. You must convert them to minimization problems.

- SciPy doesn’t allow you to define constraints using the greater-than-or-equal-to sign directly. You must use the less-than-or-equal-to sign instead.

Fortunately, the Python ecosystem offers several alternative solutions for linear programming that are very useful for larger problems. One of them is PuLP, which you’ll see in action in the next section.

PuLP has a more convenient linear programming API than SciPy. You don’t have to mathematically modify your problem or use vectors and matrices. Everything is cleaner and less prone to errors.

As usual, you start by importing what you need:

Now that you have PuLP imported, you can solve your problems.

You’ll now solve this system with PuLP:

The first step is to initialize an instance of LpProblem to represent your model:

You use the sense parameter to choose whether to perform minimization (LpMinimize or 1, which is the default) or maximization (LpMaximize or -1). This choice will affect the result of your problem.

Once you have the model, you can define the decision variables as instances of the LpVariable class:

You need to provide a lower bound with lowBound=0 because the default value is negative infinity. The parameter upBound defines the upper bound, but you can omit it here because it defaults to positive infinity.

The optional parameter cat defines the category of a decision variable. If you’re working with continuous variables, then you can use the default value "Continuous".

You can use the variables x and y to create other PuLP objects that represent linear expressions and constraints:

When you multiply a decision variable with a scalar or build a linear combination of multiple decision variables, you get an instance of pulp.LpAffineExpression that represents a linear expression.

Note: You can add or subtract variables or expressions, and you can multiply them with constants because PuLP classes implement some of the Python special methods that emulate numeric types like __add__(), __sub__(), and __mul__(). These methods are used to customize the behavior of operators like +, -, and *.

Similarly, you can combine linear expressions, variables, and scalars with the operators ==, <=, or >= to get instances of pulp.LpConstraint that represent the linear constraints of your model.

Note: It’s also possible to build constraints with the rich comparison methods .__eq__(), .__le__(), and .__ge__() that define the behavior of the operators ==, <=, and >=.

Having this in mind, the next step is to create the constraints and objective function as well as to assign them to your model. You don’t need to create lists or matrices. Just write Python expressions and use the += operator to append them to the model:

In the above code, you define tuples that hold the constraints and their names. LpProblem allows you to add constraints to a model by specifying them as tuples. The first element is a LpConstraint instance. The second element is a human-readable name for that constraint.

Setting the objective function is very similar:

Alternatively, you can use a shorter notation:

Now you have the objective function added and the model defined.

Note: You can append a constraint or objective to the model with the operator += because its class, LpProblem, implements the special method .__iadd__(), which is used to specify the behavior of +=.

For larger problems, it’s often more convenient to use lpSum() with a list or other sequence than to repeat the + operator. For example, you could add the objective function to the model with this statement:

It produces the same result as the previous statement.

You can now see the full definition of this model:

The string representation of the model contains all relevant data: the variables, constraints, objective, and their names.

Note: String representations are built by defining the special method .__repr__(). For more details about .__repr__(), check out Pythonic OOP String Conversion: __repr__ vs __str__ or When Should You Use .__repr__() vs .__str__() in Python?

Finally, you’re ready to solve the problem. You can do that by calling .solve() on your model object. If you want to use the default solver (CBC), then you don’t need to pass any arguments:

.solve() calls the underlying solver, modifies the model object, and returns the integer status of the solution, which will be 1 if the optimum is found. For the rest of the status codes, see LpStatus[].
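The PuLP steps described so far can be combined into one short script. This is a sketch that assumes PuLP and its bundled CBC solver are installed. The model and constraint names are illustrative, and `PULP_CBC_CMD(msg=False)` is passed only to silence the solver log. Calling `model.solve()` with no arguments uses the same default solver.

```python
from pulp import LpMaximize, LpProblem, LpStatus, LpVariable, PULP_CBC_CMD

# Create the model and the two non-negative decision variables.
model = LpProblem(name="small-problem", sense=LpMaximize)
x = LpVariable(name="x", lowBound=0)
y = LpVariable(name="y", lowBound=0)

# Add the constraints as (constraint, name) tuples.
model += (2 * x + y <= 20, "red_constraint")
model += (-4 * x + 5 * y <= 10, "blue_constraint")
model += (-x + 2 * y >= -2, "yellow_constraint")
model += (-x + 5 * y == 15, "green_constraint")

# Add the objective function z = x + 2y.
model += x + 2 * y

# Solve with CBC and inspect the results.
status = model.solve(PULP_CBC_CMD(msg=False))

print(f"status: {status}, {LpStatus[model.status]}")
print(f"objective: {model.objective.value()}")
for var in model.variables():
    print(f"{var.name}: {var.value()}")
for name, constraint in model.constraints.items():
    print(f"{name}: {constraint.value()}")
```

No sign flipping is needed here: the yellow constraint keeps its ≥ form, and the model maximizes z directly.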

You can get the optimization results as the attributes of model . The function value() and the corresponding method .value() return the actual values of the attributes:

model.objective holds the value of the objective function, model.constraints contains the values of the slack variables, and the objects x and y have the optimal values of the decision variables. model.variables() returns a list with the decision variables:

As you can see, this list contains the exact objects that are created with the constructor of LpVariable .

The results are approximately the same as the ones you got with SciPy.

Note: Be careful with the method .solve(): it changes the state of the objects x and y!

You can see which solver was used by calling .solver:

The output informs you that the solver is CBC. You didn’t specify a solver, so PuLP called the default one.

If you want to run a different solver, then you can specify it as an argument of .solve(). For example, if you want to use GLPK and already have it installed, then you can use solver=GLPK(msg=False) in the last line. Keep in mind that you’ll also need to import it:

Now that you have GLPK imported, you can use it inside .solve():

The msg parameter is used to display information from the solver. msg=False disables showing this information. If you want to include the information, then just omit msg or set msg=True.

Your model is defined and solved, so you can inspect the results the same way you did in the previous case:

You got practically the same result with GLPK as you did with SciPy and CBC.

Let’s peek and see which solver was used this time:

As you defined above with the highlighted statement model.solve(solver=GLPK(msg=False)) , the solver is GLPK.

You can also use PuLP to solve mixed-integer linear programming problems. To define an integer or binary variable, just pass cat="Integer" or cat="Binary" to LpVariable . Everything else remains the same:

In this example, you have one integer variable and get different results from before:

Now x is an integer, as specified in the model. (Technically it holds a float value with zero after the decimal point.) This fact changes the whole solution. Let’s show this on the graph:

As you can see, the optimal solution is the rightmost green point on the gray background. This is the feasible solution with the largest values of both x and y , giving it the maximal objective function value.

GLPK is capable of solving such problems as well.

Now you can use PuLP to solve the resource allocation problem from above:

The approach for defining and solving the problem is the same as in the previous example:

In this case, you use the dictionary x to store all decision variables. This approach is convenient because dictionaries can store the names or indices of decision variables as keys and the corresponding LpVariable objects as values. Lists or tuples of LpVariable instances can be useful as well.

The code above produces the following result:

As you can see, the solution is consistent with the one obtained using SciPy. The most profitable solution is to produce 5.0 units of the first product and 45.0 units of the third product per day.

Let’s make this problem more complicated and interesting. Say the factory can’t produce the first and third products in parallel due to a machinery issue. What’s the most profitable solution in this case?

Now you have another logical constraint: if x ₁ is positive, then x ₃ must be zero and vice versa. This is where binary decision variables are very useful. You’ll use two binary decision variables, y ₁ and y ₃, that’ll denote if the first or third products are generated at all:

The code is very similar to the previous example except for the highlighted lines. Here are the differences:

Line 5 defines the binary decision variables y[1] and y[3] held in the dictionary y .

Line 12 defines an arbitrarily large number M . The value 100 is large enough in this case because you can’t have more than 100 units per day.

Line 13 says that if y[1] is zero, then x[1] must be zero, else it can be any non-negative number.

Line 14 says that if y[3] is zero, then x[3] must be zero, else it can be any non-negative number.

Line 15 says that either y[1] or y[3] is zero (or both are), so either x[1] or x[3] must be zero as well.
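The linking logic in lines 13 to 15 can be checked by hand without a solver. The following is a minimal sketch in plain Python, not the tutorial's PuLP code. It assumes the constraints take the usual big-M form x[i] <= M * y[i]; the helper name `allowed_upper_bounds` is hypothetical, and M = 100 is the value given in the text.

```python
from itertools import product

M = 100  # "big M" from the text: a bound that no x[i] can exceed


def allowed_upper_bounds(y1, y3):
    """Upper bounds that x[i] <= M * y[i] imposes on x[1] and x[3] (assumed form)."""
    return M * y1, M * y3


# Enumerate every binary combination and keep those satisfying y[1] + y[3] <= 1.
feasible = {
    (y1, y3): allowed_upper_bounds(y1, y3)
    for y1, y3 in product((0, 1), repeat=2)
    if y1 + y3 <= 1
}

for (y1, y3), (ub1, ub3) in feasible.items():
    print(f"y1={y1}, y3={y3}: x1 <= {ub1}, x3 <= {ub3}")
```

In every combination that survives, at least one of the two upper bounds is zero, which is exactly the "not in parallel" rule.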

Here’s the solution:

It turns out that the optimal approach is to exclude the first product and to produce only the third one.

Linear programming and mixed-integer linear programming are very important topics. If you want to learn more about them—and there’s much more to learn than what you saw here—then you can find plenty of resources. Here are a few to get started with:

- Wikipedia Linear Programming Article

- Wikipedia Integer Programming Article

- MIT Introduction to Mathematical Programming Course

- Brilliant.org Linear Programming Article

- CalcWorkshop What Is Linear Programming?

- BYJU’S Linear Programming Article

Gurobi Optimization is a company that offers a very fast commercial solver with a Python API. It also provides valuable resources on linear programming and mixed-integer linear programming, including the following:

- Linear Programming (LP) – A Primer on the Basics

- Mixed-Integer Programming (MIP) – A Primer on the Basics

- Choosing a Math Programming Solver

If you’re in the mood to learn optimization theory, then there are plenty of math books out there. Here are a few popular choices:

- Linear Programming: Foundations and Extensions

- Convex Optimization

- Model Building in Mathematical Programming

- Engineering Optimization: Theory and Practice

This is just a part of what’s available. Linear programming and mixed-integer linear programming are popular and widely used techniques, so you can find countless resources to help deepen your understanding.

Just like there are many resources to help you learn linear programming and mixed-integer linear programming, there’s also a wide range of solvers that have Python wrappers available. Here’s a partial list:

- SCIP with PySCIPOpt

- Gurobi Optimizer

Some of these libraries, like Gurobi, include their own Python wrappers. Others use external wrappers. For example, you saw that you can access CBC and GLPK with PuLP.

You now know what linear programming is and how to use Python to solve linear programming problems. You also learned that Python linear programming libraries are just wrappers around native solvers. When the solver finishes its job, the wrapper returns the solution status, the decision variable values, the slack variables, the objective function, and so on.

In this tutorial, you learned how to:

- Define a model that represents your problem

- Create a Python program for optimization

- Run the optimization program to find the solution to the problem

- Retrieve the result of optimization

You used SciPy with its own solver as well as PuLP with CBC and GLPK, but you also learned that there are many other linear programming solvers and Python wrappers. You’re now ready to dive into the world of linear programming!

If you have any questions or comments, then please put them in the comments section below.


About Mirko Stojiljković

Mirko has a Ph.D. in Mechanical Engineering and works as a university professor. He is a Pythonista who applies hybrid optimization and machine learning methods to support decision making in the energy sector.


5.11 Linear Programming

Learning objectives.

After completing this section, you should be able to:

- Compose an objective function to be minimized or maximized.

- Compose inequalities representing a system application.

- Apply linear programming to solve application problems.

Imagine you hear about some natural disaster striking a far-away country; it could be an earthquake, a fire, a tsunami, a tornado, a hurricane, or any other type of natural disaster. The survivors of this disaster need help—they especially need food, water, and medical supplies. You work for a company that has these supplies, and your company has decided to help by flying the needed supplies into the disaster area. They want to maximize the number of people they can help. However, there are practical constraints that need to be taken into consideration; the size of the airplanes, how much weight each airplane can carry, and so on. How do you solve this dilemma? This is where linear programming comes into play. Linear programming is a mathematical technique to solve problems involving finding maximums or minimums where a linear function is limited by various constraints.

As a field, linear programming began in the late 1930s and early 1940s. It was used by many countries during World War II; countries used linear programming to solve problems such as maximizing troop effectiveness, minimizing their own casualties, and maximizing the damage they could inflict upon the enemy. Later, businesses began to realize they could use the concept of linear programming to maximize output, minimize expenses, and so on. In short, linear programming is a method to solve problems that involve finding a maximum or minimum where a linear function is constrained by various factors.

A Mathematician Invents a “Tsunami Cannon”

On December 26, 2004, a massive earthquake occurred in the Indian Ocean. This earthquake, which scientists estimate had a magnitude of 9.0 or 9.1 on the Richter Scale, set off a wave of tsunamis across the Indian Ocean. The waves of the tsunami averaged over 30 feet (10 meters) high, and caused massive damage and loss of life across the coastal regions bordering the Indian Ocean.

Usama Kadri works as an applied mathematician at Cardiff University in Wales. His areas of research include fluid dynamics and non-linear phenomena. Lately, he has been focusing his research on the early detection and easing of the effects of tsunamis. One of his theories involves deploying a series of devices along coastlines which would fire acoustic-gravity waves (AGWs) into an oncoming tsunami, which in theory would lessen the force of the tsunami. Of course, this is all in theory, but Kadri believes it will work. There are issues with creating such a device: for instance, it would take a tremendous amount of electricity to generate an AGW. But if the device would save lives, it may well be worth it.

Compose an Objective Function to Be Minimized or Maximized

An objective function is a linear function in two or more variables that describes the quantity that needs to be maximized or minimized.

Example 5.96

Composing an objective function for selling two products.

Miriam starts her own business, where she knits and sells scarves and sweaters out of high-quality wool. She can make a profit of $8 per scarf and $10 per sweater. Write an objective function that describes her profit.

Let x represent the number of scarves sold, and let y represent the number of sweaters sold. Let P represent profit. Since each scarf has a profit of $8 and each sweater has a profit of $10, the objective function is P = 8x + 10y.
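An objective function translates directly into code. As a small sketch (the function name `profit` is only an illustrative choice), the profit from Example 5.96 could be written in Python as:

```python
def profit(scarves, sweaters):
    """Objective function P = 8x + 10y, in dollars."""
    return 8 * scarves + 10 * sweaters


# Selling 3 scarves and 2 sweaters: 8*3 + 10*2
print(profit(3, 2))
```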

Your Turn 5.96

Example 5.97

Composing an objective function for production.

William’s factory produces two products, widgets and wadgets. It takes 24 minutes for his factory to make 1 widget, and 32 minutes for his factory to make 1 wadget. Write an objective function that describes the time it takes to make the products.

Let x equal the number of widgets made; let y equal the number of wadgets made; let T represent total time. The objective function is T = 24x + 32y.

Your Turn 5.97

Compose Inequalities Representing a System Application

For our two examples of profit and production, in an ideal world the profit a person makes and/or the number of products a company produces would have no restrictions. After all, who wouldn’t want to have an unrestricted profit? However, in reality this is not the case; there are usually several factors that can restrict how much profit a person can make or how many products a company can produce. These restrictions are called constraints .

Many different variables can be constraints. When making or selling a product, the time available, the cost of manufacturing and the amount of raw materials are all constraints. In the opening scenario with the tsunami, the maximum weight on an airplane and the volume of cargo it can carry would be constraints. Constraints are expressed as linear inequalities; the list of constraints defined by the problem forms a system of linear inequalities that, along with the objective function, represent a system application.

Example 5.98

Representing the constraints for selling two products.

Two friends start their own business, where they knit and sell scarves and sweaters out of high-quality wool. They can make a profit of $8 per scarf and $10 per sweater. To make a scarf, 3 bags of knitting wool are needed; to make a sweater, 4 bags of knitting wool are needed. The friends can only make 8 items per day, and can use not more than 27 bags of knitting wool per day. Write the inequalities that represent the constraints. Then summarize what has been described thus far by writing the objective function for profit and the two constraints.

Let x represent the number of scarves sold, and let y represent the number of sweaters sold. There are two constraints: the number of items the business can make in a day (a maximum of 8) and the number of bags of knitting wool they can use per day (a maximum of 27). The first constraint (total number of items in a day) is written as:

x + y ≤ 8

Since each scarf takes 3 bags of knitting wool and each sweater takes 4 bags of knitting wool, the second constraint, total bags of knitting wool per day, is written as:

3x + 4y ≤ 27

In summary, here are the equations that represent the new business:

x + y ≤ 8; This is the first constraint: The business can make at most 8 items per day.

3x + 4y ≤ 27; This is the second constraint: The business can use at most 27 bags of knitting wool per day.

P = 8x + 10y; This is the profit equation: The business makes $8 per scarf and $10 per sweater.
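To see how constraints limit the choices, here is a minimal Python sketch that tests a few daily plans against the two constraints of this example (at most 8 items, at most 27 bags of wool). The function name `is_feasible` and the sample plans are illustrative assumptions, as is the requirement that both quantities be non-negative.

```python
def is_feasible(scarves, sweaters):
    """Check a daily plan against both constraints of the knitting business."""
    within_item_limit = scarves + sweaters <= 8            # x + y <= 8
    within_wool_limit = 3 * scarves + 4 * sweaters <= 27   # 3x + 4y <= 27
    non_negative = scarves >= 0 and sweaters >= 0          # assumed: no negative output
    return within_item_limit and within_wool_limit and non_negative


# A few sample plans (chosen only for illustration).
for plan in [(5, 3), (4, 4), (2, 5), (0, 7)]:
    print(plan, "feasible" if is_feasible(*plan) else "not feasible")
```

A plan such as 4 scarves and 4 sweaters respects the item limit but needs 28 bags of wool, so it violates the second constraint.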

Your Turn 5.98

Example 5.99

Representing constraints for production.

A factory produces two products, widgets and wadgets. It takes 24 minutes for the factory to make 1 widget, and 32 minutes for the factory to make 1 wadget. Research indicates that long-term demand for products from the factory will result in average sales of 12 widgets per day and 10 wadgets per day. Because of limitations on storage at the factory, no more than 20 widgets or 17 wadgets can be made each day. Write the inequalities that represent the constraints. Then summarize what has been described thus far by writing the objective function for time and the two constraints.

Let x equal the number of widgets made; let y equal the number of wadgets made. Based on the long-term demand, we know the factory must produce a minimum of 12 widgets and 10 wadgets per day. We also know because of storage limitations, the factory cannot produce more than 20 widgets per day or 17 wadgets per day. Writing those as inequalities, we have:

x ≥ 12

y ≥ 10

x ≤ 20

y ≤ 17

The number of widgets made per day must be between 12 and 20, and the number of wadgets made per day must be between 10 and 17. Therefore, we have:

12 ≤ x ≤ 20

10 ≤ y ≤ 17

The system is:

T = 24x + 32y

12 ≤ x ≤ 20

10 ≤ y ≤ 17

T is the variable for time; it takes 24 minutes to make a widget and 32 minutes to make a wadget.

Your Turn 5.99

Apply Linear Programming to Solve Application Problems

There are four steps that need to be completed when solving a problem using linear programming. They are as follows:

Step 1: Compose an objective function to be minimized or maximized.

Step 2: Compose inequalities representing the constraints of the system.

Step 3: Graph the system of inequalities representing the constraints.

Step 4: Find the value of the objective function at each corner point of the graphed region.

The first two steps you have already learned. Let’s continue to use the same examples to illustrate Steps 3 and 4.

Example 5.100

Solving a linear programming problem for two products.

Three friends start their own business, where they knit and sell scarves and sweaters out of high-quality wool. They can make a profit of $8 per scarf and $10 per sweater. To make a scarf, 3 bags of knitting wool are needed; to make a sweater, 4 bags of knitting wool are needed. The friends can only make 8 items per day, and can use not more than 27 bags of knitting wool per day. Determine the number of scarves and sweaters they should make each day to maximize their profit.

Step 1: Compose an objective function to be minimized or maximized. From Example 5.98 , the objective function is P = 8x + 10y.

Step 2: Compose inequalities representing the constraints of the system. From Example 5.98 , the constraints are x + y ≤ 8 and 3x + 4y ≤ 27.

Step 3: Graph the system of inequalities representing the constraints. Using methods discussed in Graphing Linear Equations and Inequalities , the graphs of the constraints are shown below. Because the number of scarves (x) and the number of sweaters (y) both must be non-negative numbers (i.e., x ≥ 0 and y ≥ 0), we need to graph the system of inequalities in Quadrant I only. Figure 5.99 shows each constraint graphed on its own axes, while Figure 5.100 shows the graph of the system of inequalities (the two constraints graphed together). In Figure 5.100 , the large shaded region represents the area where the two constraints intersect. If you are unsure how to graph these regions, refer back to Graphing Linear Equations and Inequalities .

Step 4: Find the value of the objective function at each corner point of the graphed region. The “graphed region” is the area where both of the regions intersect; in Figure 5.101 , it is the large shaded area. The “corner points” refer to each vertex of the shaded area. Why the corner points? Because the maximum and minimum of every objective function will occur at one (or more) of the corner points. Figure 5.101 shows the location and coordinates of each corner point.

Three of the four points are readily found, as we used them to graph the regions; the fourth point, the intersection point of the two constraint lines, will have to be found using methods discussed in Systems of Linear Equations in Two Variables , either using substitution or elimination. As a reminder, set up the two equations of the constraint lines:

3x + 4y = 27

x + y = 8

For this example, substitution will be used. Solving the second equation for y gives y = 8 - x.

Substituting 8 - x into the first equation for y, we have

3x + 4(8 - x) = 27

3x + 32 - 4x = 27

-x = -5

x = 5

Now, substitute 5 for x in either equation to solve for y. Choosing the second equation, we have:

5 + y = 8

y = 3

Therefore, x = 5, and y = 3.

To find the value of the objective function, P = 8x + 10y, put the coordinates for each corner point into the equation and solve. The largest solution found when doing this will be the maximum value, and thus will be the answer to the question originally posed: determining the number of scarves and sweaters the new business should make each day to maximize their profit.

| Corner (x, y) | Objective Function P = 8x + 10y |
|---|---|
| (0, 0) | P = 8(0) + 10(0) = 0 |
| (8, 0) | P = 8(8) + 10(0) = 64 |
| (5, 3) | P = 8(5) + 10(3) = 70 |
| (0, 6.75) | P = 8(0) + 10(6.75) = 67.5 |

The maximum value for the profit P occurs when x = 5 and y = 3. This means that to maximize their profit, the new business should make 5 scarves and 3 sweaters every day.
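Steps 3 and 4 can also be carried out numerically instead of graphically. The sketch below is one possible Python version of the corner-point method for this example, using only the standard library. It intersects every pair of constraint boundary lines, keeps the intersections that satisfy all constraints, and evaluates P = 8x + 10y at each. The names `CONSTRAINTS`, `intersection`, `is_feasible`, and `corner_points` are illustrative choices, not part of the textbook.

```python
from fractions import Fraction
from itertools import combinations

# Each constraint is (a, b, c), meaning a*x + b*y <= c.
CONSTRAINTS = [
    (1, 1, 8),    # x + y <= 8    (items per day)
    (3, 4, 27),   # 3x + 4y <= 27 (bags of wool per day)
    (-1, 0, 0),   # x >= 0, written as -x <= 0
    (0, -1, 0),   # y >= 0, written as -y <= 0
]


def intersection(c1, c2):
    """Intersect two boundary lines a*x + b*y = c using Cramer's rule."""
    a1, b1, r1 = c1
    a2, b2, r2 = c2
    det = a1 * b2 - a2 * b1
    if det == 0:
        return None  # parallel lines never meet
    return Fraction(r1 * b2 - r2 * b1, det), Fraction(a1 * r2 - a2 * r1, det)


def is_feasible(point):
    """True if the point satisfies every constraint."""
    x, y = point
    return all(a * x + b * y <= c for a, b, c in CONSTRAINTS)


def corner_points():
    """Feasible intersections of boundary lines, i.e. vertices of the region."""
    candidates = (intersection(c1, c2) for c1, c2 in combinations(CONSTRAINTS, 2))
    return sorted({p for p in candidates if p is not None and is_feasible(p)})


def profit(point):
    """Objective function P = 8x + 10y."""
    x, y = point
    return 8 * x + 10 * y


corners = corner_points()
best = max(corners, key=profit)
for corner in corners:
    print(tuple(float(v) for v in corner), float(profit(corner)))
print("best plan:", tuple(float(v) for v in best))
```

Exact fractions are used so that the feasibility tests are not affected by floating-point rounding. This brute-force pairing is practical only for small two-variable problems like this one.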

Your Turn 5.100

People in Mathematics

Leonid Kantorovich

Leonid Vitalyevich Kantorovich was born January 19, 1912, in St. Petersburg, Russia. Two major events affected young Leonid’s life: when he was five, the Russian Revolution began, making life in St. Petersburg very difficult; so much so that Leonid’s family fled to Belarus for a year. When Leonid was 10, his father died, leaving his mother to raise five children on her own.

Despite the hardships, Leonid showed incredible mathematical ability at a young age. When he was only 14, he enrolled in Leningrad State University to study mathematics. Four years later, at age 18, he graduated with what would be equivalent to a Ph.D. in mathematics.

Although his primary interests were in pure mathematics, in 1938 he began working on problems in economics. Supposedly, he was approached by a local plywood manufacturer with the following question: how to come up with a work schedule for eight lathes to maximize output, given the five different kinds of plywood they had at the factory. By July 1939, Leonid had come up with a solution, not only to the lathe scheduling problem but to other problems as well, such as finding an optimal crop rotation schedule for farmers, minimizing waste material in manufacturing, and finding optimal routes for transporting goods. The technique he discovered to solve these problems eventually became known as linear programming. He continued to use this technique for solving many other problems involving optimization, which resulted in the book The Best Use of Economic Resources , which was published in 1959. His continued work in linear programming would ultimately result in him winning the Nobel Memorial Prize in Economic Sciences in 1975.

Check Your Understanding

- P = 20 t − 10 c

- P = 20 c + 10 t

- P = 20 t + 10 c

- P = 20 c − 10 t

- P = 150 w + 180 b

- P = 150 b + 180 w

- P = 180 w + 150 b

- P = 150 w − 180 b

- P = 2.50 f + 6.75 c

- P = 2.50 f + 6.75 t

- P = 2.50 t + 6.75 f

- None of these

- 20 t + 10 c ≤ 70

- 15 t + 4 c ≥ 70

- 15 t + 4 c ≤ 70

- 20 t + 10 c ≤ 12

- 20 t + 10 c ≥ 12

- w + b ≤ 945

- 10 w + 15 b ≥ 945

- 10 w + 15 b ≤ 945

- 150 w + 180 w ≤ 945

- 150 w + 180 b ≤ 1,635

- 25 w + 30 b ≤ 1,635

- w + b ≤ 1,635

- 30 w + 25 b ≤ 1,635

- ( 0 , 0 ) , ( 0 , 12 ) , ( 10 , 2 ) , ( 12 , 0 )

- ( 0 , 0 ) , ( 0 , 12 ) , ( 2 , 10 ) , ( 4 2 3 , 0 )

- ( 0 , 0 ) , ( 17.5 , 0 ) , ( 2 , 10 ) , ( 12 , 0 )

Section 5.11 Exercises

This book may not be used in the training of large language models or otherwise be ingested into large language models or generative AI offerings without OpenStax's permission.

Want to cite, share, or modify this book? This book uses the Creative Commons Attribution License and you must attribute OpenStax.

Access for free at https://openstax.org/books/contemporary-mathematics/pages/1-introduction

- Authors: Donna Kirk

- Publisher/website: OpenStax

- Book title: Contemporary Mathematics

- Publication date: Mar 22, 2023

- Location: Houston, Texas

- Book URL: https://openstax.org/books/contemporary-mathematics/pages/1-introduction

- Section URL: https://openstax.org/books/contemporary-mathematics/pages/5-11-linear-programming

© Jul 25, 2024 OpenStax. Textbook content produced by OpenStax is licensed under a Creative Commons Attribution License . The OpenStax name, OpenStax logo, OpenStax book covers, OpenStax CNX name, and OpenStax CNX logo are not subject to the Creative Commons license and may not be reproduced without the prior and express written consent of Rice University.

Solving mathematical problems using code: Project Euler

It is week 9 here at the Flatiron School and we are now approaching the end of our first module with JavaScript. We have been so busy trying to digest all the new material that we have barely had any time to catch a break. Every day seems so long and packed, yet there is never enough time. We have been moving at an accelerated pace, uncovering new topics when we are barely starting to grasp the material we are currently on. So to take a short breather from all this, I decided to take a step back to the very beginning stages of my coding journey and introduce you all to my friend Project Euler.

Project Euler

Project Euler is a series of challenging mathematical/computer programming problems that will require more than just mathematical insights to solve. Although mathematics will help you arrive at elegant and efficient methods, the use of a computer and programming skills will be required to solve most problems. The motivation for starting Project Euler, and its continuation, is to provide a platform for the inquiring mind to delve into unfamiliar areas and learn new concepts in a fun and recreational context.

I discovered Project Euler through a friend who recommended I try solving the problems on the site to enhance my coding skills to prep myself before attending a coding bootcamp. Project Euler is a site with many mathematical problems that are intended to be solved in an efficient way using code. The problems are labeled with the description/title, number of users that solved the problem, the difficulty level, and status of the problem (whether I solved it or not). Some of the problems also provide a PDF file that shows a mathematical approach to the problem using algorithms once the question has been solved.

Let’s take a look at one of the problems on the site:

“The prime factors of 13195 are 5, 7, 13 and 29. What is the largest prime factor of the number 600851475143 ?”

We are first given an example explaining what a prime factor is. From what we can tell, a prime factor of a number is a prime number that divides that number evenly. Then we are given the number 600851475143 and are told to find the largest prime factor of this number.

Now let’s take this step by step and try to solve this problem using our coding knowledge.

First we need a method or function that can check whether a number is a factor of 600851475143. We also need a method or function that can check whether that number is a prime number.
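The original post's code is not reproduced here, so what follows is only a Python sketch of what these two helpers might look like. The names `is_factor` and `is_prime` are assumptions chosen to match the description.

```python
def is_factor(candidate, n):
    """Return True if candidate divides n with no remainder."""
    return n % candidate == 0


def is_prime(candidate):
    """Return True if candidate is prime, by trial division up to its square root."""
    if candidate < 2:
        return False
    divisor = 2
    while divisor * divisor <= candidate:
        if candidate % divisor == 0:
            return False
        divisor += 1
    return True


# Sanity check against the example in the problem statement.
print([k for k in range(1, 13196) if is_factor(k, 13195) and is_prime(k)])
```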

Then we build out our largest_prime_factor_of(n) method to find the result.

In our new method, we are checking to see if every number between 1 and 600851475143 is a prime factor. If it is, then we select and store that number in an array and call .last on the array to return the greatest prime factor. Now I’m sure our logic works perfectly fine but when I ran this code, it went on for longer than 5 minutes so I terminated the process. Since 600851475143 is a very large number, it takes quite a bit of time for the system to calculate whether each number up to the given number is a prime factor or not.

So instead of having to check every single number up to 600851475143, we can skip all the numbers we don’t need to check by using prime factorization. We can do this by repeatedly dividing the number by its smallest prime factor until the only prime factor left is the number itself.
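As a rough Python sketch of this idea (not the author's original code; the function name is an illustrative choice), repeated division might look like this:

```python
def largest_prime_factor(n):
    """Return the largest prime factor of an integer n >= 2 by repeated division."""
    factor = 2
    while factor * factor <= n:
        if n % factor == 0:
            n //= factor   # strip the smallest remaining prime factor
        else:
            factor += 1    # no longer divides n, so try the next candidate
    # Nothing up to sqrt(n) divides n any more, so what remains is prime.
    return n


print(largest_prime_factor(13195))
```

Because every division shrinks the number, the loop only needs to count up to the square root of whatever remains. That is why this version finishes almost instantly even for 600851475143.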

Great! We can now see that our code returns the correct value in an acceptable amount of time. But we can further refactor this code to be more efficient than it is now by requiring and using the built-in Ruby Prime class.

- https://projecteuler.net

Written by James Rhee


Nothing in the world takes place without optimization, and there is no doubt that all aspects of the world that have a rational basis can be explained by optimization methods. Leonhard Euler, 1744 (translation found in “Optimization Stories”, edited by Martin Grötschel).

Introduction ¶

This introductory chapter is a run-up to Chapter 2 onwards. It is an overview of mathematical optimization through some simple examples, presenting also the main characteristics of the solver used in this book: SCIP ( http://scip.zib.de ).

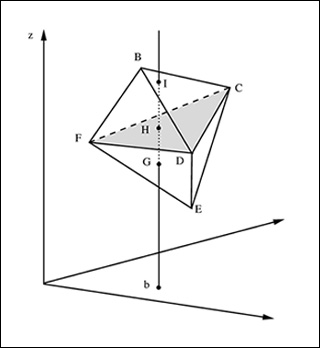

The rest of this chapter is organized as follows. Section Mathematical Optimization introduces the basics of mathematical optimization and illustrates the main ideas via a simple example. Section Linear Optimization presents a real-world production problem to discuss concepts and definitions of linear-optimization models, showing details of SCIP/Python code for solving a production problem. Section Integer Optimization introduces an integer optimization model by adding integer conditions to variables, taking as an example a simple puzzle sometimes used in junior high school examinations. A simple transportation problem, which is a special form of the linear optimization problem, along with its solution is discussed in Section Transportation Problem . Here we show how to model an optimization problem as a function, using SCIP/Python. Section Duality explains duality, an important theoretical background of linear optimization, by taking a transportation problem as an example. Section Multi-product Transportation Problem presents a multi-commodity transportation problem, which is a generalization of the transportation problem, and describes how to handle sparse data with SCIP/Python. Section Blending problem introduces mixture problems as an application example of linear optimization. Section Fraction optimization problem presents the fraction optimization problem, showing two ways to reduce it to a linear problem. Section Multi-Constrained Knapsack Problem illustrates a knapsack problem with details of its solution procedure, including an explanation of how to debug a formulation. Section The Modern Diet Problem considers how to cope with nutritional problems, showing an example of an optimization problem with no solution.

Mathematical Optimization ¶

Let us start by describing what mathematical optimization is: it is the science of finding the “best” solution based on a given objective function, i.e., finding a solution which is at least as good as any other possible solution. In order to do this, we must start by describing the actual problem in terms of mathematical formulas; then, we will need a methodology to obtain an optimal solution from these formulas. Usually, these formulas consist of constraints, describing conditions that must be satisfied, and an objective function.

- objective function (which we want to maximize or minimize);

- conditions of the problem: constraint 1, constraint 2, …

- a set of variables : the unknowns that need to be found as a solution to the problem;

- a set of constraints : equations or inequalities that represent requirements in the problem as relationships between the variables

- an objective function : an expression, in terms of the defined variables, which determines e.g. the total cost, or the profit of the targeted problem.

The problem is a minimization when smaller values of the objective are preferable, as with costs; it is a maximization when larger values are better, as with profits. The essence of the problem is the same, whether it is a minimization or a maximization (one can be converted into the other simply by putting a minus sign in the objective function).
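The minus-sign conversion can be illustrated on a tiny finite set of candidates. This is a plain Python sketch, not SCIP code; the objective and the candidate values are made up for illustration only.

```python
def objective(x):
    """A made-up objective with its largest value at x = 1."""
    return 5 - (x - 1) ** 2


candidates = range(-3, 4)  # illustrative candidate solutions: -3, ..., 3

# Maximizing the objective directly ...
best_by_max = max(candidates, key=objective)

# ... gives the same solution as minimizing the negated objective.
best_by_min = min(candidates, key=lambda x: -objective(x))

print(best_by_max, best_by_min, objective(best_by_max))
```

The optimal solution is the same in both formulations; only the sign of the optimal objective value changes.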

In this text, the problem is described by the following format.

Maximize or minimize Objective function Subject to: Constraint 1 Constraint 2 …

The optimization problem seeks a solution to either minimize or maximize the objective function, while satisfying all the constraints. Such a desirable solution is called optimum or optimal solution — the best possible from all candidate solutions measured by the value of the objective function. The variables in the model are typically defined to be non-negative real numbers.

There are many kinds of mathematical optimization problems; the most basic and simple is linear optimization [1] . In a linear optimization problem, the objective function and the constraints are all linear expressions (which are straight lines, when represented graphically). If our variables are \(x_1, x_2, \ldots, x_n\) , a linear expression has the form \(a_1 x_1 + a_2 x_2 + \ldots + a_n x_n\) , where \(a_1, \ldots, a_n\) are constants.
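In code, a linear expression is just a sum of coefficient-times-variable products. The following Python sketch evaluates one for given values; the function name and the numbers are illustrative assumptions and are not taken from the book.

```python
def linear_expression(coefficients, values):
    """Evaluate a_1*x_1 + a_2*x_2 + ... + a_n*x_n."""
    if len(coefficients) != len(values):
        raise ValueError("need exactly one coefficient per variable")
    return sum(a * x for a, x in zip(coefficients, values))


a = (2, 1, 3)   # constants a_1, a_2, a_3 (made up)
x = (1, 0, 4)   # values of x_1, x_2, x_3 (made up)
print(linear_expression(a, x))  # 2*1 + 1*0 + 3*4
```

An expression such as \(x_1 x_2\) or \(x_1^2\) cannot be written in this form, which is what makes it nonlinear.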

For example,

is a linear optimization problem.

One of the important features of linear optimization problems is that they are easy to solve. Common texts on mathematical optimization describe in lengthy detail how a linear optimization problem can be solved. Taking the extreme case, for most practitioners, how to solve a linear optimization problem is not important. For details on how methods for solving these problems have emerged, see Margin seminar 1 . Most of the software packages for mathematical optimization support linear optimization. Given a description of the problem, an optimum solution (i.e., a solution that is guaranteed to be the best answer) to most of the practical problems can be obtained in an extremely short time.

Unfortunately, not all the problems that we find in the real world can be described as a linear optimization problem. Simple linear expressions are not enough to accurately represent many complex conditions that occur in practice. In general, optimization problems that do not fit in the linear optimization paradigm are called nonlinear optimization problems.

In practice, nonlinear optimization problems are often difficult to solve in a reliable manner. Using the mathematical optimization solver covered in this document, SCIP, it is possible to efficiently handle some nonlinear functions; in particular, quadratic optimization (involving functions which are polynomials of degree up to two, such as \(x^2 + xy\)) is well supported, especially if the functions are convex.

A different complication arises when some of the variables must take on integer values; in this situation, even if the expressions in the model are linear, the general case belongs to a class of difficult problems (technically, the NP-hard class [2]). Such problems are called integer optimization problems; with ingenuity, it is possible to model a variety of practical situations under this paradigm. The case where some of the variables are restricted to integer values, and others are continuous, is called a mixed-integer optimization problem. Even for solvers that do not support nonlinear optimization, some techniques allow us to use mixed-integer optimization to approximate arbitrary nonlinear functions; these techniques (piecewise linear approximation) are described in detail in Chapter Piecewise linear approximation of nonlinear functions.

Linear Optimization

We begin with a simple linear optimization problem; the goal is to explain the terminology commonly used in optimization.
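The formulation this discussion refers to is not displayed here. Reconstructed from the complete program listed later in this section (the data come from that program; the layout is an assumption), it reads:

\[
\begin{array}{lrcl}
\text{maximize} & 15 x_1 + 18 x_2 + 30 x_3 & & \\
\text{subject to} & 2 x_1 + x_2 + x_3 & \leq & 60 \\
 & x_1 + 2 x_2 + x_3 & \leq & 60 \\
 & x_3 & \leq & 30 \\
 & x_1, x_2, x_3 & \geq & 0
\end{array}
\]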

Let us start by explaining the meaning of \(x_1, x_2, x_3\) : these are values that we do not know, and which can change continuously; hence, they are called variables .

The first expression defines the function to be maximized, which is called the objective function .

The second and subsequent expressions restrict the value of the variables \(x_1, x_2, x_3\), and are commonly referred to as constraints. Expressions ensuring that the variables are non-negative \((x_1, x_2, x_3 \geq 0)\) have the specific name of sign restrictions or non-negativity constraints. As these variables can take any non-negative real number, they are called real variables, or continuous variables.

In this problem, both the objective function and the constraint expressions consist of adding and subtracting the variables \(x_1, x_2, x_3\) multiplied by a constant. These are called linear expressions . The problem of maximizing (or minimizing) a linear objective function subject to linear constraints is called a linear optimization problem .

The set of values for variables \(x_1, x_2, x_3\) is called a solution , and if it satisfies all constraints it is called a feasible solution . Among feasible solutions, those that maximize (or minimize) the objective function are called optimal solutions . The maximum (or minimum) value of the objective function is called the optimum . In general, there are multiple solutions with an optimum objective value, but usually the aim is to find just one of them.
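To make the distinction between a solution and a feasible solution concrete, the following sketch checks a few candidate points by hand in plain Python. The coefficients are taken from the complete program listed later in this section; the helper names `objective` and `is_feasible` and the list of candidate points are illustrative assumptions.

```python
def objective(x1, x2, x3):
    """Value of the objective function 15*x1 + 18*x2 + 30*x3."""
    return 15 * x1 + 18 * x2 + 30 * x3


def is_feasible(x1, x2, x3):
    """True if the point satisfies every constraint of the model."""
    return (
        2 * x1 + x2 + x3 <= 60
        and x1 + 2 * x2 + x3 <= 60
        and x3 <= 30
        and min(x1, x2, x3) >= 0  # non-negativity constraints
    )


# Three candidate solutions: two feasible, one violating the constraints.
candidates = [(0, 0, 0), (10, 10, 30), (30, 30, 30)]
for point in candidates:
    print(point, "feasible:", is_feasible(*point), "objective:", objective(*point))
```

The third point has the largest objective value of the three, but since it violates the first two constraints it is not a feasible solution and cannot be optimal.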

The search for such a point can be carried out methodically; this is what a linear optimization solver does to find the optimum. Without delay, we are going to see how to solve this example using the SCIP solver. SCIP has been developed at the Zuse Institute Berlin (ZIB), an interdisciplinary research institute for applied mathematics and computing. The SCIP solver can be called from several programming languages; for this book we have chosen the very high-level language Python. For more information about SCIP and Python, see appendices SCIPintro and PYTHONintro, respectively.

The first thing to do is to read definitions contained in the SCIP module (a module is a different file containing programs written in Python). The SCIP module is called pyscipopt , and functionality defined there can be accessed with:

The instruction for using a module is import. In this statement we are importing the definitions of Model. We could also have used from pyscipopt import *, where the asterisk means to import all the definitions available in pyscipopt; we have imported just some of them, and we could have used other idioms, as we will see later. One of the features of Python is that, if the appropriate module is loaded, a program can do virtually anything [3].

The next operation is to create an optimization model; this can be done with the Model class, which we have imported from the pyscipopt module.

With this instruction, we create an object named model, belonging to the class Model (more precisely, model is a reference to that object). The model description is the (optional) string "Simple linear optimization", passed as an argument.

There are a number of actions that can be done with objects of type Model, allowing us to add variables and constraints to the model before solving it. We start by defining variables \(x_1, x_2, x_3\) (in the program, x1, x2, x3). We can generate a variable using the method addVar of the model object created above (a method is a function associated with objects of a class). For example, to generate a variable x1 we use the following statement:

With this statement, the method addVar of class Model is called, creating a variable x1 (to be precise, x1 holds a reference to the variable object). In Python, arguments are values passed to a function or method when calling it (each argument corresponds to a parameter that has been specified in the function definition). Arguments to this method are specified within parentheses after addVar. There are several ways to specify arguments in Python, but the clearest way is to write argument name = argument value as a keyword argument.

Here, vtype = "C" indicates that this is a continuous variable, and name = "x1" indicates that its name (used, e.g., for printing) is the string "x1" . The complete signature (i.e., the set of parameters) for the addVar method is the following:
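The signature itself is not displayed here. Reconstructed from the description in the next paragraph, it can be written as the following stub. This is only an illustration of the parameter list: it is not PySCIPOpt's source code, and the installed version of the library may accept additional parameters.

```python
# Illustrative stub only: it mirrors the parameters described in the text,
# not the actual implementation of Model.addVar.
def addVar(name="", vtype="C", lb=0.0, ub=None, obj=0.0, pricedVar=False):
    """Placeholder listing the parameters of addVar and their default values."""
```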

Arguments are, in order, the name, the type of variable, the lower bound, the upper bound, and the coefficient in the objective function. The last parameter, pricedVar, is used for column generation, a method that will be explained in Chapter Bin packing and cutting stock problems. In Python, when a method is called with optional keyword arguments omitted, their default values (given after = ) are applied. In the case of addVar, all the parameters are optional. This means that if we add a variable with model.addVar(), SCIP will create a continuous, non-negative and unbounded variable, whose name is an empty string, with coefficient 0 in the objective ( obj=0 ). The default value for the lower bound is specified with lb=0.0, and the upper bound ub is implicitly assigned the value infinity (in Python, the constant None usually means the absence of a value). When calling a function or method, arguments for parameters without a default value cannot be omitted.

Functions and methods may also be called by writing the arguments without their name, in a predetermined order, as in:

Other variables may be generated similarly. Note that the third constraint \(x_3 \leq 30\) is the upper bound constraint of variable \(x_3\), so we may write ub = 30 when declaring the variable.

Next, we will see how to enter a constraint. For specifying a constraint, we will need to create a linear expression, i.e., an expression in the form of \(c_1 x_1 + c_2 x_2 + \ldots + c_n x_n\), where each \(c_i\) is a constant and each \(x_i\) is a variable. We can specify a linear constraint through a relation between two linear expressions. In SCIP’s Python interface, the constraint \(2x_1 + x_2 + x_3 \leq 60\) is entered by using method addCons as follows:

The signature for addCons (ignoring some parameters which are not of interest now) is:
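The signature is not displayed here. Based on the description in the next paragraph, and on the method name addCons used in the complete program, it can be sketched as the stub below. The parameter name `relation` follows the text; the installed PySCIPOpt version may name the first parameter differently, so it is safest to pass it positionally. The catch-all `**other_parameters` is a hypothetical stand-in for the parameters the text chooses to ignore.

```python
# Illustrative stub only: it mirrors the parameters described in the text,
# not the actual implementation of Model.addCons.
def addCons(relation, name="", **other_parameters):
    """Placeholder: 'relation' is the constraint, 'name' its optional name."""
```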

SCIP supports more general cases, but for the time being let us concentrate on linear constraints. In this case, parameter relation is a linear constraint, including a left-hand side (lhs), a right-hand side (rhs), and the sense of the constraint. Both lhs and rhs may be constants, variables, or linear expressions; sense may be "<=" for less than or equal to, ">=" for greater than or equal to, or "==" for equality. The name of the constraint is optional, the default being an empty string. Linear constraints may be specified in several ways; for example, the previous constraint could be written equivalently as:

Before solving the model, we must specify the objective using the setObjective method, as in:

The signature for setObjective is:
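The signature is not displayed here. Based on the description in the next paragraph, it can be sketched as the stub below. The defaults for `sense` and `clear` are those stated in the text; the name `expression` for the first parameter is an assumption, so it is safest to pass that argument positionally.

```python
# Illustrative stub only: it mirrors the parameters described in the text,
# not the actual implementation of Model.setObjective.
def setObjective(expression, sense="minimize", clear="true"):
    """Placeholder listing the parameters of setObjective and their defaults."""
```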

The first argument of setObjective is a linear (or more general) expression, and the second argument specifies the direction of the objective function with strings "minimize" (the default) or "maximize" . (The third parameter, clear , if "true" indicates that coefficients for all other variables should be set to zero.) We may also set the direction of optimization using model.setMinimize() or model.setMaximize() .

At this point, we can solve the problem using the method optimize of the model object:

After executing this statement (provided the problem is feasible and bounded, so that the solution process can complete), we can output the optimal value of each variable. This can be done through method getVal of Model objects; e.g.:

The complete program for solving our model can be stated as follows:

    from pyscipopt import Model

    model = Model("Simple linear optimization")

    x1 = model.addVar(vtype="C", name="x1")
    x2 = model.addVar(vtype="C", name="x2")
    x3 = model.addVar(vtype="C", name="x3")

    model.addCons(2*x1 + x2 + x3 <= 60)
    model.addCons(x1 + 2*x2 + x3 <= 60)
    model.addCons(x3 <= 30)

    model.setObjective(15*x1 + 18*x2 + 30*x3, "maximize")

    model.optimize()

    if model.getStatus() == "optimal":
        print("Optimal value:", model.getObjVal())
        print("Solution:")
        print(" x1 = ", model.getVal(x1))
        print(" x2 = ", model.getVal(x2))
        print(" x3 = ", model.getVal(x3))
    else:
        print("Problem could not be solved to optimality")

If we execute this Python program, the output will be:

    [solver progress output omitted]
    Optimal value: 1230.0
    Solution:
     x1 =  10.0
     x2 =  10.0
     x3 =  30.0

The first lines, not shown, report the progress of the SCIP solver (this output can be suppressed), while the remaining lines are produced by the print statements at the end of the previous program.

Margin seminar 1

Linear programming

Linear programming was proposed by George Dantzig in 1947, based on the work of three Nobel laureate economists: Wassily Leontief, Leonid Kantorovich, and Tjalling Koopmans. At that time, the term used was “optimization in linear structure”, but it was renamed “linear programming” in 1948, and this has been the name commonly used ever since. The simplex method developed by Dantzig was for a long time almost the only algorithm for linear optimization problems, but it was pointed out that there are (mostly theoretical) cases where the method requires a very long time.

The question as to whether linear optimization problems can be solved efficiently in the theoretical sense (in other words, whether there is an algorithm which solves linear optimization problems in polynomial time) was answered when the ellipsoid method was proposed by Leonid Khachiyan (Khachian), of the former Soviet Union, in 1979. Nevertheless, the algorithm of Khachiyan was only of theoretical interest, and in practice the supremacy of the simplex method was unshaken. However, the interior point method proposed by Narendra Karmarkar in 1984 [4] has been proved to be theoretically efficient, and in practice it was found that its performance can be similar to or better than that of the simplex method. Currently available optimization solvers are usually equipped with both the simplex method (and its dual version, the dual simplex method) and with interior point methods, and are designed so that users can choose the most appropriate of them.

Integer Optimization

For many real-world optimization problems, it is necessary to obtain solutions composed of integers instead of real numbers. For instance, there are many puzzles like this: “In a farm having chickens and rabbits, there are 5 heads and 16 feet. How many chickens and rabbits are there?” The answer to this puzzle is meaningful only if the solution has integer values.
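For a puzzle this small, the integer answer can be found by simply trying every combination. The sketch below does this in plain Python; it is a brute-force enumeration rather than an optimization model, it assumes two feet per chicken and four per rabbit, and the names `HEADS`, `FEET` and `solutions` are illustrative.

```python
HEADS, FEET = 5, 16

# Try every non-negative integer pair and keep those matching both counts
# (chickens have two feet, rabbits have four).
solutions = [
    (chickens, rabbits)
    for chickens in range(HEADS + 1)
    for rabbits in range(HEADS + 1)
    if chickens + rabbits == HEADS and 2 * chickens + 4 * rabbits == FEET
]

print(solutions)  # [(2, 3)]: two chickens and three rabbits
```

Enumeration stops being practical as the number of variables and their ranges grow, which is where integer optimization solvers come in.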

Let us consider a concrete puzzle.

Let us formalize this as an optimization problem with mathematical formulas. This process of describing a situation algebraically is called the formulation of a problem in mathematical optimization.

Let \(x\), \(y\), and \(z\) denote the numbers of cranes, turtles, and octopuses, respectively. Then, the number of heads can be expressed as \(x + y + z\). Cranes have two legs each, turtles have four legs each, and each octopus has eight legs. Therefore, the number of legs can be expressed as \(2x + 4y + 8z\). So the set of \(x, y, z\) must satisfy the following “constraints”:
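The constraint equations are not displayed here. Writing \(H\) for the total number of heads and \(L\) for the total number of legs stated in the puzzle (both symbols are placeholders, since the puzzle's figures are not reproduced in this text), they take the form:

\[
\begin{array}{rcl}
x + y + z & = & H \\
2x + 4y + 8z & = & L
\end{array}
\]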

Since there are three variables and only two equations, there may be more than one solution. Therefore, we add a condition to minimize the sum \(y + z\) of the number of turtles and octopuses. This is the “objective function”. We obtain the complete model after adding the non-negativity constraints.
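As a sketch of the resulting model, with \(H\) and \(L\) again standing as placeholders for the head and leg counts stated in the puzzle, and with the variables assumed to be integers as the topic of this section suggests:

\[
\begin{array}{lrcl}
\text{minimize} & y + z & & \\
\text{subject to} & x + y + z & = & H \\
 & 2x + 4y + 8z & = & L \\
 & x, y, z & \geq & 0, \ \text{integer}
\end{array}
\]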