
Case study research: design and methods


Uploaded by station16.cebu on December 23, 2021


What Is a Case Study? | Definition, Examples & Methods

Published on May 8, 2019 by Shona McCombes. Revised on November 20, 2023.

A case study is a detailed study of a specific subject, such as a person, group, place, event, organization, or phenomenon. Case studies are commonly used in social, educational, clinical, and business research.

A case study research design usually involves qualitative methods, but quantitative methods are sometimes also used. Case studies are good for describing, comparing, evaluating and understanding different aspects of a research problem.

Table of contents

- When to do a case study
- Step 1: Select a case
- Step 2: Build a theoretical framework
- Step 3: Collect your data
- Step 4: Describe and analyze the case
- Other interesting articles

When to do a case study

A case study is an appropriate research design when you want to gain concrete, contextual, in-depth knowledge about a specific real-world subject. It allows you to explore the key characteristics, meanings, and implications of the case.

Case studies are often a good choice in a thesis or dissertation. They keep your project focused and manageable when you don’t have the time or resources to do large-scale research.

You might use just one complex case study where you explore a single subject in depth, or conduct multiple case studies to compare and illuminate different aspects of your research problem.

| Research question | Case study |
|---|---|
| What are the ecological effects of wolf reintroduction? | Case study of wolf reintroduction in Yellowstone National Park |
| How do populist politicians use narratives about history to gain support? | Case studies of Hungarian prime minister Viktor Orbán and US president Donald Trump |
| How can teachers implement active learning strategies in mixed-level classrooms? | Case study of a local school that promotes active learning |
| What are the main advantages and disadvantages of wind farms for rural communities? | Case studies of three rural wind farm development projects in different parts of the country |
| How are viral marketing strategies changing the relationship between companies and consumers? | Case study of the iPhone X marketing campaign |
| How do experiences of work in the gig economy differ by gender, race and age? | Case studies of Deliveroo and Uber drivers in London |


Step 1: Select a case

Once you have developed your problem statement and research questions, you should be ready to choose the specific case that you want to focus on. A good case study should have the potential to:

- Provide new or unexpected insights into the subject

- Challenge or complicate existing assumptions and theories

- Propose practical courses of action to resolve a problem

- Open up new directions for future research

Tip: If your research is more practical in nature and aims to investigate an issue while simultaneously solving it, consider conducting action research instead.

Unlike quantitative or experimental research, a strong case study does not require a random or representative sample. In fact, case studies often deliberately focus on unusual, neglected, or outlying cases that may shed new light on the research problem.

Example of an outlying case study: In the 1960s, the town of Roseto, Pennsylvania, was discovered to have extremely low rates of heart disease compared to the US average. It became an important case study for understanding previously neglected causes of heart disease.

However, you can also choose a more common or representative case to exemplify a particular category, experience or phenomenon.

Example of a representative case study: In the 1920s, two sociologists used Muncie, Indiana, as a case study of a typical American city that supposedly exemplified the changing culture of the US at the time.

Step 2: Build a theoretical framework

While case studies focus more on concrete details than general theories, they should usually have some connection with theory in the field. This way, the case study is not just an isolated description but is integrated into existing knowledge about the topic. It might aim to:

- Exemplify a theory by showing how it explains the case under investigation

- Expand on a theory by uncovering new concepts and ideas that need to be incorporated

- Challenge a theory by exploring an outlier case that doesn’t fit with established assumptions

To ensure that your analysis of the case has a solid academic grounding, you should conduct a literature review of sources related to the topic and develop a theoretical framework. This means identifying key concepts and theories to guide your analysis and interpretation.

Step 3: Collect your data

There are many different research methods you can use to collect data on your subject. Case studies tend to focus on qualitative data using methods such as interviews, observations, and analysis of primary and secondary sources (e.g., newspaper articles, photographs, official records). Sometimes a case study will also collect quantitative data.

Example of a mixed methods case study: For a case study of a wind farm development in a rural area, you could collect quantitative data on employment rates and business revenue, collect qualitative data on local people’s perceptions and experiences, and analyze local and national media coverage of the development.

The aim is to gain as thorough an understanding as possible of the case and its context.

Step 4: Describe and analyze the case

In writing up the case study, you need to bring together all the relevant aspects to give as complete a picture as possible of the subject.

How you report your findings depends on the type of research you are doing. Some case studies are structured like a standard scientific paper or thesis, with separate sections or chapters for the methods, results and discussion.

Others are written in a more narrative style, aiming to explore the case from various angles and analyze its meanings and implications (for example, by using textual analysis or discourse analysis).

In all cases, though, make sure to give contextual details about the case, connect it back to the literature and theory, and discuss how it fits into wider patterns or debates.

Other interesting articles

If you want to know more about statistics, methodology, or research bias, make sure to check out some of our other articles with explanations and examples.

- Normal distribution

- Degrees of freedom

- Null hypothesis

- Discourse analysis

- Control groups

- Mixed methods research

- Non-probability sampling

- Quantitative research

- Ecological validity

Research bias

- Rosenthal effect

- Implicit bias

- Cognitive bias

- Selection bias

- Negativity bias

- Status quo bias

Cite this Scribbr article

If you want to cite this source, you can copy and paste the citation or click the “Cite this Scribbr article” button to automatically add the citation to our free Citation Generator.

McCombes, S. (2023, November 20). What Is a Case Study? | Definition, Examples & Methods. Scribbr. Retrieved August 26, 2024, from https://www.scribbr.com/methodology/case-study/


Other students also liked

- Primary vs. Secondary Sources | Difference & Examples

- What Is a Theoretical Framework? | Guide to Organizing

- What Is Action Research? | Definition & Examples


A case study of the assistive technology network in Sierra Leone before and after a targeted systems-level investment


Many people with disabilities in low-income settings, such as Sierra Leone, do not have access to the assistive technology (AT) they need, yet research to measure and address this issue remains limited. This paper presents a case study of the Country Investment project in Sierra Leone funded by Assistive Technology 2030 (AT2030). The research explored the nature and strength of the AT stakeholder network in Sierra Leone over the course of one year, presenting a snapshot of the network before and after a targeted systems-level investment.

Mixed-method surveys were distributed via the Qualtrics software twice, in December 2021 and September 2022, to n=20 and n=16 participants, respectively. Qualitative data were analyzed thematically, while quantitative data were analyzed with the NodeXL software and MS Excel to generate descriptive statistics, visualizations, and specific metrics related to the indegree, betweenness, and closeness centrality of organizations grouped by type.

Findings suggest the one-year intervention did stimulate change within the AT network in Sierra Leone, increasing the number of connections within the network and strengthening existing relationships. Findings are also consistent with existing data suggesting that cost is a key barrier to AT access, both for organizations providing AT and for people with disabilities seeking to obtain it.

While this paper is the first to demonstrate that a targeted investment in AT systems and policies at the national level can affect the nature and strength of the AT network, it measures outcomes only one year after the investment. Further longitudinal impact evaluation would be desirable. Nonetheless, the results support the potential of systemic investments that leverage inter-organizational relationships and prioritize the financial accessibility of AT as one means of contributing towards increased access to AT for all, particularly in low-income settings.

Assistive technology (AT) is an umbrella term that broadly encompasses assistive products (AP) and the related services that improve function and enhance the user’s participation in all areas of life. 1 Assistive products are “any external products (including devices, equipment, instruments and software) […] with the primary purpose to maintain or improve an individual’s functioning and independence and/or well-being, or to prevent impairments and secondary health conditions”. 2

Recently, awareness of the urgent need to improve access to assistive technology has expanded, as 2022 global statistics indicate that one in three people, or 2.5 billion people, require at least one assistive product. 1 Demand for AT is projected to rise to 3.5 billion people by 2050, yet 90% of those who need AT lack access to the products and services they require. 1, 3 A systemic approach that adequately measures outcomes and impact is urgently required to stimulate evidence-based policies and systems that support universal access to AT. 1, 4, 5 However, a systemic approach first requires a baseline understanding of the existing system, including its sociopolitical context and the key stakeholders working within that context.

Assistive technology is necessary for people with disabilities to carry out activities of daily living, such as personal care or employment, and to engage socially. 6 Moreover, people with disabilities also require AT to exercise their basic human rights, as outlined in the United Nations Convention on the Rights of Persons with Disabilities (UNCRPD). 7 Unfortunately, many people do not have access to the AT they require, an inequity that is compounded in low-income settings. 8 Despite this growing disparity and a well-documented association between poverty and disability, 9 research gaps remain regarding AT in low-income settings in the global South. 10

In Sierra Leone, the national prevalence of disability is estimated at 1.3%, according to the most recent population and housing census data. 11, 12 This is unusually low compared with the 16% global prevalence (World Health Organization, 2022). National stakeholders within the AT network argue that this statistic does not adequately represent the true scope of disability in Sierra Leone. 10 Their stance is supported by survey data from the Rapid Assistive Technology Assessment (rATA) of a subset of the population in Freetown, which indicated a dramatically different picture: a 24.9% prevalence of self-reported disability based on the Washington Group Questions (20.6% reported “some difficulty”, while 4.3% reported “a lot of difficulty” or above), predominantly mobility- and vision-related disabilities. 13 The rATA also found that 62.5% of older people surveyed reported having a disability, and that the prevalence of disability among females was nearly 2% higher than among males. 13

Despite the implementation of the 2011 Sierra Leone Disability Act, access to AT in Sierra Leone remains poor. 13 The rATA suggests that only 14.9% of people with disabilities in Freetown have the assistive products they require, an alarming figure that also excludes people with disabilities in rural Sierra Leone, who were not surveyed and where access to such services is likely lower. 13 Meanwhile, it is estimated that over half of the population of Sierra Leone lives in poverty, with 13% in extreme poverty. 14 As affordability ranks as the top barrier to AT access, poverty further compounds the challenges that people with disabilities in this population face in accessing necessary AT. 13 In low-resource settings, it is therefore imperative that the resources allocated to providing assistive products are used optimally, and that different stakeholders work together to co-construct a systemic approach that can identify and prioritise those most in need.

This paper presents a dataset collected alongside an Assistive Technology 2030 (AT2030)-funded Country Investment project in Sierra Leone, conducted in collaboration with the Clinton Health Access Initiative (CHAI). The study aimed to explore the nature and strength of the assistive technology stakeholder network in Sierra Leone over the course of one year through a mixed methods survey. We provide a systemic snapshot of the AT network in Sierra Leone, highlighting which assistive products are available, who provides and receives them, and how. We also present a relational analysis of the existing AT network, covering the organizations working in areas of AT and their degree of connectivity and collaboration with one another. We hope these data can strengthen the provision of AT in Sierra Leone by identifying assistive product availability, procurement, and provision, as well as the nature of the relationships within the AT network (its relationality). We also sought to provide an overview of any changes to the network over the course of the one-year AT2030 investment.

This study used a mixed methods survey approach, administered with Qualtrics online survey software. Surveys were collaboratively developed and distributed at two time points, December 2021 and September 2022 (hereafter baseline = T1 and follow-up = T2).

Intervention

This paper presents the Sierra Leone country project, part of a larger targeted investment in assistive technology systems development in four African countries by AT2030, a project led by the Global Disability Innovation Hub and funded by UK Aid. The four in-country projects were administered by the Clinton Health Access Initiative (CHAI) in partnership with local government ministries and agencies. As part of this investment, CHAI and its partners convened a Technical Working Group that brought together key stakeholders in the assistive technology field. Over the course of one year, the Technical Working Group's overarching goal was to develop and strengthen key assistive technology related policies in each of the four countries. The data in this study on the AT network in Sierra Leone were collected at the outset and after completion of the AT2030 investment by researchers who were not part of the investment process, allowing for third-party evaluation. To maintain objectivity, neither CHAI nor the funder was responsible for the design, data collection, analysis, or reporting of results, although this paper has benefited from a programmatic perspective provided by CHAI.

Participants

Participants included members of relevant ministries involved in assistive technology leadership and/or delivery, and staff representing relevant non-profit organizations (both international and local), service providers, and organizations of persons with disabilities. Participants were asked to respond on behalf of their organization. All prospective participants were identified by the researchers and local project partners, including those coordinating the investment described above, and added to a distribution list in Qualtrics that contained only pertinent identifying information such as name, organization, and email. Over the course of the study, n=20 (T1) and n=16 (T2) participants consented to and completed surveys. Although the small sample size may restrict the generalizability of this study, it reflects the size of the assistive technology network in Sierra Leone, which is what we aimed to explore.

Data collection

The survey was emailed to the distribution list at two time points: December 2021 (T1) and September 2022 (T2). After each time point, Qualtrics sent two reminder emails at two-week intervals to participants who had not yet completed the survey, to encourage participant retention. The T1 and T2 surveys were identical; however, the T2 survey used display logic (conditional skipping) so that retained respondents did not complete redundant questions such as demographic information. Participants completing the survey for the first time at T2 received it in its entirety, without conditional skipping.

Survey content

Survey questions aimed to capture what AT is available, how it is being provided, and who is receiving it. Questions also covered demographic information and included qualitative prompts to identify participants’ roles within the AT network, the critical challenges they experienced in those roles, and the nature and strength of relationships between stakeholders. Additional data were collected on participatory engagement in policy development, knowledge of assistive technology, and capacity for leadership; these will be published separately.

Following the methodology reported by Smith and colleagues, 15 respondents were given the WHO Priority Assistive Products List (APL) and asked to select the products and associated services their organization provides. The survey also asked respondents to select, from a list of organizations, those they were aware of as working in AT in Sierra Leone, and then to rate their working relationship with each on a 5-point Likert scale (1 = no relationship, 5 = collaboration). Although two reminder emails were sent at both T1 and T2 to maximize response rates and retain participants, some participants dropped out between T1 and T2.

Data analysis

Data were reviewed across the two time points, and descriptive statistics (counts and means) were calculated for all variables using MS Excel. Qualitative data were analyzed using content analysis of the text responses to each open-ended survey question, with particular emphasis on themes that represented commonalities, or a lack of representation, across all stakeholders. Network data were analyzed using the NodeXL software and MS Excel to generate visualizations and specific metrics related to the indegree, betweenness, and closeness centrality of organizations grouped by organization type. Indegree is the total number of incoming connections per organization, while weighted indegree is the sum of the weights (strengths) of those connections. Closeness centrality represents the position of the organization relative to the centre of the network (lower scores indicate greater centrality). To account for different response rates at baseline and follow-up, indegree was calculated as the proportion of incoming connections out of the total number of respondents (n) at that time point. Weighted indegree was calculated as the sum of the weights of incoming connections divided by the total possible weighting for the respondents at that time point (i.e., n*5). Statistical comparisons of overall network metrics between T1 and T2 were made using a paired t-test in SPSS v.28. While means are also reported by organization type as a subsample of the overall data, no statistical tests were carried out on these subsamples because of their small size.
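The normalization described above can be illustrated with a minimal Python sketch. It is not the NodeXL or Excel procedure used in the study; it assumes the survey ratings are stored as a mapping from (rating organization, rated organization) pairs to the 1-5 Likert score, and that a rating of 1 ("no relationship") does not count as a connection. The function name `normalized_indegree` and the organization labels are hypothetical.

```python
from collections import defaultdict

MAX_RATING = 5       # top of the 5-point scale (5 = collaboration)
NO_RELATIONSHIP = 1  # assumption: a rating of 1 is not counted as a connection


def normalized_indegree(ratings, n_respondents):
    """Return {org: (indegree, weighted_indegree)}, both as proportions.

    ratings: dict mapping (rater_org, rated_org) -> Likert rating from 1 to 5
    n_respondents: number of respondents at this time point
    """
    if n_respondents <= 0:
        raise ValueError("n_respondents must be positive")
    counts = defaultdict(int)   # incoming connections per organization
    weights = defaultdict(int)  # summed ratings of those connections
    rated_orgs = set()
    for (rater, rated), rating in ratings.items():
        rated_orgs.add(rated)
        if rater == rated or rating <= NO_RELATIONSHIP:
            continue  # skip self-ratings and "no relationship"
        counts[rated] += 1
        weights[rated] += rating
    max_weight = n_respondents * MAX_RATING  # total possible weighting, n*5
    return {
        org: (counts[org] / n_respondents, weights[org] / max_weight)
        for org in rated_orgs
    }


# Hypothetical ratings from three respondents (A, B, C)
ratings = {("A", "B"): 3, ("C", "B"): 5, ("A", "C"): 1, ("B", "C"): 4}
for org, (indeg, w_indeg) in sorted(normalized_indegree(ratings, 3).items()):
    print(org, round(indeg, 3), round(w_indeg, 3))
```

With these made-up ratings, organization B receives two connections (ratings 3 and 5), giving an indegree of 2/3 and a weighted indegree of 8/15; C's rating of 1 from A is ignored under the stated assumption. Dividing by n and n*5 keeps scores comparable between time points with different numbers of respondents.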

The study received ethical approval from Maynooth University and the Sierra Leone Ethics and Scientific Review Committee. Each survey contained a mandatory informed consent section which required completion prior to respondents accessing the survey questions. Respondents were not required to answer any specific questions and were not coerced to participate. All respondents received a unique identification code to preserve anonymity, and any identifying information was removed prior to data analysis.

A total of 27 participants from 24 organizations took part in the surveys across the baseline and follow-up time points (T1 n=20; T2 n=16). Nine individuals and 11 organizations were retained across both T1 and T2. The largest group of participants represented international non-governmental organizations (n=9), followed by organizations of persons with disabilities (n=8), government ministries (n=4), service delivery organizations (n=4), and academic institutions (n=2).

Respondents were also asked to identify all areas of AT with which their organizations were aligned. As a share of all selections, advocacy ranked highest (24.5%), followed by direct service provision (14.9%), human resources and capacity building (14.9%), policy or systems development (13.8%), product selection and/or procurement (13.8%), data and information systems (11.7%), and financing (6.4%).

Assistive Products in Sierra Leone

Participants were asked to select from the APL the products and/or product-related services they provide. Manual wheelchairs, crutches, canes, lower limb prosthetics, and orthopaedic footwear were the most frequently selected at both time points. Table 1 summarises the types of assistive products and services provided in Sierra Leone, and the number of organisations providing each product and/or service, across all 50 APL products.

| Number of organizations providing product or service | Assistive products and services |
|---|---|
| None | Alarm signallers, audio players, closed captioning displays, fall detectors, global positioning locators, hearing loops/FM systems, magnifiers (digital hand-held and optical), personal emergency alarm, pill organizers, watches |
| 1 | Braille displays/note takers, communication software, gesture to voice technology, incontinence products, keyboard and mouse emulation software, pressure relief mattresses, screen readers, simplified mobile phones, tablets*, upright supportive chair and table for children*, rubber tips*, pencil grips*, adapted cups*, sponges*, weighted spoons*, weighted vests*, rollators**, time management products**, travel aids** |
| 2-3 | Communication boards, deafblind communicators, hearing aids, orthoses (lower limb, spinal and upper limb), personal digital assistant, pressure relief cushions, prostheses (lower limb), recorders, spectacles, therapeutic footwear, video communication devices, walking frames, wheelchairs (power) |
| 4-5 | Braille writing equipment, canes/sticks, clubfoot braces, handrails/grab bars, standing frames, tricycles, white canes |
| 6-9 | Chairs for shower/bath/toilet, ramps |
| 10 or more | Crutches/axillary, wheelchairs (manual) |

\*Other assistive product offered but not on the Assistive Product List.

\*\*Assistive product not provided; only services related to the prescription, servicing and maintenance, and customization of that assistive product.

Respondents indicated that their organizations most commonly procured the products they provide through purchase (38.7%), followed by donation (29%), building the products themselves (22.6%), and other means (9.7%), described as recycling used products.

Providers of Assistive Products in Sierra Leone

Participants were asked to indicate whether their organization provided assistive products and/or related services. The findings highlighted 38.3% of stakeholders directly provided AT and 40.4% directly provided AT related services to beneficiaries, while only 21.3% indicated they do not provide AT or AT related services at all.

Respondents who indicated providing products and/or services reported the following services: provision of locally made assistive products, repair and maintenance of assistive products, education and training of users on the use of assistive products, referral of people with disabilities to service providers, prosthetic and orthotic services, accessibility assessments, and rehabilitation service provision. Participants who do not directly provide AT or AT-related services indicated that their work falls within AT advocacy, fundraising, procurement, policy, and research.

When asked about the challenges they experienced in procuring and distributing these products to beneficiaries, respondents' qualitative answers identified difficulty sourcing materials, difficulty obtaining products due to poor infrastructure, poor quality standards and/or limited customizability of products, and low technical and managerial support as common barriers. The most commonly cited challenge was high product and material costs, combined with inadequate funds on the part of both organizations and beneficiaries.

Beneficiaries of Assistive Products in Sierra Leone

When asked how many clients they served each month, respondents reported figures ranging from as few as 10 to upwards of 1,000, while one respondent noted there was no fixed number because they serve at the national level. Respondents reported that their beneficiaries were most commonly people with mobility-related disabilities or functional limitations (21.4%), closely followed by people with vision disabilities (17.9%), communication disabilities (15.4%), and hearing disabilities (13.1%).

Participants reported that children and adolescents were the largest groups served, with equal representation of those aged 5-12 (23.7%) and 13-18 (23.7%). Adults aged 20-50 years (21%) closely followed, while children under 4 (15.8%) and adults over 50 years of age (15.8%) were the least represented beneficiaries of assistive products and services in Sierra Leone.

Respondents whose organizations provide assistive products indicated that their beneficiaries most commonly received APs free of cost (63.2%), followed by client payment (26.3%) and a fixed cost structure (10.5%).

Network Analysis

Respondents were asked to indicate which organizations in the AT network they were aware of, and then to rate the strength of their relationship with each of those organizations. The relationality among stakeholders in the assistive technology network was then analyzed across the two time points, and organizational relationships were visualized using the NodeXL software, as presented in Figure 1 and Figure 2. The colored nodes in the figures depict the various organization types, while the lines between nodes represent their relationships, with thicker lines indicating stronger relationships. Red nodes represent government ministries and agencies, green nodes service delivery organizations, blue nodes organizations of persons with disabilities, black nodes NGOs, and yellow nodes academic institutions.

Overall, the network appears relatively centralized, with a high degree of connection between organizations. Ministries and government agencies appear towards the centre of the network, indicating a relatively greater role in connecting organizations to one another; notably, however, they are not the most central organizations in the network.

Table 2 reports the overall number and strength of interconnections among organizations within the assistive technology network in Sierra Leone. Indegree is the number of identified inward connections, that is, the number of other organizations that identified a connection with that organization. Indegree data are presented as mean values per organization type to preserve anonymity. Overall indegree and weighted indegree increased significantly from baseline to follow-up, while the relative centrality of organizations did not change, at least over the one-year period of this study.

| Organization type | Indegree mean (SD), baseline | Indegree mean (SD), follow-up | Weighted indegree mean (SD), baseline | Weighted indegree mean (SD), follow-up | Closeness centrality mean (SD), baseline | Closeness centrality mean (SD), follow-up |
|---|---|---|---|---|---|---|
| Ministry or Government Agency | 0.46 (0.11) | 0.50 (0.16) | 9.07 (3.49) | 10.36 (4.34) | 0.53 (0.01) | 0.64 (0.14) |
| Organization of Persons with Disabilities | 0.23 (0.07) | 0.38 (0.11) | 3.73 (0.91) | 6.73 (2.19) | 0.54 (0.09) | 0.58 (0.13) |
| Service Delivery Organization | 0.34 (0.06) | 0.48 (0.09) | 6.00 (1.52) | 7.84 (2.76) | 0.57 (0.13) | 0.53 (0.01) |
| Local NGO | 0.24 (0.07) | 0.40 (0.17) | 4.48 (2.05) | 6.96 (3.87) | 0.52 (0.01) | 0.52 (0.02) |
| International NGO | 0.27 (0.07) | 0.40 (0.16) | 4.90 (1.64) | 7.29 (3.77) | 0.55 (0.07) | 0.56 (0.07) |
| Overall | 0.29 (0.11) | 0.42* (0.14) | 5.12 (2.34) | 7.51* (3.31) | 0.54 (0.08) | 0.56 (0.10) |

SD, standard deviation; NGO, non-governmental organization. *Differs significantly from baseline at p < 0.01 (two-tailed).
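The network measures in Table 2 can be illustrated with a minimal Python sketch. It assumes each survey response is a (rater, rated organization, label) triple and that the 5-point relationship scale is coded 0 (no awareness, so no tie) to 4 (collaboration); that coding, the function names and the unweighted closeness formula are illustrative assumptions, not the study's or NodeXL's exact computation. Because the reported indegree means are all below 1, the sketch also returns indegree divided by the number of other organizations, one common normalization that may or may not match the one used here.

```python
from collections import deque
from statistics import mean, stdev

# Hypothetical numeric coding of the study's 5-point relationship scale.
STRENGTH = {"no awareness": 0, "awareness": 1, "communication": 2,
            "cooperation": 3, "collaboration": 4}


def network_metrics(orgs, ratings):
    """Return {org: (indegree, normalized indegree, weighted indegree, closeness)}.

    ratings is a list of (rater, rated, label) survey responses, one per pair.
    """
    n = len(orgs)
    indeg = {o: 0 for o in orgs}
    windeg = {o: 0 for o in orgs}
    neighbours = {o: set() for o in orgs}  # undirected ties, used for closeness
    for rater, rated, label in ratings:
        weight = STRENGTH[label]
        if weight == 0 or rater == rated:
            continue  # "no awareness" (or a self-rating) creates no tie
        indeg[rated] += 1
        windeg[rated] += weight
        neighbours[rater].add(rated)
        neighbours[rated].add(rater)

    metrics = {}
    for o in orgs:
        # Breadth-first search gives unweighted shortest-path distances from o.
        dist = {o: 0}
        queue = deque([o])
        while queue:
            u = queue.popleft()
            for v in neighbours[u]:
                if v not in dist:
                    dist[v] = dist[u] + 1
                    queue.append(v)
        total = sum(dist.values())
        reached = len(dist) - 1
        # Closeness: reachable organizations divided by the sum of distances to them.
        closeness = reached / total if total else 0.0
        metrics[o] = (indeg[o], indeg[o] / (n - 1), windeg[o], closeness)
    return metrics


def mean_sd_by_type(metrics, org_type, index):
    """Mean and SD of one metric per organization type, in the style of Table 2."""
    groups = {}
    for org, values in metrics.items():
        groups.setdefault(org_type[org], []).append(values[index])
    return {t: (mean(v), stdev(v) if len(v) > 1 else 0.0) for t, v in groups.items()}


orgs = ["A", "B", "C", "D"]
ratings = [("A", "B", "collaboration"), ("C", "B", "awareness"),
           ("B", "D", "communication"), ("D", "A", "no awareness")]
metrics = network_metrics(orgs, ratings)
print(metrics["B"])  # (2, 0.6666666666666666, 5, 1.0)
```

Averaging per organization type rather than reporting each organization mirrors the anonymity-preserving choice described above.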

Overall, there was a statistically significant increase in indegree scores between the two time points, suggesting a higher level of connection among AT organizations in Sierra Leone following the one-year investment; organizations appear to have built more relationships and expanded their reach within the AT network. As relationship strength was measured on a 5-point scale (no awareness, awareness, communication, cooperation, collaboration), the significant increase in weighted indegree can be interpreted as greater inter-organizational working between members of the network (Table 2).

These findings suggest that the one-year intervention did stimulate change within the AT network in Sierra Leone, increasing the number of connections within the network and strengthening existing relationships.

The most common assistive products available in Sierra Leone were reported to be manual wheelchairs, crutches, canes, lower limb prostheses and orthopaedic footwear. This aligns with our participants ranking mobility-related disabilities or functional limitations as the most common reason for beneficiary referral, as well as with the rATA data. 13 The global report on AT notes that “the type, complexity, magnitude and duration of a humanitarian crisis impacts the need for and supply of assistive technology”. 1 Given the sociopolitical context of Sierra Leone, including its history of civil war and the population who require these products as a result of political violence (for example, people with lower limb amputations), it is not surprising that mobility-related products are so widely available. Moreover, as many low-income settings procure their products through donations, often from abroad, these items are likely to be in high circulation given the high global prevalence of mobility-related disabilities, which probably shapes which products donors perceive as most relevant. 1

Interestingly, rATA data show that people with disabilities who did have APs most often obtained their product(s) through purchase, despite cost being the most significant barrier to access. 13 Accordingly, these APs were often purchased from informal and unregulated providers offering lower costs, such as market vendors. 16 In contrast, our findings showed that AT stakeholders providing APs predominantly did so at no cost. This discrepancy could suggest that those who need AT most are not aware of the regulated providers offering free APs and/or AP services in Sierra Leone, or that they cannot reach them because of infrastructural barriers or because they lack the AT needed to navigate their environment in the first place. For example, our data identified only two organizations offering spectacles, yet the rATA indicated that spectacles were the most common AP obtained by people with disabilities sampled in Sierra Leone. This further supports our interpretation that access to free APs is limited when only a small subset of regulated providers offer them, leading people with disabilities in Sierra Leone to rely more heavily on informal and unregulated providers. An interconnected and coherent national AT network could offer a way forward, if collaborative relationships among AT stakeholders continue to be forged and their collective resources, contacts and beneficiaries are shared to advance beneficiary access.

As technology, and what constitutes AT, continues to advance while the prevalence of disability increases, there is a risk that the gap in access to AT will continue to widen. 17 It is therefore paramount that efforts to improve AT-related outcomes, such as access to AT for all, are grounded in measurement of those outcomes. 4 This paper has attempted to provide a systemic snapshot of the AT network in Sierra Leone, highlighting key information such as which assistive products are presently available, who provides them, who receives them (and how), and the relational cohesion of the network itself.

This paper is the first to demonstrate that a targeted investment in assistive technology systems and policies at the national level can affect the nature and strength of relationships within the assistive technology ecosystem. Such investment is therefore recommended as an intervention to engage stakeholders within the assistive technology space, in particular policy makers who have the power to shape AP-related policy and access. However, this work is limited in scope: it provides only a reassessment of outcomes following the one-year investment and does not provide a longitudinal evaluation of the investment's impact in the longer term.

Future research is recommended to replicate this work and evaluate whether access to assistive technologies improves over a longer period as a result of targeted policy and systems change, and to examine broader impacts on policy formulation for AP access. For example, collecting data on which types and categories of AP are manufactured locally could inform policy that encourages the continuity of local manufacturing while improving access to AP. Further studies investigating the factors behind the limited uptake of free AP by persons with disabilities, as discussed above, are also recommended.

CONCLUSIONS

Cohesive AT networks are particularly important in low-income settings such as Sierra Leone, where the intersection of poverty and disability disproportionately reduces people with disabilities’ access to the AT they need. Power and colleagues 18 have proposed the Assistive Technology Embedded Systems Thinking (ATEST) Model as a way of conceptualising the embedded relationships between individual-community-system-country-world influences on assistive technology provision. This paper suggests that even where resources are scarce and systemic relationships are uneven, an internationally funded investment that embraces the participation of country-level stakeholders and service-providing organisations can enhance inter-organisational working, which in turn has the potential to use existing resources more effectively and allow greater access to services for the individuals most in need.

The findings of this paper demonstrate that increased organizational collaboration can strengthen assistive technology networks; however, cost remains a key barrier to access, both for organizations providing AT and for people with disabilities seeking to obtain it. Future work should use systemic approaches that leverage organizational relationality and prioritize the financial accessibility of AT within AT policy and practice, making the most of existing resources (particularly no-cost AT) and advancing towards the ultimate goal of increased access to AT for all.

Ethics Statement

Ethical approval for the study was granted by Maynooth University and the Sierra Leone Ethics and Scientific Review Committee. The study involved human participants but was not a clinical trial. All participants provided informed consent freely and were aware they could withdraw from the study at any time.

Data Availability

All data generated or analysed during this study are included in this article.

This work was funded through the Assistive Technology 2030 project, which is funded by the United Kingdom Foreign, Commonwealth and Development Office (FCDO; UK Aid) and administered by the Global Disability Innovation Hub.

Authorship Contributions

Stephanie Huff led the manuscript preparation and contributed to data analysis. Emma M. Smith led the research design, data collection, analysis and contributed to manuscript preparation. Finally, Malcolm MacLachlan contributed to research design, analysis, manuscript review, and supervision. All authors read and approved the final manuscript.

Disclosure of interest

The authors completed the ICMJE Disclosure of Interest Form (available upon request from the corresponding author) and disclose no relevant interests.

Correspondence to:

Emma M. Smith Maynooth University Maynooth, Co. Kildare Ireland [email protected]

Submitted: February 27, 2024 BST

Accepted: June 26, 2024 BST


Guidance Regarding Methods for De-identification of Protected Health Information in Accordance with the Health Insurance Portability and Accountability Act (HIPAA) Privacy Rule

This page provides guidance about methods and approaches to achieve de-identification in accordance with the Health Insurance Portability and Accountability Act of 1996 (HIPAA) Privacy Rule. The guidance explains and answers questions regarding the two methods that can be used to satisfy the Privacy Rule’s de-identification standard: Expert Determination and Safe Harbor 1 . This guidance is intended to help covered entities understand what de-identification is, the general process by which de-identified information is created, and the options available for performing de-identification.

In developing this guidance, the Office for Civil Rights (OCR) solicited input from stakeholders with practical, technical and policy experience in de-identification. OCR convened stakeholders at a workshop consisting of multiple panel sessions held March 8-9, 2010, in Washington, DC. Each panel addressed a specific topic related to the Privacy Rule’s de-identification methodologies and policies. The workshop was open to the public and each panel was followed by a question and answer period.

- 1.1 Protected Health Information
- 1.2 Covered Entities, Business Associates, and PHI
- 1.3 De-identification and its Rationale
- 1.4 The De-identification Standard
- 1.5 Preparation for De-identification

Guidance on Satisfying the Expert Determination Method

- 2.1 Have expert determinations been applied outside of the health field?
- 2.2 Who is an “expert?”
- 2.3 What is an acceptable level of identification risk for an expert determination?
- 2.4 How long is an expert determination valid for a given data set?
- 2.5 Can an expert derive multiple solutions from the same data set for a recipient?
- 2.6 How do experts assess the risk of identification of information?
- 2.7 What are the approaches by which an expert assesses the risk that health information can be identified?
- 2.8 What are the approaches by which an expert mitigates the risk of identification of an individual in health information?
- 2.9 Can an Expert determine a code derived from PHI is de-identified?
- 2.10 Must a covered entity use a data use agreement when sharing de-identified data to satisfy the Expert Determination Method?

Guidance on Satisfying the Safe Harbor Method

- 3.1 When can ZIP codes be included in de-identified information?
- 3.2 May parts or derivatives of any of the listed identifiers be disclosed consistent with the Safe Harbor Method?
- 3.3 What are examples of dates that are not permitted according to the Safe Harbor Method?
- 3.4 Can dates associated with test measures for a patient be reported in accordance with Safe Harbor?
- 3.5 What constitutes “any other unique identifying number, characteristic, or code” with respect to the Safe Harbor method of the Privacy Rule?
- 3.6 What is “actual knowledge” that the remaining information could be used either alone or in combination with other information to identify an individual who is a subject of the information?
- 3.7 If a covered entity knows of specific studies about methods to re-identify health information or use de-identified health information alone or in combination with other information to identify an individual, does this necessarily mean a covered entity has actual knowledge under the Safe Harbor method?
- 3.8 Must a covered entity suppress all personal names, such as physician names, from health information for it to be designated as de-identified?
- 3.9 Must a covered entity use a data use agreement when sharing de-identified data to satisfy the Safe Harbor Method?
- 3.10 Must a covered entity remove protected health information from free text fields to satisfy the Safe Harbor Method?

Glossary of Terms

Protected Health Information

The HIPAA Privacy Rule protects most “individually identifiable health information” held or transmitted by a covered entity or its business associate, in any form or medium, whether electronic, on paper, or oral. The Privacy Rule calls this information protected health information (PHI) 2 . Protected health information is information, including demographic information, which relates to:

- the individual’s past, present, or future physical or mental health or condition,

- the provision of health care to the individual, or

- the past, present, or future payment for the provision of health care to the individual,

and that identifies the individual or for which there is a reasonable basis to believe it can be used to identify the individual. Protected health information includes many common identifiers (e.g., name, address, birth date, Social Security Number) when they can be associated with the health information listed above.

For example, a medical record, laboratory report, or hospital bill would be PHI because each document would contain a patient’s name and/or other identifying information associated with the health data content.

By contrast, a health plan report that only noted the average age of health plan members was 45 years would not be PHI because that information, although developed by aggregating information from individual plan member records, does not identify any individual plan members and there is no reasonable basis to believe that it could be used to identify an individual.

The relationship with health information is fundamental. Identifying information alone, such as personal names, residential addresses, or phone numbers, would not necessarily be designated as PHI. For instance, if such information was reported as part of a publicly accessible data source, such as a phone book, then this information would not be PHI because it is not related to health data (see above). If such information was listed with health condition, health care provision or payment data, such as an indication that the individual was treated at a certain clinic, then this information would be PHI.


Covered Entities, Business Associates, and PHI

In general, the protections of the Privacy Rule apply to information held by covered entities and their business associates. HIPAA defines a covered entity as 1) a health care provider that conducts certain standard administrative and financial transactions in electronic form; 2) a health care clearinghouse; or 3) a health plan. 3 A business associate is a person or entity (other than a member of the covered entity’s workforce) that performs certain functions or activities on behalf of, or provides certain services to, a covered entity that involve the use or disclosure of protected health information. A covered entity may use a business associate to de-identify PHI on its behalf only to the extent such activity is authorized by their business associate agreement.

See the OCR website https://www.hhs.gov/ocr/privacy/ for detailed information about the Privacy Rule and how it protects the privacy of health information.

De-identification and its Rationale

The increasing adoption of health information technologies in the United States accelerates their potential to facilitate beneficial studies that combine large, complex data sets from multiple sources. The process of de-identification, by which identifiers are removed from the health information, mitigates privacy risks to individuals and thereby supports the secondary use of data for comparative effectiveness studies, policy assessment, life sciences research, and other endeavors.

The Privacy Rule was designed to protect individually identifiable health information through permitting only certain uses and disclosures of PHI provided by the Rule, or as authorized by the individual subject of the information. However, in recognition of the potential utility of health information even when it is not individually identifiable, §164.502(d) of the Privacy Rule permits a covered entity or its business associate to create information that is not individually identifiable by following the de-identification standard and implementation specifications in §164.514(a)-(b). These provisions allow the entity to use and disclose information that neither identifies nor provides a reasonable basis to identify an individual. 4 As discussed below, the Privacy Rule provides two de-identification methods: 1) a formal determination by a qualified expert; or 2) the removal of specified individual identifiers as well as absence of actual knowledge by the covered entity that the remaining information could be used alone or in combination with other information to identify the individual.

Both methods, even when properly applied, yield de-identified data that retains some risk of identification. Although the risk is very small, it is not zero, and there is a possibility that de-identified data could be linked back to the identity of the patient to whom it corresponds.

Regardless of the method by which de-identification is achieved, the Privacy Rule does not restrict the use or disclosure of de-identified health information, as it is no longer considered protected health information.

The De-identification Standard

Section 164.514(a) of the HIPAA Privacy Rule provides the standard for de-identification of protected health information. Under this standard, health information is not individually identifiable if it does not identify an individual and if the covered entity has no reasonable basis to believe it can be used to identify an individual.

§ 164.514 Other requirements relating to uses and disclosures of protected health information. (a) Standard: de-identification of protected health information. Health information that does not identify an individual and with respect to which there is no reasonable basis to believe that the information can be used to identify an individual is not individually identifiable health information.

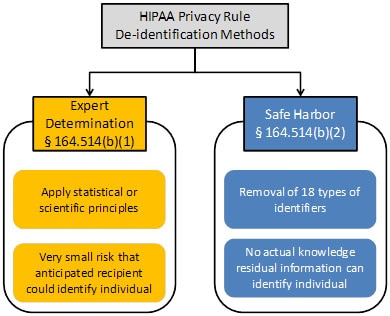

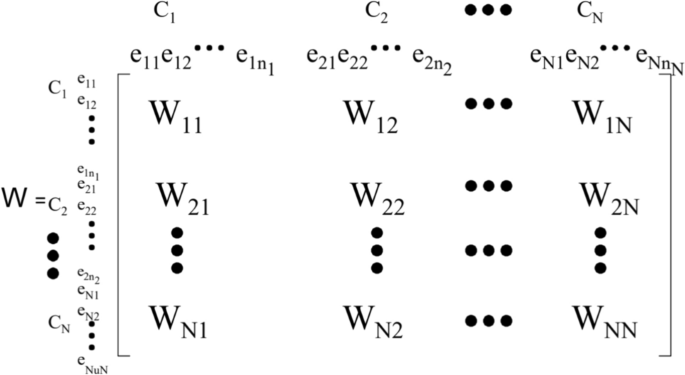

Sections 164.514(b) and (c) of the Privacy Rule contain the implementation specifications that a covered entity must follow to meet the de-identification standard. As summarized in Figure 1, the Privacy Rule provides two methods by which health information can be designated as de-identified.

Figure 1. Two methods to achieve de-identification in accordance with the HIPAA Privacy Rule.

The first is the “Expert Determination” method:

(b) Implementation specifications: requirements for de-identification of protected health information. A covered entity may determine that health information is not individually identifiable health information only if: (1) A person with appropriate knowledge of and experience with generally accepted statistical and scientific principles and methods for rendering information not individually identifiable: (i) Applying such principles and methods, determines that the risk is very small that the information could be used, alone or in combination with other reasonably available information, by an anticipated recipient to identify an individual who is a subject of the information; and (ii) Documents the methods and results of the analysis that justify such determination; or

The second is the “Safe Harbor” method:

(2)(i) The following identifiers of the individual or of relatives, employers, or household members of the individual, are removed:

(A) Names

(B) All geographic subdivisions smaller than a state, including street address, city, county, precinct, ZIP code, and their equivalent geocodes, except for the initial three digits of the ZIP code if, according to the current publicly available data from the Bureau of the Census: (1) The geographic unit formed by combining all ZIP codes with the same three initial digits contains more than 20,000 people; and (2) The initial three digits of a ZIP code for all such geographic units containing 20,000 or fewer people is changed to 000

(C) All elements of dates (except year) for dates that are directly related to an individual, including birth date, admission date, discharge date, death date, and all ages over 89 and all elements of dates (including year) indicative of such age, except that such ages and elements may be aggregated into a single category of age 90 or older

(D) Telephone numbers

(E) Fax numbers

(F) Email addresses

(G) Social security numbers

(H) Medical record numbers

(I) Health plan beneficiary numbers

(J) Account numbers

(K) Certificate/license numbers

(L) Vehicle identifiers and serial numbers, including license plate numbers

(M) Device identifiers and serial numbers

(N) Web Universal Resource Locators (URLs)

(O) Internet Protocol (IP) addresses

(P) Biometric identifiers, including finger and voice prints

(Q) Full-face photographs and any comparable images

(R) Any other unique identifying number, characteristic, or code, except as permitted by paragraph (c) of this section [Paragraph (c) is presented below in the section “Re-identification”]; and

(ii) The covered entity does not have actual knowledge that the information could be used alone or in combination with other information to identify an individual who is a subject of the information.
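Two of the Safe Harbor rules, (B) for ZIP codes and (C) for dates and ages, are mechanical enough to sketch in Python. The sketch below is illustrative only: `zip3_population` is a hypothetical stand-in for a lookup built from current Census data (the figures in the example are made up), a prefix missing from the lookup is treated as small by assumption, and the other identifiers as well as the actual-knowledge condition in (ii) still have to be addressed separately.

```python
from datetime import date


def generalize_zip(zip_code: str, zip3_population: dict) -> str:
    """(B): keep the first three digits only if that area has more than 20,000 people."""
    prefix = zip_code[:3]
    # Assumption: a prefix missing from the lookup is treated as small (fail closed).
    if zip3_population.get(prefix, 0) > 20_000:
        return prefix
    return "000"


def generalize_date(d: date) -> int:
    """(C): keep only the year of a date directly related to the individual."""
    return d.year


def generalize_age(age: int) -> str:
    """(C): ages over 89 are aggregated into a single '90 or older' category."""
    return "90+" if age > 89 else str(age)


def generalize_birth_date(birth: date, age: int) -> str:
    """(C): for people over 89, even the birth year would reveal the age."""
    return "90+" if age > 89 else str(birth.year)


# Hypothetical populations for two 3-digit ZIP areas.
populations = {"123": 250_000, "456": 12_000}
print(generalize_zip("12345", populations))  # 123
print(generalize_zip("45601", populations))  # 000
print(generalize_date(date(2021, 7, 14)))    # 2021
print(generalize_age(93))                    # 90+
```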

Satisfying either method would demonstrate that a covered entity has met the standard in §164.514(a) above. De-identified health information created following these methods is no longer protected by the Privacy Rule because it does not fall within the definition of PHI. Of course, de-identification leads to information loss which may limit the usefulness of the resulting health information in certain circumstances. As described in the forthcoming sections, covered entities may wish to select de-identification strategies that minimize such loss.

Re-identification

The implementation specifications further provide direction with respect to re-identification, specifically the assignment of a unique code to the set of de-identified health information to permit re-identification by the covered entity.

If a covered entity or business associate successfully undertook an effort to identify the subject of de-identified information it maintained, the health information now related to a specific individual would again be protected by the Privacy Rule, as it would meet the definition of PHI. Disclosure of a code or other means of record identification designed to enable coded or otherwise de-identified information to be re-identified is also considered a disclosure of PHI.

(c) Implementation specifications: re-identification. A covered entity may assign a code or other means of record identification to allow information de-identified under this section to be re-identified by the covered entity, provided that: (1) Derivation. The code or other means of record identification is not derived from or related to information about the individual and is not otherwise capable of being translated so as to identify the individual; and (2) Security. The covered entity does not use or disclose the code or other means of record identification for any other purpose, and does not disclose the mechanism for re-identification.
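A minimal Python sketch of a re-identification code consistent with the two conditions in paragraph (c): the code is generated randomly rather than derived from the individual's information, and the code-to-record mapping stays with the covered entity. The class and method names are hypothetical. By contrast, a code computed from an identifier, such as a hash of a medical record number, would be derived from information about the individual, which condition (c)(1) excludes.

```python
import secrets


class ReidentificationKey:
    """Hypothetical helper that assigns random record codes for a de-identified data set."""

    def __init__(self):
        # Both maps are kept by the covered entity and never disclosed (condition (c)(2)).
        self._code_by_id = {}
        self._id_by_code = {}

    def code_for(self, record_id: str) -> str:
        """Return the code for an individual, creating a random one on first use."""
        if record_id not in self._code_by_id:
            # Random bytes: the code is not derived from the individual (condition (c)(1)).
            code = secrets.token_hex(8)
            while code in self._id_by_code:  # guard against an (unlikely) collision
                code = secrets.token_hex(8)
            self._code_by_id[record_id] = code
            self._id_by_code[code] = record_id
        return self._code_by_id[record_id]

    def reidentify(self, code: str) -> str:
        """Internal use only: map a code back to the covered entity's record identifier."""
        return self._id_by_code[code]


key = ReidentificationKey()
code = key.code_for("MRN-0001")
print(len(code))  # 16 hex characters
```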

Preparation for De-identification

The importance of documentation for the de-identification process, covering both which values in health data correspond to PHI and the systems that manage PHI, cannot be overstated. Esoteric notation, such as acronyms whose meanings are known only to a select few employees of a covered entity, and incomplete descriptions may lead those overseeing a de-identification procedure to redact information unnecessarily or to fail to redact when necessary. When sufficient documentation is provided, it is straightforward to redact the appropriate fields. See section 3.10 for a more complete discussion.
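To illustrate why documentation matters, here is a minimal sketch of dictionary-driven redaction in Python. The field names, descriptions and the choice to withhold and flag undocumented fields (rather than guess at their meaning) are assumptions for illustration, not a prescribed procedure; a real Safe Harbor pipeline would also generalize some fields, such as dates, rather than simply drop them.

```python
# Hypothetical data dictionary: field name -> (description, is_identifier).
DATA_DICTIONARY = {
    "pt_nm": ("Patient full name", True),
    "tel": ("Patient telephone number", True),
    "gluc": ("Blood glucose result (mg/dL)", False),
}


def redact(record: dict, dictionary: dict):
    """Keep documented non-identifying fields; also return any undocumented fields."""
    kept = {}
    undocumented = []
    for field, value in record.items():
        entry = dictionary.get(field)
        if entry is None:
            # Meaning unknown: withhold the field and flag it for review instead of guessing.
            undocumented.append(field)
            continue
        _description, is_identifier = entry
        if not is_identifier:
            kept[field] = value
    return kept, undocumented


record = {"pt_nm": "Jane Doe", "tel": "555-0100", "gluc": 98, "xq7": "A1"}
print(redact(record, DATA_DICTIONARY))  # ({'gluc': 98}, ['xq7'])
```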

In the following two sections, we address questions regarding the Expert Determination method (Section 2) and the Safe Harbor method (Section 3).

In §164.514(b), the Expert Determination method for de-identification is defined as follows:

(1) A person with appropriate knowledge of and experience with generally accepted statistical and scientific principles and methods for rendering information not individually identifiable: (i) Applying such principles and methods, determines that the risk is very small that the information could be used, alone or in combination with other reasonably available information, by an anticipated recipient to identify an individual who is a subject of the information; and (ii) Documents the methods and results of the analysis that justify such determination

Have expert determinations been applied outside of the health field?

Yes. The notion of expert certification is not unique to the health care field. Professional scientists and statisticians in various fields routinely determine and accordingly mitigate risk prior to sharing data. The field of statistical disclosure limitation, for instance, has been developed within government statistical agencies, such as the Bureau of the Census, and applied to protect numerous types of data. 5

Who is an “expert?”

There is no specific professional degree or certification program for designating who is an expert at rendering health information de-identified. Relevant expertise may be gained through various routes of education and experience. Experts may be found in the statistical, mathematical, or other scientific domains. From an enforcement perspective, OCR would review the relevant professional experience and academic or other training of the expert used by the covered entity, as well as actual experience of the expert using health information de-identification methodologies.

What is an acceptable level of identification risk for an expert determination?

There is no explicit numerical level of identification risk that is deemed to universally meet the “very small” level indicated by the method. The ability of a recipient of information to identify an individual (i.e., subject of the information) is dependent on many factors, which an expert will need to take into account while assessing the risk from a data set. This is because the risk of identification that has been determined for one particular data set in the context of a specific environment may not be appropriate for the same data set in a different environment or a different data set in the same environment. As a result, an expert will define an acceptable “very small” risk based on the ability of an anticipated recipient to identify an individual. This issue is addressed in further depth in Section 2.6.

How long is an expert determination valid for a given data set?

The Privacy Rule does not explicitly require that an expiration date be attached to the determination that a data set, or the method that generated such a data set, is de-identified information. However, experts have recognized that technology, social conditions, and the availability of information change over time. Consequently, certain de-identification practitioners use the approach of time-limited certifications. In this sense, the expert will assess the expected change in computational capability, as well as access to various data sources, and then determine an appropriate timeframe within which the health information will be considered reasonably protected from identification of an individual.

Information that had previously been de-identified may still be adequately de-identified when the certification limit has been reached. The end of the certification timeframe does not imply that data already disseminated are no longer sufficiently protected in accordance with the de-identification standard. Covered entities will need to have an expert examine whether future releases of the data to the same recipient (e.g., monthly reporting) should be subject to additional or different de-identification processes consistent with current conditions to meet the very small risk requirement.

Can an expert derive multiple solutions from the same data set for a recipient?

Yes. Experts may design multiple solutions, each of which is tailored to the covered entity’s expectations regarding information reasonably available to the anticipated recipient of the data set. In such cases, the expert must take care to ensure that the data sets cannot be combined to compromise the protections set in place through the mitigation strategy. (Of course, the expert must also reduce the risk that the data sets could be combined with prior versions of the de-identified dataset or with other publicly available datasets to identify an individual.) For instance, an expert may derive one data set that contains detailed geocodes and generalized age values (e.g., 5-year age ranges) and another data set that contains generalized geocodes (e.g., only the first two digits) and fine-grained age (e.g., days from birth). The expert may certify a covered entity to share both data sets after determining that the two data sets could not be merged to individually identify a patient. This certification may be based on a technical proof regarding the inability to merge such data sets. Alternatively, the expert could also require additional safeguards through a data use agreement.
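The two-release example above can be sketched in Python. The field names and the as-of date are hypothetical, and the sketch only produces the two views; it does not establish that they cannot be merged, which remains the expert's determination. One design point worth noting: neither view carries a shared record identifier, since a common key would make linking the two releases trivial.

```python
from datetime import date


def age_band(age_years: int, width: int = 5) -> str:
    """Generalize an age to a band such as '45-49'."""
    low = (age_years // width) * width
    return f"{low}-{low + width - 1}"


def release_a(record: dict) -> dict:
    """Detailed geocode (5-digit ZIP) with generalized age (5-year band)."""
    return {"zip5": record["zip5"], "age_band": age_band(record["age_years"])}


def release_b(record: dict) -> dict:
    """Generalized geocode (first two ZIP digits) with fine-grained age (days from birth)."""
    return {
        "zip2": record["zip5"][:2],
        "age_days": (record["as_of"] - record["birth_date"]).days,
    }


patient = {"zip5": "12345", "age_years": 47,
           "birth_date": date(1977, 3, 1), "as_of": date(2024, 3, 1)}
print(release_a(patient))          # {'zip5': '12345', 'age_band': '45-49'}
print(release_b(patient)["zip2"])  # 12
```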

How do experts assess the risk of identification of information?

No single universal solution addresses all privacy and identifiability issues. Rather, a combination of technical and policy procedures is often applied to the de-identification task. OCR does not require a particular process for an expert to use to reach a determination that the risk of identification is very small. However, the Rule does require that the methods and results of the analysis that justify the determination be documented and made available to OCR upon request. The following information is meant to provide covered entities with a general understanding of the de-identification process applied by an expert. It does not provide sufficient detail in statistical or scientific methods to serve as a substitute for working with an expert in de-identification.

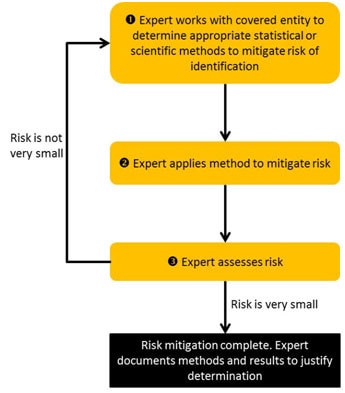

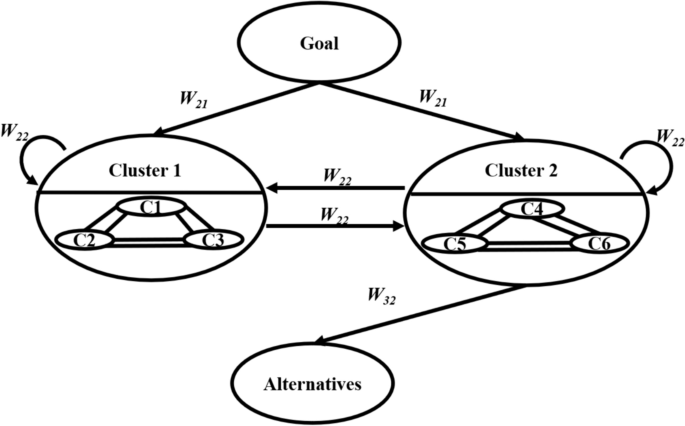

A general workflow for expert determination is depicted in Figure 2. Stakeholder input suggests that the determination of identification risk can be a process that consists of a series of steps. First, the expert will evaluate the extent to which the health information can (or cannot) be identified by the anticipated recipients. Second, the expert often will provide guidance to the covered entity or business associate on which statistical or scientific methods can be applied to the health information to mitigate the anticipated risk. The expert will then execute such methods as deemed acceptable by the covered entity or business associate data managers, i.e., the officials responsible for the design and operations of the covered entity’s information systems. Finally, the expert will evaluate the identifiability of the resulting health information to confirm that the risk is no more than very small when disclosed to the anticipated recipients. Stakeholder input suggests that a process may require several iterations until the expert and data managers agree upon an acceptable solution. Regardless of the process or methods employed, the information must meet the very small risk specification requirement.

Figure 2. Process for expert determination of de-identification.

Data managers and administrators working with an expert to consider the risk of identification of a particular set of health information can look to the principles summarized in Table 1 for assistance. 6 These principles build on those defined by the Federal Committee on Statistical Methodology (which was referenced in the original publication of the Privacy Rule). 7 The table describes principles for considering the identification risk of health information. The principles should serve as a starting point for reasoning and are not meant to serve as a definitive list. In the process, experts are advised to consider how data sources that are available to a recipient of health information (e.g., computer systems that contain information about patients) could be utilized for identification of an individual. 8

Table 1. Principles used by experts in the determination of the identifiability of health information.

| Principle | Description | Low-risk example | High-risk example |
|---|---|---|---|
| Replication | Prioritize health information features into levels of risk according to the chance they will consistently occur in relation to the individual. | Results of a patient’s blood glucose level test will vary. | Demographics of a patient (e.g., birth date) are relatively stable. |
| Data source availability | Determine which external data sources contain the patients’ identifiers and the replicable features in the health information, as well as who is permitted access to the data source. | The results of laboratory reports are not often disclosed with identity beyond healthcare environments. | Patient name and demographics are often in public data sources, such as vital records (birth, death, and marriage registries). |
| Distinguishability | Determine the extent to which the subject’s data can be distinguished in the health information. | It has been estimated that the combination of Year of Birth, Gender, and 3-Digit ZIP Code is unique for approximately 0.04% of residents in the United States 9. This means that very few residents could be identified through this combination of data alone. | It has been estimated that the combination of a patient’s Date of Birth, Gender, and 5-Digit ZIP Code is unique for over 50% of residents in the United States 10, 11. This means that over half of U.S. residents could be uniquely described just with these three data elements. |
| Assess risk | The greater the replicability, availability, and distinguishability of the health information, the greater the risk for identification. | Laboratory values may be very distinguishing, but they are rarely independently replicable and are rarely disclosed in multiple data sources to which many people have access. | Demographics are highly distinguishing, highly replicable, and are available in public data sources. |

When evaluating identification risk, an expert often considers the degree to which a data set can be “linked” to a data source that reveals the identity of the corresponding individuals. Linkage requires three conditions to be satisfied. The first condition is that the de-identified data are unique or “distinguishing.” It should be recognized, however, that the ability to distinguish data is, by itself, insufficient to compromise the corresponding patient’s privacy. This is because of the second condition: the need for a naming data source, such as a publicly available voter registration database (see Section 2.6). Without such a data source, there is no way to definitively link the de-identified health information to the corresponding patient. The third condition is a mechanism to relate the de-identified and identified data sources. Without such a mechanism, a third party could do no better than randomly pairing de-identified records with named individuals. The lack of a readily available naming data source does not imply that data are sufficiently protected from future identification, but it does indicate that it is harder to re-identify an individual, or group of individuals, given the data sources at hand.

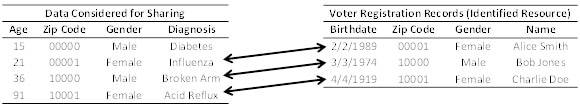

Example Scenario

Imagine that a covered entity is considering sharing the information in the table on the left of Figure 3. This table is devoid of explicit identifiers, such as personal names and Social Security Numbers. The information in this table is distinguishing: each row is unique on the combination of demographics (i.e., Age, ZIP Code, and Gender). In addition, a voter registration data source exists that contains personal names as well as demographics (i.e., Birthdate, ZIP Code, and Gender), which are also distinguishing. Linkage between the records in the two tables is possible through the demographics. Notice, however, that the first record in the covered entity’s table is not linked because the patient is not yet old enough to vote.

Figure 3. Linking two data sources to identify diagnoses.
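The three linkage conditions, applied to a scenario like the one in Figure 3, can be sketched in Python. The records, voter names, and reference date below are hypothetical, because the figure's actual contents are not reproduced here. Relating the two sources by computing age from birthdate and matching it with ZIP code and gender is one assumed mechanism, not the only one.

```python
from collections import Counter
from datetime import date
from typing import Dict, List, Tuple

Key = Tuple[int, str, str]


def age_on(birthdate: date, as_of: date) -> int:
    """Whole years of age on the reference date `as_of`."""
    before_birthday = (as_of.month, as_of.day) < (birthdate.month, birthdate.day)
    return as_of.year - birthdate.year - int(before_birthday)


def link(health: List[Dict], voters: List[Dict], as_of: date) -> Dict[int, str]:
    """Map row indexes in `health` to voter names where linkage is unambiguous."""

    def key(row: Dict) -> Key:
        return (row["age"], row["zip"], row["gender"])

    # Condition 1: a record must be distinguishing (unique) in the shared data.
    health_counts = Counter(key(r) for r in health)

    # Conditions 2 and 3: a naming source, plus a mechanism relating the two
    # sources (here, age derived from birthdate, matched with ZIP and gender).
    named: Dict[Key, List[str]] = {}
    for v in voters:
        k = (age_on(v["birthdate"], as_of), v["zip"], v["gender"])
        named.setdefault(k, []).append(v["name"])

    links = {}
    for i, row in enumerate(health):
        candidates = named.get(key(row), [])
        if health_counts[key(row)] == 1 and len(candidates) == 1:
            links[i] = candidates[0]
    return links


# Hypothetical data: the covered entity's table has no names; the voter list does.
health = [
    {"age": 15, "zip": "00000", "gender": "Male", "diagnosis": "Diabetes"},
    {"age": 21, "zip": "00001", "gender": "Female", "diagnosis": "Influenza"},
    {"age": 36, "zip": "10000", "gender": "Male", "diagnosis": "Broken Arm"},
]
voters = [
    {"name": "Voter A", "birthdate": date(2002, 3, 1), "zip": "00001", "gender": "Female"},
    {"name": "Voter B", "birthdate": date(1987, 6, 15), "zip": "10000", "gender": "Male"},
]
print(link(health, voters, as_of=date(2023, 7, 1)))  # {1: 'Voter A', 2: 'Voter B'}
```

The 15-year-old's record stays unlinked because no one that age appears in the voter list, which mirrors the first record in Figure 3. Duplicating any record in `health` would also block its linkage, because the first condition (distinguishability) would no longer hold.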

Thus, an important aspect of identification risk assessment is the route by which health information can be linked to naming sources or by which sensitive knowledge can be inferred. A higher-risk “feature” is one that is found in many places and is publicly available. These are features that could be exploited by anyone who receives the information. For instance, patient demographics could be classified as high-risk features. In contrast, lower-risk features are those that do not appear in public records or are less readily available. For instance, clinical features, such as blood pressure, or temporal dependencies between events within a hospital (e.g., minutes between dispensation of pharmaceuticals) may uniquely characterize a patient in a hospital population, but the data sources to which such information could be linked to identify a patient are accessible to a much smaller set of people.

Example Scenario

An expert is asked to assess the identifiability of a patient’s demographics. First, the expert will determine whether the demographics are independently replicable. Features such as birth date and gender are strongly independently replicable (the individual will always have the same birth date), whereas ZIP code of residence is less so because an individual may relocate. Second, the expert will determine which data sources that contain the individual’s identification also contain the demographics in question. In this case, the expert may determine that public records, such as birth, death, and marriage registries, are the most likely data sources to be leveraged for identification. Third, the expert will determine whether the specific information to be disclosed is distinguishable. At this point, the expert may determine that certain combinations of values (e.g., Asian males born in January of 1915 and living in a particular 5-digit ZIP code) are unique, whereas others (e.g., white females born in March of 1972 and living in a different 5-digit ZIP code) are never unique. Finally, the expert will determine whether the data sources that could be used in the identification process are readily accessible, which may differ by region. For instance, voter registration lists are free in the state of North Carolina but cost over $15,000 in the state of Wisconsin. Thus, data shared in the former state may be deemed riskier than data shared in the latter. 12

What are the approaches by which an expert assesses the risk that health information can be identified?

The de-identification standard does not mandate a particular method for assessing risk.

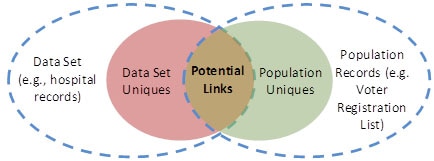

A qualified expert may apply generally accepted statistical or scientific principles to estimate the likelihood that a record in a data set is unique, or linkable to only one person, within the population to which it is being compared. Figure 4 provides a visualization of this concept. 13 This figure illustrates a situation in which the records in a data set are not a proper subset of the population for whom identified information is known. This could occur, for instance, if the data set includes patients over one year old but the population to which it is compared includes data on people over 18 years old (e.g., registered voters).

The computation of population uniques can be achieved in numerous ways, such as through the approaches outlined in published literature. 14, 15 For instance, if an expert is attempting to assess whether the combination of a patient’s race, age, and geographic region of residence is unique, the expert may use population statistics published by the U.S. Census Bureau to assist in this estimation. When population statistics are unavailable or unknown, the expert may calculate and rely on statistics derived from the data set itself. This is because a record can only be linked between the data set and the population to which it is being compared if it is unique in both. Thus, by relying on the statistics derived from the data set, the expert will make a conservative estimate regarding the uniqueness of records.

Example Scenario

Imagine a covered entity has a data set in which there is one 25-year-old male from a certain geographic region in the United States. In truth, there are five 25-year-old males in the geographic region in question (i.e., the population). Unfortunately, there is no readily available data source to inform an expert about the number of 25-year-old males in this geographic region.

By inspecting the data set, it is clear to the expert that there is at least one 25-year-old male in the population, but the expert does not know whether there are more. So, without any additional knowledge, the expert assumes there are no more, such that the record in the data set is unique. Based on this observation, the expert recommends removing this record from the data set. In doing so, the expert has made a conservative decision with respect to the uniqueness of the record.
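The conservative rule in this scenario can be sketched as follows. The function names and the tuple-of-values key are hypothetical. `population_counts` stands in for whatever published statistics (such as Census tables) an expert might have. The fallback to data-set counts follows the reasoning in the text: a record can only be linked if it is unique in both the data set and the population.

```python
from collections import Counter
from typing import Callable, Dict, Hashable, List, Optional, Set


def population_uniques(
    sample_keys: List[Hashable],
    population_counts: Optional[Dict[Hashable, int]] = None,
) -> Set[Hashable]:
    """Return combinations treated as unique in both the data set and population."""
    sample_counts = Counter(sample_keys)
    uniques = set()
    for combo, n_sample in sample_counts.items():
        if population_counts is not None and combo in population_counts:
            n_population = population_counts[combo]  # published statistics
        else:
            # No population figure: conservatively assume the data set is the
            # whole population, so a sample unique counts as a population unique.
            n_population = n_sample
        if n_sample == 1 and n_population == 1:
            uniques.add(combo)
    return uniques


def remove_uniques(
    records: List[Dict],
    key: Callable[[Dict], Hashable],
    population_counts: Optional[Dict[Hashable, int]] = None,
) -> List[Dict]:
    """Drop records whose key is (assumed to be) unique in the population."""
    keys = [key(r) for r in records]
    risky = population_uniques(keys, population_counts)
    return [r for r, k in zip(records, keys) if k not in risky]


def by_demographics(r: Dict) -> Hashable:
    """Hypothetical key: age, gender, and region of residence."""
    return (r["age"], r["gender"], r["region"])


# The scenario: one 25-year-old male in the data set, no population figure.
records = [
    {"age": 25, "gender": "Male", "region": "Region X"},
    {"age": 40, "gender": "Female", "region": "Region X"},
    {"age": 40, "gender": "Female", "region": "Region X"},
]
print(len(remove_uniques(records, by_demographics)))  # 2
```

If the expert did know that the population holds five such men, passing `{(25, "Male", "Region X"): 5}` as `population_counts` would keep the record. Without that knowledge, the sketch errs toward removal, as the expert does above.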

In the previous example, the expert provided a solution (i.e., removing a record from a dataset) to achieve de-identification, but this is one of many possible solutions that an expert could offer. In practice, an expert may provide the covered entity with multiple alternative strategies, based on scientific or statistical principles, to mitigate risk.

Figure 4. Relationship between uniques in the data set and the broader population, as well as the degree to which linkage can be achieved.

The expert may consider different measures of “risk,” depending on the concern of the organization looking to disclose information. The expert will attempt to determine which record in the data set is the most vulnerable to identification. However, in certain instances, the expert may not know which particular record to be disclosed will be most vulnerable for identification purposes. In this case, the expert may attempt to compute risk from several different perspectives.
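Two commonly used ways to express such perspectives are shown below. They are offered as an assumption, not as something the guidance prescribes. The first is the risk to the most vulnerable record: one divided by the size of the smallest group of records that share the same identifying values. The second is the average risk across all records. The function name, the key values, and the `threshold` parameter are hypothetical.

```python
from collections import Counter
from typing import Dict, Hashable, List


def risk_measures(keys: List[Hashable], threshold: float) -> Dict[str, float]:
    """Summarize re-identification risk from each record's identifying key.

    A record whose values are shared by k records is assigned risk 1/k,
    the chance of picking the right one at random among k look-alikes.
    """
    group_size = Counter(keys)
    per_record = [1 / group_size[k] for k in keys]
    n = len(per_record)
    return {
        "maximum": max(per_record),                # the most vulnerable record
        "average": sum(per_record) / n,            # equals (number of groups) / n
        "share_above_threshold": sum(p > threshold for p in per_record) / n,
    }


measures = risk_measures(["a", "a", "b", "c", "c", "c"], threshold=0.4)
print(measures["maximum"], measures["share_above_threshold"])  # 1.0 0.5
```

In this example the average risk is 0.5, yet one record (the lone "b") is fully exposed. This is one reason an expert who does not know which record will be most vulnerable may look at more than one measure.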

What are the approaches by which an expert mitigates the risk of identification of an individual in health information?

The Privacy Rule does not require a particular approach to mitigate, or reduce to very small, identification risk. The following provides a survey of potential approaches. An expert may find all or only one appropriate for a particular project, or may use another method entirely.

If an expert determines that the risk of identification is greater than very small, the expert may modify the information to mitigate the identification risk to that level, as required by the de-identification standard. In general, the expert will adjust certain features or values in the data to ensure that unique, identifiable elements no longer exist or are not expected to exist. Some of the methods described below have been reviewed by the Federal Committee on Statistical Methodology 16, which was referenced in the original preamble guidance to the Privacy Rule de-identification standard and recently revised.

Several broad classes of methods can be applied to protect data. An overarching common goal of such approaches is to balance disclosure risk against data utility. 17 If one approach results in very small identity disclosure risk but also a set of data with little utility, another approach can be considered. However, data utility does not determine when the de-identification standard of the Privacy Rule has been met.

Table 2 illustrates the application of such methods. In this example, we refer to columns as “features” about patients (e.g., Age and Gender) and rows as “records” of patients (e.g., the first and second rows correspond to records on two different patients).

Table 2. An example of protected health information.

| Age | Gender | ZIP Code | Diagnosis |
|---|---|---|---|
| 15 | Male | 00000 | Diabetes |
| 21 | Female | 00001 | Influenza |
| 36 | Male | 10000 | Broken Arm |
| 91 | Female | 10001 | Acid Reflux |
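To show how such methods act on Table 2, the sketch below applies one illustrative combination. It coarsens Age into two bands and ZIP Code to its first four digits (generalization), and it removes Gender (suppression). The bands, the ZIP truncation, and the helper names are hypothetical choices for illustration, not recommended settings. The check measures the size of the smallest group of records sharing Age, Gender, and ZIP Code.

```python
from collections import Counter
from typing import Dict, List

# Table 2 rows (Age, Gender, ZIP Code, Diagnosis).
TABLE_2 = [
    {"age": 15, "gender": "Male", "zip": "00000", "diagnosis": "Diabetes"},
    {"age": 21, "gender": "Female", "zip": "00001", "diagnosis": "Influenza"},
    {"age": 36, "gender": "Male", "zip": "10000", "diagnosis": "Broken Arm"},
    {"age": 91, "gender": "Female", "zip": "10001", "diagnosis": "Acid Reflux"},
]


def generalize(row: Dict) -> Dict:
    """Illustrative transformation: coarsen age and ZIP, suppress gender."""
    return {
        "age": "Under 30" if row["age"] < 30 else "30 and over",  # generalization
        "gender": "*",                                             # suppression
        "zip": row["zip"][:4] + "*",                               # generalization
        "diagnosis": row["diagnosis"],                             # kept for utility
    }


def smallest_group(rows: List[Dict]) -> int:
    """Size of the smallest group sharing Age, Gender, and ZIP Code values."""
    counts = Counter((r["age"], r["gender"], r["zip"]) for r in rows)
    return min(counts.values())


released = [generalize(r) for r in TABLE_2]
print(smallest_group(TABLE_2), smallest_group(released))  # 1 2
```

Every record in Table 2 is unique on these three features. After the transformation, each combination is shared by two records, at the cost of losing Gender entirely and most of the Age detail. This is the risk-utility trade-off the text describes.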