Hypothesis Testing Explained in 4 Parts

By Yuzheng Sun, PhD

As data scientists, we are expected to understand Hypothesis Testing well, but in reality we often do not. The main reason is that our textbooks blend two schools of thought – p-value and significance testing vs. hypothesis testing – inconsistently.

For example, some questions are not obvious unless you have thought through them before:

Are power or beta dependent on the null hypothesis?

Can we accept the null hypothesis? Why?

How does MDE change with alpha holding beta constant?

Why do we use standard error in Hypothesis Testing but not the standard deviation?

Why can’t we be specific about the alternative hypothesis so we can properly model it?

Why is the fundamental tradeoff of the Hypothesis Testing about mistake vs. discovery, not about alpha vs. beta?

Addressing this problem is not easy. The topic of Hypothesis Testing is convoluted. In this article, we introduce 10 concepts incrementally, with visualizations and intuitive explanations. After this article, you will have clear answers to the questions above, understand them on a first-principles level, and be able to explain these concepts well to your stakeholders.

We break this article into four parts.

1. Set up the question properly using core statistical concepts, and connect them to Hypothesis Testing, while striking a balance between technical correctness and simplicity. Specifically,

   - We emphasize a clear distinction between the standard deviation and the standard error, and why the latter is used in Hypothesis Testing

   - We explain fully when you can “accept” a hypothesis, when you should say “failing to reject” instead of “accept”, and why

2. Introduce alpha, type I error, and the critical value with the null hypothesis

3. Introduce beta, type II error, and power with the alternative hypothesis

4. Introduce minimum detectable effects and the relationship between the factors with power calculations, with a high-level summary and practical recommendations

Part 1 - Hypothesis Testing, the central limit theorem, population, sample, standard deviation, and standard error

In Hypothesis Testing, we begin with a null hypothesis, which generally asserts that there is no effect between our treatment and control groups. Commonly, this is expressed as the difference in means between the treatment and control groups being zero.

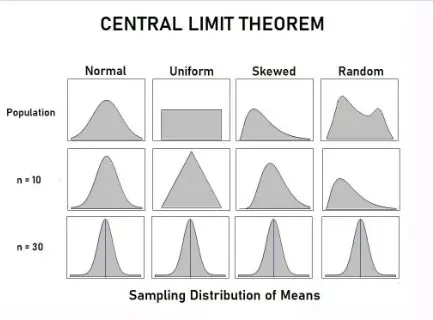

The central limit theorem suggests an important property of this difference in means — given a sufficiently large sample size, the underlying distribution of this difference in means will approximate a normal distribution, regardless of the population's original distribution. There are two notes:

1. The distribution of the population for the treatment and control groups can vary, but the observed means (when you observe many samples and calculate many means) are approximately normally distributed given a large enough sample. Below is a chart, where n=10 and n=30 correspond to the distribution of the sample means.

2. Pay attention to “the underlying distribution”. Standard deviation vs. standard error is a potentially confusing concept. Let’s clarify.

Standard deviation vs. Standard error

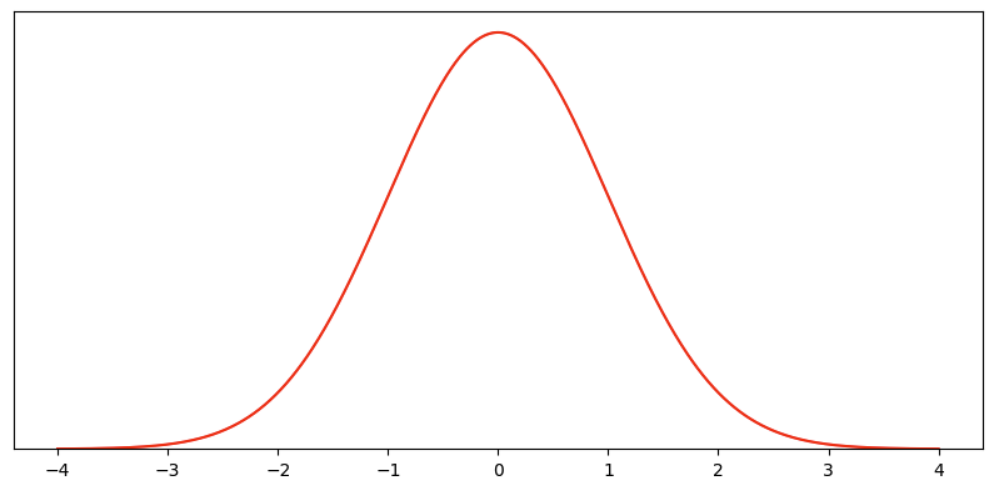

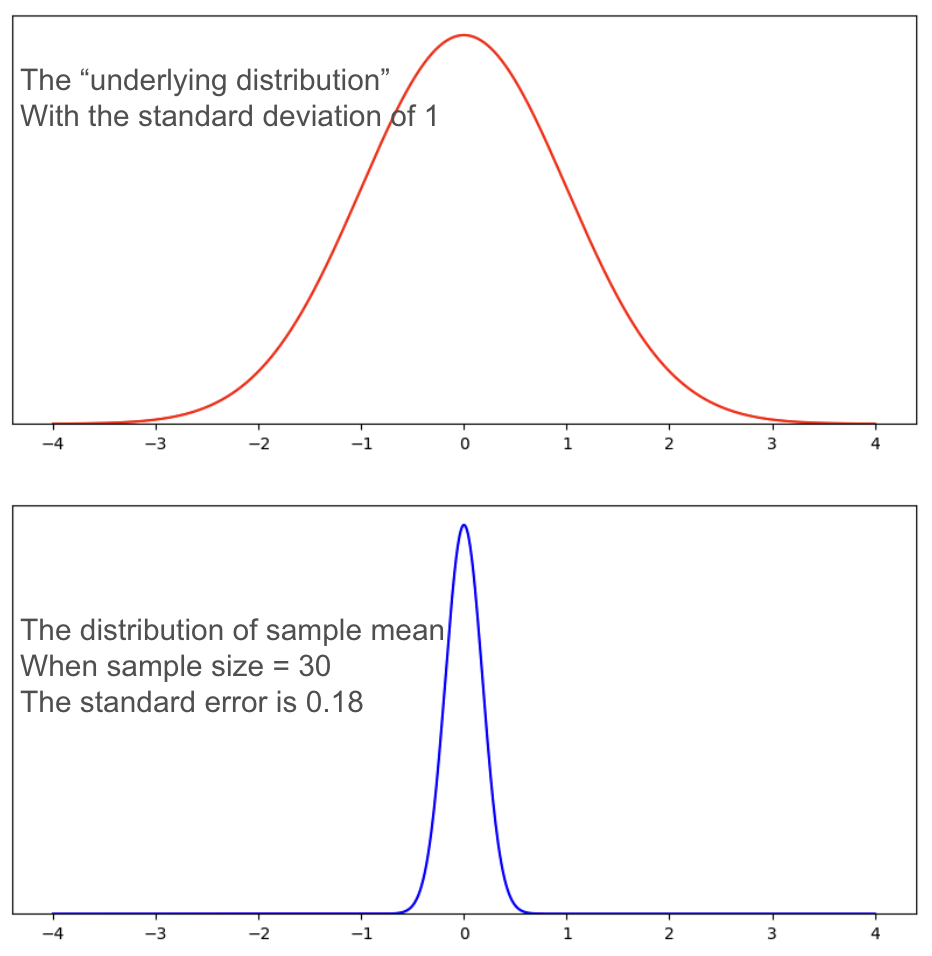

Let’s declare our null hypothesis as having no treatment effect. Then, to simplify, let’s propose the following normal distribution with a mean of 0 and a standard deviation of 1 as the range of possible outcomes with probabilities associated with this null hypothesis.

The language around population, sample, group, and estimators can get confusing. Again, to simplify, let’s forget that the null hypothesis is about the mean estimator, and declare that we can either observe the mean hypothesis once or many times. When we observe it many times, it forms a sample*, and our goal is to make decisions based on this sample.

* For technical folks, the observation is actually about a single sample, many samples are a group, and the difference in groups is the distribution we are talking about as the mean hypothesis. The red curve represents the distribution of the estimator of this difference, and then we can have another sample consisting of many observations of this estimator. In my simplified language, the red curve is the distribution of the estimator, and the blue curve with sample size is the repeated observations of it. If you have a better way to express these concepts without causing confusion, please suggest it.

This probability density function means that if there is one realization from this distribution, the realization can be anywhere on the x-axis, with the relative likelihood on the y-axis.

If we draw multiple observations, they form a sample. Each observation in this sample follows the property of this underlying distribution – more likely to be close to 0, and equally likely to be on either side, which makes the odds of positive and negative cancel each other out, so the mean of this sample is even more centered around 0.

We use the standard error to represent the error of our “sample mean”.

The standard error = the standard deviation of the observed sample / sqrt(sample size).

For a sample size of 30, the standard error is roughly 0.18. Compared with the underlying distribution, the distribution of the sample mean is much narrower.
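To make the 0.18 figure concrete, here is a minimal Python sketch. It assumes the N(0, 1) underlying distribution used above; the function names `standard_error` and `simulate_sample_means`, the seed, and the 5,000 repeats are illustrative choices, not something taken from the original charts.

```python
import math
import random
import statistics


def standard_error(sd, n):
    """Standard error of the sample mean: sd / sqrt(n)."""
    return sd / math.sqrt(n)


def simulate_sample_means(n, repeats, seed=0):
    """Draw `repeats` samples of size `n` from N(0, 1) and return their means."""
    rng = random.Random(seed)  # fixed seed (an arbitrary choice) for repeatability
    means = []
    for _ in range(repeats):
        sample = [rng.gauss(0.0, 1.0) for _ in range(n)]
        means.append(statistics.fmean(sample))
    return means


se_30 = standard_error(1.0, 30)
means = simulate_sample_means(n=30, repeats=5000)

print(f"theoretical standard error: {se_30:.3f}")
print(f"spread of simulated sample means: {statistics.stdev(means):.3f}")
```

The two printed numbers should be close to each other: the standard error is just the standard deviation of the sample mean, which is why the distribution of the sample mean is so much narrower than the underlying distribution.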

In Hypothesis Testing, we try to draw some conclusions – is there a treatment effect or not? – based on a sample. So when we talk about alpha and beta, which are the probabilities of type I and type II errors, we are talking about the probabilities based on the plot of sample means and standard error.

Part 2, The null hypothesis: alpha and the critical value

From Part 1, we stated that a null hypothesis is commonly expressed as the difference in means between the treatment and control groups being zero.

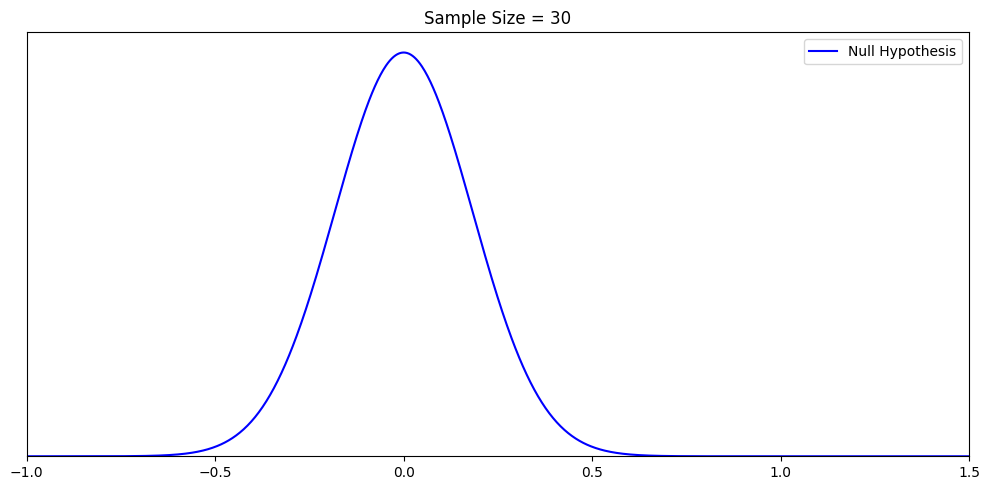

Without loss of generality*, let’s assume the underlying distribution of our null hypothesis has mean 0 and standard deviation 1.

Then the sample mean under the null hypothesis has a mean of 0 and a standard error of 1/√n, where n is the sample size.

When the sample size is 30, this distribution has a standard error of ≈0.18 and looks like the chart below.

*: A note for the technical readers: The null hypothesis is about the difference in means, but here, without complicating things, we made the subtle change to just draw the distribution of this “estimator of this difference in means”. Everything below speaks to this “estimator”.

The reason we have the null hypothesis is that we want to make judgments, particularly whether a treatment effect exists. But in the world of probabilities, any observation, and any sample mean can happen, with different probabilities. So we need a decision rule to help us quantify our risk of making mistakes.

The decision rule is, let’s set a threshold. When the sample mean is above the threshold, we reject the null hypothesis; when the sample mean is below the threshold, we accept the null hypothesis.

Accepting a hypothesis vs. failing to reject a hypothesis

It’s worth noting that you may have heard “we never accept a hypothesis, we just fail to reject a hypothesis” and been subconsciously confused by it. The deep reason is that modern textbooks inconsistently blend Fisher’s significance testing and Neyman-Pearson’s Hypothesis Testing definitions and ignore important caveats (ref). To clarify:

First of all, we can never “prove” a particular hypothesis given any observations, because there are infinitely many hypotheses that could be true (with different probabilities) given an observation. We will visualize it in Part 3.

Second, “accepting” a hypothesis does not mean that you believe in it, but only that you act as if it were true. So technically, there is no problem with “accepting” a hypothesis.

But, third, when we talk about p-values and confidence intervals, “accepting” the null hypothesis is at best confusing. The reason is that “the p-value above the threshold” just means we failed to reject the null hypothesis. In the strict Fisher’s p-value framework, there is no alternative hypothesis. While we have a clear criterion for rejecting the null hypothesis (p < alpha), we don't have a similar clear-cut criterion for "accepting" the null hypothesis based on beta.

So the dangers in calling “accepting a hypothesis” in the p-value setting are:

Many people misinterpret “accepting” the null hypothesis as “proving” the null hypothesis, which is wrong;

“Accepting the null hypothesis” is not rigorously defined, and doesn’t speak to the purpose of the test, which is about whether or not we reject the null hypothesis.

In this article, we will stay consistent within the Neyman-Pearson framework, where “accepting” a hypothesis is legal and necessary. Otherwise, we cannot draw any distributions without acting as if some hypothesis were true.

You don’t need to know the name Neyman-Pearson to understand anything, but pay attention to our language, as we choose our words very carefully to avoid mistakes and confusion.

So far, we have constructed a simple world of one hypothesis as the only truth, and a decision rule with two potential outcomes – one of the outcomes is “reject the null hypothesis when it is true” and the other outcome is “accept the null hypothesis when it is true”. The likelihoods of both outcomes come from the distribution where the null hypothesis is true.

Later, when we introduce the alternative hypothesis and MDE, we will gradually walk into the world of infinitely many alternative hypotheses and visualize why we cannot “prove” a hypothesis.

We save the distinction between the p-value/significance framework and Hypothesis Testing for another article, where you will have the full picture.

Type I error, alpha, and the critical value

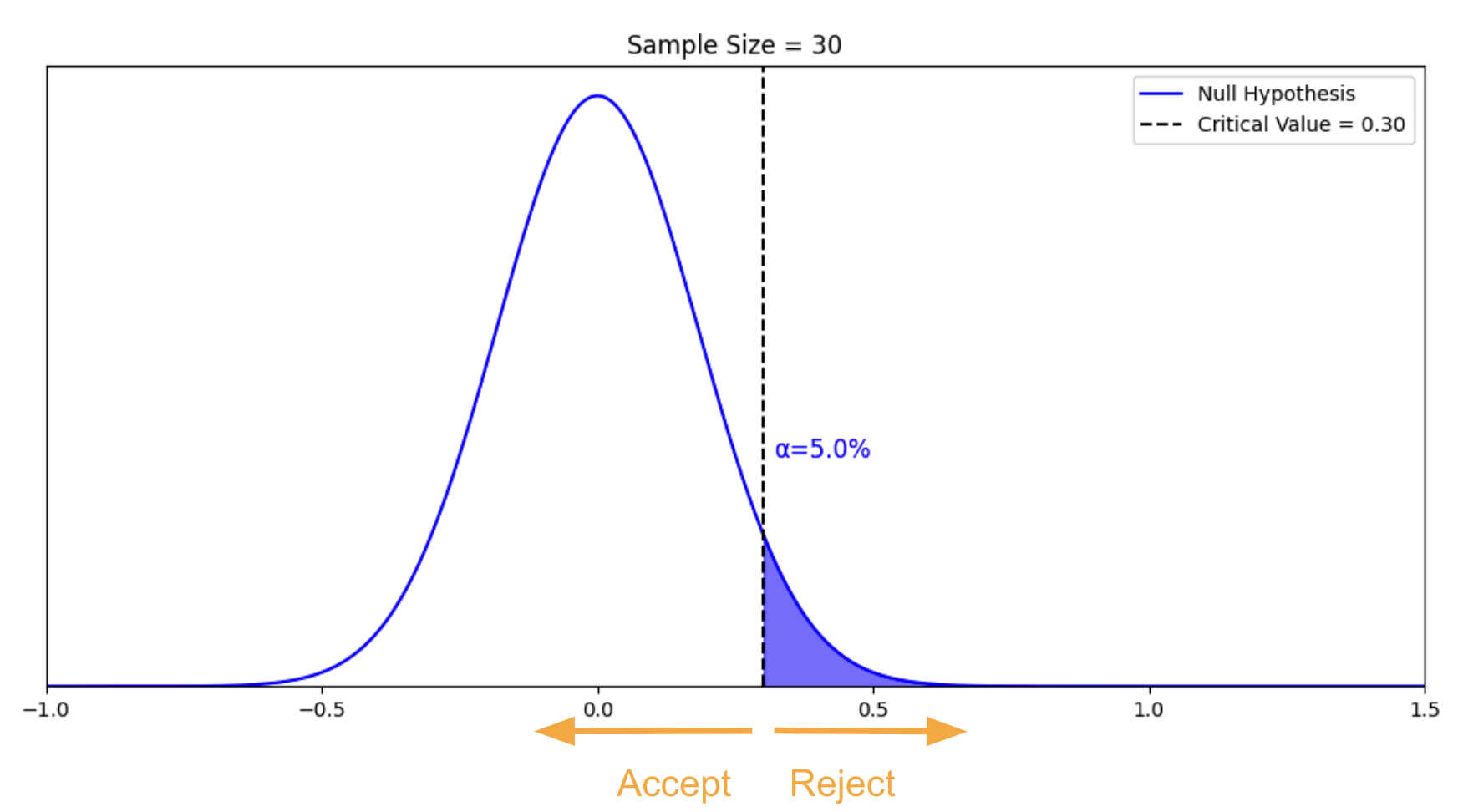

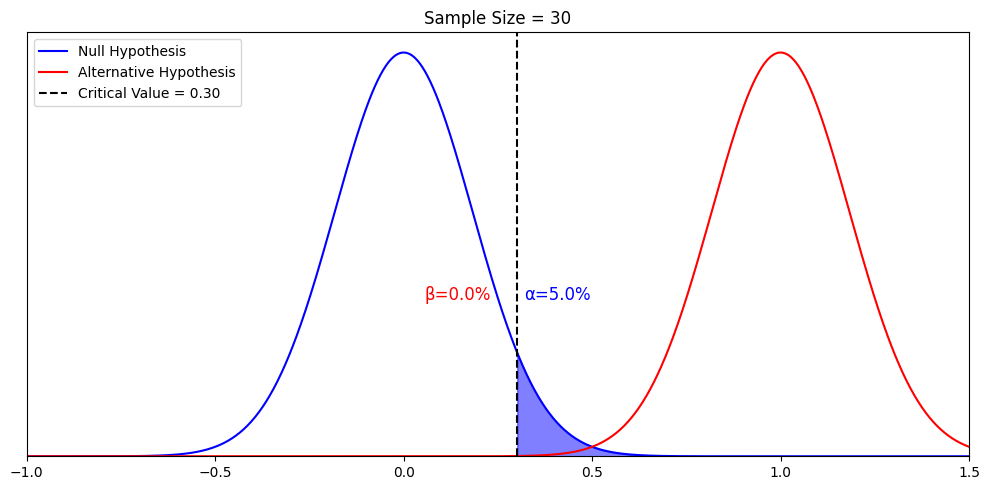

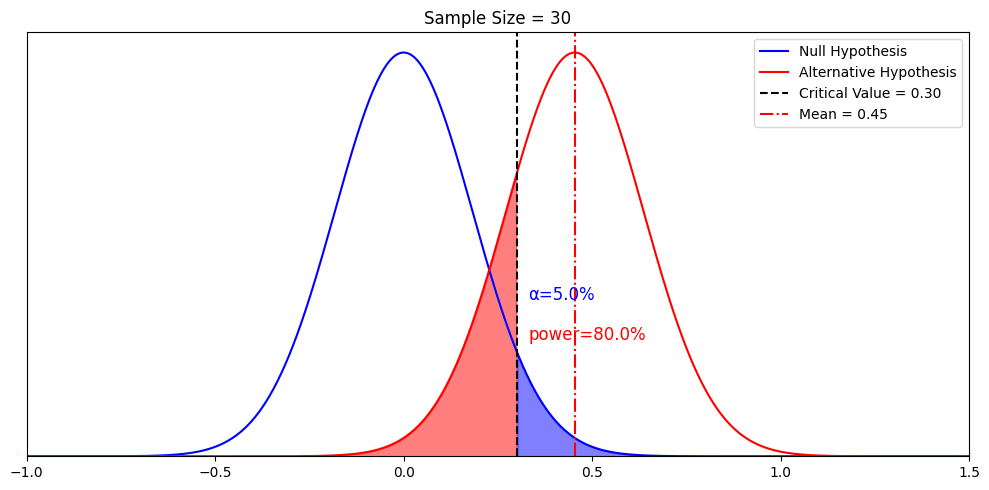

We’re able to construct a distribution of the sample mean for this null hypothesis using the standard error. Since we only have the null hypothesis as the truth of our universe, we can only make one type of mistake – falsely rejecting the null hypothesis when it is true. This is the type I error, and the probability is called alpha. Suppose we want alpha to be 5%. We can calculate the threshold required to make it happen. This threshold is called the critical value. Below is the chart we further constructed with our sample of 30.

In this chart, alpha is the blue area under the curve. The critical value is 0.3. If our sample mean is above 0.3, we reject the null hypothesis. We have a 5% chance of making the type I error.

Type I error: Falsely rejecting the null hypothesis when the null hypothesis is true

Alpha: The probability of making a Type I error

Critical value: The threshold to determine whether the null hypothesis is to be rejected or not
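The critical value of 0.3 can be reproduced with a few lines of Python. This is a sketch under the assumptions of this part: a one-sided decision rule (reject when the sample mean is above the threshold), a null distribution with mean 0 and standard deviation 1, and a sample size of 30. The helper name `critical_value` is made up for illustration.

```python
from statistics import NormalDist


def critical_value(alpha, sd, n):
    """Threshold above which a sample mean is rejected under the null (one-sided)."""
    se = sd / n ** 0.5                       # standard error of the sample mean
    null_dist = NormalDist(mu=0.0, sigma=se)  # distribution of the sample mean under the null
    return null_dist.inv_cdf(1.0 - alpha)     # leaves `alpha` probability in the upper tail


crit_30 = critical_value(alpha=0.05, sd=1.0, n=30)
# Probability of landing above the threshold when the null is true (this is alpha).
tail_area = 1.0 - NormalDist(0.0, 1.0 / 30 ** 0.5).cdf(crit_30)

print(f"critical value: {crit_30:.2f}")
print(f"type I error probability: {tail_area:.3f}")
```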

Part 3, The alternative hypothesis: beta and power

You may have noticed in part 2 that we only spoke to Type I error – rejecting the null hypothesis when it is true. What about the Type II error – falsely accepting the null hypothesis when it is not true?

But it is weird to call “accepting” false unless we know the truth. So we need an alternative hypothesis which serves as the alternative truth.

Alternative hypotheses are theoretical constructs

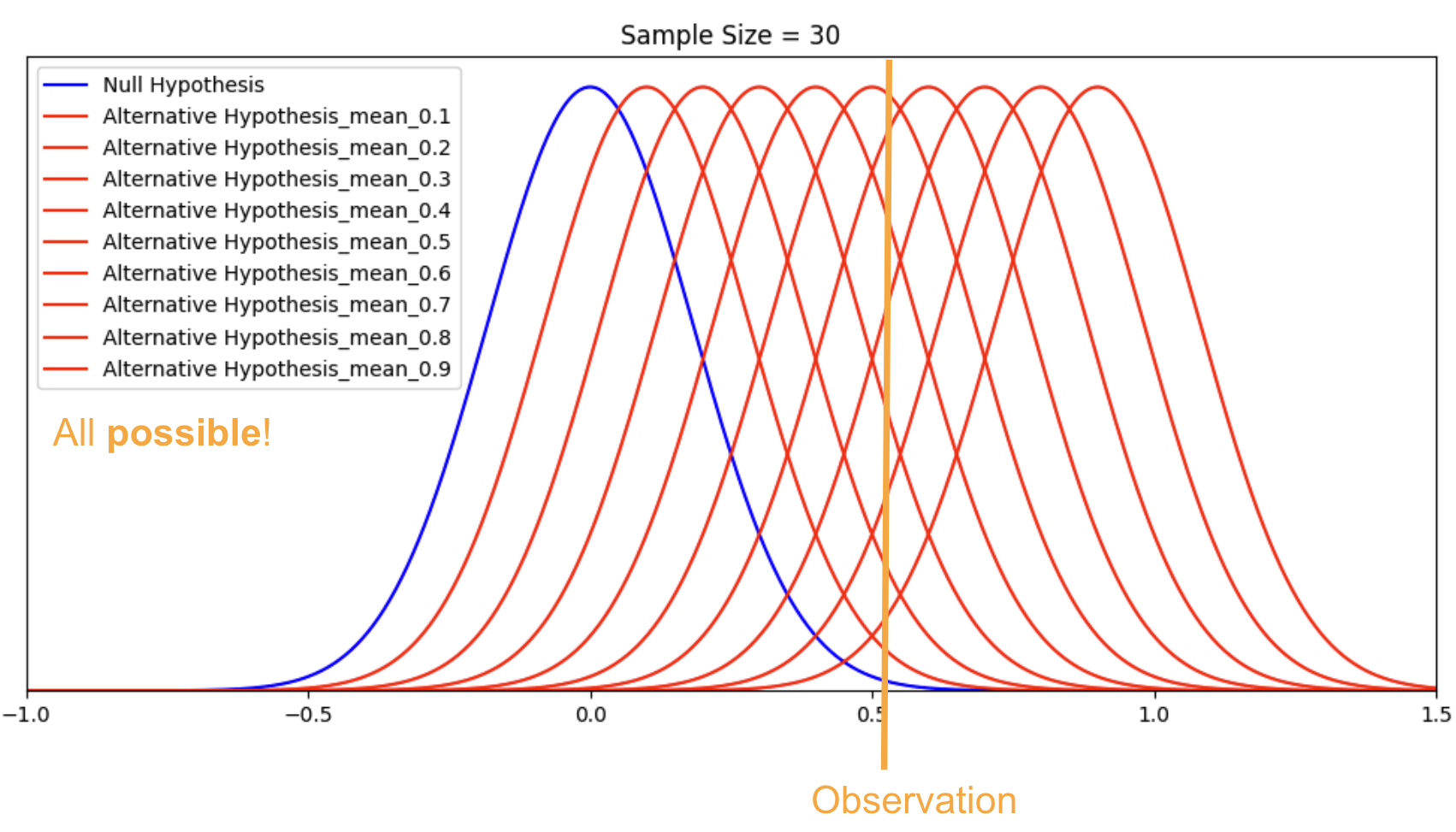

There is an important concept that most textbooks fail to emphasize – that is, you can have infinitely many alternative hypotheses for a given null hypothesis, we just choose one. None of them are more special or “real” than the others.

Let’s visualize it with an example. Suppose we observed a sample mean of 0.51, what is the true alternative hypothesis?

With this visualization, you can see why we have “infinitely many alternative hypotheses” because, given the observation, there is an infinite number of alternative hypotheses (plus the null hypothesis) that can be true, each with different probabilities. Some are more likely than others, but all are possible.

Remember, alternative hypotheses are a theoretical construct. We choose one particular alternative hypothesis to calculate certain probabilities. By now, we should have a better understanding of why we cannot “prove” the null hypothesis given an observation. We can’t prove that the null hypothesis is true; we just fail to reject it given the observation and our pre-determined decision rule.

We will fully reconcile this idea of picking one alternative hypothesis out of the world of infinite possibilities when we talk about MDE. The idea of “accept” vs. “fail to reject” is deeper, and we won’t cover it fully in this article. We will do so when we have an article about the p-value and the confidence interval.

Type II error and Beta

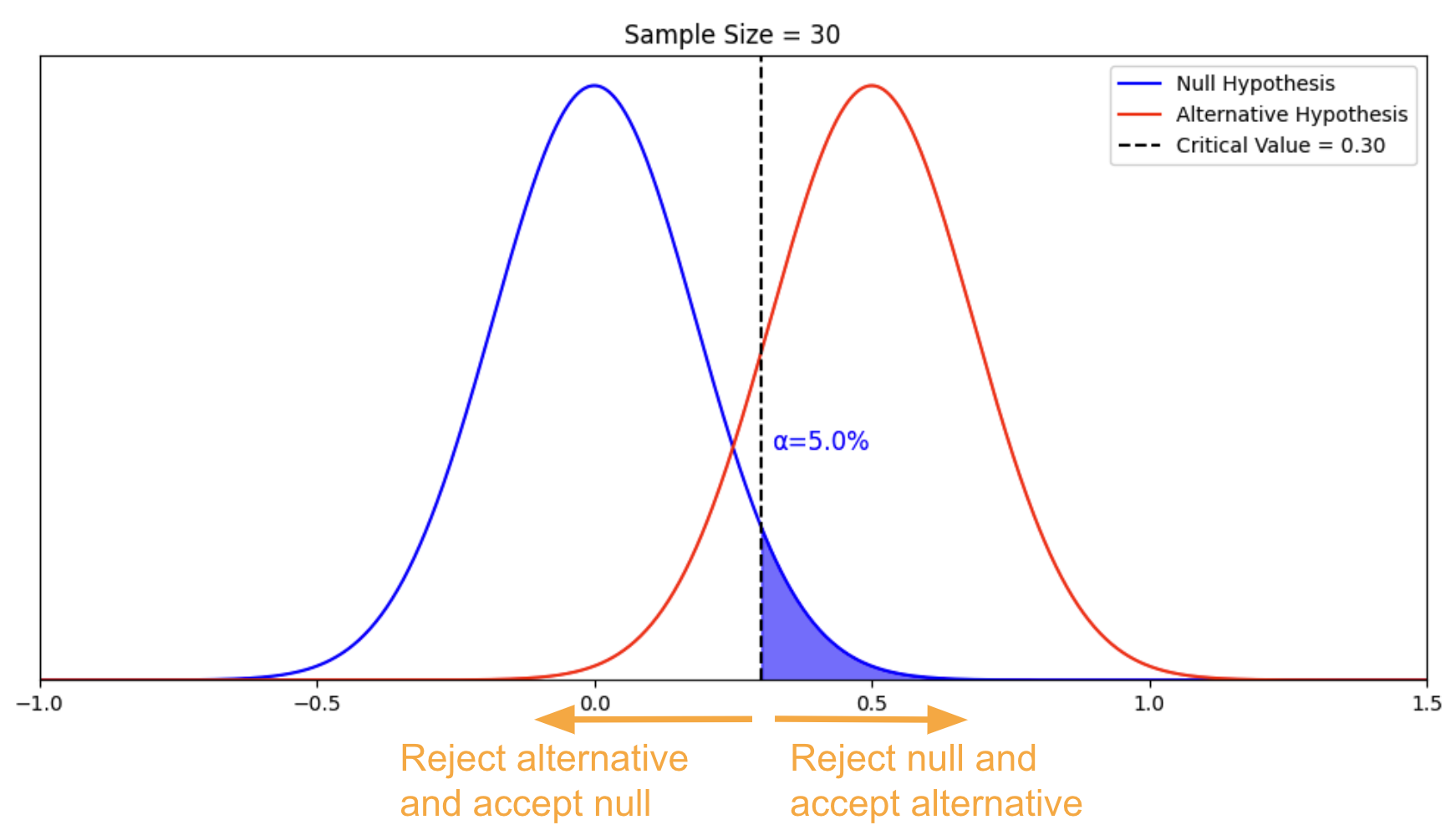

For the sake of simplicity and easy comparison, let’s choose an alternative hypothesis with a mean of 0.5, and a standard deviation of 1. Again, with a sample size of 30, the standard error ≈0.18. There are now two potential “truths” in our simple universe.

Remember from the null hypothesis, we want alpha to be 5% so the corresponding critical value is 0.30. We modify our rule as follows:

If the observation is above 0.30, we reject the null hypothesis and accept the alternative hypothesis;

If the observation is below 0.30, we accept the null hypothesis and reject the alternative hypothesis.

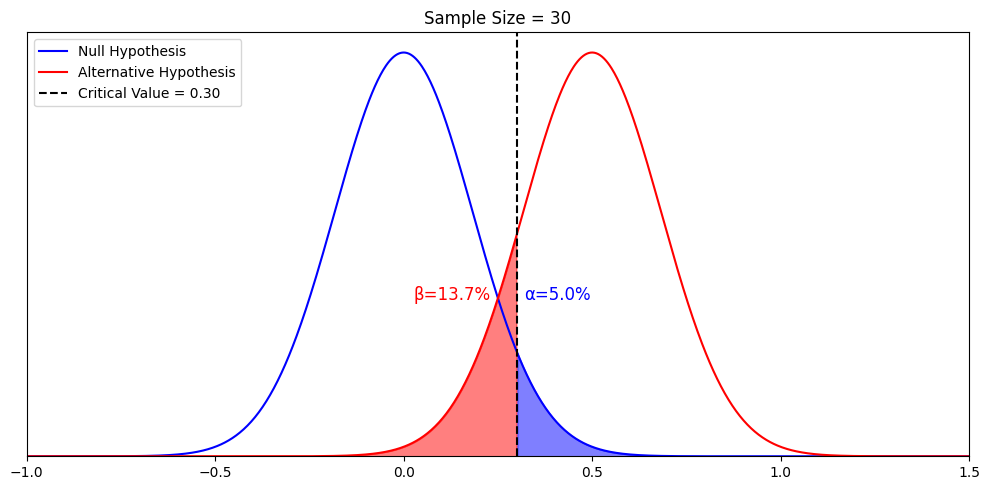

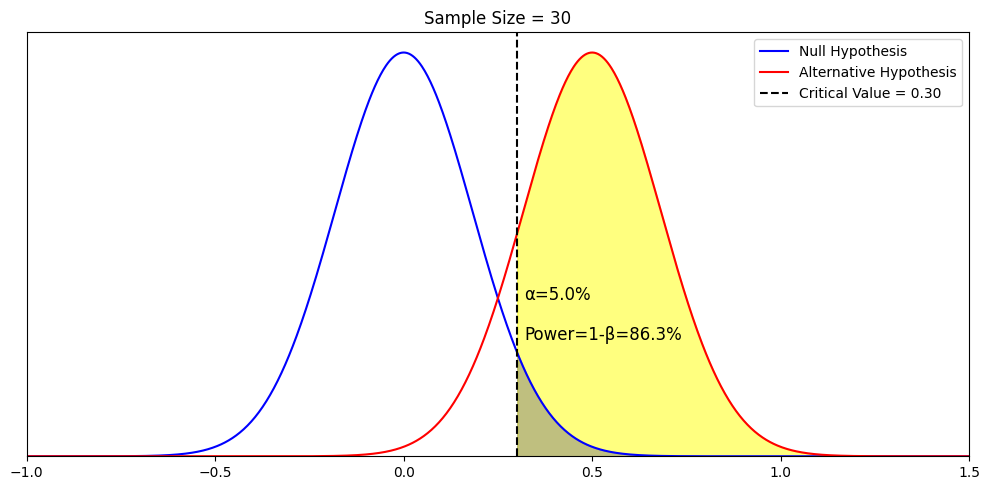

With the introduction of the alternative hypothesis, the alternative “(hypothesized) truth”, we can call “accepting the null hypothesis and rejecting the alternative hypothesis” a mistake – the Type II error. We can also calculate the probability of this mistake. This is called beta, which is illustrated by the red area below.

From the visualization, we can see that beta is conditional on the alternative hypothesis and the critical value. Let’s elaborate on these two relationships one by one, very explicitly, as both of them are important.

First, let’s visualize how beta changes with the mean of the alternative hypothesis by setting another alternative hypothesis where mean = 1 instead of 0.5.

Beta changes from 13.7% to 0.0%. Namely, beta is the probability of falsely rejecting a particular alternative hypothesis when we assume it is true. When we assume a different alternative hypothesis is true, we get a different beta. So strictly speaking, beta only speaks to the probability of falsely rejecting a particular alternative hypothesis when it is true. Nothing else. It’s only under other conditions that “rejecting the alternative hypothesis” implies “accepting” the null hypothesis or “failing to reject” the null hypothesis. We will further elaborate when we talk about p-value and confidence interval in another article. But what we talked about so far is true and enough for understanding power.
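The two beta values can be checked numerically. The sketch below assumes the same setup as the text (one-sided rule, alpha = 5%, standard deviation 1, sample size 30); the function name `beta` is an illustrative choice.

```python
from statistics import NormalDist


def beta(alt_mean, alpha=0.05, sd=1.0, n=30):
    """Probability that the sample mean falls below the critical value
    when the alternative hypothesis with mean `alt_mean` is true."""
    se = sd / n ** 0.5
    crit = NormalDist(0.0, se).inv_cdf(1.0 - alpha)  # set by the null and alpha only
    return NormalDist(alt_mean, se).cdf(crit)        # area of the alternative left of crit


beta_half = beta(alt_mean=0.5)
beta_one = beta(alt_mean=1.0)

print(f"beta when the alternative mean is 0.5: {beta_half:.1%}")
print(f"beta when the alternative mean is 1.0: {beta_one:.1%}")
```

Note that the critical value never looks at the alternative hypothesis. Only the second line of the function does, which is why beta is tied to one particular alternative.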

Second, there is a relationship between alpha and beta. Namely, given the null hypothesis and the alternative hypothesis, alpha would determine the critical value, and the critical value determines beta. This speaks to the tradeoff between mistake and discovery.

If we tolerate more alpha, we will have a smaller critical value, and for the same beta, we can detect an alternative hypothesis with a smaller mean.

If we tolerate more beta, we can also detect an alternative hypothesis with a smaller mean.

In short, if we tolerate more mistakes (either Type I or Type II), we can detect a smaller true effect. Mistake vs. discovery is the fundamental tradeoff of Hypothesis Testing.

So tolerating more mistakes leads to more chance of discovery. This is the concept of MDE that we will elaborate on in part 4.
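The chain "alpha determines the critical value, and the critical value determines beta" can be traced in code. This is a rough sketch that keeps the alternative hypothesis fixed at mean 0.5 with a sample size of 30; the three alpha levels and the name `beta_given_alpha` are arbitrary choices for illustration.

```python
from statistics import NormalDist


def beta_given_alpha(alpha, alt_mean=0.5, sd=1.0, n=30):
    """Return (critical value, beta) for a one-sided test at level `alpha`."""
    se = sd / n ** 0.5
    crit = NormalDist(0.0, se).inv_cdf(1.0 - alpha)  # step 1: alpha fixes the threshold
    b = NormalDist(alt_mean, se).cdf(crit)           # step 2: the threshold fixes beta
    return crit, b


results = {alpha: beta_given_alpha(alpha) for alpha in (0.01, 0.05, 0.10)}

for alpha, (crit, b) in results.items():
    print(f"alpha={alpha:.2f}  critical value={crit:.2f}  beta={b:.1%}")
```

Reading the rows from top to bottom, tolerating more type I error pulls the critical value toward zero, which in turn lowers beta for the same alternative.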

Finally, we’re ready to define power. Power is an important and fundamental topic in statistical testing, and we’ll explain the concept in three different ways.

Three ways to understand power

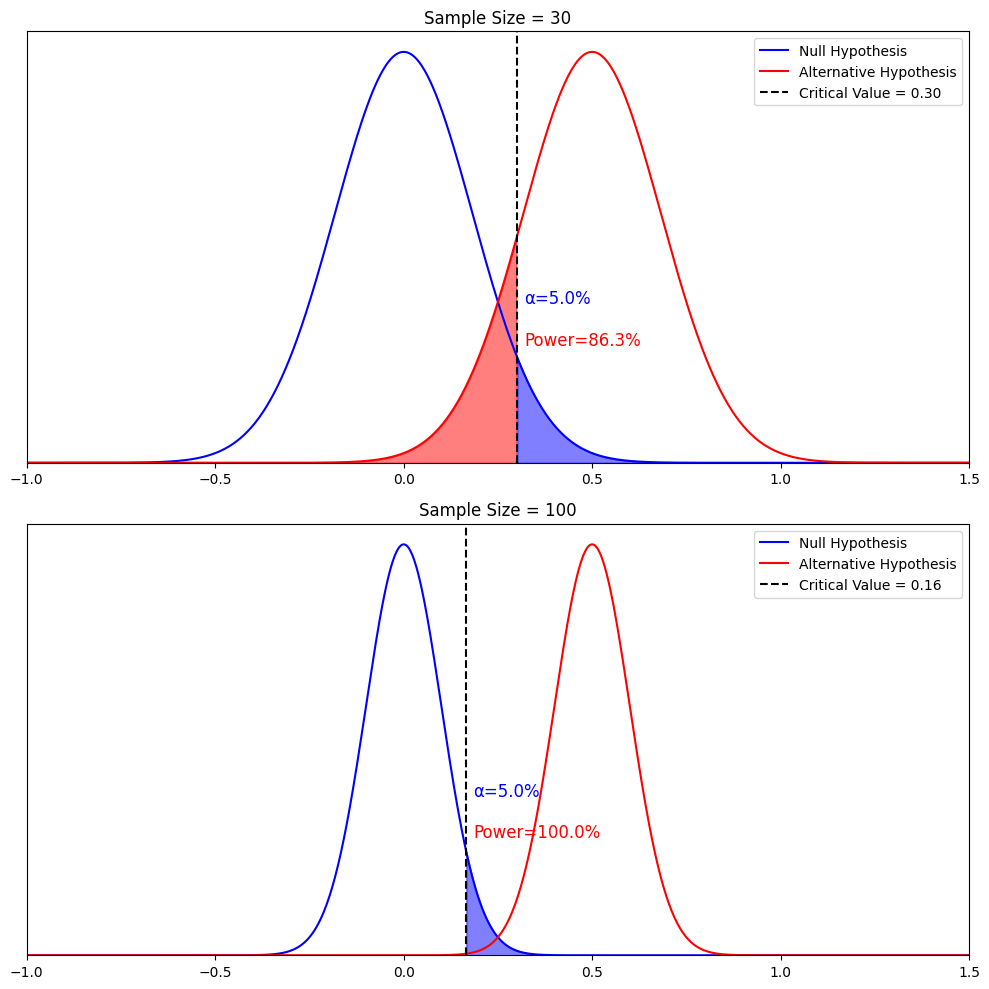

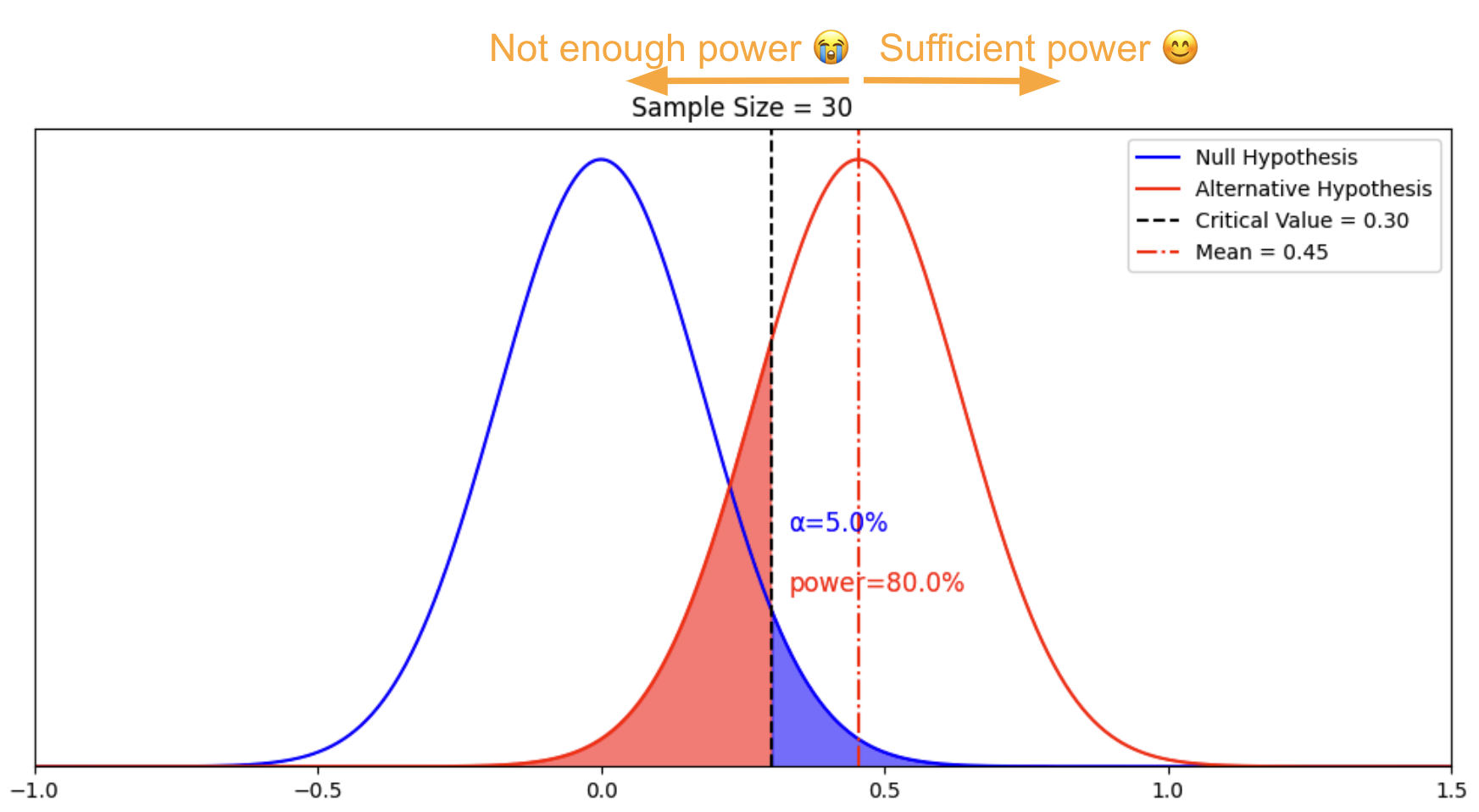

First, the technical definition of power is 1−β. It is the probability that we accept a particular alternative hypothesis when it is true, given our null hypothesis, sample size, and decision rule (alpha = 0.05). We visualize it as the yellow area below.

Second, power is really intuitive in its definition. A real-world example is trying to determine the most popular car manufacturer in the world. If I observe one car and see one brand, my observation is not very powerful. But if I observe a million cars, my observation is very powerful. Powerful tests mean that I have a high chance of detecting a true effect.

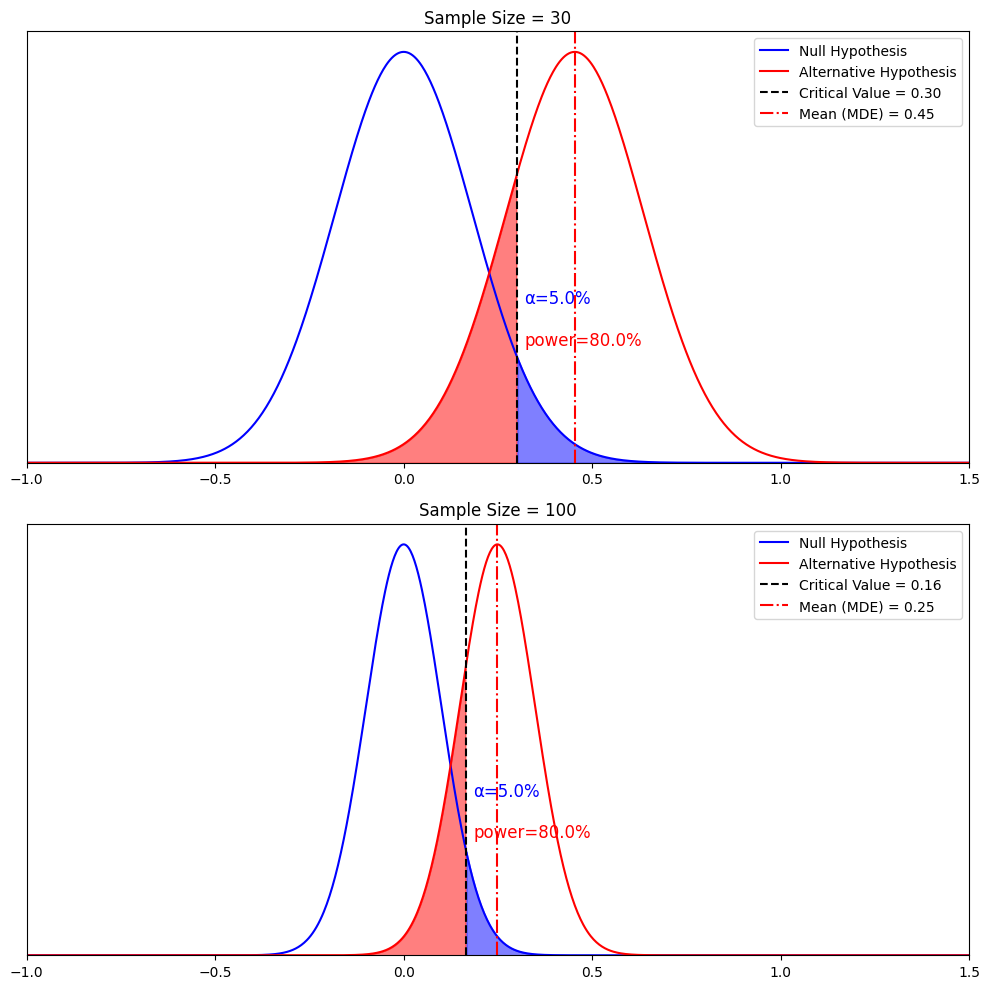

Third, to illustrate the two concepts concisely, let’s run a visualization by just changing the sample size from 30 to 100 and see how power increases from 86.3% to almost 100%.

As the graph shows, power increases with sample size. The reason is that the distributions of the sample mean under both the null hypothesis and the alternative hypothesis become narrower as the sample means get more accurate. Holding alpha at 5%, the critical value moves closer to zero, so we are less likely to make a type II error.

Type II error: Failing to reject the null hypothesis when the alternative hypothesis is true

Beta: The probability of making a type II error

Power: The ability of the test to detect a true effect when it’s there
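Here is a small sketch of the sample-size comparison above, assuming the same one-sided rule, alpha = 5%, and an alternative hypothesis with mean 0.5 and standard deviation 1. The function name `power` is illustrative.

```python
from statistics import NormalDist


def power(n, alt_mean=0.5, alpha=0.05, sd=1.0):
    """Probability of rejecting the null when the given alternative is true (1 - beta)."""
    se = sd / n ** 0.5
    crit = NormalDist(0.0, se).inv_cdf(1.0 - alpha)
    return 1.0 - NormalDist(alt_mean, se).cdf(crit)


power_30 = power(n=30)
power_100 = power(n=100)

print(f"power with n=30:  {power_30:.1%}")
print(f"power with n=100: {power_100:.1%}")
```

Both the standard error and the critical value shrink as `n` grows, so more of the alternative distribution sits to the right of the threshold.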

Part 4, Power calculation: MDE

The relationship between MDE, alternative hypothesis, and power

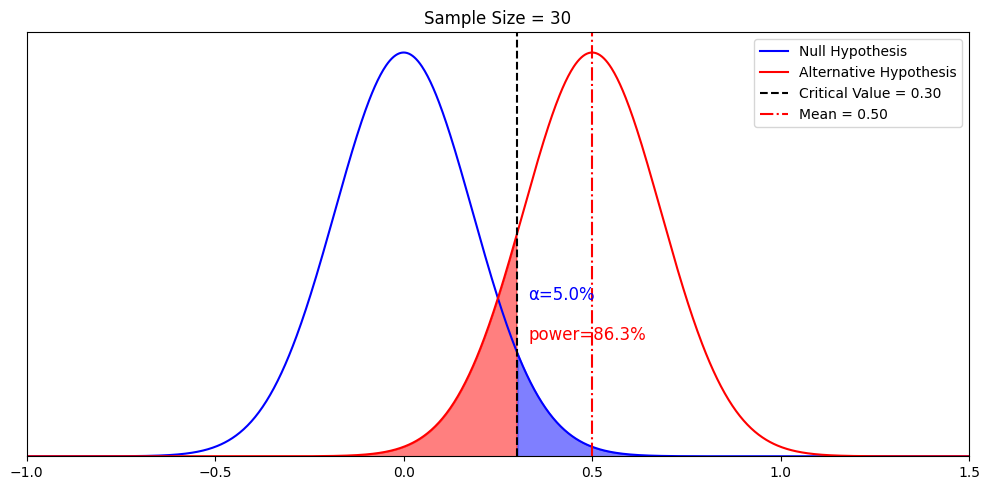

Now, we are ready to tackle the most nuanced definition of them all: Minimum detectable effect (MDE). First, let’s make the sample mean of the alternative hypothesis explicit on the graph with a red dotted line.

What if we keep the same sample size, but want power to be 80%? This is when we recall from Part 3 that “alternative hypotheses are theoretical constructs”. We can have a different alternative that corresponds to 80% power. After some calculations, we find that it is the alternative hypothesis with mean = 0.45 (if we keep the standard deviation at 1).

This is where we reconcile the concept of “infinitely many alternative hypotheses” with the concept of the minimum detectable effect. Remember that in statistical testing, we want more power. The “minimum” in “minimum detectable effect” is the minimum value of the mean of the alternative hypothesis that would give us 80% power. Any alternative hypothesis with a mean to the right of the MDE gives us sufficient power.

In other words, there are indeed infinitely many alternative hypotheses to the right of this mean 0.45. The particular alternative hypothesis with a mean of 0.45 gives us the minimum value where power is sufficient. We call it the minimum detectable effect, or MDE.

The complete definition of MDE from scratch

Let’s go through how we derived MDE from the beginning:

We fixed the distribution of sample means of the null hypothesis and fixed the sample size, so we can draw the blue distribution.

For our decision rule, we require alpha to be 5%. We derived that the critical value must be 0.30 to make 5% alpha happen.

We fixed the alternative hypothesis to be normally distributed with a standard deviation of 1, so the standard error is 0.18. The mean can be anywhere, as there are infinitely many alternative hypotheses.

For our decision rule, we require beta to be 20% or less, so our power is 80% or more.

We derived that the minimum mean of the alternative hypothesis that we can detect with our decision rule is 0.45. Any value above 0.45 would give us sufficient power.
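The derivation can be followed step by step in Python. This is a minimal sketch under the stated assumptions (one-sided rule, alpha = 5%, power = 80%, standard deviation 1, sample size 30); the variable names are illustrative.

```python
import math
from statistics import NormalDist

alpha, target_power, sd, n = 0.05, 0.80, 1.0, 30

# Steps 1 and 3: fixing the standard deviation and sample size fixes the standard error.
se = sd / math.sqrt(n)

# Step 2: alpha fixes the critical value under the null hypothesis.
crit = NormalDist(0.0, se).inv_cdf(1.0 - alpha)

# Steps 4 and 5: slide the alternative mean right until exactly 20% of its
# distribution is left of the critical value. That mean is the MDE.
mde_30 = crit + NormalDist().inv_cdf(target_power) * se

# Sanity check: power of the test against the alternative located at the MDE.
power_at_mde = 1.0 - NormalDist(mde_30, se).cdf(crit)

print(f"standard error: {se:.2f}")
print(f"critical value: {crit:.2f}")
print(f"MDE: {mde_30:.2f}")
print(f"power at the MDE: {power_at_mde:.0%}")
```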

How MDE changes with sample size

Now, let’s tie everything together by increasing the sample size, holding alpha and beta constant, and see how MDE changes.

Narrower distribution of the sample mean + holding alpha constant -> smaller critical value from 0.3 to 0.16

+ holding beta constant -> MDE decreases from 0.45 to 0.25

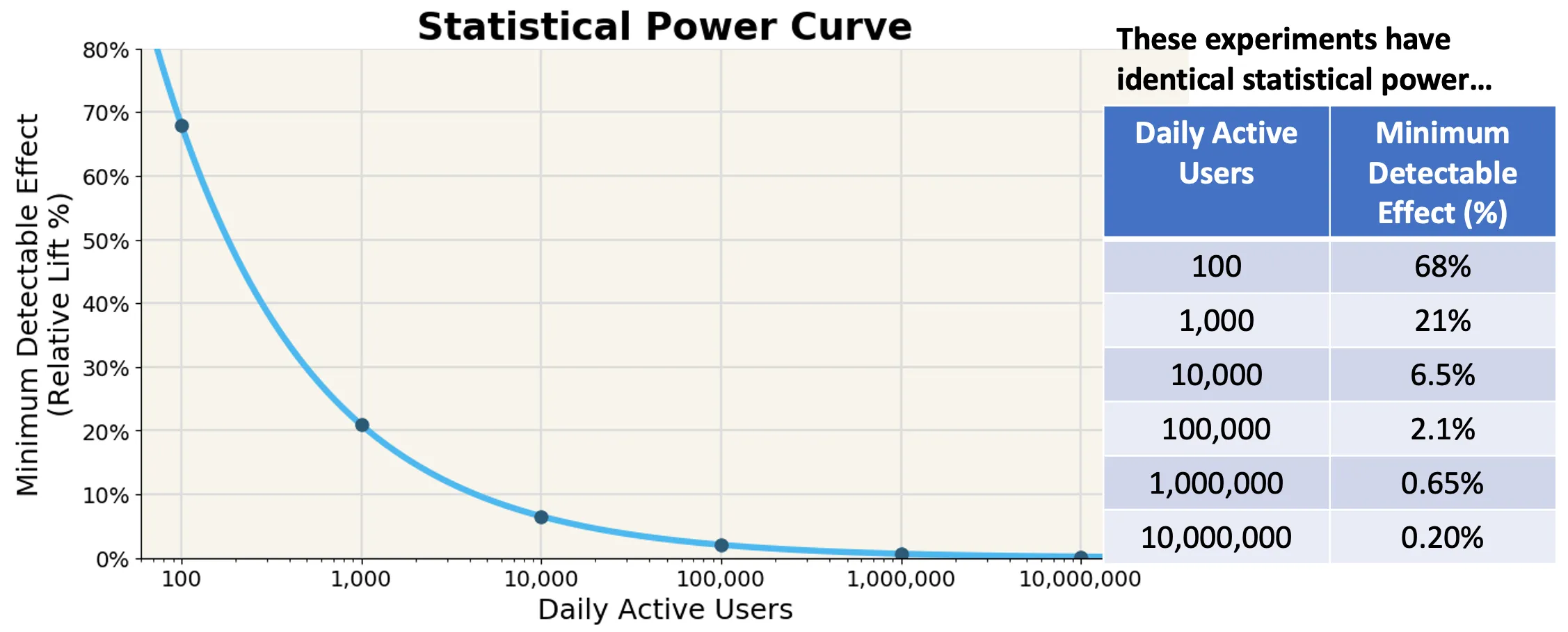

This is the other key takeaway: The larger the sample size, the smaller the effect we can detect, and the smaller the MDE.

This is a critical takeaway for statistical testing. It suggests that even companies without large sample sizes can reliably detect treatment effects with A/B testing, as long as those effects are large.
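The sketch below shows the same relationship in both directions: MDE as a function of sample size, and the sample size needed for a given effect. It assumes the one-sided setup used throughout (alpha = 5%, power = 80%, standard deviation 1). The values 0.16 and 0.25 quoted above are consistent with a sample size of 100, which is an inference from the numbers rather than something the text states. The function names are made up for illustration.

```python
import math
from statistics import NormalDist

Z = NormalDist()  # standard normal


def critical_value(n, alpha=0.05, sd=1.0):
    """One-sided rejection threshold for the sample mean."""
    return Z.inv_cdf(1.0 - alpha) * sd / math.sqrt(n)


def mde(n, alpha=0.05, power=0.80, sd=1.0):
    """Smallest alternative mean that reaches the target power."""
    return (Z.inv_cdf(1.0 - alpha) + Z.inv_cdf(power)) * sd / math.sqrt(n)


def required_sample_size(effect, alpha=0.05, power=0.80, sd=1.0):
    """Smallest n whose MDE is at or below `effect`."""
    z_sum = Z.inv_cdf(1.0 - alpha) + Z.inv_cdf(power)
    return math.ceil((z_sum * sd / effect) ** 2)


for n in (30, 100, 1000):
    print(f"n={n:5d}  critical value={critical_value(n):.2f}  MDE={mde(n):.2f}")

for effect in (1.0, 0.5, 0.25):
    print(f"effect={effect:.2f}  required n={required_sample_size(effect)}")
```

Because the MDE scales with 1/√n, halving the effect you want to detect requires roughly four times the sample.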

Summary of Hypothesis Testing

Let’s review all the concepts together.

Assuming the null hypothesis is correct:

Alpha: When the null hypothesis is true, the probability of rejecting it

Critical value: The threshold to determine rejecting vs. accepting the null hypothesis

Assuming an alternative hypothesis is correct:

Beta: When the alternative hypothesis is true, the probability of rejecting it

Power: The chance that a real effect will produce significant results

Power calculation:

Minimum detectable effect (MDE): Given sample sizes and distributions, the minimum mean of alternative distribution that would give us the desired alpha and sufficient power (usually alpha = 0.05 and power >= 0.8)

Relationship among the factors, all else equal: Larger sample, more power; Larger sample, smaller MDE

Everything we talk about is under the Neyman-Pearson framework. There is no need to mention the p-value and significance under this framework. Blending the two frameworks is the inconsistency brought by our textbooks. Clarifying the inconsistency and correctly blending them are topics for another day.

Practical recommendations

That’s it. But it’s only the beginning. In practice, there are many crafts in using power well, for example:

Why peeking introduces a behavior bias, and how to use sequential testing to correct it

Why having multiple comparisons affects alpha, and how to use Bonferroni correction

The relationship between sample size, duration of the experiment, and allocation of the experiment

Treat your allocation as a resource for experimentation, understand when interaction effects are okay, and when they are not okay, and how to use layers to manage

Practical considerations for setting an MDE

Also, in the above examples, we fixed the distribution, but in reality, the variance of the distribution plays an important role. There are different ways of calculating the variance and different ways to reduce variance, such as CUPED, or stratified sampling.

Related resources:

How to calculate power with an uneven split of sample size: https://blog.statsig.com/calculating-sample-sizes-for-a-b-tests-7854d56c2646

Real-life applications: https://blog.statsig.com/you-dont-need-large-sample-sizes-to-run-a-b-tests-6044823e9992


Introduction to Hypothesis Testing

A statistical hypothesis is an assumption about a population parameter.

For example, we may assume that the mean height of a male in the U.S. is 70 inches.

The assumption about the height is the statistical hypothesis and the true mean height of a male in the U.S. is the population parameter.

A hypothesis test is a formal statistical test we use to reject or fail to reject a statistical hypothesis.

The Two Types of Statistical Hypotheses

To test whether a statistical hypothesis about a population parameter is true, we obtain a random sample from the population and perform a hypothesis test on the sample data.

There are two types of statistical hypotheses:

The null hypothesis, denoted as H0, is the hypothesis that the sample data occurs purely from chance.

The alternative hypothesis, denoted as H1 or Ha, is the hypothesis that the sample data is influenced by some non-random cause.

Hypothesis Tests

A hypothesis test consists of five steps:

1. State the hypotheses.

State the null and alternative hypotheses. These two hypotheses need to be mutually exclusive, so if one is true then the other must be false.

2. Determine a significance level to use for the hypothesis.

Decide on a significance level. Common choices are .01, .05, and .1.

3. Find the test statistic.

Find the test statistic and the corresponding p-value. Often we are analyzing a population mean or proportion and the general formula to find the test statistic is: (sample statistic – population parameter) / (standard deviation of statistic)

4. Reject or fail to reject the null hypothesis.

Using the test statistic or the p-value, determine if you can reject or fail to reject the null hypothesis based on the significance level.

The p-value tells us the strength of evidence against the null hypothesis: the smaller the p-value, the stronger the evidence. If the p-value is less than the significance level, we reject the null hypothesis.

5. Interpret the results.

Interpret the results of the hypothesis test in the context of the question being asked.
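The five steps can be sketched in a few lines of Python. This is an illustration, not a prescribed procedure: it assumes a two-tailed one-sample z-test with a known population standard deviation, and the sample numbers (mean of 71 inches, standard deviation of 3 inches, 36 people) are hypothetical values invented for the example.

```python
import math
from statistics import NormalDist


def one_sample_z_test(sample_mean, null_mean, sd, n, alpha=0.05):
    """Two-tailed one-sample z-test; returns (test statistic, p-value, reject?)."""
    # Step 3: (sample statistic - population parameter) / (standard deviation of statistic)
    se = sd / math.sqrt(n)
    z = (sample_mean - null_mean) / se
    p_value = 2.0 * (1.0 - NormalDist().cdf(abs(z)))
    # Step 4: reject the null when the p-value is below the significance level.
    return z, p_value, p_value < alpha


# Step 1: H0: mu = 70 inches, Ha: mu != 70 inches.
# Step 2: significance level of 0.05.
z, p, reject = one_sample_z_test(sample_mean=71.0, null_mean=70.0, sd=3.0, n=36, alpha=0.05)

# Step 5: interpret the result in the context of the question.
print(f"z = {z:.2f}, p-value = {p:.4f}")
print("Reject H0" if reject else "Fail to reject H0")
```

With real data the population standard deviation is rarely known, in which case a t-test would be the more usual choice.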

The Two Types of Decision Errors

There are two types of decision errors that one can make when doing a hypothesis test:

Type I error: You reject the null hypothesis when it is actually true. The probability of committing a Type I error is equal to the significance level, often called alpha, and denoted as α.

Type II error: You fail to reject the null hypothesis when it is actually false. The probability of committing a Type II error is called beta, denoted as β. The power of the test is the complement of this probability, 1 − β.
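A simulation makes the two error rates tangible. The sketch below is built on assumptions chosen purely for illustration: a one-sided test of "mean = 0" on samples of 30 draws from a normal distribution with standard deviation 1, a significance level of 0.05, and a true mean of 0.5 for the case where the null is false. The function name, seed, and trial count are arbitrary.

```python
import random
from statistics import NormalDist, fmean


def rejection_rate(true_mean, n=30, alpha=0.05, trials=10_000, seed=1):
    """Fraction of simulated experiments in which the null (mean = 0) is rejected."""
    se = 1.0 / n ** 0.5
    crit = NormalDist(0.0, se).inv_cdf(1.0 - alpha)  # one-sided rejection threshold
    rng = random.Random(seed)
    rejections = 0
    for _ in range(trials):
        sample = [rng.gauss(true_mean, 1.0) for _ in range(n)]
        if fmean(sample) > crit:
            rejections += 1
    return rejections / trials


# Null is true: every rejection is a Type I error.
type_1_rate = rejection_rate(true_mean=0.0)
# Null is false: every failure to reject is a Type II error.
type_2_rate = 1.0 - rejection_rate(true_mean=0.5)

print(f"simulated Type I error rate:  {type_1_rate:.3f}")
print(f"simulated Type II error rate: {type_2_rate:.3f}")
print(f"simulated power:              {1.0 - type_2_rate:.3f}")
```

The Type I rate should land near the chosen significance level, while the Type II rate depends on how far the true mean is from the null value.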

One-Tailed and Two-Tailed Tests

A statistical hypothesis can be one-tailed or two-tailed.

A one-tailed hypothesis involves making a “greater than” or “less than” statement.

For example, suppose we assume the mean height of a male in the U.S. is greater than or equal to 70 inches. The null hypothesis would be H0: µ ≥ 70 inches and the alternative hypothesis would be Ha: µ < 70 inches.

A two-tailed hypothesis involves making an “equal to” or “not equal to” statement.

For example, suppose we assume the mean height of a male in the U.S. is equal to 70 inches. The null hypothesis would be H0: µ = 70 inches and the alternative hypothesis would be Ha: µ ≠ 70 inches.

Note: The “equal” sign is always included in the null hypothesis, whether it is =, ≥, or ≤.
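The choice of tail changes how a test statistic is turned into a p-value. Here is a minimal sketch for a z statistic; the function name `p_value`, the tail labels, and the example statistic of -1.5 are illustrative assumptions.

```python
from statistics import NormalDist


def p_value(z, tail):
    """p-value of a z statistic for a left-, right-, or two-tailed test."""
    cdf = NormalDist().cdf
    if tail == "left":        # Ha: mu < mu0
        return cdf(z)
    if tail == "right":       # Ha: mu > mu0
        return 1.0 - cdf(z)
    if tail == "two-sided":   # Ha: mu != mu0
        return 2.0 * (1.0 - cdf(abs(z)))
    raise ValueError(f"unknown tail: {tail!r}")


z = -1.5  # hypothetical test statistic
for tail in ("left", "right", "two-sided"):
    print(f"{tail:>9}: p = {p_value(z, tail):.4f}")
```

For the same statistic, the two-sided p-value is twice the smaller one-sided p-value, so a result can be significant in a one-tailed test and not in a two-tailed one.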


Types of Hypothesis Tests

There are many different types of hypothesis tests you can perform depending on the type of data you’re working with and the goal of your analysis.

The following tutorials provide an explanation of the most common types of hypothesis tests:

Introduction to the One Sample t-test

Introduction to the Two Sample t-test

Introduction to the Paired Samples t-test

Introduction to the One Proportion Z-Test

Introduction to the Two Proportion Z-Test

Hey there. My name is Zach Bobbitt. I have a Masters of Science degree in Applied Statistics and I’ve worked on machine learning algorithms for professional businesses in both healthcare and retail. I’m passionate about statistics, machine learning, and data visualization and I created Statology to be a resource for both students and teachers alike. My goal with this site is to help you learn statistics through using simple terms, plenty of real-world examples, and helpful illustrations.


Statistics By Jim

Making statistics intuitive

Statistical Hypothesis Testing Overview

By Jim Frost

In this blog post, I explain why you need to use statistical hypothesis testing and help you navigate the essential terminology. Hypothesis testing is a crucial procedure to perform when you want to make inferences about a population using a random sample. These inferences include estimating population properties such as the mean, differences between means, proportions, and the relationships between variables.

This post provides an overview of statistical hypothesis testing. If you need to perform hypothesis tests, consider getting my book, Hypothesis Testing: An Intuitive Guide.

Why You Should Perform Statistical Hypothesis Testing

Hypothesis testing is a form of inferential statistics that allows us to draw conclusions about an entire population based on a representative sample. You gain tremendous benefits by working with a sample. In most cases, it is simply impossible to observe the entire population to understand its properties. The only alternative is to collect a random sample and then use statistics to analyze it.

While samples are much more practical and less expensive to work with, there are trade-offs. When you estimate the properties of a population from a sample, the sample statistics are unlikely to equal the actual population value exactly. For instance, your sample mean is unlikely to equal the population mean. The difference between the sample statistic and the population value is the sampling error.

Differences that researchers observe in samples might be due to sampling error rather than representing a true effect at the population level. If sampling error causes the observed difference, the next time someone performs the same experiment the results might be different. Hypothesis testing incorporates estimates of the sampling error to help you make the correct decision. Learn more about Sampling Error.

For example, if you are studying the proportion of defects produced by two manufacturing methods, any difference you observe between the two sample proportions might be sampling error rather than a true difference. If the difference does not exist at the population level, you won’t obtain the benefits that you expect based on the sample statistics. That can be a costly mistake!
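To see how sampling error alone can produce apparent differences, here is a rough simulation. Everything in it is a hypothetical assumption for illustration: two manufacturing methods with the same true defect rate of 5%, samples of 200 items per method, and 1,000 repeated experiments.

```python
import random
import statistics


def observed_defect_rate(true_rate, n, rng):
    """Proportion of defective items in a random sample of size n."""
    return sum(rng.random() < true_rate for _ in range(n)) / n


def simulate_differences(true_rate=0.05, n=200, repeats=1000, seed=7):
    """Observed difference in defect rates between two methods that are truly identical."""
    rng = random.Random(seed)
    diffs = []
    for _ in range(repeats):
        rate_a = observed_defect_rate(true_rate, n, rng)
        rate_b = observed_defect_rate(true_rate, n, rng)
        diffs.append(rate_a - rate_b)
    return diffs


diffs = simulate_differences()

print(f"average observed difference: {statistics.fmean(diffs):+.4f}")
print(f"typical size of a difference (std dev): {statistics.stdev(diffs):.4f}")
print(f"largest observed difference: {max(abs(d) for d in diffs):.3f}")
```

Even though the two methods are identical by construction, individual experiments show non-zero differences. A hypothesis test asks whether an observed difference is larger than this kind of noise would plausibly produce.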

Let’s cover some basic hypothesis testing terms that you need to know.

Background information: Difference between Descriptive and Inferential Statistics, and Populations, Parameters, and Samples in Inferential Statistics

Hypothesis Testing

Hypothesis testing is a statistical analysis that uses sample data to assess two mutually exclusive theories about the properties of a population. Statisticians call these theories the null hypothesis and the alternative hypothesis. A hypothesis test assesses your sample statistic and factors in an estimate of the sampling error to determine which hypothesis the data support.

When you can reject the null hypothesis, the results are statistically significant, and your data support the theory that an effect exists at the population level.

The effect is the difference between the population value and the null hypothesis value. The effect is also known as population effect or the difference. For example, the mean difference between the health outcome for a treatment group and a control group is the effect.

Typically, you do not know the size of the actual effect. However, you can use a hypothesis test to help you determine whether an effect exists and to estimate its size. Hypothesis tests convert your sample effect into a test statistic, which they evaluate for statistical significance. Learn more about Test Statistics.

An effect can be statistically significant, but that doesn’t necessarily indicate that it is important in a real-world, practical sense. For more information, read my post about Statistical vs. Practical Significance.

Null Hypothesis

The null hypothesis is one of two mutually exclusive theories about the properties of the population in hypothesis testing. Typically, the null hypothesis states that there is no effect (i.e., the effect size equals zero). The null is often signified by H0.

In all hypothesis testing, the researchers are testing an effect of some sort. The effect can be the effectiveness of a new vaccination, the durability of a new product, the proportion of defect in a manufacturing process, and so on. There is some benefit or difference that the researchers hope to identify.

However, it’s possible that there is no effect or no difference between the experimental groups. In statistics, we call this lack of an effect the null hypothesis. Therefore, if you can reject the null, you can favor the alternative hypothesis, which states that the effect exists (doesn’t equal zero) at the population level.

You can think of the null as the default theory, which you reject only when there is sufficiently strong evidence against it.

For example, in a 2-sample t-test, the null often states that the difference between the two means equals zero.

When you can reject the null hypothesis, your results are statistically significant. Learn more about Statistical Significance: Definition & Meaning.

Related post: Understanding the Null Hypothesis in More Detail

Alternative Hypothesis

The alternative hypothesis is the other theory about the properties of the population in hypothesis testing. Typically, the alternative hypothesis states that a population parameter does not equal the null hypothesis value. In other words, there is a non-zero effect. If your sample contains sufficient evidence, you can reject the null and favor the alternative hypothesis. The alternative is often identified with H1 or HA.

For example, in a 2-sample t-test, the alternative often states that the difference between the two means does not equal zero.

You can specify either a one- or two-tailed alternative hypothesis:

- If you perform a two-tailed hypothesis test, the alternative states that the population parameter does not equal the null value. For example, when the alternative hypothesis is HA: μ ≠ 0, the test can detect differences both greater than and less than the null value.

- A one-tailed alternative has more power to detect an effect but it can test for a difference in only one direction. For example, HA: μ > 0 can only test for differences that are greater than zero.
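The difference in power between the two forms can be seen in a small sketch. It assumes, purely for illustration, that the test statistic follows a standard normal distribution under the null (a z-test rather than the t-tests mentioned above) and that the observed statistic is a made-up value of 1.8.

```python
from statistics import NormalDist

def p_values_from_z(z):
    """Return (one-tailed upper, two-tailed) p-values for a z statistic."""
    std_normal = NormalDist()  # mean 0, standard deviation 1
    # One-tailed (HA: mu > 0): area in the upper tail beyond z.
    upper_tail = 1 - std_normal.cdf(z)
    # Two-tailed (HA: mu != 0): area beyond |z| in both tails.
    two_tailed = 2 * (1 - std_normal.cdf(abs(z)))
    return upper_tail, two_tailed

alpha = 0.05
z_observed = 1.8  # hypothetical test statistic

one_sided, two_sided = p_values_from_z(z_observed)
print(f"One-tailed p-value: {one_sided:.4f}, reject H0: {one_sided < alpha}")
print(f"Two-tailed p-value: {two_sided:.4f}, reject H0: {two_sided < alpha}")
```

For a positive test statistic, the two-tailed p-value is double the one-tailed p-value. With this hypothetical statistic, the one-tailed test rejects the null at the 0.05 level while the two-tailed test does not. The price is that the one-tailed test would never detect an effect in the negative direction.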

Related posts: Understanding T-tests and One-Tailed and Two-Tailed Hypothesis Tests Explained

P-values

P-values are the probability that you would obtain the effect observed in your sample, or larger, if the null hypothesis is correct. In simpler terms, p-values tell you how strongly your sample data contradict the null. Lower p-values represent stronger evidence against the null. You use p-values in conjunction with the significance level to determine whether your data favor the null or alternative hypothesis.

Related post: Interpreting P-values Correctly

Significance Level (Alpha)

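The significance level, also known as alpha or α, is the probability of rejecting the null hypothesis when it is true. For instance, a significance level of 0.05 signifies a 5% risk of deciding that an effect exists when it does not exist.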

Use p-values and significance levels together to help you determine which hypothesis the data support. If the p-value is less than your significance level, you can reject the null and conclude that the effect is statistically significant. In other words, the evidence in your sample is strong enough to be able to reject the null hypothesis at the population level.
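Here is a hedged sketch of that decision rule, using the manufacturing-defects scenario from earlier. The defect counts are invented for illustration, and the two-proportion z-test with a pooled standard error is one common large-sample choice, not necessarily the test you would use in a real study.

```python
from math import sqrt
from statistics import NormalDist

def two_proportion_z_test(defects_a, n_a, defects_b, n_b):
    """Two-sided z-test for H0: the two population proportions are equal."""
    p_a = defects_a / n_a
    p_b = defects_b / n_b
    # Under H0 both groups share one proportion, so pool the data.
    pooled = (defects_a + defects_b) / (n_a + n_b)
    # Standard error of the difference between the two sample proportions.
    std_error = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_a - p_b) / std_error
    # Probability of a difference at least this extreme if H0 is true.
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value

alpha = 0.05  # significance level, chosen before looking at the data

# Hypothetical data: method A has 30 defects in 1,000 units, method B has 18.
z, p_value = two_proportion_z_test(30, 1000, 18, 1000)
reject_null = p_value < alpha

print(f"z = {z:.2f}, p-value = {p_value:.3f}, reject H0: {reject_null}")
```

With these made-up counts, the sample defect rates are 3.0% and 1.8%, yet the p-value is about 0.08. Because that is greater than 0.05, you fail to reject the null: the observed difference could plausibly be sampling error.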

Related posts: Graphical Approach to Significance Levels and P-values and Conceptual Approach to Understanding Significance Levels

Types of Errors in Hypothesis Testing

Statistical hypothesis tests are not 100% accurate because they use a random sample to draw conclusions about entire populations. There are two types of errors related to drawing an incorrect conclusion.

- False positives: You reject a null that is true. Statisticians call this a Type I error. The Type I error rate equals your significance level or alpha (α).

- False negatives: You fail to reject a null that is false. Statisticians call this a Type II error. Generally, you do not know the Type II error rate. However, it is a larger risk when you have a small sample size, noisy data, or a small effect size. The Type II error rate is also known as beta (β).

Statistical power is the probability that a hypothesis test correctly infers that a sample effect exists in the population. In other words, the test correctly rejects a false null hypothesis. Consequently, power is the complement of the Type II error rate: Power = 1 – β. Learn more about Power in Statistics.
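The following sketch shows how power and β respond to sample size, noise, and effect size. It assumes a two-sided one-sample z-test with a known population standard deviation, which is a simplification chosen so the calculation fits in a few lines. The function name and the example numbers (an effect of 5 units, a standard deviation of 15) are hypothetical.

```python
from math import sqrt
from statistics import NormalDist

def z_test_power(effect, sigma, n, alpha=0.05):
    """Power of a two-sided one-sample z-test with known sigma.

    effect: true difference between the population mean and the null value
    sigma:  population standard deviation (the noise in the data)
    n:      sample size
    """
    std_normal = NormalDist()
    z_crit = std_normal.inv_cdf(1 - alpha / 2)  # critical value, e.g. about 1.96
    shift = effect * sqrt(n) / sigma            # effect measured in standard errors
    # Probability that the test statistic lands in either rejection region.
    upper = 1 - std_normal.cdf(z_crit - shift)
    lower = std_normal.cdf(-z_crit - shift)
    return upper + lower

for n in (10, 30, 71, 200):
    power = z_test_power(effect=5, sigma=15, n=n)
    print(f"n = {n:3d}: power = {power:.3f}, beta = {1 - power:.3f}")
```

Under these assumptions, power climbs as the sample size grows and falls if you increase `sigma` or shrink `effect`. When `effect` is zero the null is true, and the function returns alpha, the Type I error rate.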

Related posts: Types of Errors in Hypothesis Testing and Estimating a Good Sample Size for Your Study Using Power Analysis

Which Type of Hypothesis Test is Right for You?

There are many different types of procedures you can use. The correct choice depends on your research goals and the data you collect. Do you need to understand the mean or the differences between means? Or, perhaps you need to assess proportions. You can even use hypothesis testing to determine whether the relationships between variables are statistically significant.

To choose the proper statistical procedure, you’ll need to assess your study objectives and collect the correct type of data. This background research is necessary before you begin a study.

Related post: Hypothesis Tests for Continuous, Binary, and Count Data

Statistical tests are crucial when you want to use sample data to make conclusions about a population because these tests account for sampling error. Using significance levels and p-values to determine when to reject the null hypothesis improves the probability that you will draw the correct conclusion.

To see an alternative approach to these traditional hypothesis testing methods, learn about bootstrapping in statistics!

If you want to see examples of hypothesis testing in action, I recommend the following posts that I have written:

- How Effective Are Flu Shots? This example shows how you can use statistics to test proportions.

- Fatality Rates in Star Trek. This example shows how to use hypothesis testing with categorical data.

- Busting Myths About the Battle of the Sexes. A fun example based on a Mythbusters episode that assesses continuous data using several different tests.

- Are Yawns Contagious? Another fun example inspired by a Mythbusters episode.

Reader Interactions

January 14, 2024 at 8:43 am

Hello professor Jim, how are you doing! Pls. What are the properties of a population and their examples? Thanks for your time and understanding.

January 14, 2024 at 12:57 pm

Please read my post about Populations vs. Samples for more information and examples.

Also, please note there is a search bar in the upper-right margin of my website. Use that to search for topics.

July 5, 2023 at 7:05 am

Hello, I have a question as I read your post. You say in p-values section

“P-values are the probability that you would obtain the effect observed in your sample, or larger, if the null hypothesis is correct. In simpler terms, p-values tell you how strongly your sample data contradict the null. Lower p-values represent stronger evidence against the null.”

But according to your definition of effect, the null states that an effect does not exist, correct? So what I assume you want to say is that “P-values are the probability that you would obtain the effect observed in your sample, or larger, if the null hypothesis is **incorrect**.”

July 6, 2023 at 5:18 am

Hi Shrinivas,

The correct definition of p-value is that it is a probability that exists in the context of a true null hypothesis. So, the quotation is correct in stating “if the null hypothesis is correct.”

Essentially, the p-value tells you the likelihood of your observed results (or more extreme) if the null hypothesis is true. It gives you an idea of whether your results are surprising or unusual if there is no effect.

Hence, with sufficiently low p-values, you reject the null hypothesis because it’s telling you that your sample results were unlikely to have occurred if there was no effect in the population.

I hope that helps make it more clear. If not, let me know I’ll attempt to clarify!

May 8, 2023 at 12:47 am

Thanks a lot Ny best regards

May 7, 2023 at 11:15 pm

Hi Jim Can you tell me something about size effect? Thanks

May 8, 2023 at 12:29 am

Here’s a post that I’ve written about Effect Sizes that will hopefully tell you what you need to know. Please read that. Then, if you have any more specific questions about effect sizes, please post them there. Thanks!

January 7, 2023 at 4:19 pm

Hi Jim, I have only read two pages so far but I am really amazed because in few paragraphs you made me clearly understand the concepts of months of courses I received in biostatistics! Thanks so much for this work you have done it helps a lot!

January 10, 2023 at 3:25 pm

Thanks so much!

June 17, 2021 at 1:45 pm

Can you help in the following question: Rocinante36 is priced at ₹7 lakh and has been designed to deliver a mileage of 22 km/litre and a top speed of 140 km/hr. Formulate the null and alternative hypotheses for mileage and top speed to check whether the new models are performing as per the desired design specifications.

April 19, 2021 at 1:51 pm

Its indeed great to read your work statistics.

I have a doubt regarding the one sample t-test. So as per your book on hypothesis testing with reference to page no 45, you have mentioned the difference between “the sample mean and the hypothesised mean is statistically significant”. So as per my understanding it should be quoted like “the difference between the population mean and the hypothesised mean is statistically significant”. The catch here is the hypothesised mean represents the sample mean.

Please help me understand this.

Regards Rajat

April 19, 2021 at 3:46 pm

Thanks for buying my book. I’m so glad it’s been helpful!

The test is performed on the sample but the results apply to the population. Hence, if the difference between the sample mean (observed in your study) and the hypothesized mean is statistically significant, that suggests that the population mean does not equal the hypothesized mean.

For one sample tests, the hypothesized mean is not the sample mean. It is a mean that you want to use for the test value. It usually represents a value that is important to your research. In other words, it’s a value that you pick for some theoretical/practical reasons. You pick it because you want to determine whether the population mean is different from that particular value.

I hope that helps!

November 5, 2020 at 6:24 am

Jim, you are such a magnificent statistician/economist/econometrician/data scientist etc whatever profession. Your work inspires and simplifies the lives of so many researchers around the world. I truly admire you and your work. I will buy a copy of each book you have on statistics or econometrics. Keep doing the good work. Remain ever blessed

November 6, 2020 at 9:47 pm

Hi Renatus,

Thanks so much for your very kind comments. You made my day!! I’m so glad that my website has been helpful. And, thanks so much for supporting my books! 🙂

November 2, 2020 at 9:32 pm

Hi Jim, I hope you are aware of 2019 American Statistical Association’s official statement on Statistical Significance: https://www.tandfonline.com/doi/full/10.1080/00031305.2019.1583913 In case you do not bother reading the full article, may I quote you the core message here: “We conclude, based on our review of the articles in this special issue and the broader literature, that it is time to stop using the term “statistically significant” entirely. Nor should variants such as “significantly different,” “p < 0.05,” and “nonsignificant” survive, whether expressed in words, by asterisks in a table, or in some other way."

With best wishes,

November 3, 2020 at 2:09 am

I’m definitely aware of the debate surrounding how to use p-values most effectively. However, I need to correct you on one point. The link you provide is NOT a statement by the American Statistical Association. It is an editorial by several authors.

There is considerable debate over this issue. There are problems with p-values. However, as the authors state themselves, much of the problem is over people’s mindsets about how to use p-values and their incorrect interpretations about what statistical significance does and does not mean.

If you were to read my website more thoroughly, you’d be aware that I share many of their concerns and I address them in multiple posts. One of the authors’ key points is the need to be thoughtful and conduct thoughtful research and analysis. I emphasize this aspect in multiple posts on this topic. I’ll ask you to read the following three because they all address some of the authors’ concerns and suggestions. But you might run across others to read as well.

- Five Tips for Using P-values to Avoid Being Misled

- How to Interpret P-values Correctly

- P-values and the Reproducibility of Experimental Results

September 24, 2020 at 11:52 pm

HI Jim, i just want you to know that you made explanation for Statistics so simple! I should say lesser and fewer words that reduce the complexity. All the best! 🙂

September 25, 2020 at 1:03 am

Thanks, Rene! Your kind words mean a lot to me! I’m so glad it has been helpful!

September 23, 2020 at 2:21 am

Honestly, I never understood stats during my entire M.Ed course and was another nightmare for me. But how easily you have explained each concept, I have understood stats way beyond my imagination. Thank you so much for helping ignorant research scholars like us. Looking forward to get hardcopy of your book. Kindly tell is it available through flipkart?

September 24, 2020 at 11:14 pm

I’m so happy to hear that my website has been helpful!

I checked on flipkart and it appears like my books are not available there. I’m never exactly sure where they’re available due to the vagaries of different distribution channels. They are available on Amazon in India.

- Introduction to Statistics: An Intuitive Guide (Amazon IN)

- Hypothesis Testing: An Intuitive Guide (Amazon IN)

July 26, 2020 at 11:57 am

Dear Jim I am a teacher from India . I don’t have any background in statistics, and still I should tell that in a single read I can follow your explanations . I take my entire biostatistics class for botany graduates with your explanations. Thanks a lot. May I know how I can avail your books in India

July 28, 2020 at 12:31 am

Right now my books are only available as ebooks from my website. However, soon I’ll have some exciting news about other ways to obtain it. Stay tuned! I’ll announce it on my email list. If you’re not already on it, you can sign up using the form that is in the right margin of my website.

June 22, 2020 at 2:02 pm

Also can you please let me if this book covers topics like EDA and principal component analysis?

June 22, 2020 at 2:07 pm

This book doesn’t cover principal components analysis. Although, I wouldn’t really classify that as a hypothesis test. In the future, I might write a multivariate analysis book that would cover this and others. But, that’s well down the road.

My Introduction to Statistics covers EDA. That’s the largely graphical look at your data that you often do prior to hypothesis testing. The Introduction book perfectly leads right into the Hypothesis Testing book.

June 22, 2020 at 1:45 pm

Thanks for the detailed explanation. It does clear my doubts. I saw that your book related to hypothesis testing has the topics that I am studying currently. I am looking forward to purchasing it.

Regards, Take Care

June 19, 2020 at 1:03 pm

For this particular article I did not understand a couple of statements and it would great if you could help: 1)”If sample error causes the observed difference, the next time someone performs the same experiment the results might be different.” 2)”If the difference does not exist at the population level, you won’t obtain the benefits that you expect based on the sample statistics.”

I discovered your articles by chance and now I keep coming back to read & understand statistical concepts. These articles are very informative & easy to digest. Thanks for the simplifying things.

June 20, 2020 at 9:53 pm

I’m so happy to hear that you’ve found my website to be helpful!

To answer your questions, keep in mind that a central tenet of inferential statistics is that the random sample that a study drew was only one of an infinite number of possible samples it could’ve drawn. Each random sample produces different results. Most results will cluster around the population value assuming they used good methodology. However, random sampling error always exists and makes it so that population estimates from a sample almost never exactly equal the correct population value.

So, imagine that we’re studying a medication and comparing the treatment and control groups. Suppose that the medicine is truly not effective and that the population difference between the treatment and control group is zero (i.e., no difference). Despite the true difference being zero, most sample estimates will show some degree of either a positive or negative effect thanks to random sampling error. So, just because a study has an observed difference does not mean that a difference exists at the population level. So, on to your questions:

1. If the observed difference is just random error, then it makes sense that if you collected another random sample, the difference could change. It could change from negative to positive, positive to negative, more extreme, less extreme, etc. However, if the difference exists at the population level, most random samples drawn from the population will reflect that difference. If the medicine has an effect, most random samples will reflect that fact and not bounce around on both sides of zero as much.

2. This is closely related to the previous answer. If there is no difference at the population level, but say you approve the medicine because of the observed effects in a sample. Even though your random sample showed an effect (which was really random error), that effect doesn’t exist. So, when you start using it on a larger scale, people won’t benefit from the medicine. That’s why it’s important to separate out what is easily explained by random error versus what is not easily explained by it.

I think reading my post about how hypothesis tests work will help clarify this process. Also, in about 24 hours (as I write this), I’ll be releasing my new ebook about Hypothesis Testing!

May 29, 2020 at 5:23 am

Hi Jim, I really enjoy your blog. Can you please link me on your blog where you discuss about Subgroup analysis and how it is done? I need to use non parametric and parametric statistical methods for my work and also do subgroup analysis in order to identify potential groups of patients that may benefit more from using a treatment than other groups.

May 29, 2020 at 2:12 pm

Hi, I don’t have a specific article about subgroup analysis. However, subgroup analysis is just the dividing up of a larger sample into subgroups and then analyzing those subgroups separately. You can use the various analyses I write about on the subgroups.

Alternatively, you can include the subgroups in regression analysis as an indicator variable and include that variable as a main effect and an interaction effect to see how the relationships vary by subgroup without needing to subdivide your data. I write about that approach in my article about comparing regression lines . This approach is my preferred approach when possible.

April 19, 2020 at 7:58 am

sir is confidence interval is a part of estimation?

April 17, 2020 at 3:36 pm

Sir can u plz briefly explain alternatives of hypothesis testing? I m unable to find the answer

April 18, 2020 at 1:22 am

Assuming you want to draw conclusions about populations by using samples (i.e., inferential statistics ), you can use confidence intervals and bootstrap methods as alternatives to the traditional hypothesis testing methods.

March 9, 2020 at 10:01 pm

Hi JIm, could you please help with activities that can best teach concepts of hypothesis testing through simulation, Also, do you have any question set that would enhance students intuition why learning hypothesis testing as a topic in introductory statistics. Thanks.

March 5, 2020 at 3:48 pm

Hi Jim, I’m studying multiple hypothesis testing & was wondering if you had any material that would be relevant. I’m more trying to understand how testing multiple samples simultaneously affects your results & more on the Bonferroni Correction

March 5, 2020 at 4:05 pm

I write about multiple comparisons (aka post hoc tests) in the ANOVA context . I don’t talk about Bonferroni Corrections specifically but I cover related types of corrections. I’m not sure if that exactly addresses what you want to know but is probably the closest I have already written. I hope it helps!

January 14, 2020 at 9:03 pm

Thank you! Have a great day/evening.

January 13, 2020 at 7:10 pm

Any help would be greatly appreciated. What is the difference between The Hypothesis Test and The Statistical Test of Hypothesis?

January 14, 2020 at 11:02 am

They sound like the same thing to me. Unless this is specialized terminology for a particular field or the author was intending something specific, I’d guess they’re one and the same.

April 1, 2019 at 10:00 am

so these are the only two forms of Hypothesis used in statistical testing?

April 1, 2019 at 10:02 am

Are you referring to the null and alternative hypothesis? If so, yes, those are the standard hypotheses in a statistical hypothesis test.

April 1, 2019 at 9:57 am

year very insightful post, thanks for the write up

October 27, 2018 at 11:09 pm

hi there, am upcoming statistician, out of all blogs that i have read, i have found this one more useful as long as my problem is concerned. thanks so much

October 27, 2018 at 11:14 pm

Hi Stano, you’re very welcome! Thanks for your kind words. They mean a lot! I’m happy to hear that my posts were able to help you. I’m sure you will be a fantastic statistician. Best of luck with your studies!

October 26, 2018 at 11:39 am

Dear Jim, thank you very much for your explanations! I have a question. Can I use t-test to compare two samples in case each of them have right bias?

October 26, 2018 at 12:00 pm

Hi Tetyana,

You’re very welcome!

The term “right bias” is not a standard term. Do you by chance mean right skewed distributions? In other words, if you plot the distribution for each group on a histogram they have longer right tails? These are not the symmetrical bell-shape curves of the normal distribution.

If that’s the case, yes you can as long as you exceed a specific sample size within each group. I include a table that contains these sample size requirements in my post about nonparametric vs parametric analyses .

Bias in statistics refers to cases where an estimate of a value is systematically higher or lower than the true value. If this is the case, you might be able to use t-tests, but you’d need to be sure to understand the nature of the bias so you would understand what the results are really indicating.

I hope this helps!

April 2, 2018 at 7:28 am

Simple and upto the point 👍 Thank you so much.

April 2, 2018 at 11:11 am

Hi Kalpana, thanks! And I’m glad it was helpful!

March 26, 2018 at 8:41 am

Am I correct if I say:

- Alpha – Probability of wrongly rejecting the null hypothesis

- P-value – Probability of wrongly accepting the null hypothesis

March 28, 2018 at 3:14 pm

You’re correct about alpha. Alpha is the probability of rejecting the null hypothesis when the null is true.

Unfortunately, your definition of the p-value is a bit off. The p-value has a fairly convoluted definition. It is the probability of obtaining the effect observed in a sample, or more extreme, if the null hypothesis is true. The p-value does NOT indicate the probability that either the null or alternative is true or false. Although, those are very common misinterpretations. To learn more, read my post about how to interpret p-values correctly .

March 2, 2018 at 6:10 pm

I recently started reading your blog and it is very helpful to understand each concept of statistical tests in easy way with some good examples. Also, I recommend to other people go through all these blogs which you posted. Specially for those people who have not statistical background and they are facing to many problems while studying statistical analysis.

Thank you for your such good blogs.

March 3, 2018 at 10:12 pm

Hi Amit, I’m so glad that my blog posts have been helpful for you! It means a lot to me that you took the time to write such a nice comment! Also, thanks for recommending my blog to others! I try really hard to write posts about statistics that are easy to understand.

January 17, 2018 at 7:03 am

I recently started reading your blog and I find it very interesting. I am learning statistics by my own, and I generally do many google search to understand the concepts. So this blog is quite helpful for me, as it have most of the content which I am looking for.

January 17, 2018 at 3:56 pm

Hi Shashank, thank you! And, I’m very glad to hear that my blog is helpful!

January 2, 2018 at 2:28 pm

thank u very much sir.

January 2, 2018 at 2:36 pm

You’re very welcome, Hiral!

November 21, 2017 at 12:43 pm

Thank u so much sir….your posts always helps me to be a #statistician

November 21, 2017 at 2:40 pm

Hi Sachin, you’re very welcome! I’m happy that you find my posts to be helpful!

November 19, 2017 at 8:22 pm

great post as usual, but it would be nice to see an example.

November 19, 2017 at 8:27 pm

Thank you! At the end of this post, I have links to four other posts that show examples of hypothesis tests in action. You’ll find what you’re looking for in those posts!

1.2 - The 7 Step Process of Statistical Hypothesis Testing

We will cover the seven steps one by one.

Step 1: State the Null Hypothesis

The null hypothesis can be thought of as the opposite of the "guess" the researchers made. In the example presented in the previous section, the biologist "guesses" plant height will be different for the various fertilizers. So the null hypothesis would be that there will be no difference among the groups of plants. Specifically, in more statistical language the null for an ANOVA is that the means are the same. We state the null hypothesis as:

\(H_0 \colon \mu_1 = \mu_2 = ⋯ = \mu_T\)

for T levels of an experimental treatment.

Step 2: State the Alternative Hypothesis

\(H_A \colon \text{ treatment level means not all equal}\)

The alternative hypothesis is stated in this way so that if the null is rejected, there are many alternative possibilities.

For example, \(\mu_1\ne \mu_2 = ⋯ = \mu_T\) is one possibility, as is \(\mu_1=\mu_2\ne\mu_3= ⋯ =\mu_T\). Many people make the mistake of stating the alternative hypothesis as \(\mu_1\ne\mu_2\ne⋯\ne\mu_T\) which says that every mean differs from every other mean. This is a possibility, but only one of many possibilities. A simple way of thinking about this is that at least one mean differs from at least one of the others. To cover all alternative outcomes, we resort to a verbal statement of "not all equal" and then follow up with mean comparisons to find out where differences among means exist. In our example, a possible outcome would be that fertilizer 1 results in plants that are exceptionally tall, but fertilizers 2, 3, and the control group may not differ from one another.

Step 3: Set \(\alpha\)

If we look at what can happen in a hypothesis test, we can construct the following contingency table:

| Decision | In reality: \(H_0\) is TRUE | In reality: \(H_0\) is FALSE |
|---|---|---|
| Accept \(H_0\) | correct | Type II Error; \(\beta\) = probability of Type II Error |
| Reject \(H_0\) | Type I Error | correct |

You should be familiar with Type I and Type II errors from your introductory courses. It is important to note that we want to set \(\alpha\) before the experiment (a priori) because the Type I error is the more grievous error to make. The typical value of \(\alpha\) is 0.05, establishing a 95% confidence level. For this course, we will assume \(\alpha = 0.05\), unless stated otherwise.

Step 4: Collect Data

Remember the importance of recognizing whether data is collected through an experimental design or observational study.

Step 5: Calculate a test statistic

For categorical treatment level means, we use an F-statistic, named after R.A. Fisher. We will explore the mechanics of computing the F-statistic beginning in Lesson 2. The F-value we get from the data is labeled \(F_{\text{calculated}}\).
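As a preview, here is a minimal sketch of how \(F_{\text{calculated}}\) can be computed using the standard one-way ANOVA formula (between-group mean square divided by within-group mean square). The plant heights and the function name are made up for illustration and are not the course data.

```python
from statistics import fmean

def one_way_f_statistic(groups):
    """Return (F_calculated, df_between, df_within) for a one-way ANOVA."""
    all_values = [x for group in groups for x in group]
    grand_mean = fmean(all_values)

    # Variation of the group means around the grand mean.
    ss_between = sum(len(g) * (fmean(g) - grand_mean) ** 2 for g in groups)
    # Variation of the observations around their own group mean.
    ss_within = sum((x - fmean(g)) ** 2 for g in groups for x in g)

    df_between = len(groups) - 1               # T - 1
    df_within = len(all_values) - len(groups)  # N - T

    ms_between = ss_between / df_between
    ms_within = ss_within / df_within
    return ms_between / ms_within, df_between, df_within

# Hypothetical plant heights for three treatment levels.
heights = [
    [21, 22, 23],  # control
    [22, 23, 24],  # fertilizer 1
    [25, 26, 27],  # fertilizer 2
]

f_calculated, df_between, df_within = one_way_f_statistic(heights)
print(f"F_calculated = {f_calculated:.2f} on {df_between} and {df_within} df")
```

For these made-up heights, the result is \(F_{\text{calculated}} = 13\) on 2 and 6 degrees of freedom. A large value means the group means vary much more than the within-group noise would suggest.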

Step 6: Construct Acceptance / Rejection regions

As with all other test statistics, a threshold (critical) value of F is established. This F-value can be obtained from statistical tables or software and is referred to as \(F_{\text{critical}}\) or \(F_\alpha\). As a reminder, this critical value is the minimum value of the test statistic (in this case \(F_{\text{calculated}}\)) for us to reject the null.

[Figure: the F-distribution, \(F_\alpha\), and the location of the acceptance/rejection regions.]

Step 7: Based on Steps 5 and 6, draw a conclusion about \(H_0\)

If \(F_{\text{calculated}}\) is larger than \(F_\alpha\), then you are in the rejection region and you can reject the null hypothesis with \(\left(1-\alpha \right)\) level of confidence.

Note that modern statistical software condenses Steps 6 and 7 by providing a p-value. The p-value here is the probability of getting an \(F_{\text{calculated}}\) at least as large as the one you observe, assuming the null hypothesis is true. If by chance \(F_{\text{calculated}} = F_\alpha\), then the p-value would be exactly equal to \(\alpha\). With larger \(F_{\text{calculated}}\) values, we move further into the rejection region and the p-value becomes less than \(\alpha\). So, the decision rule is as follows:

If the p-value obtained from the ANOVA is less than \(\alpha\), then reject \(H_0\) in favor of \(H_A\).
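The link between \(F_\alpha\), the p-value, and \(\alpha\) can be checked numerically in one special case. When there are exactly three treatment levels, the numerator has 2 degrees of freedom and the upper-tail probability of the F-distribution has the closed form \(\left(1 + 2F/df_{\text{error}}\right)^{-df_{\text{error}}/2}\). The sketch below relies on that shortcut, so it does not apply to other numbers of groups, where you would use statistical software instead. The function names, the error degrees of freedom, and the value of \(F_{\text{calculated}}\) are hypothetical.

```python
def f_p_value_three_groups(f_calculated, df_error):
    """P(F >= f_calculated) when the numerator has exactly 2 degrees of freedom."""
    return (1 + 2 * f_calculated / df_error) ** (-df_error / 2)

def f_critical_three_groups(alpha, df_error):
    """Critical value F_alpha, obtained by solving the formula above for F."""
    return (df_error / 2) * (alpha ** (-2 / df_error) - 1)

alpha = 0.05
df_error = 9        # hypothetical: 12 plants in 3 groups gives 12 - 3 = 9
f_calculated = 6.0  # hypothetical test statistic

f_critical = f_critical_three_groups(alpha, df_error)
p_value = f_p_value_three_groups(f_calculated, df_error)

print(f"F_critical = {f_critical:.2f}")
print(f"p-value at F_critical = {f_p_value_three_groups(f_critical, df_error):.4f}")
print(f"p-value at F_calculated = {p_value:.4f}, reject H0: {p_value < alpha}")
```

Here \(F_{\text{critical}}\) comes out at about 4.26, and plugging it back into the p-value function returns \(\alpha\), as described above. Because the hypothetical \(F_{\text{calculated}}\) of 6.0 lies beyond the critical value, its p-value is below \(\alpha\) and both versions of the decision rule agree on rejecting \(H_0\).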

Hypothesis Testing

Hypothesis testing is a tool for making statistical inferences about the population data. It is an analysis tool that tests assumptions and determines how likely something is within a given standard of accuracy. Hypothesis testing provides a way to verify whether the results of an experiment are valid.

A null hypothesis and an alternative hypothesis are set up before performing the hypothesis testing. This helps to arrive at a conclusion regarding the sample obtained from the population. In this article, we will learn more about hypothesis testing, its types, steps to perform the testing, and associated examples.

What is Hypothesis Testing in Statistics?

Hypothesis testing uses sample data from the population to draw useful conclusions regarding the population probability distribution. It tests an assumption made about the data using different types of hypothesis testing methodologies. The hypothesis testing results in either rejecting or not rejecting the null hypothesis.

Hypothesis Testing Definition

Hypothesis testing can be defined as a statistical tool that is used to identify if the results of an experiment are meaningful or not. It involves setting up a null hypothesis and an alternative hypothesis. These two hypotheses will always be mutually exclusive. This means that if the null hypothesis is true then the alternative hypothesis is false and vice versa. An example of hypothesis testing is setting up a test to check if a new medicine works on a disease in a more efficient manner.

Null Hypothesis

The null hypothesis is a concise mathematical statement that is used to indicate that there is no difference between two possibilities. In other words, there is no difference between certain characteristics of data. This hypothesis assumes that the outcomes of an experiment are based on chance alone. It is denoted as \(H_{0}\). Hypothesis testing is used to conclude if the null hypothesis can be rejected or not. Suppose an experiment is conducted to check if girls are shorter than boys at the age of 5. The null hypothesis will say that they are the same height.

Alternative Hypothesis

The alternative hypothesis is an alternative to the null hypothesis. It is used to show that the observations of an experiment are due to some real effect. It indicates that there is a statistically significant difference between two possible outcomes and can be denoted as \(H_{1}\) or \(H_{a}\). For the above-mentioned example, the alternative hypothesis would be that girls are shorter than boys at the age of 5.

Hypothesis Testing P Value

In hypothesis testing, the p value is used to indicate whether the results obtained after conducting a test are statistically significant or not. It is the probability of obtaining a result at least as extreme as the one observed, assuming the null hypothesis is true; it is not the probability that the decision to reject or not reject the null hypothesis is an error. This value is always a number between 0 and 1. The p value is compared to an alpha level, \(\alpha\), also called the significance level. The alpha level can be defined as the acceptable risk of incorrectly rejecting the null hypothesis. The alpha level is usually chosen between 1% and 5%.
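As a rough illustration of comparing a p value with \(\alpha\), the sketch below converts a z statistic into a p value using only the Python standard library. It assumes the test statistic follows a standard normal distribution under the null hypothesis; the function name `p_value_from_z` and the sample value z = 2.0 are made up for this example.

```python
from statistics import NormalDist


def p_value_from_z(z, tail="two"):
    """Return the p value of a z statistic, assuming a standard normal null."""
    std_normal = NormalDist()  # mean 0, standard deviation 1
    if tail == "right":
        # Probability of a value at least as large as z
        return 1.0 - std_normal.cdf(z)
    if tail == "left":
        # Probability of a value at least as small as z
        return std_normal.cdf(z)
    if tail == "two":
        # Probability of a value at least as far from 0 as z, in either direction
        return 2.0 * (1.0 - std_normal.cdf(abs(z)))
    raise ValueError("tail must be 'right', 'left', or 'two'")


alpha = 0.05  # significance level (illustrative)
p = p_value_from_z(2.0, tail="two")
print(f"p = {p:.4f}, reject H0: {p < alpha}")
```

A smaller p value means the observed statistic lies further into the tail, which is why the rule is to reject the null hypothesis when p is below \(\alpha\).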

Hypothesis Testing Critical region

All sets of values that lead to rejecting the null hypothesis lie in the critical region. Furthermore, the value that separates the critical region from the non-critical region is known as the critical value.

Hypothesis Testing Formula

Depending upon the type of data available and the size, different types of hypothesis testing are used to determine whether the null hypothesis can be rejected or not. The hypothesis testing formula for some important test statistics are given below:

- z = \(\frac{\overline{x}-\mu}{\frac{\sigma}{\sqrt{n}}}\). \(\overline{x}\) is the sample mean, \(\mu\) is the population mean, \(\sigma\) is the population standard deviation and n is the size of the sample.

- t = \(\frac{\overline{x}-\mu}{\frac{s}{\sqrt{n}}}\). s is the sample standard deviation.

- \(\chi ^{2} = \sum \frac{(O_{i}-E_{i})^{2}}{E_{i}}\). \(O_{i}\) is the observed value and \(E_{i}\) is the expected value.

We will learn more about these test statistics in the upcoming section.
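The three formulas above translate almost directly into code. The following is a minimal sketch using only the Python standard library; the function names and the sample numbers are illustrative and not part of the original text.

```python
import math


def z_statistic(sample_mean, pop_mean, pop_sd, n):
    """z = (x_bar - mu) / (sigma / sqrt(n)), with known population sd."""
    return (sample_mean - pop_mean) / (pop_sd / math.sqrt(n))


def t_statistic(sample_mean, pop_mean, sample_sd, n):
    """t = (x_bar - mu) / (s / sqrt(n)), with the sample sd in place of sigma."""
    return (sample_mean - pop_mean) / (sample_sd / math.sqrt(n))


def chi_square_statistic(observed, expected):
    """chi^2 = sum over categories of (O_i - E_i)^2 / E_i."""
    return sum((o - e) ** 2 / e for o, e in zip(observed, expected))


# Made-up inputs, chosen so the arithmetic is easy to check by hand
print(z_statistic(52, 50, 10, 100))
print(chi_square_statistic([18, 22, 20], [20, 20, 20]))
```

Note that the z and t statistics share the same form: both divide the difference in means by a standard error, and differ only in whether the standard deviation comes from the population or from the sample.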

Types of Hypothesis Testing

Selecting the correct test for performing hypothesis testing can be confusing. These tests are used to determine a test statistic on the basis of which the null hypothesis can either be rejected or not rejected. Some of the important tests used for hypothesis testing are given below.

Hypothesis Testing Z Test

A z test is a way of hypothesis testing that is used for a large sample size (n ≥ 30). It is used to determine whether there is a difference between the population mean and the sample mean when the population standard deviation is known. It can also be used to compare the mean of two samples. It is used to compute the z test statistic. The formulas are given as follows:

- One sample: z = \(\frac{\overline{x}-\mu}{\frac{\sigma}{\sqrt{n}}}\).

- Two samples: z = \(\frac{(\overline{x_{1}}-\overline{x_{2}})-(\mu_{1}-\mu_{2})}{\sqrt{\frac{\sigma_{1}^{2}}{n_{1}}+\frac{\sigma_{2}^{2}}{n_{2}}}}\).
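The two-sample formula can be sketched in the same way. The example below assumes both population standard deviations are known and that the null hypothesis states no difference between the population means (so \(\mu_{1}-\mu_{2}\) = 0); the function name and the input numbers are hypothetical.

```python
import math
from statistics import NormalDist


def two_sample_z(mean1, mean2, sd1, sd2, n1, n2, hypothesized_diff=0.0):
    """Two-sample z statistic with known population standard deviations."""
    # Standard error of the difference between the two sample means
    standard_error = math.sqrt(sd1 ** 2 / n1 + sd2 ** 2 / n2)
    return ((mean1 - mean2) - hypothesized_diff) / standard_error


# Made-up data: two samples of 50 observations each
z = two_sample_z(mean1=105.0, mean2=100.0, sd1=10.0, sd2=10.0, n1=50, n2=50)

# Two-tailed p value under a standard normal null distribution
p_two_tailed = 2.0 * (1.0 - NormalDist().cdf(abs(z)))
print(f"z = {z:.2f}, two-tailed p = {p_two_tailed:.4f}")
```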

Hypothesis Testing t Test

The t test is another method of hypothesis testing that is used for a small sample size (n < 30). It is also used to compare the sample mean and population mean. However, the population standard deviation is not known. Instead, the sample standard deviation is known. The mean of two samples can also be compared using the t test.

- One sample: t = \(\frac{\overline{x}-\mu}{\frac{s}{\sqrt{n}}}\).

- Two samples: t = \(\frac{(\overline{x_{1}}-\overline{x_{2}})-(\mu_{1}-\mu_{2})}{\sqrt{\frac{s_{1}^{2}}{n_{1}}+\frac{s_{2}^{2}}{n_{2}}}}\).

Hypothesis Testing Chi Square

The Chi square test is a hypothesis testing method that is used to check whether the variables in a population are independent or not. It is used when the test statistic is chi-squared distributed.
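A minimal sketch of a chi square test of independence is shown below. It assumes the data are counts arranged in a contingency table, takes the expected count of each cell as (row total × column total) / grand total, and uses (rows - 1) × (columns - 1) degrees of freedom. The table values are invented for illustration, and the critical value 3.841 is the standard table value for 1 degree of freedom at \(\alpha\) = 0.05.

```python
def chi_square_independence(table):
    """Return (chi-square statistic, degrees of freedom) for a table of counts."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    grand_total = sum(row_totals)

    statistic = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            # Expected count if the row and column variables were independent
            expected = row_totals[i] * col_totals[j] / grand_total
            statistic += (observed - expected) ** 2 / expected

    dof = (len(table) - 1) * (len(table[0]) - 1)
    return statistic, dof


# Hypothetical 2 x 2 table of counts
table = [[30, 20],
         [20, 30]]
stat, dof = chi_square_independence(table)

critical_value = 3.841  # chi-square table, dof = 1, alpha = 0.05
print(f"chi2 = {stat:.3f}, dof = {dof}, reject H0: {stat > critical_value}")
```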

One Tailed Hypothesis Testing

One tailed hypothesis testing is done when the rejection region is only in one direction. It can also be known as directional hypothesis testing because the effects can be tested in one direction only. This type of testing is further classified into the right tailed test and left tailed test.

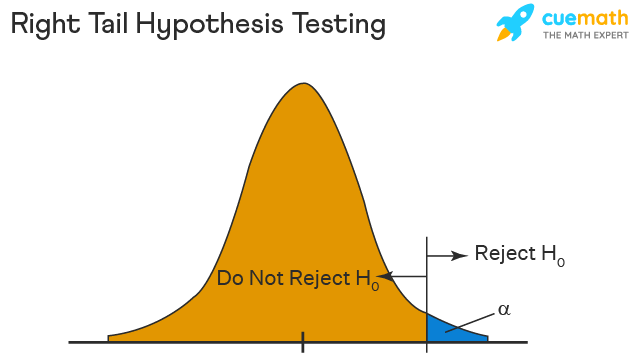

Right Tailed Hypothesis Testing

The right tail test is also known as the upper tail test. This test is used to check whether the population parameter is greater than some value. The null and alternative hypotheses for this test are given as follows:

\(H_{0}\): The population parameter is ≤ some value

\(H_{1}\): The population parameter is > some value.

If the test statistic has a greater value than the critical value, then the null hypothesis is rejected.

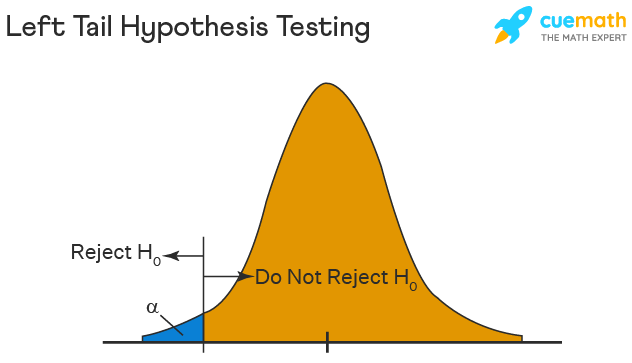

Left Tailed Hypothesis Testing

The left tail test is also known as the lower tail test. It is used to check whether the population parameter is less than some value. The hypotheses for this hypothesis testing can be written as follows:

\(H_{0}\): The population parameter is ≥ some value

\(H_{1}\): The population parameter is < some value.

The null hypothesis is rejected if the test statistic has a value less than the critical value.

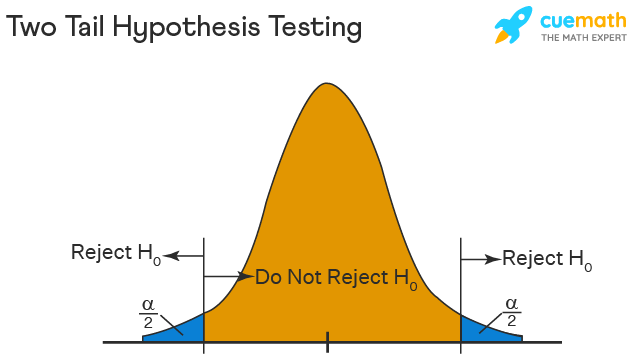

Two Tailed Hypothesis Testing

In this hypothesis testing method, the critical region lies on both sides of the sampling distribution. It is also known as a non-directional hypothesis testing method. The two-tailed test is used when it needs to be determined whether the population parameter is different from some value, in either direction. The hypotheses can be set up as follows:

\(H_{0}\): the population parameter = some value

\(H_{1}\): the population parameter ≠ some value

The null hypothesis is rejected if the absolute value of the test statistic is greater than the critical value, that is, if the test statistic falls in either tail of the critical region.
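The rejection rules for right-tailed, left-tailed, and two-tailed tests can be summarized in one small function. This sketch assumes a z statistic whose null distribution is standard normal, and obtains critical values from `statistics.NormalDist` instead of a printed table; the function name `reject_null` is made up for this example.

```python
from statistics import NormalDist


def reject_null(z, alpha=0.05, tail="two"):
    """Return True if the z statistic falls in the critical region."""
    std_normal = NormalDist()
    if tail == "right":
        # All of alpha lies in the upper tail
        return z > std_normal.inv_cdf(1 - alpha)
    if tail == "left":
        # All of alpha lies in the lower tail
        return z < std_normal.inv_cdf(alpha)
    if tail == "two":
        # alpha is split equally between the two tails
        return abs(z) > std_normal.inv_cdf(1 - alpha / 2)
    raise ValueError("tail must be 'right', 'left', or 'two'")


print(reject_null(1.8, tail="right"))  # compared against about 1.645
print(reject_null(1.8, tail="two"))    # compared against about 1.96
print(reject_null(-1.8, tail="left"))  # compared against about -1.645
```

The same statistic of 1.8 is significant in a right-tailed test but not in a two-tailed test at the same \(\alpha\), because the two-tailed critical value is further from zero.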

Hypothesis Testing Steps

Hypothesis testing can be easily performed in five simple steps. The most important step is to correctly set up the hypotheses and identify the right method for hypothesis testing. The basic steps to perform hypothesis testing are as follows:

- Step 1: Set up the null hypothesis by correctly identifying whether it is the left-tailed, right-tailed, or two-tailed hypothesis testing.

- Step 2: Set up the alternative hypothesis.

- Step 3: Choose the correct significance level, \(\alpha\), and find the critical value.

- Step 4: Calculate the correct test statistic (z, t or \(\chi^{2}\)) and p-value.

- Step 5: Compare the test statistic with the critical value or compare the p-value with \(\alpha\) to arrive at a conclusion. In other words, decide if the null hypothesis is to be rejected or not.

Hypothesis Testing Example

The best way to solve a problem on hypothesis testing is by applying the 5 steps mentioned in the previous section. Suppose a researcher claims that the mean weight of men is greater than 100 kg, with a standard deviation of 15 kg. 30 men are chosen, and their average weight is 112.5 kg. Using hypothesis testing, check if there is enough evidence to support the researcher's claim. The confidence level is given as 95%.

Step 1: This is an example of a right-tailed test. Set up the null hypothesis as \(H_{0}\): \(\mu\) = 100.

Step 2: The alternative hypothesis is given by \(H_{1}\): \(\mu\) > 100.

Step 3: The confidence level is 95%, so \(\alpha\) = 100% - 95% = 5%. As this is a one-tailed test, the entire 5% lies in the right tail. This can be used to determine the critical value.

1 - \(\alpha\) = 1 - 0.05 = 0.95

0.95 gives the required area under the curve. Now using a normal distribution table, the area 0.95 is at z = 1.645. A similar process can be followed for a t-test. The only additional requirement is to calculate the degrees of freedom given by n - 1.

Step 4: Calculate the z test statistic. The z test is used because the sample size is 30 and the population standard deviation is known, along with the sample and population means.

z = \(\frac{\overline{x}-\mu}{\frac{\sigma}{\sqrt{n}}}\).

\(\mu\) = 100, \(\overline{x}\) = 112.5, n = 30, \(\sigma\) = 15

z = \(\frac{112.5-100}{\frac{15}{\sqrt{30}}}\) = 4.56

Step 5: Conclusion. As 4.56 > 1.645, the null hypothesis can be rejected.
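The five steps of this example can be reproduced in a few lines of Python. This is a sketch using the numbers given above; it takes the critical value from `statistics.NormalDist` in place of the normal distribution table, and the variable names are chosen here for illustration.

```python
import math
from statistics import NormalDist

# Steps 1 and 2: H0: mu = 100, H1: mu > 100 (right-tailed test)
mu_0 = 100.0

# Step 3: significance level and critical value
alpha = 0.05
z_critical = NormalDist().inv_cdf(1 - alpha)

# Step 4: z test statistic from the sample
x_bar, sigma, n = 112.5, 15.0, 30
z = (x_bar - mu_0) / (sigma / math.sqrt(n))

# Step 5: compare the statistic with the critical value
reject = z > z_critical
print(f"z = {z:.2f}, critical value = {z_critical:.3f}, reject H0: {reject}")
# prints: z = 4.56, critical value = 1.645, reject H0: True
```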

Hypothesis Testing and Confidence Intervals

Confidence intervals form an important part of hypothesis testing. This is because the alpha level can be determined from a given confidence level. Suppose the confidence level is given as 95%. Subtract the confidence level from 100%. This gives 100% - 95% = 5% or 0.05. This is the alpha value, and in a one-tailed test all of it lies in a single tail. In a two-tailed test the significance level is still 0.05, but it is split equally between the two tails, so the area in each tail is 0.05 / 2 = 0.025.


Important Notes on Hypothesis Testing

- Hypothesis testing is a technique that is used to verify whether the results of an experiment are statistically significant.

- It involves the setting up of a null hypothesis and an alternate hypothesis.

- Three commonly used tests in hypothesis testing are the z test, t test, and chi square test.

- Hypothesis testing can be classified as right tail, left tail, and two tail tests.

Examples on Hypothesis Testing

- Example 1: The average weight of a dumbbell in a gym is 90 lbs. However, a physical trainer believes that the average weight might be higher. A random sample of 5 dumbbells has an average weight of 110 lbs and a standard deviation of 18 lbs. Using hypothesis testing, check if the physical trainer's claim can be supported at a 95% confidence level. Solution: As the sample size is less than 30 and only the sample standard deviation is known, the t test is used. \(H_{0}\): \(\mu\) = 90, \(H_{1}\): \(\mu\) > 90. \(\overline{x}\) = 110, \(\mu\) = 90, n = 5, s = 18, \(\alpha\) = 0.05. Using the t-distribution table with n - 1 = 4 degrees of freedom, the critical value is 2.132. t = \(\frac{\overline{x}-\mu}{\frac{s}{\sqrt{n}}}\) = \(\frac{110-90}{\frac{18}{\sqrt{5}}}\) = 2.484. As 2.484 > 2.132, the null hypothesis is rejected. Answer: There is enough evidence to support the claim that the average weight of the dumbbells is greater than 90 lbs.

- Example 2: The average score on a test is 80 with a standard deviation of 10. With a new teaching curriculum introduced, it is believed that this score will change. On randomly testing the scores of 36 students, the mean was found to be 88. With a 0.05 significance level, is there any evidence to support this claim? Solution: This is an example of two-tail hypothesis testing. The z test will be used. \(H_{0}\): \(\mu\) = 80, \(H_{1}\): \(\mu\) ≠ 80. \(\overline{x}\) = 88, \(\mu\) = 80, n = 36, \(\sigma\) = 10. \(\alpha\) = 0.05, so the area in each tail is 0.05 / 2 = 0.025. The critical value using the normal distribution table is 1.96. z = \(\frac{\overline{x}-\mu}{\frac{\sigma}{\sqrt{n}}}\) = \(\frac{88-80}{\frac{10}{\sqrt{36}}}\) = 4.8. As 4.8 > 1.96, the null hypothesis is rejected. Answer: There is a difference in the scores after the new curriculum was introduced.

- Example 3: The average score of a class is 90. However, a teacher believes that the average score might be lower. The scores of 6 students were randomly measured. The mean was 82 with a standard deviation of 18. With a 0.05 significance level, use hypothesis testing to check if this claim is true. Solution: The t test will be used. \(H_{0}\): \(\mu\) = 90, \(H_{1}\): \(\mu\) < 90. \(\overline{x}\) = 82, \(\mu\) = 90, n = 6, s = 18. The critical value from the t table with n - 1 = 5 degrees of freedom is -2.015. t = \(\frac{\overline{x}-\mu}{\frac{s}{\sqrt{n}}}\) = \(\frac{82-90}{\frac{18}{\sqrt{6}}}\) = -1.088. As -1.088 > -2.015, we fail to reject the null hypothesis. Answer: There is not enough evidence to support the claim.
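The arithmetic in the three examples can be checked with a short script. This is a sketch under a few assumptions: the critical values are copied from the examples rather than computed (the Python standard library has no t-distribution), Example 2 uses n = 36 as in its calculation, Example 3 uses the sample mean of 82 stated in the problem, and the helper name `one_sample_statistic` is made up.

```python
import math


def one_sample_statistic(sample_mean, hypothesized_mean, sd, n):
    """(x_bar - mu) / (sd / sqrt(n)); the same form serves the z and t tests."""
    return (sample_mean - hypothesized_mean) / (sd / math.sqrt(n))


# Example 1: right-tailed t test, critical value 2.132 (4 degrees of freedom)
t1 = one_sample_statistic(110, 90, 18, 5)
reject1 = t1 > 2.132

# Example 2: two-tailed z test, critical value 1.96
z2 = one_sample_statistic(88, 80, 10, 36)
reject2 = abs(z2) > 1.96

# Example 3: left-tailed t test, critical value -2.015 (5 degrees of freedom)
t3 = one_sample_statistic(82, 90, 18, 6)
reject3 = t3 < -2.015

print(reject1, reject2, reject3)  # prints: True True False
```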


FAQs on Hypothesis Testing

What is Hypothesis Testing?

Hypothesis testing in statistics is a tool that is used to make inferences about the population data. It is also used to check if the results of an experiment are valid.

What is the z Test in Hypothesis Testing?

The z test in hypothesis testing is used to find the z test statistic for normally distributed data. The z test is used when the standard deviation of the population is known and the sample size is greater than or equal to 30.

What is the t Test in Hypothesis Testing?

The t test in hypothesis testing is used when the test statistic follows a Student's t distribution. It is used when the sample size is less than 30 and the standard deviation of the population is not known.

What is the formula for z test in Hypothesis Testing?